Hello, and welcome back to another Portworx Lightboard Session. My name is Ryan Wallner, and today we’re going to go over how to configure your Portworx storage cluster. We’ll take a higher-level view of a Kubernetes and Portworx system: what you should think about when configuring your Portworx cluster on Kubernetes, and which commands and flags you actually need to give Portworx to install it correctly. Here we have a group of Kubernetes masters, which we recommend running in a highly available configuration, and a set of worker nodes, on which we’ll visualize installing Portworx.

Portworx itself needs a few different resources. First, CPU: whether you’re in the cloud or on-prem on bare metal, each worker node needs at least four cores. We recommend more than that, depending on the workloads you run on each worker; if you’re running a lot of databases, for example, four cores is probably not enough. But the minimum Portworx needs, with some applications running alongside it, is four cores. Second, we recommend at least four gigabytes of memory.

As with cores, more memory generally means more headroom. How much you provision depends on your cost-to-workload ratio and how memory-intensive your workloads are, and you can set resource requests and limits in Kubernetes to keep utilization balanced across the system, which is exactly what Kubernetes enables. But for Portworx to run, meet at least these two minimums. The other requirement follows from what Portworx is: a persistent storage and data management system, so it needs some kind of backing disk or drive.
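As a rough preflight check, the CPU and memory minimums above can be encoded in a few lines of Python. This is a minimal sketch: `NodeResources` and `resource_problems` are hypothetical names, and gathering each node’s real core count and memory (for example from the capacity shown by `kubectl describe node`) is left to you.

```python
from dataclasses import dataclass
from typing import List

# Minimums quoted in this session; real sizing depends on your workloads.
MIN_CPU_CORES = 4
MIN_MEMORY_GB = 4


@dataclass
class NodeResources:
    """Hypothetical description of one worker node's capacity."""
    name: str
    cpu_cores: int
    memory_gb: float


def resource_problems(node: NodeResources) -> List[str]:
    """Return a list of unmet minimums; an empty list means the node passes."""
    problems = []
    if node.cpu_cores < MIN_CPU_CORES:
        problems.append(f"{node.name}: {node.cpu_cores} cores < {MIN_CPU_CORES}")
    if node.memory_gb < MIN_MEMORY_GB:
        problems.append(f"{node.name}: {node.memory_gb} GB memory < {MIN_MEMORY_GB} GB")
    return problems


print(resource_problems(NodeResources("worker-1", cpu_cores=2, memory_gb=8)))
# ['worker-1: 2 cores < 4']
```

Passing this check only means Portworx can run; a node that hosts several databases will likely need more than the minimums.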

These drives should be at least 8 GB, but we recommend 128 GB. 8 GB is pretty small: with four nodes, that’s only 32 GB of storage in total, which doesn’t get you very far. With 128 GB on each node, you have a significant storage pool that can support many workloads. It’s also not uncommon to see each storage node with upwards of a terabyte, so size the drives based on your workload and what you’re going to run on it. For the network, we recommend a 10 Gb link between the nodes.
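The capacity arithmetic above can be sketched as follows, assuming one backing drive per node. `raw_pool_gb` and `below_recommended` are hypothetical helpers; the total is raw capacity before replication, so the space actually available to volumes will typically be smaller.

```python
from typing import List, Sequence

MIN_DRIVE_GB = 8            # minimum backing drive size quoted in this session
RECOMMENDED_DRIVE_GB = 128  # recommended size quoted in this session


def raw_pool_gb(drive_gb_per_node: Sequence[float]) -> float:
    """Sum raw capacity, one drive per node, rejecting drives below the minimum."""
    for size in drive_gb_per_node:
        if size < MIN_DRIVE_GB:
            raise ValueError(f"{size} GB drive is below the {MIN_DRIVE_GB} GB minimum")
    return sum(drive_gb_per_node)


def below_recommended(drive_gb_per_node: Sequence[float]) -> List[int]:
    """Indexes of nodes whose drive is smaller than the recommended size."""
    return [i for i, size in enumerate(drive_gb_per_node) if size < RECOMMENDED_DRIVE_GB]


print(raw_pool_gb([8] * 4))    # 32
print(raw_pool_gb([128] * 4))  # 512
print(below_recommended([128, 64, 1024]))  # [1]
```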

Portworx also distinguishes between a management network and a data network, so you can put control plane traffic on one network and data traffic, meaning replication and storage I/O from applications, on another. On the diagram, I’m labeling the data network D and the management network M. Each one can be attached through its own network interface, which lets you separate I/O data traffic from management and control plane traffic. We’ll talk about how to configure that in a moment, but make sure at least the data network uses that 10 Gb link; 1 Gb is probably fine for management.

For ports, Portworx needs at least 9001 through 9021. That’s the default range, and it’s configurable, but unless you have overlapping port conflicts there’s really no need to change it, since it’s the standard range Portworx uses to communicate.
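Before installing, you can check a node for the overlapping port conflicts mentioned above. A minimal sketch assuming the default 9001 through 9021 range; `port_conflicts` is a hypothetical helper, and `ports_in_use` is assumed to come from something like the output of `ss -ltn`, which you would parse yourself.

```python
from typing import Iterable, List

# Default Portworx port range quoted in this session; it is configurable.
PX_PORT_FIRST = 9001
PX_PORT_LAST = 9021
PX_PORTS = range(PX_PORT_FIRST, PX_PORT_LAST + 1)  # range() excludes the end, so +1


def port_conflicts(ports_in_use: Iterable[int]) -> List[int]:
    """Return ports already in use on a node that overlap the Portworx range."""
    return sorted({p for p in ports_in_use if p in PX_PORTS})


print(len(PX_PORTS))                                 # 21
print(port_conflicts([22, 2379, 9001, 9021, 9022]))  # [9001, 9021]
```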

For the kernel, you want at least version 3.10 on your Linux distribution, because Portworx relies heavily on modern Linux kernel integration for its workflows and data paths. Next is the key-value store database (KVDB). etcd is by far the most common choice, and Consul from HashiCorp is also an option. And yes, your Kubernetes cluster probably runs etcd on the masters, but keep in mind that this key-value database is completely separate.
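A check for the 3.10 kernel minimum should compare version numbers numerically, since as strings "3.9" sorts after "3.10". This sketch (`kernel_ok` is a hypothetical name) parses the major.minor prefix of a release string such as the output of `uname -r` or Python’s `platform.release()` on the node.

```python
import re
from typing import Tuple

MIN_KERNEL: Tuple[int, int] = (3, 10)  # minimum quoted in this session


def kernel_ok(release: str, minimum: Tuple[int, int] = MIN_KERNEL) -> bool:
    """Compare the major.minor prefix of a kernel release string numerically."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    # Tuple comparison orders (3, 9) before (3, 10), unlike string comparison.
    return (int(match.group(1)), int(match.group(2))) >= minimum


print(kernel_ok("3.10.0-1160.el7.x86_64"))  # True
print(kernel_ok("3.9.11"))                  # False
```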

I’ll label it etcd on the diagram. It’s attached to Portworx over the network and is completely separate from the Kubernetes etcd; Portworx keeps cluster information, metadata, and similar state in it. For larger clusters, around 20 to 25 Portworx nodes and up, we recommend an external key-value store, because it moves that I/O and CPU load onto an external system and keeps it in a separate failure domain.
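The sizing guidance above boils down to a simple decision. This sketch assumes a cutoff of 20 nodes, the low end of the 20 to 25 range given here; `recommended_kvdb` is a hypothetical helper, and a different threshold within that range is just as reasonable.

```python
# Rule of thumb from this session: the built-in KVDB suits clusters under
# roughly 20 to 25 Portworx nodes; larger clusters should use an external
# etcd (or Consul) in a separate failure domain. The cutoff is a judgment call.
DEFAULT_EXTERNAL_THRESHOLD = 20


def recommended_kvdb(portworx_nodes: int,
                     threshold: int = DEFAULT_EXTERNAL_THRESHOLD) -> str:
    """Return "internal" or "external" for a planned cluster size."""
    if portworx_nodes < 1:
        raise ValueError("a Portworx cluster needs at least one node")
    return "external" if portworx_nodes >= threshold else "internal"


print(recommended_kvdb(4))   # internal
print(recommended_kvdb(40))  # external
```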

Keeping it in a separate failure domain is a good idea, and you should also configure compaction, backups, and snapshots so that you can recover that etcd database, the same things you do for your Kubernetes etcd database. The other option is a built-in key-value store, which means Portworx spins it up and maintains it for you, so you never have to think about it. It even takes snapshots, and there are operations to back up and restore that internal store. Again, that option is for clusters under 20 to 25 Portworx nodes.

Now that we have a good picture of the resources Portworx needs, let’s talk about how to actually configure it, starting with the -c option. After I go through these flags, I’ll show an example, or link to one, of what this looks like in the Kubernetes DaemonSet that Portworx runs as, so you can see it from the command line or YAML view.

-c sets the cluster name. You can name it whatever you like, but it should be unique: if you spin up another cluster that registers itself in the same etcd under the same name, you may get a conflict. So make sure the cluster name is unique. This is an important one: it’s required.

Next are the data storage options. You’ll want to configure at least one of these to identify your storage disks, or backing store: those 8 GB or 128 GB drives, which either already exist on the infrastructure or are provisioned as cloud drives or SAN-attached storage. In either case, -s points Portworx at a specific drive, such as /dev/sdb. You can list several drives, or you can use -s with a volume template instead. I’ll link to the volume template documentation, but in the cloud a template is how you say, for example, “I want gp2 volumes of 200 GB.” Portworx then talks to the cloud API, provisions those volumes, and attaches them to the Portworx workers.
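A sketch of how the -c and -s values might be assembled into the argument list for the Portworx container. The helper names are hypothetical; passing -s once per device is the pattern assumed here, and the `type=...,size=...` string in `cloud_template` is an assumption about the cloud drive template syntax, so confirm the exact form in the volume template documentation or in a generated spec.

```python
import uuid
from typing import List


def unique_cluster_name(prefix: str = "px-cluster") -> str:
    """Append a random 8-hex-digit suffix so the name is unlikely to collide."""
    return f"{prefix}-{uuid.uuid4().hex[:8]}"


def cluster_and_device_args(cluster_name: str, devices: List[str]) -> List[str]:
    """Build '-c <name>' plus one '-s <device>' pair per backing drive."""
    if not cluster_name:
        raise ValueError("-c (cluster name) is required")
    args = ["-c", cluster_name]
    for dev in devices:
        args += ["-s", dev]
    return args


def cloud_template(volume_type: str, size_gb: int) -> str:
    """Hypothetical: format a cloud drive template string to pass to -s."""
    return f"type={volume_type},size={size_gb}"


print(cluster_and_device_args("px-demo", ["/dev/sdb", "/dev/sdc"]))
# ['-c', 'px-demo', '-s', '/dev/sdb', '-s', '/dev/sdc']
print(cloud_template("gp2", 200))  # type=gp2,size=200
```

The random suffix makes accidental reuse of a name in a shared etcd unlikely, but for a long-lived cluster you may prefer a readable name that you track yourself.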

-z makes a node a zero-storage node. Use it when you want a node to participate in the Portworx cluster, meaning it can attach and mount volumes and let applications do I/O to and from them, without contributing any storage to the pool, so it doesn’t need a disk. -a basically means “use everything you find”: Portworx inspects the system for free, unmounted drives and uses them. You can also add -f to force Portworx to use drives that already have a file system on them.
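The difference between the zero-storage and use-everything modes can be captured as a small mapping. A minimal sketch; `storage_mode_args` and its mode names are hypothetical, and in this sketch -f is only ever paired with -a.

```python
from typing import List


def storage_mode_args(mode: str, force_fs: bool = False) -> List[str]:
    """Translate a storage mode into flags.

    "zero": -z, the node mounts volumes and serves I/O but adds no storage.
    "all":  -a, Portworx uses any free, unmounted drives it discovers;
            force_fs=True adds -f so drives with an existing file system count too.
    Explicit devices (-s) are not covered here.
    """
    if mode == "zero":
        if force_fs:
            raise ValueError("-f has no effect on a zero-storage node")
        return ["-z"]
    if mode == "all":
        return ["-a", "-f"] if force_fs else ["-a"]
    raise ValueError(f"unknown storage mode: {mode!r}")


print(storage_mode_args("all", force_fs=True))  # ['-a', '-f']
```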

These are the storage parameters used most often. The data and management networks we discussed earlier are configured with -d for the data network and -m for the management network, each taking an interface name such as eth0 or eth1. Another flag that matters for this example is -x, which specifies your scheduler. Here you tell Portworx that your scheduler is Kubernetes, which effectively says, “Please talk to my Kubernetes scheduler to do a little bit extra.”
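The network and scheduler flags are simple to assemble, but a typo in an interface name is an easy mistake, so this sketch checks the names first. `network_scheduler_args` is a hypothetical helper; it uses Python’s `socket.if_nameindex()` when run on the node itself, or you can pass `known_ifaces` explicitly. Because a DaemonSet passes the same arguments to every node, the interface names generally need to exist on all of them.

```python
import socket
from typing import Iterable, List, Optional


def network_scheduler_args(data_iface: str, mgmt_iface: str,
                           scheduler: str = "kubernetes",
                           known_ifaces: Optional[Iterable[str]] = None) -> List[str]:
    """Build -d/-m/-x flags after checking that both interfaces exist."""
    if known_ifaces is None:
        # socket.if_nameindex() returns (index, name) pairs for local interfaces.
        known_ifaces = [name for _, name in socket.if_nameindex()]
    known = set(known_ifaces)
    for iface in (data_iface, mgmt_iface):
        if iface not in known:
            raise ValueError(f"interface {iface!r} not found; known: {sorted(known)}")
    return ["-d", data_iface, "-m", mgmt_iface, "-x", scheduler]


print(network_scheduler_args("eth1", "eth0", known_ifaces=["lo", "eth0", "eth1"]))
# ['-d', 'eth1', '-m', 'eth0', '-x', 'kubernetes']
```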

We’ve talked about STORK, the storage orchestrator for Kubernetes, in a separate video. STORK integrates very tightly with the Kubernetes scheduler, and setting the scheduler this way is what enables that integration. The key-value store flag, -k, is another very important one; it takes an IP address and port. etcd’s default client port is 2379, and the idea is that the IP address is that of the load balancer or virtual IP in front of your etcd cluster, which Portworx can reach. If you don’t want to use an external database, you can use -b instead. -b says, “Use my internal key-value store database,” and Portworx automatically provisions a highly available key-value store on at least three of the nodes and configures things like compaction and backups. Again, for clusters under 20 to 25 nodes you’ll probably want to take advantage of this: rather than maintaining an etcd cluster in addition to the Portworx cluster itself, you just leave it to Portworx.
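Choosing between an external and the internal key-value store can be sketched as a function that emits either -k or -b, never both. `kvdb_args` is a hypothetical helper; the `etcd:http://host:port` endpoint format and joining several endpoints with commas are assumptions, so check them against a generated spec. With a load balancer or virtual IP in front of etcd, as described above, you would pass a single endpoint.

```python
from typing import List, Optional, Sequence

ETCD_DEFAULT_PORT = 2379  # etcd's default client port


def kvdb_args(endpoints: Optional[Sequence[str]] = None,
              internal: bool = False) -> List[str]:
    """Return ['-b'] for the built-in KVDB or ['-k', '<endpoints>'] for external etcd.

    Endpoints are "host" or "host:port" strings (IPv6 literals are not handled);
    a missing port defaults to 2379. The 'etcd:http://' prefix and the comma
    separator are assumptions about the expected format.
    """
    if internal == bool(endpoints):
        raise ValueError("choose exactly one: external endpoints (-k) or internal KVDB (-b)")
    if internal:
        return ["-b"]
    urls = []
    for ep in endpoints:
        host_port = ep if ":" in ep else f"{ep}:{ETCD_DEFAULT_PORT}"
        urls.append(f"etcd:http://{host_port}")
    return ["-k", ",".join(urls)]


print(kvdb_args(["10.0.0.5"]))  # ['-k', 'etcd:http://10.0.0.5:2379']
print(kvdb_args(internal=True))  # ['-b']
```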

There are a few other pieces to this puzzle. If you’re using the internal key-value store, you’ll want to configure a metadata flag that designates specific drives for the key-value store’s metadata I/O, so it lands on separate disks and doesn’t get in the way of data I/O. Another useful option is a cache device, which lets you choose a fast drive on each node. Say each Portworx node has a gp2 volume, and the server also has an NVMe or io1 disk; that disk is definitely going to be faster than the general-purpose drives.

You can designate that faster disk as a cache device, and Portworx uses it as a cache, as the name suggests. So those are some of the more important flags. To recap: -c for the cluster name; -a to grab every available disk; -z for zero-storage nodes; -s for specific storage, meaning a storage device or a cloud drive template; -d for the data network; -m for the management network; -x for the scheduler, such as Swarm or Kubernetes; -k for an external key-value store; -b for the internal key-value store; the cache device option for configuring a cache device; and the metadata option for keeping the internal key-value store’s metadata on its own drive.
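As a final sanity check, the rules described in this session can be applied to a finished argument list before it goes into the DaemonSet spec. A minimal sketch: `validate_px_args` is a hypothetical helper that checks only the rules stated here (treating -z versus -s/-a and -k versus -b as alternatives) and ignores the cache and metadata options.

```python
from typing import List


def validate_px_args(args: List[str]) -> List[str]:
    """Return problems with a Portworx argument list; empty means it passes.

    Rules checked (from this session only):
      * -c (cluster name) is required and needs a value
      * exactly one of -k (external KVDB) or -b (internal KVDB)
      * at least one storage choice: -s, -a, or -z
      * -z (zero storage) should not be combined with -s or -a
    """
    problems = []
    flags = set(args)
    if "-c" not in flags:
        problems.append("missing -c <cluster name>")
    else:
        i = args.index("-c")
        if i + 1 >= len(args) or args[i + 1].startswith("-"):
            problems.append("-c needs a value")
    if ("-k" in flags) == ("-b" in flags):
        problems.append("use exactly one of -k or -b")
    if not {"-s", "-a", "-z"} & flags:
        problems.append("no storage option (-s, -a, or -z)")
    if "-z" in flags and {"-s", "-a"} & flags:
        problems.append("-z conflicts with -s/-a")
    return problems


good = ["-c", "px-demo", "-s", "/dev/sdb", "-d", "eth1", "-m", "eth0",
        "-x", "kubernetes", "-b"]
print(validate_px_args(good))          # []
print(validate_px_args(["-z", "-a"]))
# ['missing -c <cluster name>', 'use exactly one of -k or -b', '-z conflicts with -s/-a']
```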

There are a few more, though not many, and I’ll link to what they look like. Don’t worry about how you’re going to keep track of all these options: we have a spec generator, which you can access at central.portworx.com, and I’ll show on screen what it looks like. The spec generator lets you select these options graphically and configures the spec for you. So definitely take a look. This should give you an idea of what to think about when configuring your Portworx storage cluster. Until next time, I hope you enjoyed this. Take care.

Ryan Wallner

Portworx | Technical Marketing Manager

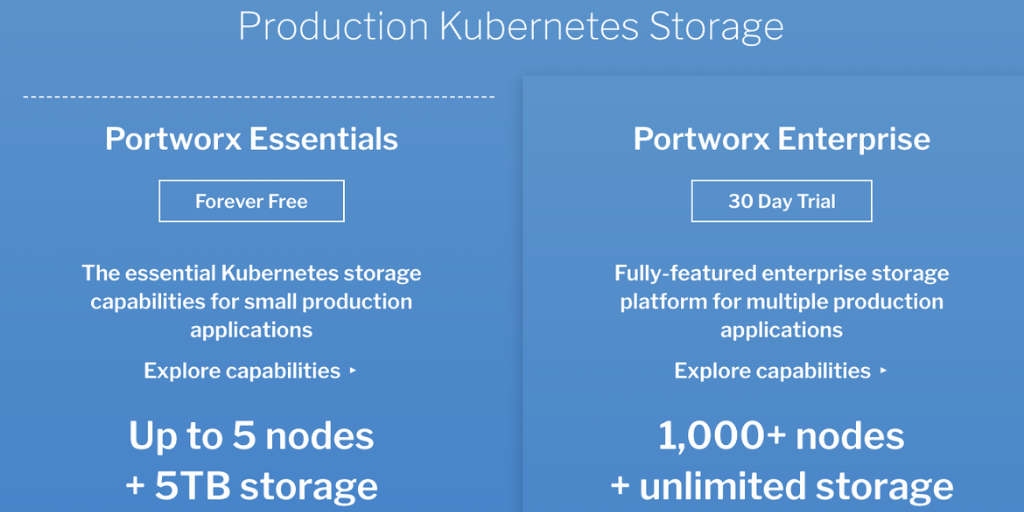

Announcing Portworx Essentials: The #1 Kubernetes storage platform for any app