This post is a follow-up to our recent article on running Jenkins on Docker in a highly available (HA) configuration using Docker Swarm. Given the popularity of that post, we wanted to dive into Jenkins a little more and share some tips on speeding up Jenkins builds. Why?

CI/CD (Continuous Integration / Continuous Delivery) is one of the pillars of the modern DevOps toolchain. CI/CD, as exemplified by Jenkins, automates the back end of software development (building, tooling, and testing) before a release is promoted. Jenkins is an open-source automation server created by Kohsuke Kawaguchi and written in Java. A Jenkins build can be triggered in various ways, most commonly by a commit to a version control system such as Git. Once a build is triggered, a test suite runs, so developers can automatically test their software for bugs before releasing it to production. By some estimates, Jenkins holds up to 70% of the market share for CI/CD tools, which makes it all but ubiquitous today. But as with all software, developers are always looking for ways to speed it up. If you use Jenkins and want to accelerate your pipelines, this post answers the question: “How do I speed up Jenkins builds?”

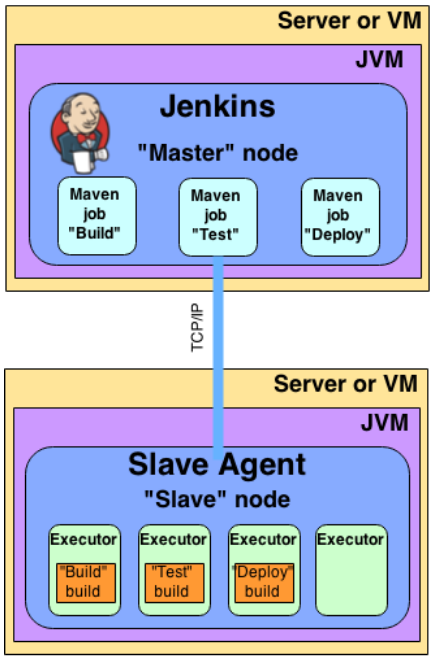

Background on Jenkins architecture

Jenkins architecture revolves around master/slave roles. Standard Jenkins deployment models were initially based on bare-metal servers and VMs and, like many aspects of today’s DevOps environments, are now moving to containers.

The conventional use of storage in Jenkins has both pros and cons:

Pros:

- Jenkins has a simple and stateless model.

- Jenkins makes no assumptions regarding initial state.

- Jenkins initiates every build from scratch.

- Jenkins can create n slaves when you need to scale.

Cons:

- Jenkins has relatively poor utilization in scale-out models involving lots of slaves.

- Each slave instance must build its pipeline from scratch, which typically involves a complete repository clone, followed by a complete compilation/build.

- If you don’t build your pipeline from scratch, incremental builds can fail due to subtle changes in starting state.

Where Portworx fits and why

Portworx provides remarkable benefits for containerized Jenkins models. Following are Portworx-specific benefits for common use cases:

- Shared volumes: In this model, there is one master and one slave. The advantage of using a Portworx shared volume is similar to the advantage of NFS: easy transfer of state between master and slave. Furthermore, this shared volume model works equally well regardless of whether Jenkins runs in containers, in a VM, or on bare metal.

- Faster incremental builds: Typically, both slaves and their data are ephemeral: data and state are discarded when the slave exits. However, if the slave uses two different volumes (“build” and “artifact”), it can exit while preserving its artifact repository, which helps accelerate subsequent (incremental) builds.

- Monolithic master: slaves are optional. Smaller Jenkins environments with fewer resources and requirements may opt to run only a single monolithic master. In the conventional model, all data and state on the master are lost when the master exits. With Portworx, data persists in this model, so the master can exit and quickly restart when needed. When a monolithic master runs on AWS or another cloud, you pay compute charges (for example, for an “m4.16xlarge” instance) only while it is needed.

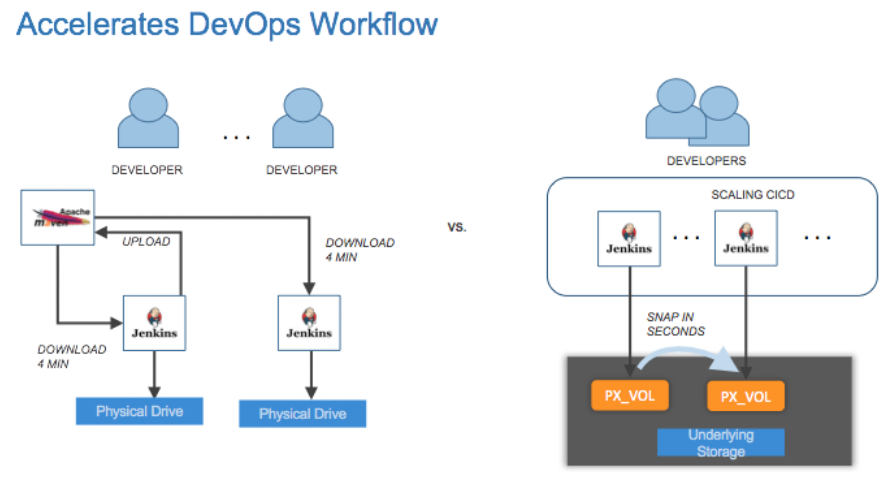

- Highly parallel / fully distributed: Without Portworx, the master delegates, and each slave runs its own complete build and test cycle. With Portworx, only the master performs the build cycle, and multiple slaves then run the test cycles in parallel. In this most powerful model, the master takes multiple volume snapshots when it completes its build cycle, then assigns a read/write snapshot to each slave to use as its own private volume for its test cycle.

- Easily scale out test cycles: Without Portworx, each slave needs its own private build volume, which is typically slow when it involves container-to-container copies. With Portworx, test cycles scale out easily: the master creates private read/write snapshots and spawns new slaves dynamically on demand.
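The “Faster incremental builds” model can be illustrated with a small local simulation. This is a minimal sketch, not Portworx or Jenkins code: two temporary directories stand in for the ephemeral “build” volume and the persistent “artifact” volume, and `run_build` and `source_digest` are hypothetical helpers. Outputs are cached in the artifact directory under a hash of the sources, so a brand-new slave with an empty build volume can reuse work an earlier slave already did.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def source_digest(sources: dict[str, str]) -> str:
    """Hash the source files (name -> contents) in a stable order."""
    h = hashlib.sha256()
    for name in sorted(sources):
        h.update(name.encode())
        h.update(b"\0")
        h.update(sources[name].encode())
        h.update(b"\0")
    return h.hexdigest()


def run_build(sources: dict[str, str], build_dir: Path, artifact_dir: Path) -> tuple[Path, bool]:
    """Hypothetical build step that reuses cached artifacts when possible.

    build_dir plays the ephemeral "build" volume; artifact_dir plays the
    persistent "artifact" volume. Returns (artifact_path, cache_hit).
    """
    artifact = artifact_dir / f"{source_digest(sources)}.out"
    if artifact.exists():
        return artifact, True  # an earlier slave already built these sources
    build_dir.mkdir(parents=True, exist_ok=True)
    # Stand-in for a real compile: concatenate the sources into one output.
    output = build_dir / "app.out"
    output.write_text("".join(sources[n] for n in sorted(sources)))
    artifact_dir.mkdir(parents=True, exist_ok=True)
    shutil.copy2(output, artifact)  # persist before the slave exits
    return artifact, False


if __name__ == "__main__":
    artifacts = Path(tempfile.mkdtemp(prefix="artifact-"))
    src = {"main.c": "int main(void) { return 0; }\n"}

    # First slave: empty artifact volume, so it must build.
    build1 = Path(tempfile.mkdtemp(prefix="build-"))
    _, hit1 = run_build(src, build1, artifacts)
    shutil.rmtree(build1)  # the slave exits and its build volume is discarded

    # Second slave: fresh build volume, same persistent artifact volume.
    build2 = Path(tempfile.mkdtemp(prefix="build-"))
    _, hit2 = run_build(src, build2, artifacts)
    print(hit1, hit2)  # False True
```

A real pipeline would key the cache on more than the source text (compiler version, build flags, dependency versions), and the artifact directory would be a persistent volume mounted into each slave container rather than a local temp directory.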

Proof in the pudding

Actual performance matters much more than any theoretical discussion. To demonstrate, we used the Jenkins “Game of Life” demo to compare the conventional way of running Jenkins with a combination of the “Faster incremental builds” and “Highly parallel / fully distributed” models described above.

Each “baseline” feature build job includes the following steps:

- Create a slave build container on the ECS cluster.

- Git clone the project and check out the particular feature branch.

- Copy the Library folder contents from the Jenkins master or an NFS location to the slave container workspace. This requires copying 5-6 GiB of files from the Library folder to each slave container.

- Run the build process.

- Archive the build output artifact back to the Jenkins master.

By contrast, using snapshots for your Jenkins slaves can accelerate your builds dramatically.

To speed up Jenkins builds, you need to:

- Create a snapshot of the Library folder.

- Create a slave build container and use the snapshot volume as the slave’s Jenkins workspace.

- Git clone the project and check out the particular feature branch.

- Run the build process.

- Archive the build output artifact back to the build artifact shared volume.

* A dedicated snapshot volume is created for each feature build process.

* The snapshot volumes are deleted after the build process completes.

* The build artifact PX shared volume is mounted into each slave build agent when its container starts.
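The per-job lifecycle above (snapshot the Library folder, use the snapshot as a private workspace, build, archive to the shared artifact volume, delete the snapshot) can be sketched as a local simulation. Everything here is an assumption for illustration: directories stand in for volumes, and `shutil.copytree` stands in for a Portworx snapshot, although a real snapshot is copy-on-write and avoids copying the 5-6 GiB up front. `create_snapshot`, `delete_snapshot`, `build_feature`, and `run_job` are hypothetical helpers, not Portworx or Jenkins APIs. The point is the shape: each job writes only to its own copy, artifacts land in one shared place, and the snapshot is removed in a `finally` block even if the build fails.

```python
import shutil
import tempfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def create_snapshot(library: Path, snap_root: Path, job: str) -> Path:
    """Hypothetical stand-in for a volume snapshot: a private writable copy."""
    snap = snap_root / f"snap-{job}"
    shutil.copytree(library, snap)
    return snap


def delete_snapshot(snap: Path) -> None:
    """Hypothetical stand-in for deleting the job's snapshot volume."""
    shutil.rmtree(snap, ignore_errors=True)


def build_feature(job: str, workspace: Path) -> Path:
    """Hypothetical build step (clone + build): writes only to its workspace."""
    libs = sorted(p.name for p in workspace.iterdir())
    out = workspace / f"{job}.out"
    out.write_text(f"{job} built against {libs}\n")
    return out


def run_job(job: str, library: Path, snap_root: Path, artifacts: Path) -> Path:
    snap = create_snapshot(library, snap_root, job)  # dedicated snapshot per job
    try:
        output = build_feature(job, snap)  # snapshot used as the workspace
        return Path(shutil.copy2(output, artifacts / output.name))  # archive
    finally:
        delete_snapshot(snap)  # snapshot deleted once the build finishes


if __name__ == "__main__":
    root = Path(tempfile.mkdtemp())
    library, snaps, artifacts = root / "library", root / "snaps", root / "artifacts"
    for d in (library, snaps, artifacts):
        d.mkdir()
    (library / "libfoo.a").write_text("prebuilt library\n")

    # Ten feature builds in parallel, mirroring the ten ecs-job runs.
    jobs = [f"ecs-job{i}" for i in range(1, 11)]
    with ThreadPoolExecutor(max_workers=len(jobs)) as pool:
        results = list(pool.map(lambda j: run_job(j, library, snaps, artifacts), jobs))

    # expected: 10 ['libfoo.a'] []
    print(len(results), sorted(p.name for p in library.iterdir()), list(snaps.iterdir()))
```

The shared Library folder is never modified, and no snapshots are left behind once the jobs finish.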

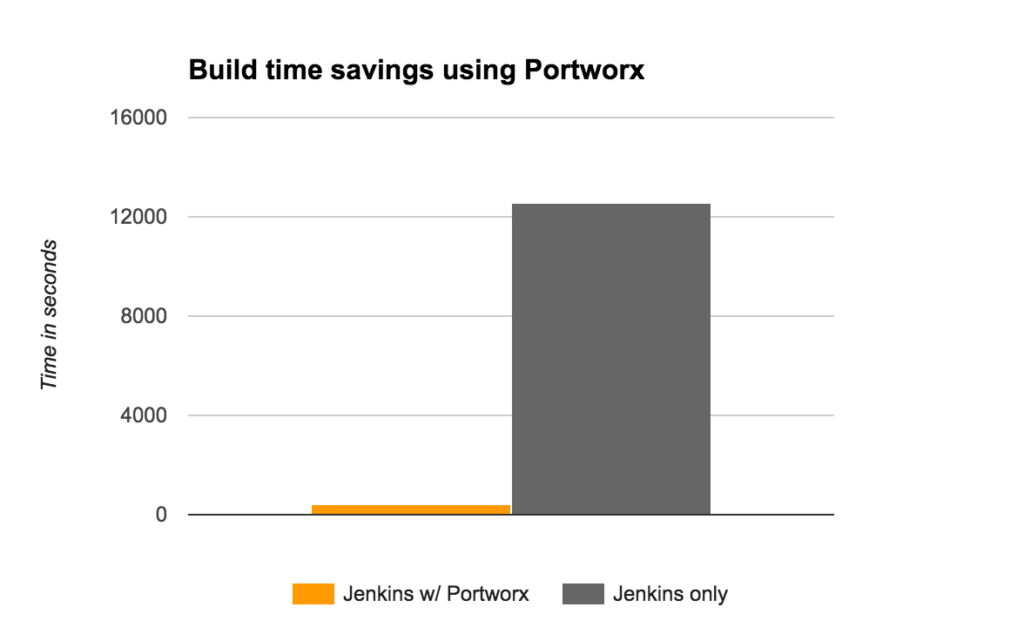

The time savings are simply astounding:

| Jenkins job name | Build time with PX snapshots and artifact saves | Build time without PX snapshots |
|---|---|---|
| ecs-job1 | 6 min 9 sec | 3 hr 26 min |
| ecs-job2 | 5 min 58 sec | 3 hr 28 min |
| ecs-job3 | 2 min 30 sec | 3 hr 28 min |
| ecs-job4 | 6 min 3 sec | 3 hr 27 min |
| ecs-job5 | 5 min 25 sec | 3 hr 28 min |
| ecs-job6 | 3 min 27 sec | 3 hr 26 min |
| ecs-job7 | 3 min 22 sec | 3 hr 28 min |
| ecs-job8 | 6 min 23 sec | 3 hr 25 min |
| ecs-job9 | 3 min 23 sec | 3 hr 26 min |
| ecs-job10 | 2 min 27 sec | 3 hr 25 min |

Total time for the 10 jobs run in parallel with PX snapshots and saved artifacts: 0:07:14 (h:mm:ss).

Total time for the 10 jobs run in parallel without PX snapshots: 3:29:00 (h:mm:ss).
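The headline numbers can be checked with a little arithmetic. The short Python script below copies the per-job times and the two reported totals from the table, converts them to seconds, and computes the overall and per-job speedups. Because the ten jobs ran in parallel, each reported total should be at least as long as the slowest single job, which the script also checks. The helper names `to_seconds` and `hms` are just for this illustration.

```python
import re

# Per-job times copied from the table: (with PX snapshots, without PX snapshots).
TABLE = {
    "ecs-job1": ("6 min 9 sec", "3 hr 26 min"),
    "ecs-job2": ("5 min 58 sec", "3 hr 28 min"),
    "ecs-job3": ("2 min 30 sec", "3 hr 28 min"),
    "ecs-job4": ("6 min 3 sec", "3 hr 27 min"),
    "ecs-job5": ("5 min 25 sec", "3 hr 28 min"),
    "ecs-job6": ("3 min 27 sec", "3 hr 26 min"),
    "ecs-job7": ("3 min 22 sec", "3 hr 28 min"),
    "ecs-job8": ("6 min 23 sec", "3 hr 25 min"),
    "ecs-job9": ("3 min 23 sec", "3 hr 26 min"),
    "ecs-job10": ("2 min 27 sec", "3 hr 25 min"),
}


def to_seconds(text: str) -> int:
    """Convert strings like '3 hr 26 min' or '6 min 9 sec' to seconds."""
    units = {"hr": 3600, "min": 60, "sec": 1}
    return sum(int(v) * units[u] for v, u in re.findall(r"(\d+)\s*(hr|min|sec)", text))


def hms(text: str) -> int:
    """Convert an 'h:mm:ss' string to seconds."""
    h, m, s = (int(x) for x in text.split(":"))
    return h * 3600 + m * 60 + s


with_px = {job: to_seconds(a) for job, (a, _) in TABLE.items()}
without_px = {job: to_seconds(b) for job, (_, b) in TABLE.items()}
total_with, total_without = hms("0:07:14"), hms("3:29:00")

# Jobs ran in parallel, so each total must cover at least the slowest job.
assert total_with >= max(with_px.values())
assert total_without >= max(without_px.values())

speedups = {job: without_px[job] / with_px[job] for job in TABLE}
print(f"overall speedup: {total_without / total_with:.1f}x")  # 28.9x
print(f"per-job speedup: {min(speedups.values()):.1f}x to {max(speedups.values()):.1f}x")  # 32.1x to 83.7x
```

The overall figure (12,540 s / 434 s, about 28.9x) is lower than every per-job ratio because the 0:07:14 total includes time beyond the slowest single job (6 min 23 sec).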

Concluding thoughts

When companies adopt CI/CD models and methodologies, they do so to become more responsive and adaptive. Everything changes, and staying on top of the complexity that continuous change creates matters most. “Responsiveness” implies a time dimension that is usually described only qualitatively, as “quick”. Given the desire to respond as quickly as possible, using Portworx to solve common CI/CD problems delivers demonstrable and dramatic performance benefits: in the tests above, what used to take hours now takes minutes.

Want to learn more about running Jenkins in containers? Read more about Docker storage, Kubernetes storage and Marathon persistent storage so you can use your scheduler to automate the deployment of Jenkins in a container.


Jeff Silberman

Portworx | Global Solutions Architect
