Portworx Guided Hands On-Labs. Register Now

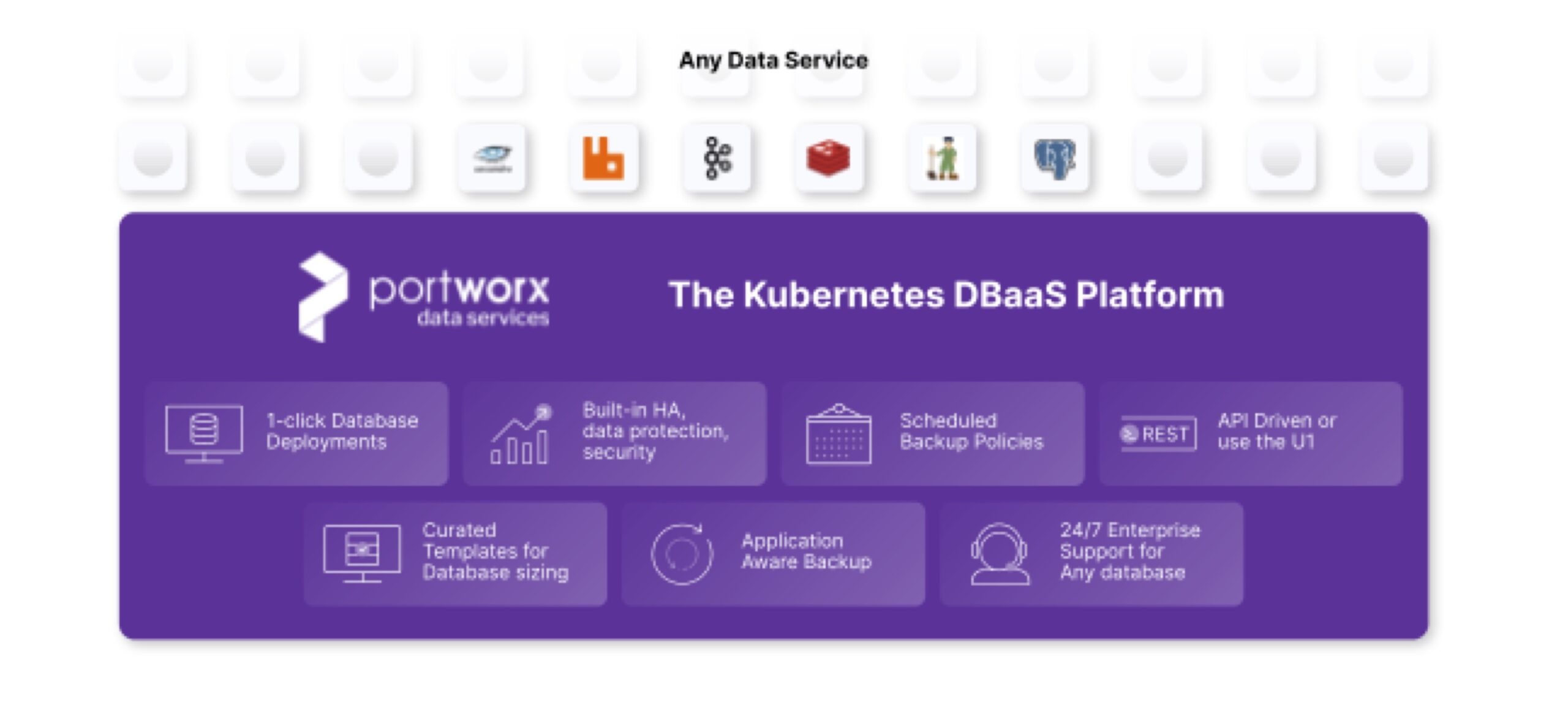

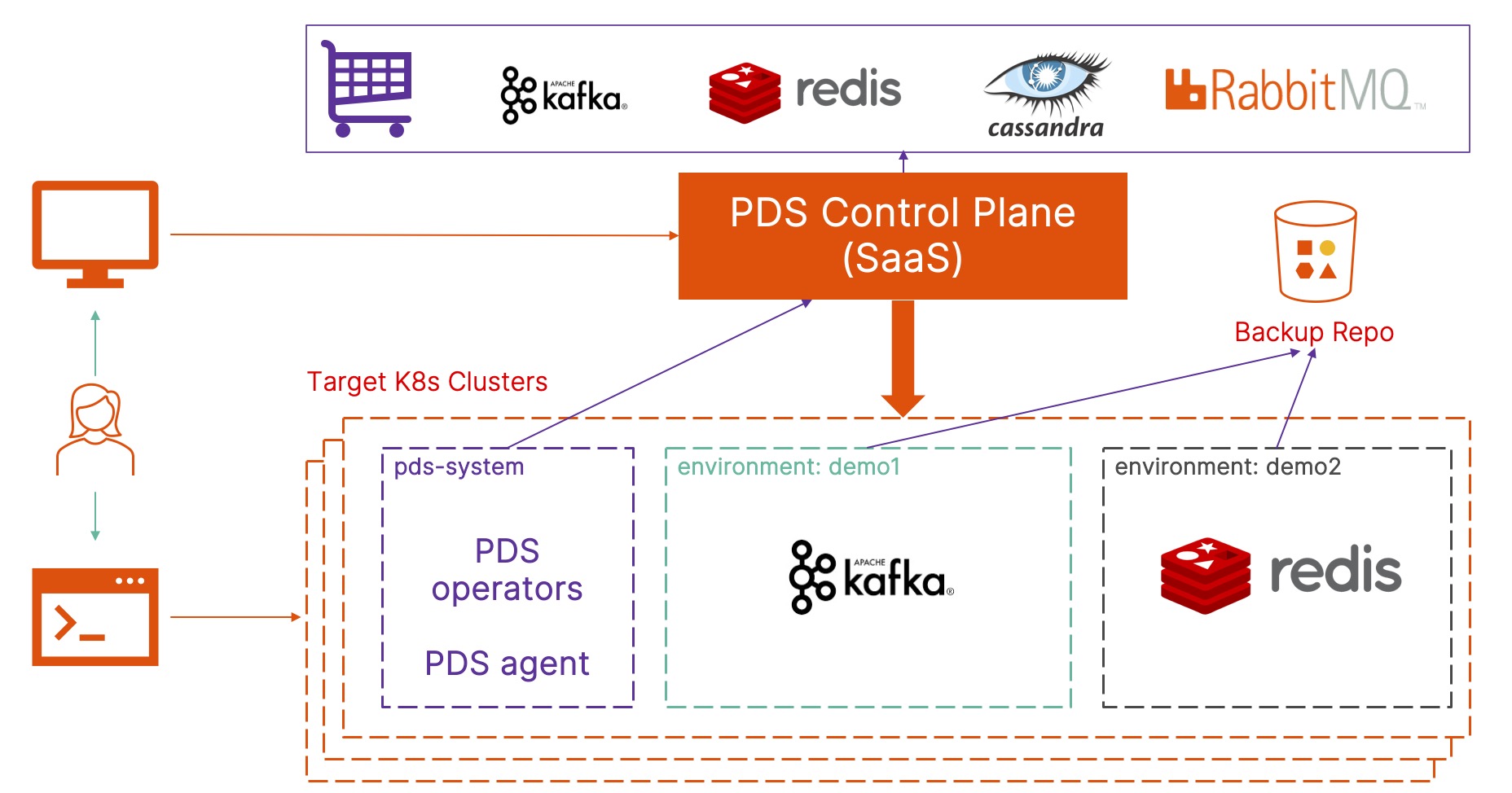

Portworx is a Kubernetes data-services platform that provides persistent storage, data protection, disaster recovery, and other capabilities for containerized applications. Portworx Data Services (PDS) builds upon and advances these capabilities by automating database deployment, management, and day-2 operations through a Database-as-a-Service platform for Kubernetes.

PDS is provided in the form of a catalog where a number of data services can be deployed. These data services include databases such as PostgreSQL, Cassandra, Kafka, Redis, Zookeeper, and Rabbitmq which can be deployed to any location where a Kubernetes cluster with Portworx Enterprise is present. To see how easy it is to accelerate data services deployment with PDS take a look at the general availability release blog post.

PostgreSQL, also known as Postgres, is a free and open-source relational database system designed to handle different workloads from small, isolated databases to data warehouses scaled across multiple systems. When deploying a new PostgreSQL data service from PDS there is a likelihood a migration of data from existing systems needs to take place. This migration of data could be from a non-containerized deployment, a single database in a cluster, or from an existing unmanaged container solution. The purpose of this blog is to showcase different PostgreSQL migrations that can be performed from a number of deployment types to PostgreSQL in PDS.

During the different migration scenarios the following tools included with PostgreSQL deployments will be used :

- pg_dump – Creates a consistent logical backup of a single database in a cluster.

- pg_dumpall – Creates a consistent logical backup of all databases and global objects in a cluster.

- pg_restore – Restores databases created using the archive file format.

- psql – A terminal-based front end to PostgreSQL. This is a command-line-based interactive tool for managing PostgreSQL clusters.

When migrating or copying one or more databases to PDS a logical backup of the database is created and restored to the intended PostgreSQL data service. The logical backups can be created using the output of pg_dump or pg_dumpall and then depending on the method of its creation, restored using psql or pg_restore. The commands shown in this blog can be run in any location where PostgreSQL is installed.

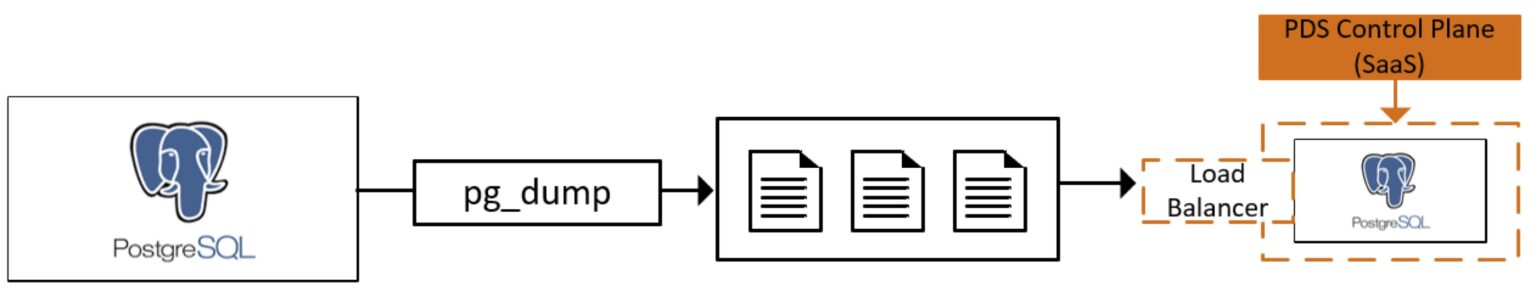

Migration using dump files

Using tools such as pg_dump or pg_dumpall outputs a logical stream of database object definitions and data. This data stream can output to a physical file(s) which can then be transferred to other systems. Dump files are especially useful when moving data between air-gapped systems with no line of sight connectivity to one another.

The following commands showcase how pg_dump should be used in each scenario :

Backup a local and individual database to local filesystem

This command creates a single file on the local system in a cleartext format.

pg_dump -d <database name> <backup file>.sql

Backup a remote and individual database to local system

This command creates a single file on a local system when connecting to a remote system. This can be useful for moving data between different systems using a pull method.

pg_dump -h <remote system address> -U <username> -p <port> -d <database name> > database.sql

Backup a local, individual database to a local system using file format options

Using file format options allows for greater flexibility in terms of performance and compression options. For larger databases that require accelerated backup and recovery with pg_dump this method is suitable and requires that pg_restore be used on recovery. This command uses the directory format with 8 jobs to speed up the backup process.

pg_dump -f <backup directory> -j 8 -F directory -d <database name>

Restore to a PDS data service using a local file in cleartext format

The permissions that are present for the source database may need to be recreated prior to restoring the objects to the PDS database. This can be done manually or through the use of pg_dumpall with the globals option as below :

Backup credentials : pg_dumpall --globals-only --file=globals.sql

Prior to restoring the credentials and database options, it may be necessary to review the output of the globals file to ensure that only the required options will be applied to the PDS database.

Restore credentials : psql -h <pds load balancer address> -u <pds user> globals.sql

The single file created as cleartext output from pg_dump can be piped directly into the psql command-line utility in one of two ways :

Using psql with a remote host :

psql -h <remote load balancer data service address> -U <PostgreSQL User> <backup file>.sql

Using psql directly against the container pod with kubectl :

kubectl exec -i <postgresql pod name> -n <pds namespace> -- psql -h /tmp/ <backup file on kubectl host>.sql

Restore to a PDS data service using file format options

When creating backups with pg_dump using the directory file format option then the restore procedure must utilize pg_restore. The format for restoring to a PDS data service with 8 jobs will follow this pattern :

pg_restore -h <remote load balancer data service address> -U pds -d <database> -j 8 <backup folder location>

Backup all databases and permissions

The following command using pg_dumpall will output all of the databases and global objects (credentials) into a single file:

pg_dumpall > <backup file>.sql

Using psql with a remote host :

psql -h <remote load balancer data service address> -U <PostgreSQL User> > <backup file>.sql

Using psql directly against the container pod with kubectl :

kubectl exec -i <postgresql pod name> -n <pds namespace> -- psql -h /tmp/ > <backup file on kubectl host>.sql

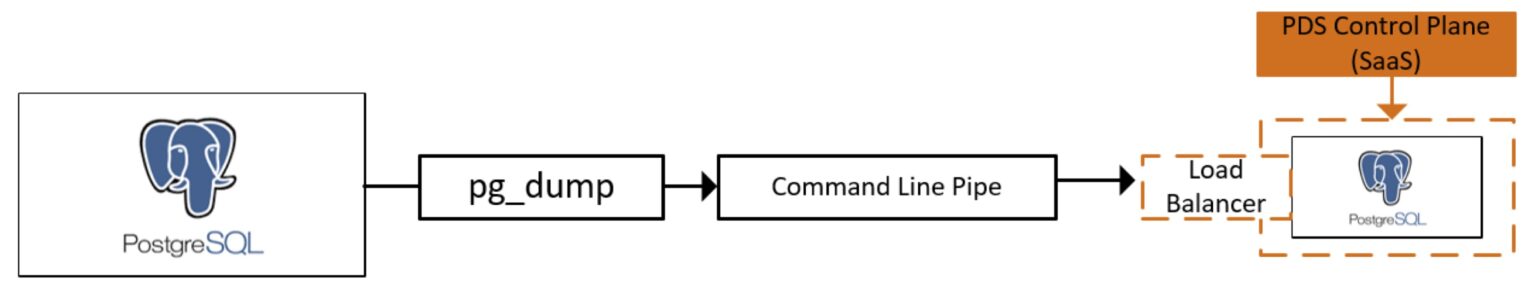

Migration using pipeline output

This method of migration is appropriate where the PostgreSQL data service has a line of sight connectivity with the original PostgreSQL cluster, or a PostgreSQL deployment is present with a line of sight to both. These commands will read directly from the source deployment and output it directly to the PostgreSQL data service. Due to the security implications of storing passwords in command line history this method is best suited for container to container migration.

Copy a single database

First copy the credentials and global objects :

pg_dumpall --globals-only --dbname=postgresql://<user>:<password>@<hostname>:<port> | psql -h <remote load balancer data service address> -U pds

Then copy the object definitions and data:

pg_dump --dbname=postgresql://<user>:<password>@<hostname>:<port>/<database name> | psql -h <remote load balancer data service address> -U pds

Copy all credentials and databases

pg_dumpall --dbname=postgresql://<user>:<password>@<hostname>:<port> | psql -h <remote load balancer data service address> -U pds

Learn more about Portworx Data Services

If you have PostgreSQL, Cassandra, Redis , RabbitMQ or Kafka data services that might benefit from being managed services, check out Portworx Data Services.

Share

Subscribe for Updates

About Us

Portworx is the leader in cloud native storage for containers.

Thanks for subscribing!

Andrew Sillifant

Senior Solutions Manager & ArchitectExplore Related Content:

- Portworx Data Services

- postgres

- postgresql