Blog

Read articles about Kubernetes container storage technology, Kubernetes backup, and data services, written by the thought leaders at Portworx.

Feb 2, 2024

Product Announcements

Portworx Enterprise 3.1.0 - Introducing Journal I/O Profile

Learn More About Portworx

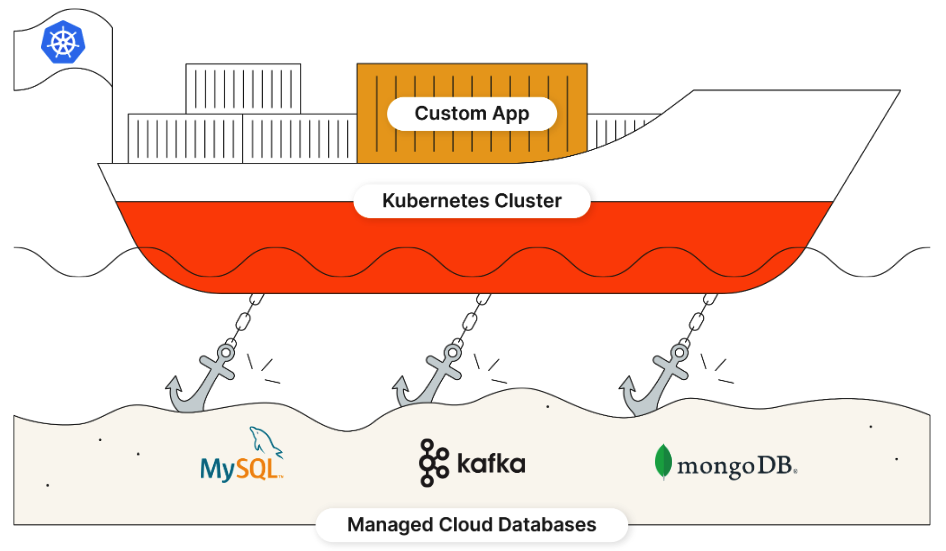

Enterprises trust Portworx to run mission-critical applications in containers in production. As the #1 most-used Kubernetes data services platform among Global 2000 companies, Portworx provides a fully integrated solution for persistent storage, data protection, disaster recovery, data security, cross-cloud and data migrations, and automated capacity management for applications running on Kubernetes.
