
One of the major features in our recent Portworx Enterprise 3.1.0 release was the introduction of the “journal” I/O Profile. Portworx uses I/O profiles to optimize the performance of Portworx volumes by changing how a Portworx volume interacts with the disks backing the abstracted Portworx StorageCluster in Kubernetes. This allows users to tune performance at a StorageClass or volume level depending on the workload using the Portworx volume, providing granular control of performance characteristics on a per-application basis.

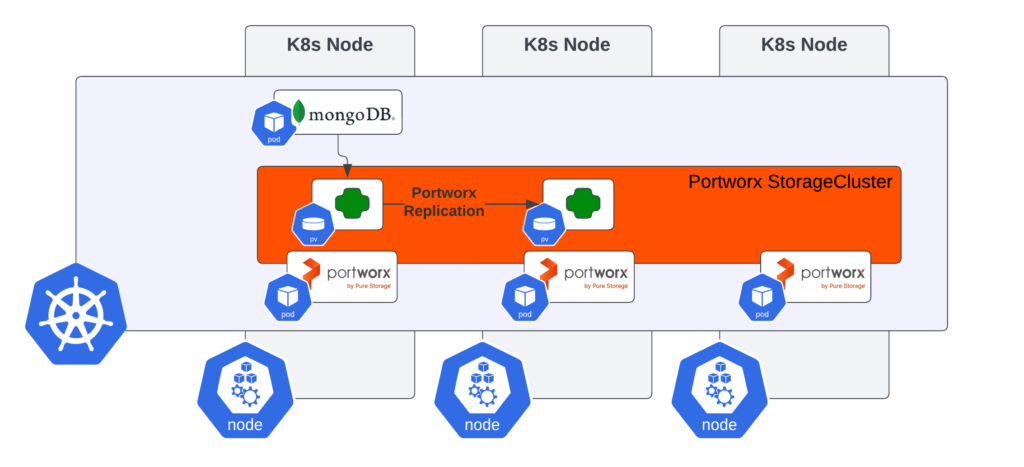

Portworx Volume and Replication Factor Overview

One of the core features of Portworx Enterprise is to provide availability and resiliency of PersistentVolumes (PV) in a Kubernetes cluster. This is achieved by defining a “replication factor” for each PV via the StorageClass used to provision the PersistentVolumeClaim (PVC), or by using the Portworx CLI utility pxctl. Portworx allows a replication factor of one, two, or three for each Portworx volume and synchronously writes any changes to all of the replicas prior to reporting back to the application that the data was successfully written. The illustration below shows Portworx replication occurring at the storage level instead of replication at the application/database level.

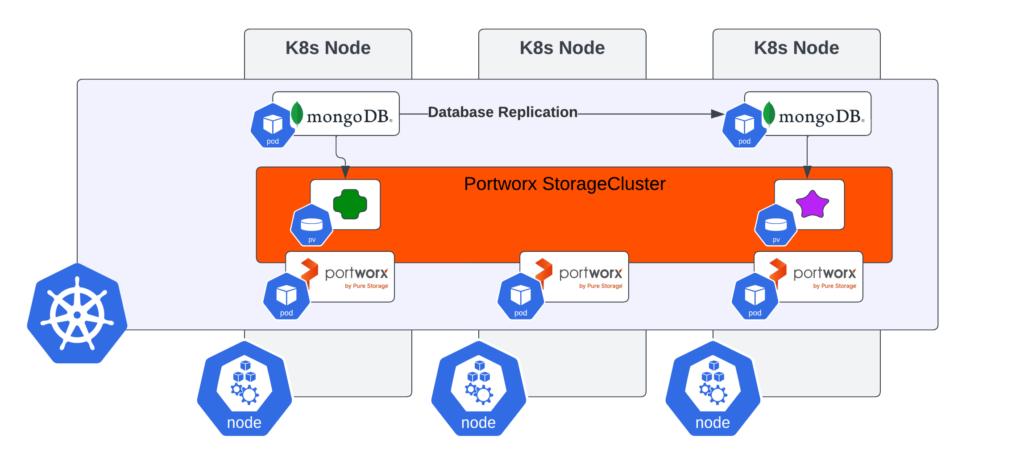
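As a minimal sketch of setting the replication factor through a StorageClass, the manifest below requests three replicas for every volume it provisions. The name px-repl3 is hypothetical; the repl parameter and the pxd.portworx.com provisioner are the same ones used in the StorageClass definitions later in this post.

```yaml
# Illustrative StorageClass: each PV provisioned from it keeps three synchronous replicas.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: px-repl3            # hypothetical name, not from the original test setup
provisioner: pxd.portworx.com
parameters:
  repl: "3"                 # replication factor: "1", "2", or "3"
reclaimPolicy: Delete
volumeBindingMode: Immediate
allowVolumeExpansion: true
```

Any PVC that names this StorageClass would receive a volume whose writes are acknowledged only after all three replicas have them.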

By spreading PV replicas across failure domains (Availability Zones in public clouds, physical racks and servers in on-premises infrastructure), Portworx keeps a copy of an application's data available if the worker node hosting the application pod and its data goes down. Users who prefer not to rely on in-application replication can therefore let Portworx handle availability of the underlying data. Conversely, users who want in-application replication can set the replication factor to one and let the application provide availability and resiliency of the underlying data. The illustration below shows in-application replication occurring at the database level instead of replication at the Portworx storage level.

Portworx uses our “auto” I/O profile by default, and automatically assigns the “db_remote” I/O profile when a replication factor of two or three is detected. The db_remote I/O profile implements a write-back flush coalescing algorithm that combines multiple syncs that occur within a 100ms window. This can provide enhanced performance for write-intensive modern databases due to a sync not needing to occur for every write operation, and the application performing the writes is completely unaware of what is happening under the covers – but is able to benefit from the boost in performance when write operations occur.

Why A New I/O Profile?

As mentioned above, Portworx only performs write-back flush coalesce operations when the replication factor for a PV is set to two or three. This means that for users who are using in-application replication, there has been no ability to boost performance for single replica volumes.

The new journal I/O profile brings similar performance to single replica volumes when using Portworx 3.1.0 or later. This is achieved by staging writes to a small journal disk within the Portworx StorageCluster until they are stable. Once a write is stable on the journal device, it is acknowledged back to the requesting application and later flushed to the storage pool backing the PV provisioned for the application. Writing data to the journal amortizes the cost of the sync operations performed by the application, providing a performance boost similar to the one customers are used to with the Portworx db_remote I/O profile.

In addition to the standard journal I/O profile, Portworx Enterprise 3.1.0 also introduced the “auto_journal” I/O profile. This is an I/O pattern detector that analyzes the last 24 seconds of I/O to a PV, and determines whether the journal I/O profile could improve the performance of the data being written by the application. Once the pattern detector achieves the required confidence level, it will configure the volume to either use or avoid the journal I/O profile. If journal I/O profile avoidance is triggered, the volume will use the “none” I/O profile and no optimization between Portworx and the storage devices backing the storage pool will be performed.
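Opting a single replica volume into the pattern detector would presumably look like the journal StorageClass used in the test below, with only the profile value changed. This is a sketch under that assumption: the name px-autojournal-repl1 is hypothetical, and the exact io_profile value string should be confirmed against the Portworx documentation for your release.

```yaml
# Illustrative StorageClass: lets Portworx decide per volume whether journaling helps.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: px-autojournal-repl1   # hypothetical name
provisioner: pxd.portworx.com
parameters:
  io_profile: auto_journal     # assumed value string, taken from the profile name above
  repl: "1"                    # single replica; availability is left to the application
reclaimPolicy: Delete
volumeBindingMode: Immediate
allowVolumeExpansion: true
```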

The requirement for the journal device is simple: its IOPS and throughput capabilities must be equal to or greater than those of the highest performing backing device in the Portworx storage pool that hosts the PV. For example, if your Portworx storage pool consisted of gp3 EBS volumes on AWS with capabilities of 16,000 IOPS and 1,000 MB/s of throughput, the journal device within the Portworx StorageCluster would need to provide at least 16,000 IOPS and 1,000 MB/s of throughput.

Testing PostgreSQL TPS and Results

To illustrate the performance boost that the journal I/O profile provides on single replica volumes, we set up a test harness to measure transactions per second (TPS) and average latency for PostgreSQL. The harness consisted of the following:

- A three node EKS 1.27 cluster using m5.4xlarge instances in AWS

- On each EKS worker node, the following EBS volumes were provisioned for the Portworx StorageCluster:

  - 150GB GP3 volumes for storage pool backing drives (16k IOPS, 1k MB/s)

  - 32GB GP3 volumes for journal device drive (16k IOPS, 1k MB/s)

- postgres:16.1-alpine image used for pgbench testing
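The drive layout listed above could be expressed in a StorageCluster spec roughly as follows. This is a hedged fragment rather than the manifest used in the test: the cluster name and namespace are hypothetical, and the IOPS and throughput settings are deliberately left out because the exact key names for them should be taken from the Portworx documentation rather than from this sketch.

```yaml
# Illustrative fragment only: pool and journal drives for a Portworx cluster on AWS.
apiVersion: core.libopenstorage.org/v1
kind: StorageCluster
metadata:
  name: px-cluster             # hypothetical name
  namespace: portworx          # hypothetical namespace
spec:
  cloudStorage:
    deviceSpecs:
      - type=gp3,size=150      # storage pool backing drive provisioned per node
    journalDeviceSpec: type=gp3,size=32   # dedicated journal device per node
    # The journal device must match or exceed the pool drives' IOPS and throughput
    # (16k IOPS, 1k MB/s in this test); those settings are omitted here.
```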

We then configured two distinct StorageClasses for the PVC and PVs used by PostgreSQL, both with a single replica, one with the journal I/O profile enabled, and one without. The StorageClass definition that had journal enabled (note the “io_profile” parameter):

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      annotations:
      name: px-journal-repl1
    parameters:
      io_profile: journal
      repl: "1"
    provisioner: pxd.portworx.com
    reclaimPolicy: Delete
    volumeBindingMode: Immediate
    allowVolumeExpansion: true

And the StorageClass definition that did not have the journal I/O profile enabled (again, note the “io_profile” parameter):

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      annotations:
      name: px-nojournal-repl1
    parameters:
      io_profile: auto
      repl: "1"
      journal: "false"
    provisioner: pxd.portworx.com
    reclaimPolicy: Delete
    volumeBindingMode: Immediate
    allowVolumeExpansion: true

We then created 20GiB PVs using the above StorageClasses via PVC requests, and ran pgbench twice – once with the volume that had journal I/O profile enabled, and once with the volume that had journal I/O profile disabled (“auto”).
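For readers who want to reproduce a similar setup, a PVC and PostgreSQL pod for the journal-enabled run might look like the sketch below. Only the StorageClass name, the 20GiB size, the image tag, and the admin user come from this post; the object names, access mode, password, and PGDATA path are assumptions made for illustration.

```yaml
# Illustrative PVC: requests a 20GiB volume from the journal-enabled StorageClass.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: postgres-data-journal        # hypothetical name
spec:
  storageClassName: px-journal-repl1
  accessModes:
    - ReadWriteOnce                  # assumed; a single pod mounts the volume
  resources:
    requests:
      storage: 20Gi
---
# Illustrative pod: runs PostgreSQL on top of the PVC above.
apiVersion: v1
kind: Pod
metadata:
  name: postgres-journal             # hypothetical name
spec:
  containers:
    - name: postgres
      image: postgres:16.1-alpine
      env:
        - name: POSTGRES_USER
          value: admin               # matches the -U admin flag used with pgbench
        - name: POSTGRES_PASSWORD
          value: change-me           # placeholder; use a Secret in practice
        - name: PGDATA
          value: /var/lib/postgresql/data/pgdata   # subdirectory avoids lost+found clashes
      volumeMounts:
        - name: data
          mountPath: /var/lib/postgresql/data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: postgres-data-journal
```

Swapping storageClassName to px-nojournal-repl1 (and renaming the objects) would give the comparison volume for the second run.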

Creation of the PostgreSQL DB was performed with the command createdb -U admin sampledb, and initialization of the DB was performed with the command pgbench -U admin -i -s 600 sampledb. The TPS and latency test was then run against the sampledb created using the command pgbench -U admin -c 1 -j 1 -T 600 sampledb.

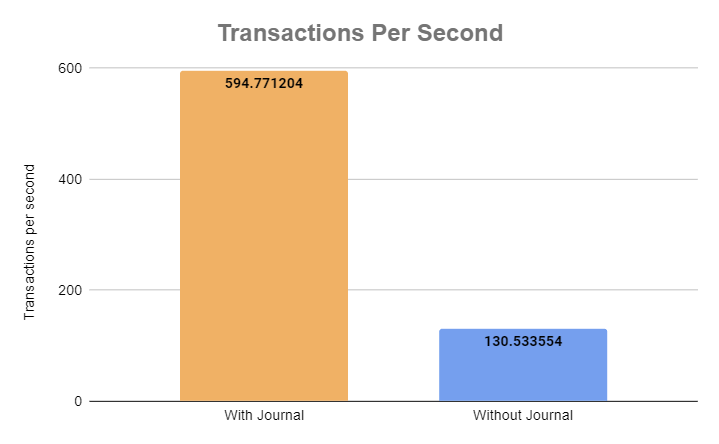

While this test was simple and synthetic using pgbench, the results we obtained were similar to the performance increases that our customers have seen in the field. With journal I/O profile enabled, we saw a 356% increase in transactions per second as compared to not using the journal I/O profile:

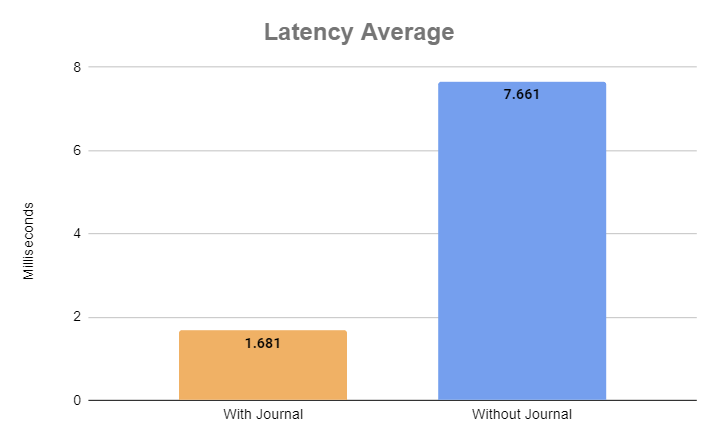

Improvements in latency were also observed when we used the journal I/O profile as opposed to not using it, where we saw a 78% decrease in latency during the pgbench test.
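The two percentages can be cross-checked against each other. Write $T_0$ and $L_0$ for TPS and average latency without the journal I/O profile, and $T_j$ and $L_j$ with it (symbols introduced here for illustration only). Assuming that with a single pgbench client (-c 1) throughput is roughly the inverse of average latency:

$$
\frac{T_j}{T_0} = 1 + 3.56 = 4.56, \qquad
\frac{L_j}{L_0} = 1 - 0.78 = 0.22, \qquad
\frac{L_0}{L_j} = \frac{1}{0.22} \approx 4.55
$$

A 78% drop in latency therefore corresponds to roughly a 4.5x throughput gain, which is in line with the reported 356% increase in TPS.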

Conclusion

With the 3.1.0 release of Portworx Enterprise, the journal I/O profile feature provides our customers even more flexibility in choosing how they want to provide availability and resiliency for their data within Kubernetes, without sacrificing outstanding performance. If your application architecture requires in-application replication and no resiliency at the PV layer is needed, this I/O profile is for you!

For more information on Portworx Enterprise features, capabilities, and use cases, visit https://portworx.com/services/kubernetes-storage/.


Tim Darnell

Director, Technical Marketing