
Portworx is a cloud native storage platform to run persistent workloads deployed on a variety of orchestration engines, including Kubernetes. With Portworx, customers can manage the database of their choice on any infrastructure using any container scheduler. It provides a single data management layer for all stateful services, no matter where they run.

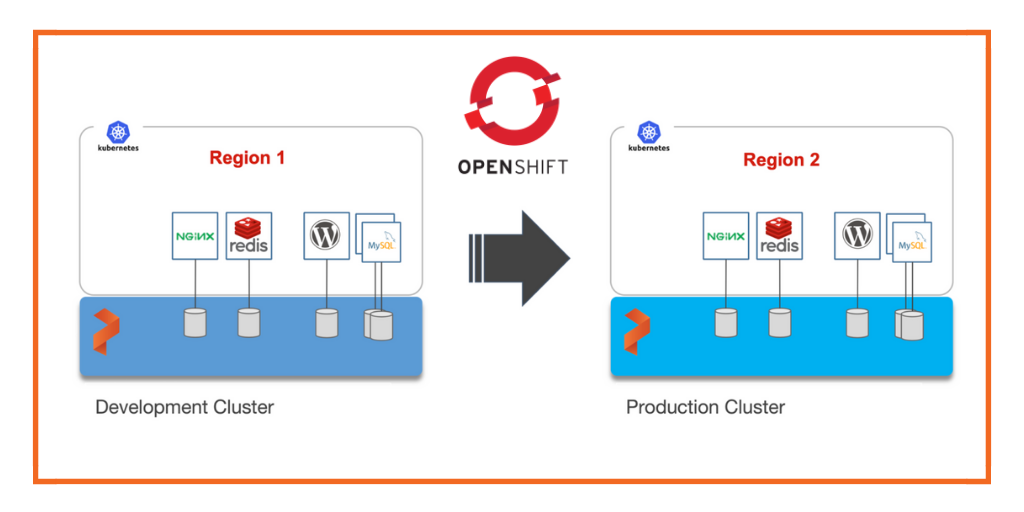

Kubemotion is one of the core building blocks of Portworx storage infrastructure. Introduced in Portworx Enterprise 2.0, it allows Kubernetes users to migrate application data and Kubernetes application configurations between clusters, enabling migration, backup & recovery, blue-green deployments, and more.

This step-by-step guide demonstrates how to move persistent volumes and Kubernetes resources associated with a stateful application from one Red Hat OpenShift cluster to another.

Background

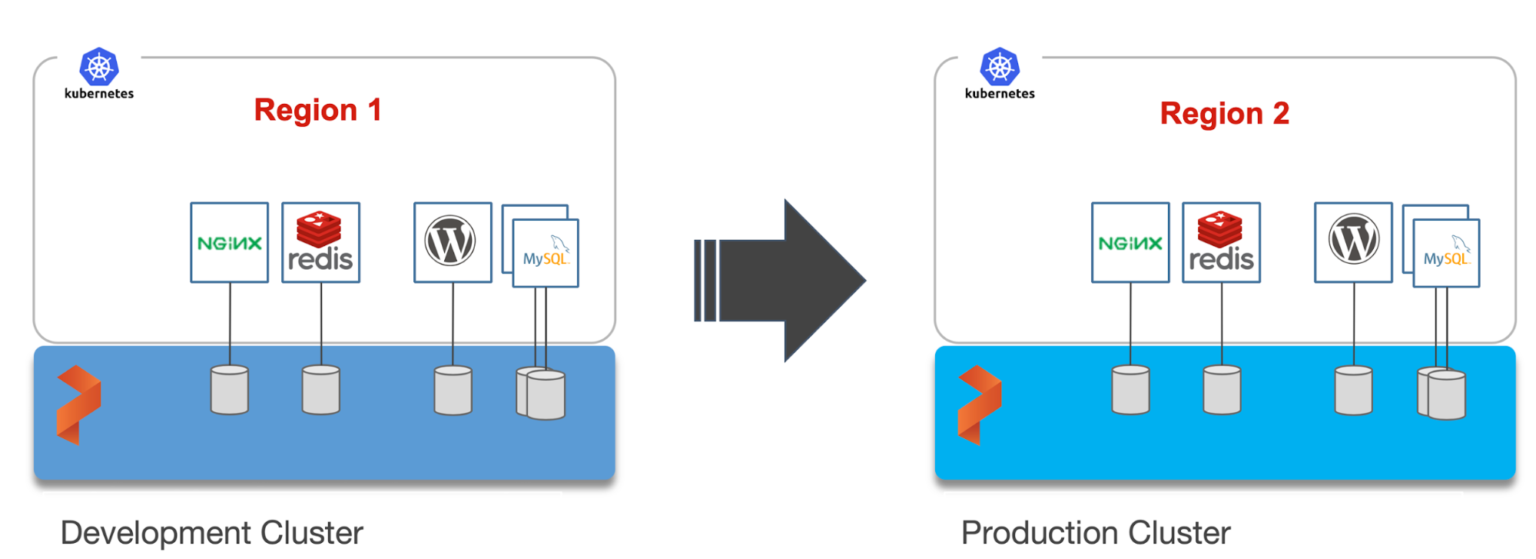

For enterprises, a common scenario is to run development and test environments in one cloud region and the production environment in another. Development teams may choose a region that’s geographically closer to them while deploying production applications in another region that has low latency for the users and customers.

Even though Kubernetes makes it easy to move stateless workloads across environments, achieving parity of stateful workloads remains a challenge.

For this walkthrough, we will move Kubernetes resources between two OpenShift clusters running in US East 2 (Ohio) and US West 2 (Oregon) regions of AWS. The Ohio region is used by the development teams for dev/test, and the Oregon region for the production environment.

After thoroughly testing an application in dev/test, the team will use Portworx and Kubemotion to move the storage volumes and application resources reliably from the development to production environment.

Exploring the Environments

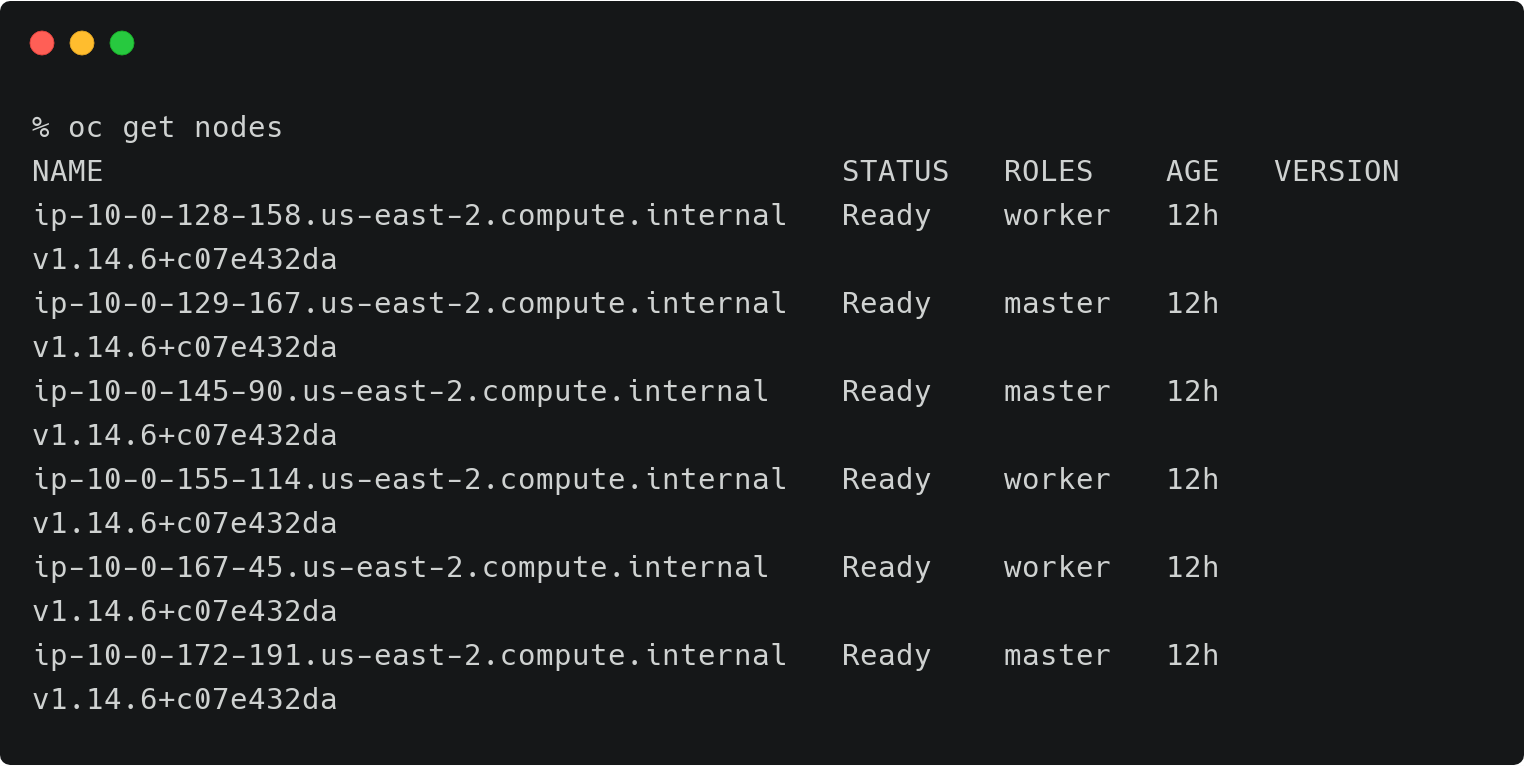

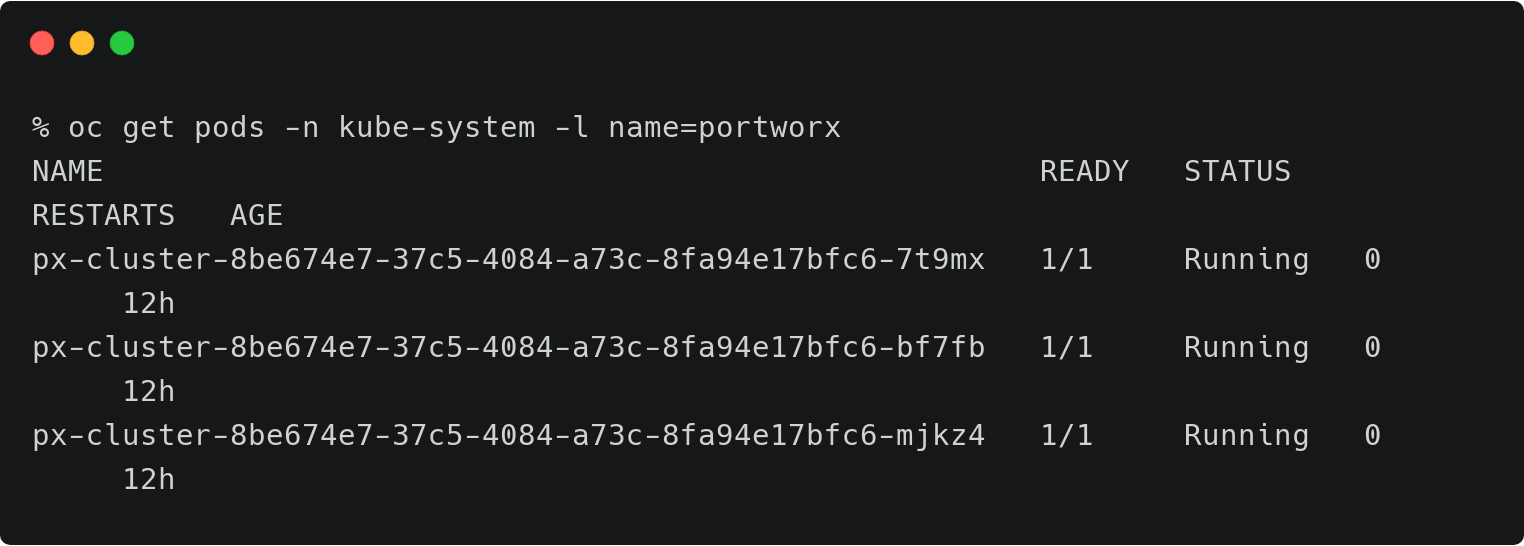

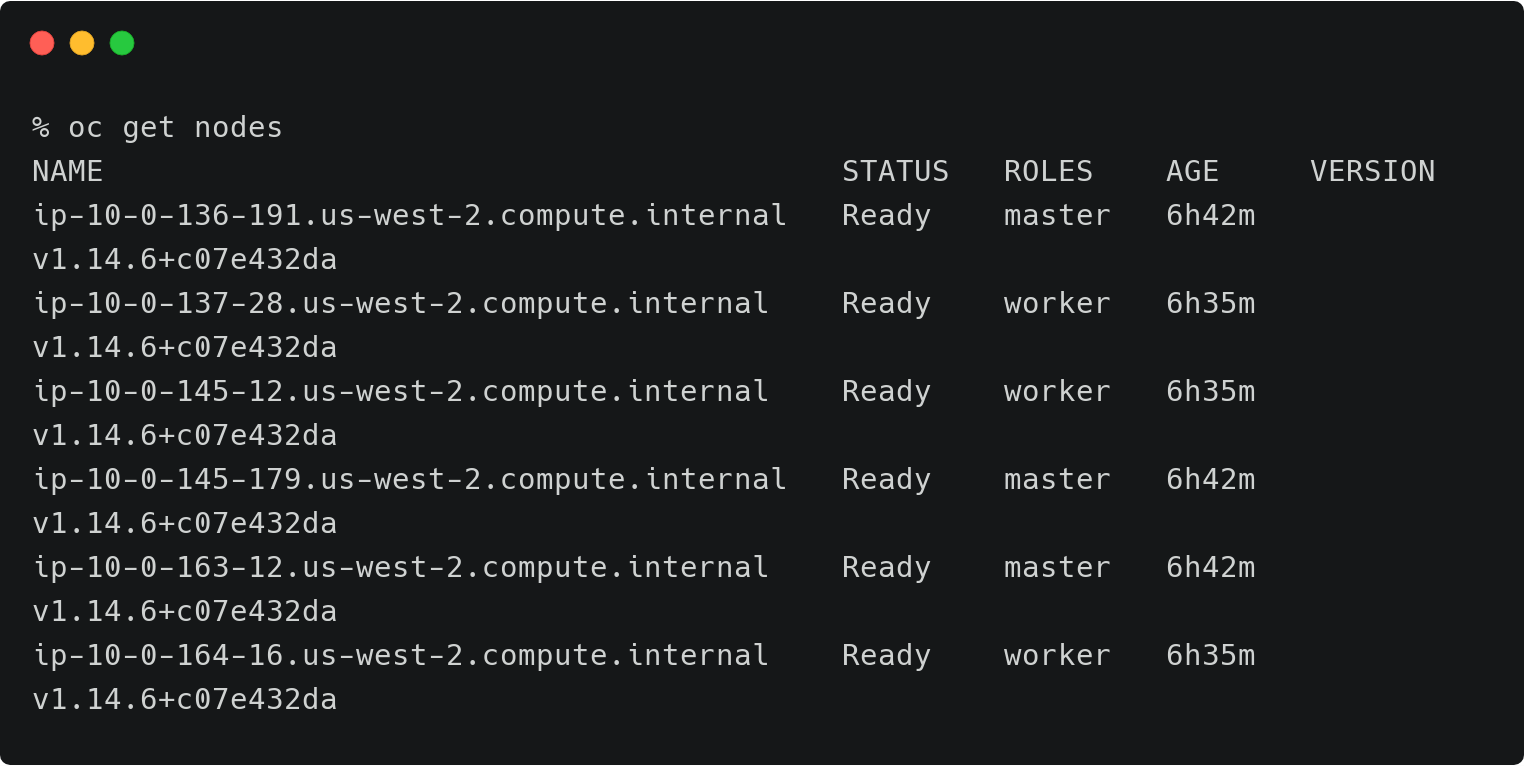

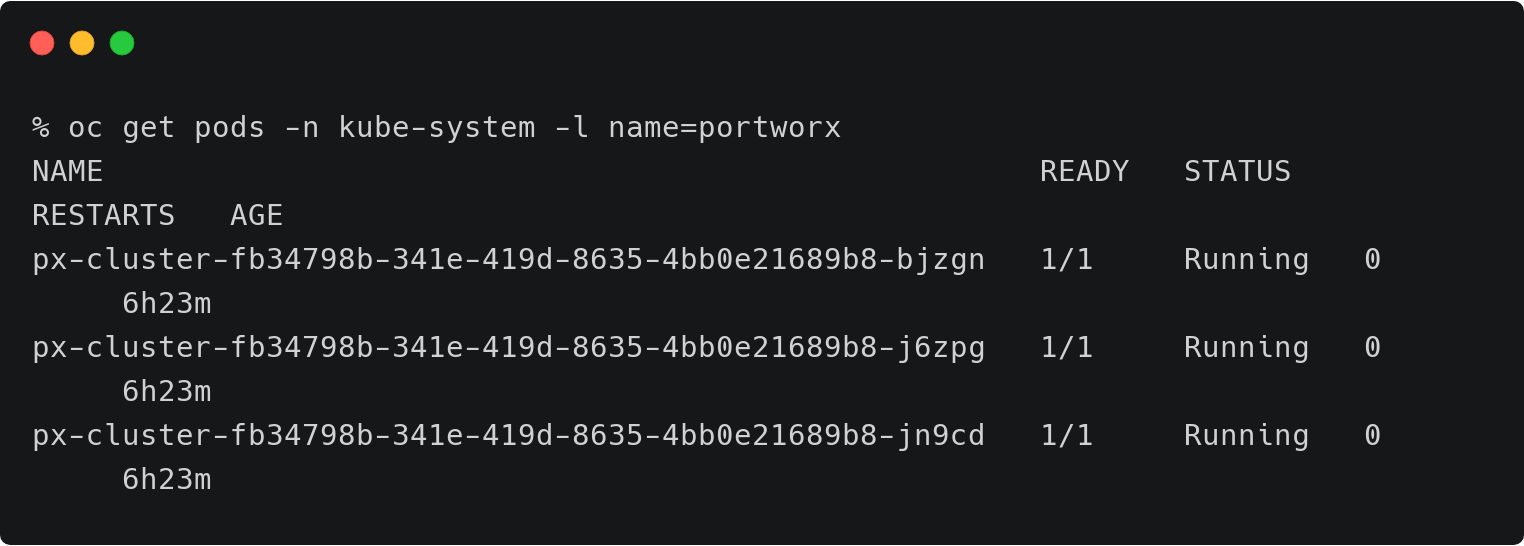

We have two Red Hat OpenShift clusters, dev and production, running in the Ohio and Oregon regions of AWS. Both run the latest version of Portworx.

The first cluster shown above is the development environment, running in the Ohio (us-east-2) region of AWS.

The second cluster is the production environment, running in the Oregon (us-west-2) region of AWS.

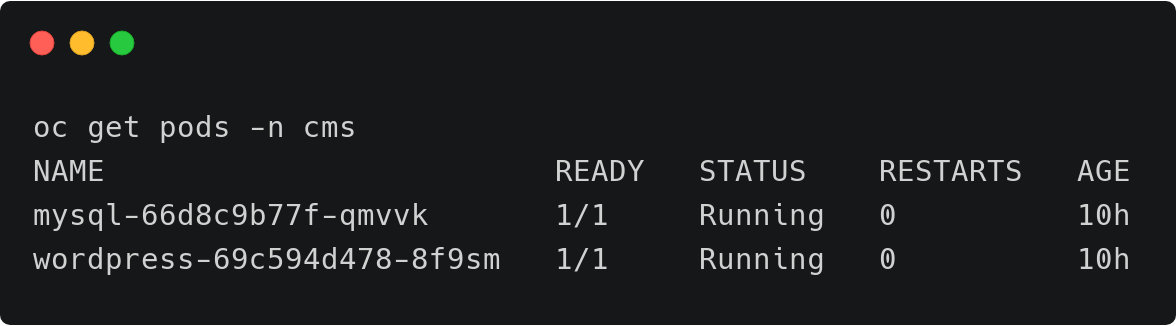

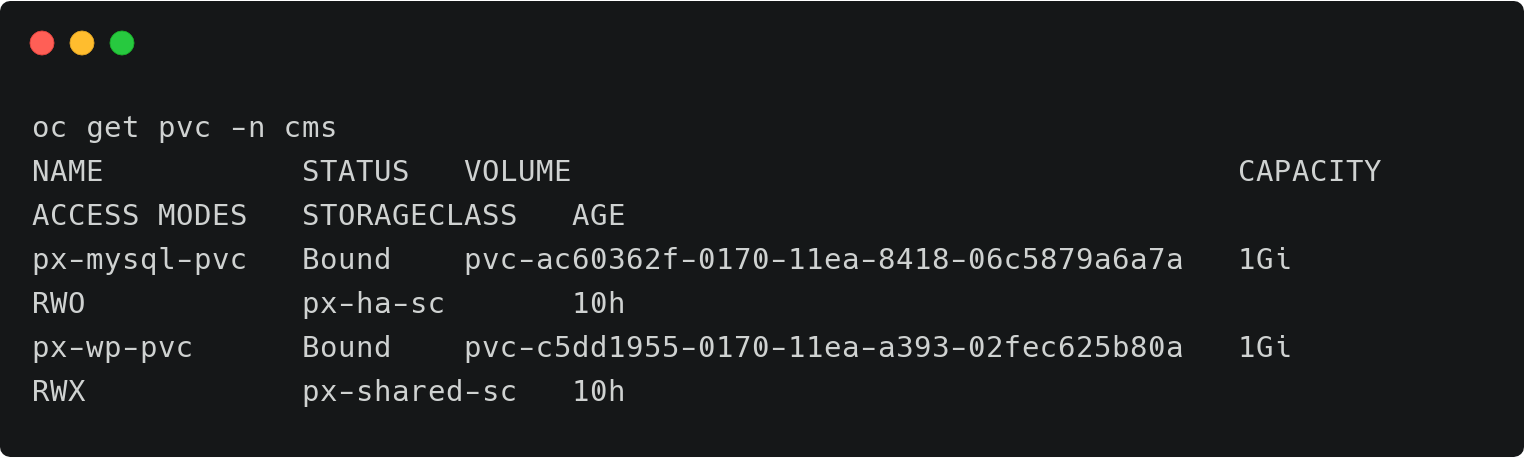

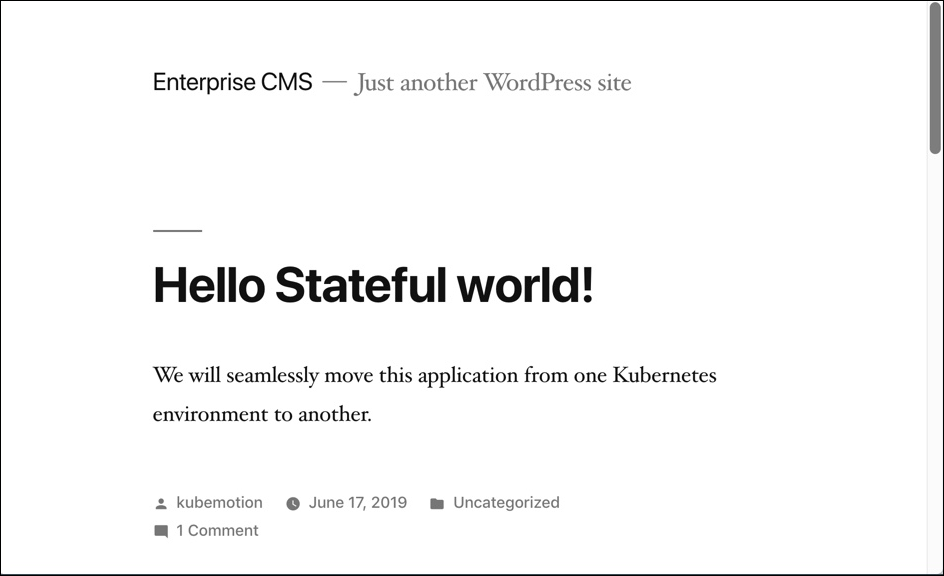

The dev/test environment currently runs a LAMP-based content management system that needs to be migrated to production.

It runs two deployments—MySQL and WordPress—in the cms namespace.

For a detailed tutorial on configuring a highly-available WordPress stack on Red Hat OpenShift, please refer to this guide.

The persistent volumes attached to these pods are backed by a Portworx storage cluster.

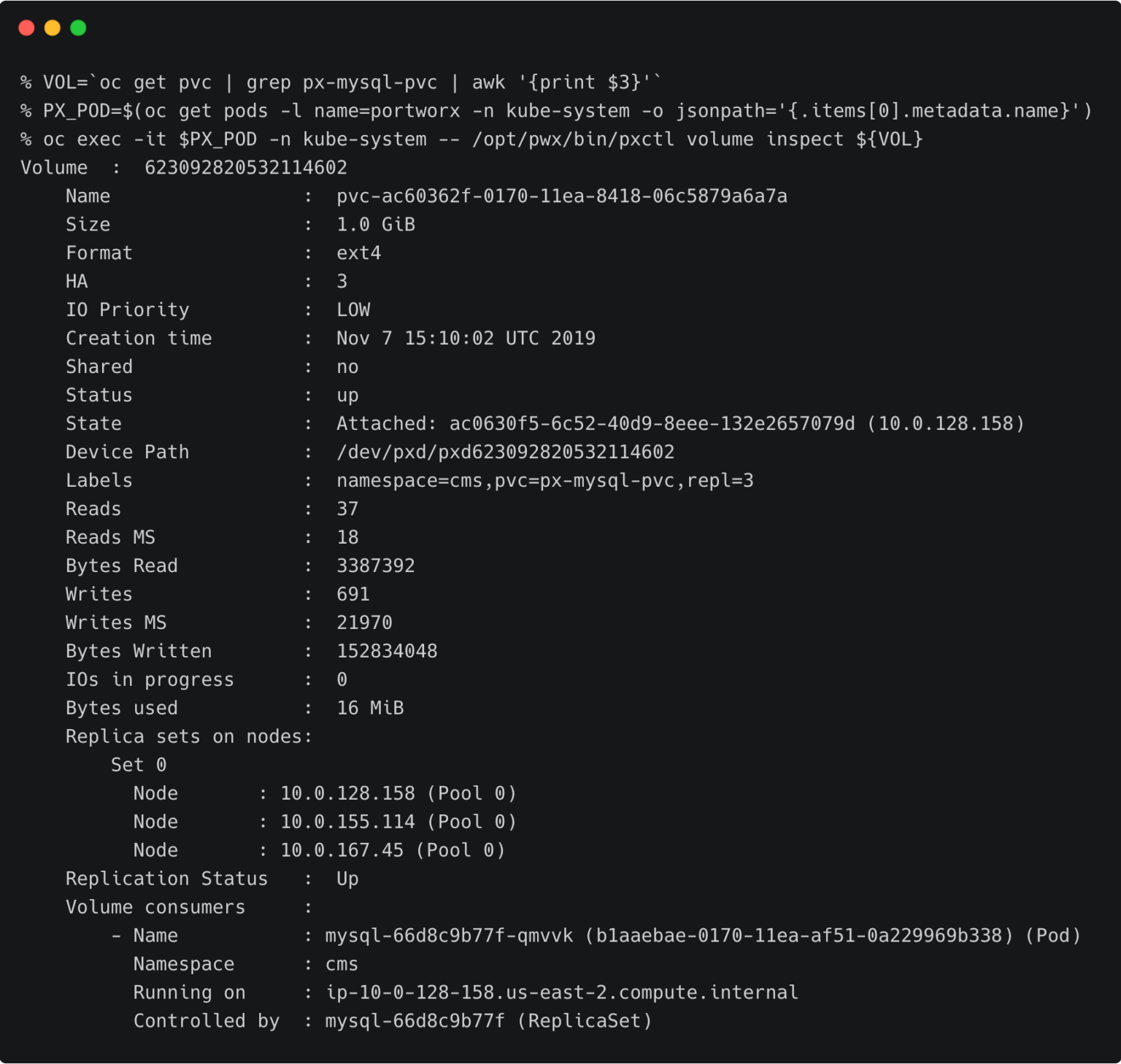

The below volume is attached to the MySQL pod.

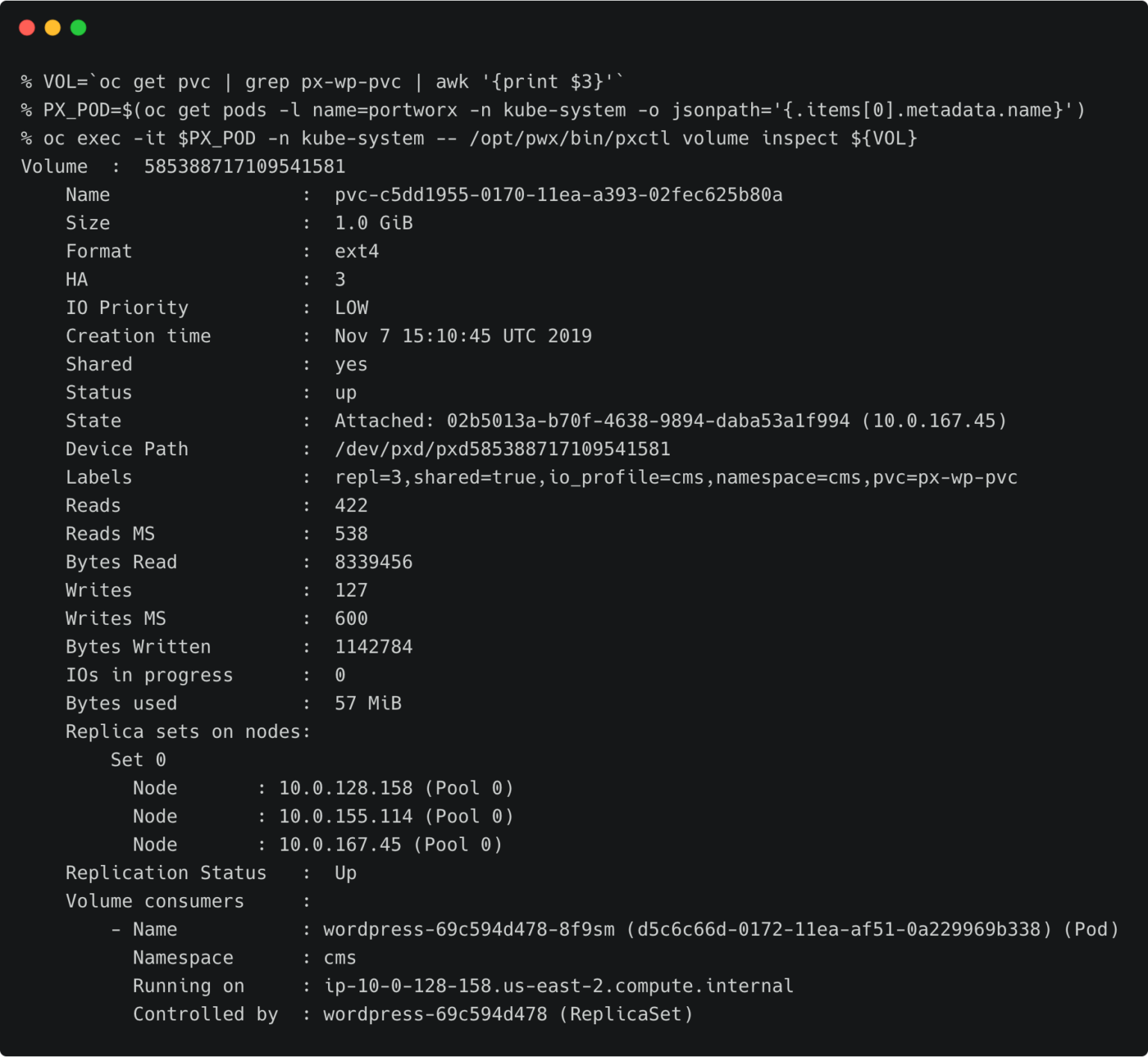

For the WordPress CMS, there is a shared Portworx volume attached to the pods.

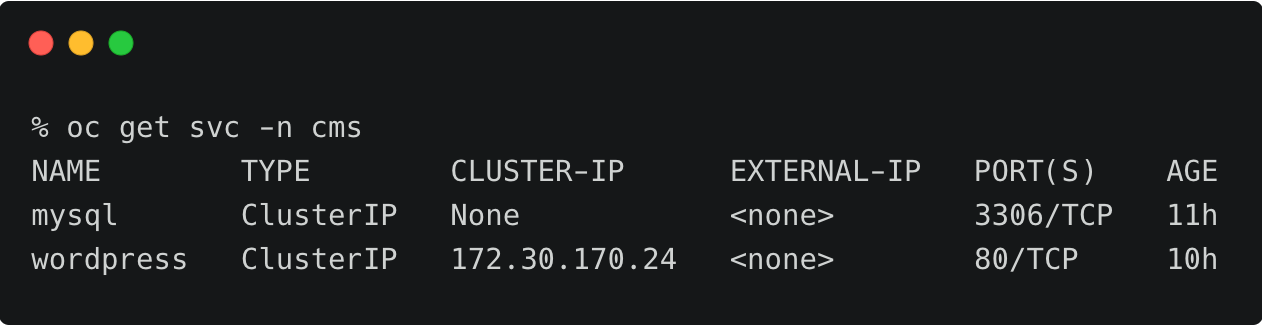

The fully configured application is accessed through the service associated with WordPress.

Preparing the Source and Target Environments

Before we can migrate the resources, we need to configure the source and destination clusters.

Follow the steps below to prepare the environments.

Creating the Object Store Credentials

We need to create object store credentials on both the source and destination clusters. The credential name must include the UUID of the destination cluster, so we retrieve that UUID first.

To complete this step, you need the access key and secret key of your AWS account. If you have configured the AWS CLI, you can find these values in ~/.aws/credentials.

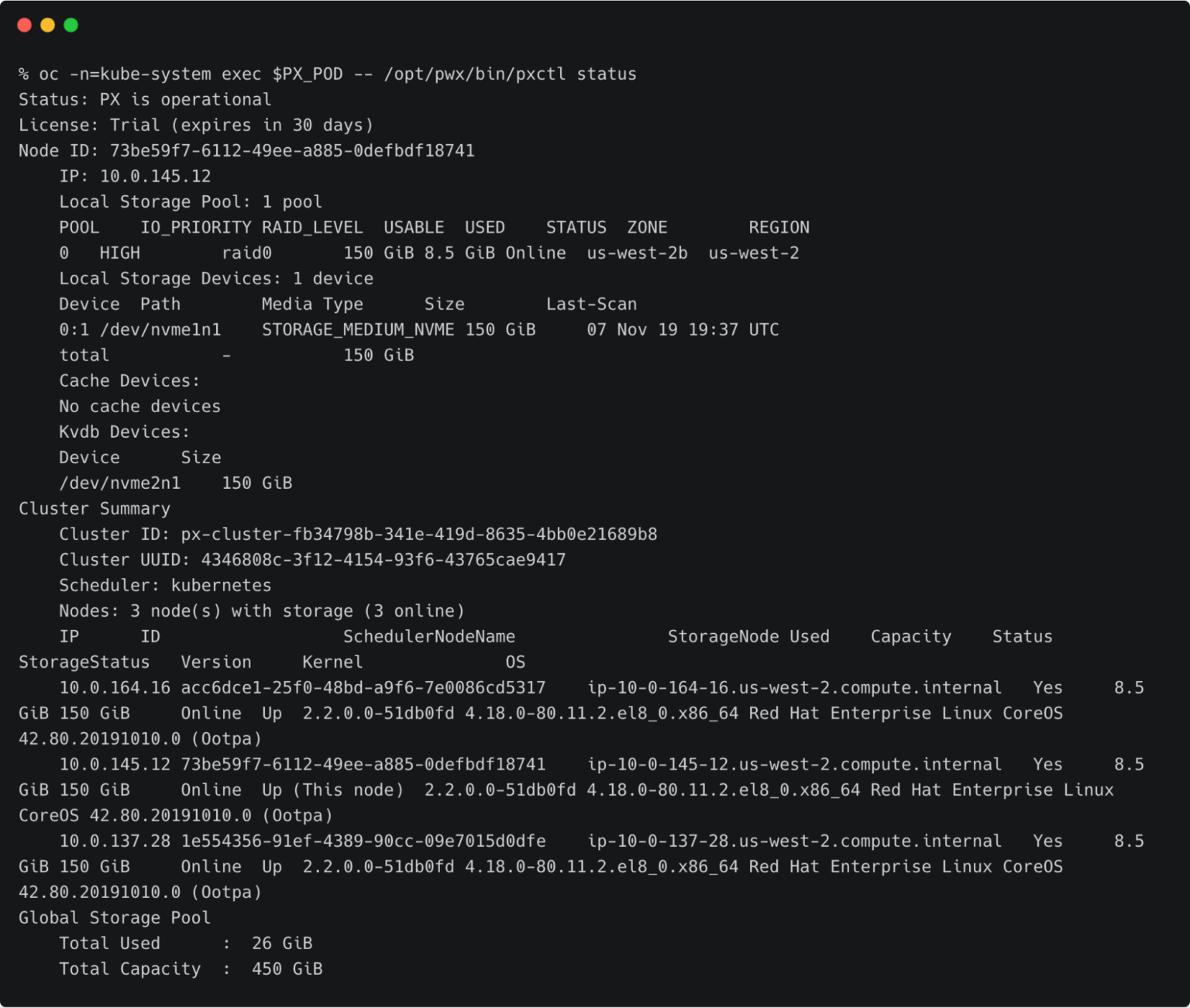

Switch to prod cluster and run the command below to copy the UUID.

PX_POD=$(oc get pods -l name=portworx -n kube-system -o jsonpath='{.items[0].metadata.name}')

oc -n=kube-system exec $PX_POD -- /opt/pwx/bin/pxctl status

Make a note of the cluster UUID and keep it in a safe place.
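Rather than copying the UUID by hand, it can be pulled out of the status output. The helper below is a minimal sketch; the function name extract_cluster_uuid is hypothetical, and it assumes that pxctl status prints a line of the form "Cluster UUID: <uuid>".

```shell
# Print the value of the "Cluster UUID:" line read from stdin.
# Assumption: `pxctl status` prints a line such as "Cluster UUID: <uuid>".
extract_cluster_uuid() {
  awk -F': *' '/Cluster UUID/ {
    sub(/[ \t\r]+$/, "", $2)   # drop trailing whitespace or a carriage return
    print $2
    exit
  }'
}

# Usage, with the prod context active and PX_POD set as shown above:
#   oc -n=kube-system exec "$PX_POD" -- /opt/pwx/bin/pxctl status | extract_cluster_uuid
```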

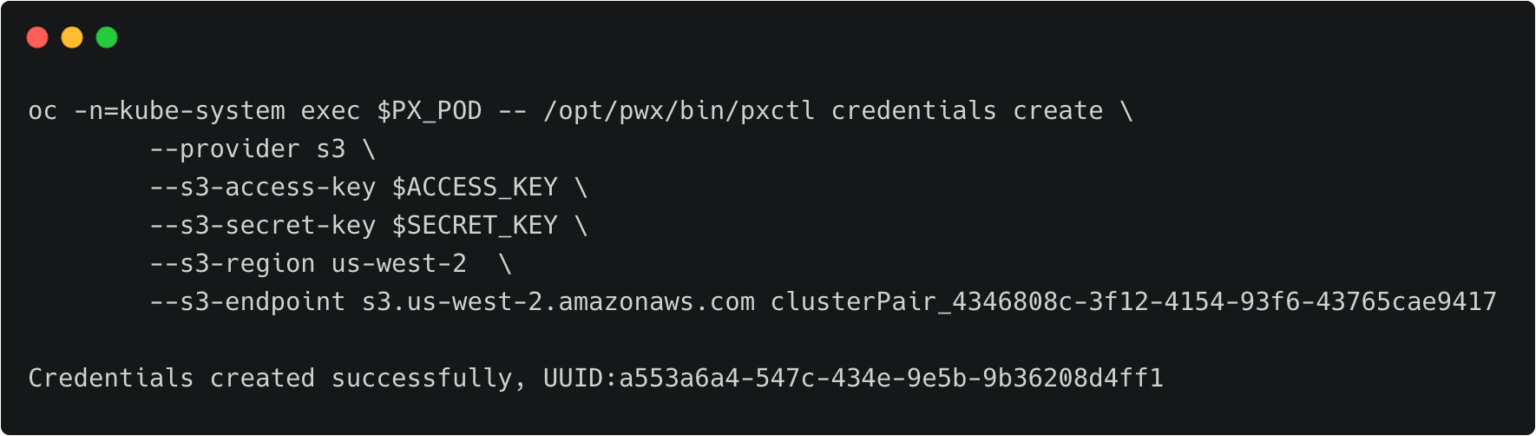

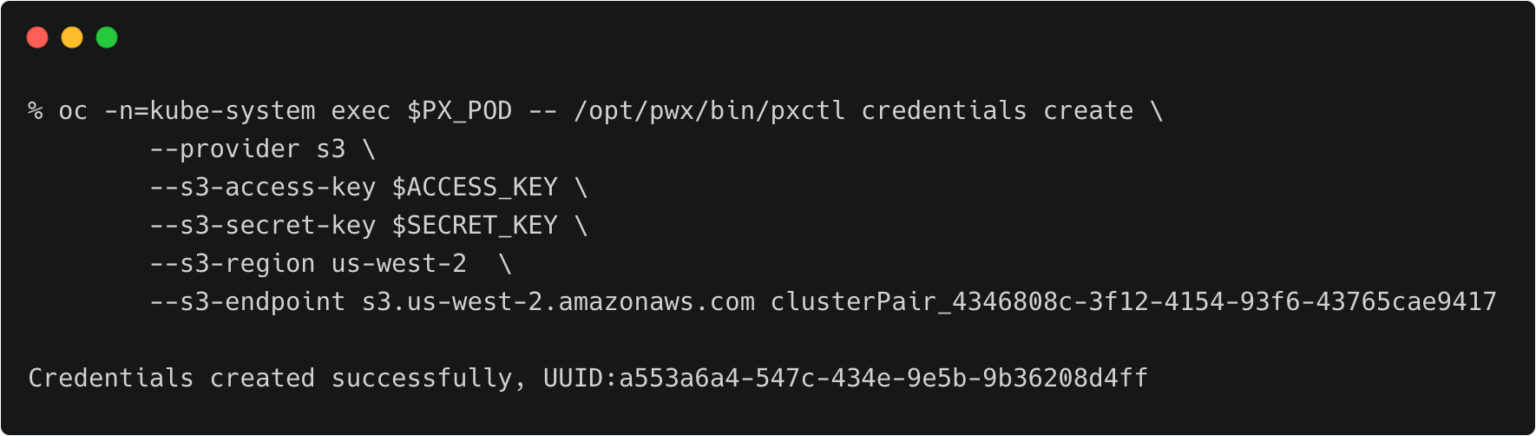

Let’s create the credentials on the production cluster while we are still using its context.

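oc -n=kube-system exec $PX_POD -- /opt/pwx/bin/pxctl credentials create \
  --provider s3 \
  --s3-access-key <aws_access_key> \
  --s3-secret-key <aws_secret_key> \
  --s3-region ap-southeast-1 \
  --s3-endpoint s3.ap-southeast-1.amazonaws.com \
  clusterPair_c02528e3-30b1-43e7-91c0-c26c111d57b3

Replace <aws_access_key> and <aws_secret_key> with the values from your AWS account. Make sure that the credential name follows the convention of ‘clusterPair_UUID’, where UUID is the UUID of the destination cluster.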
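Since the same name has to be used on both clusters, it may help to build it in one place. This is a small sketch, not part of the Portworx tooling; credential_name is a hypothetical helper that rejects input that does not look like a UUID.

```shell
# Build the credential name "clusterPair_<UUID>" from a cluster UUID.
# Returns non-zero if the argument does not have the usual 8-4-4-4-12 hex shape.
credential_name() {
  uuid="$1"
  if printf '%s\n' "$uuid" |
    grep -Eq '^[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}$'; then
    printf 'clusterPair_%s\n' "$uuid"
  else
    echo "not a UUID: $uuid" >&2
    return 1
  fi
}

# Usage:
#   CRED_NAME=$(credential_name c02528e3-30b1-43e7-91c0-c26c111d57b3)
```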

Switch to the development cluster and repeat the same steps to create the credentials for the source.

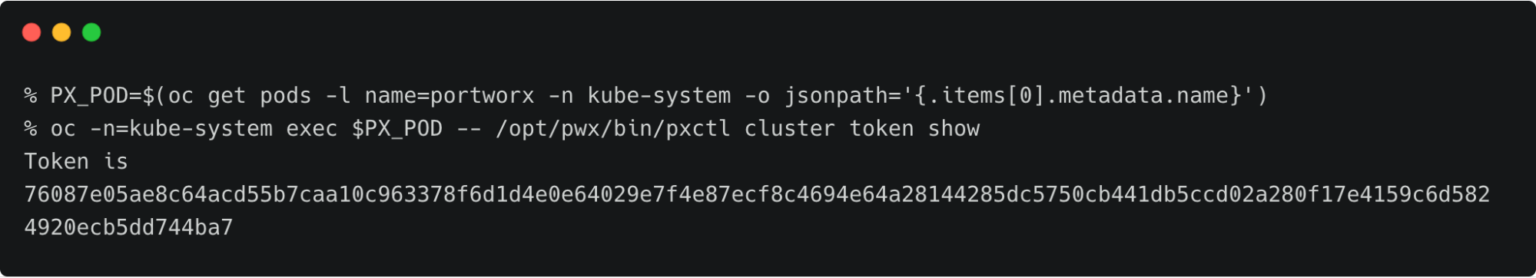

Retrieving the Target Cluster Token

The next step is to retrieve the cluster token from the production cluster, which is used to generate the YAML file with the cluster pair definition.

Let’s switch to the production cluster and run the command below to access the token.

PX_POD=$(oc get pods -l name=portworx -n kube-system -o jsonpath='{.items[0].metadata.name}')

oc -n=kube-system exec $PX_POD -- /opt/pwx/bin/pxctl cluster token show

Make a note of the cluster token and keep it in a safe place.

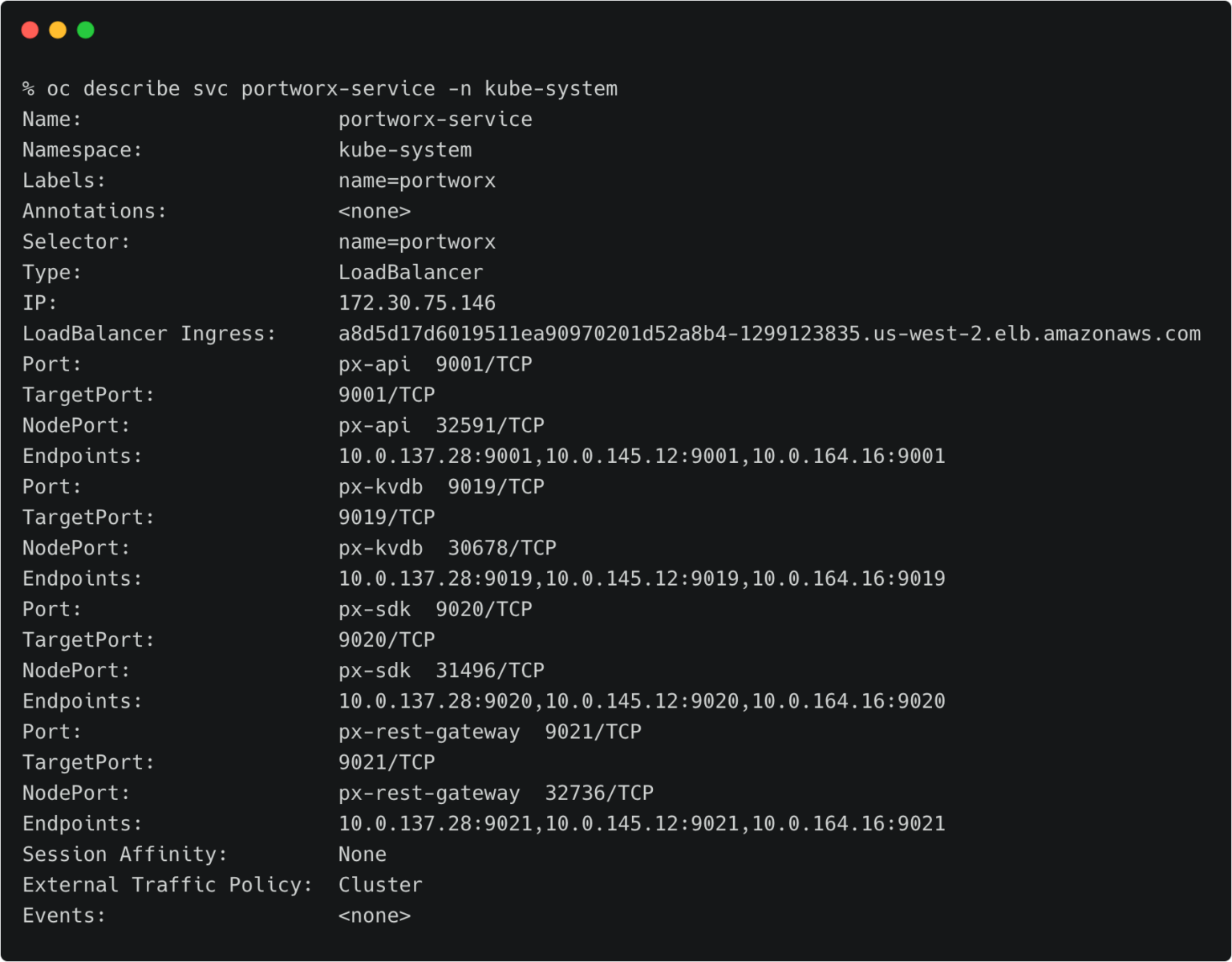

Getting the Load Balancer Endpoint for Portworx Service

We also need the DNS name of the load balancer associated with the Portworx Service on the production cluster. We can retrieve that with the command below.

oc describe svc portworx-service -n kube-system

At this point, you should have the below data ready:

- Token of the destination cluster

- CNAME of the load balancer pointing to portworx-service
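If you prefer not to read the DNS name out of the describe output, a JSONPath query can return it directly. This sketch assumes portworx-service is of type LoadBalancer and that AWS reports a hostname rather than an IP address; px_lb_hostname is a hypothetical helper name.

```shell
# Print the DNS name of the load balancer in front of portworx-service.
# Assumption: the service exposes a hostname under
# .status.loadBalancer.ingress (typical for AWS load balancers).
px_lb_hostname() {
  oc get svc portworx-service -n kube-system \
    -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'
}

# Usage, with the prod context active:
#   PX_ENDPOINT=$(px_lb_hostname)
```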

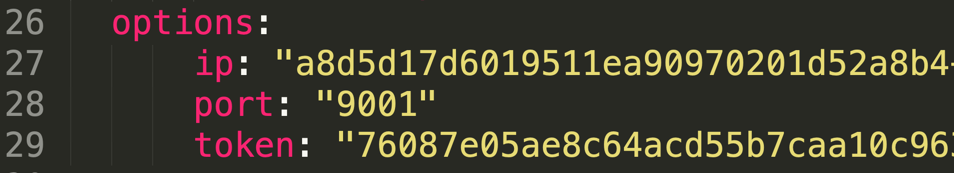

Generating the ClusterPair Specification

Let’s generate the scaffolded YAML file from the production (destination) cluster. This will be applied to the development (source) cluster later.

Make sure you are still using the prod context and run the command below.

storkctl generate clusterpair -n cms prodcluster > clusterpair.yaml

Open clusterpair.yaml and add the below details under options. They reflect the public IP address or DNS name of the load balancer in the destination cluster, port, and the token associated with the destination cluster.
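As a rough illustration of the three values described above, the helper below prints entries that could be pasted under options. It is a sketch under assumptions: the key names ip, port, and token and the default port 9001 are not stated in this article, so check them against your Portworx version. clusterpair_options is a hypothetical name.

```shell
# Print the entries to place under "options:" in clusterpair.yaml.
# Arguments: load balancer DNS name or IP, destination cluster token, port.
# Assumptions: keys are named ip, port and token; 9001 is the default port.
# The four-space indent is illustrative and must match the generated file.
clusterpair_options() {
  endpoint="$1"
  token="$2"
  port="${3:-9001}"
  cat <<EOF
    ip: "${endpoint}"
    port: "${port}"
    token: "${token}"
EOF
}

# Usage:
#   clusterpair_options "$PX_ENDPOINT" "$PX_TOKEN"
```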

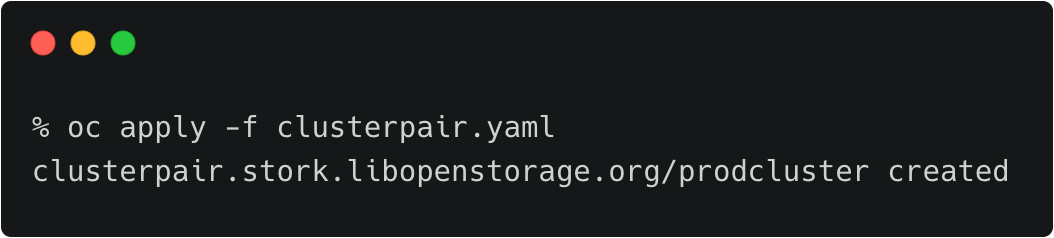

Pairing the Clusters for Kubemotion

We are now ready to pair the clusters by applying the clusterpair specification to the source cluster.

Let’s switch to the source cluster (dev environment) to pair the clusters.

oc apply -f clusterpair.yaml

oc get clusterpair -n cms

The output of oc get clusterpair confirms that the pairing has been done.

Verifying the Pairing Status

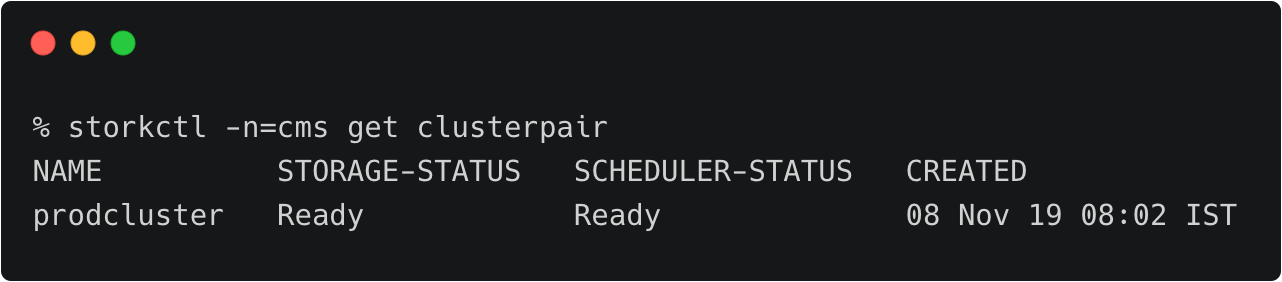

We can verify the pairing status with the storkctl CLI. Ensure that both storage-status and scheduler-status are ready with no errors.

storkctl -n=cms get clusterpair
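The same check can be scripted, for example as a gate before starting a migration. The sketch below reads the storkctl output from stdin; it assumes the columns appear in the order NAME, STORAGE-STATUS, SCHEDULER-STATUS, CREATED and that a healthy pair reports Ready in both status columns. clusterpairs_ready is a hypothetical helper.

```shell
# Succeed only if every cluster pair listed on stdin reports Ready for both
# storage and scheduler. Fails if no pair is listed at all.
# Assumption: column 2 is STORAGE-STATUS and column 3 is SCHEDULER-STATUS.
clusterpairs_ready() {
  awk 'NR > 1 { seen = 1; if ($2 != "Ready" || $3 != "Ready") bad = 1 }
       END { exit (seen && !bad) ? 0 : 1 }'
}

# Usage:
#   storkctl -n=cms get clusterpair | clusterpairs_ready && echo "pairing OK"
```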

Congratulations! You have successfully paired the source and destination clusters.

We are now ready to start the migration.

Migrating the CMS Application from Source to Destination

Make sure that you are using the dev context, and follow the below steps to start the migration of the CMS application.

Starting the Migration Job

Create a YAML file called migration.yaml with the content below.

apiVersion: stork.libopenstorage.org/v1alpha1
kind: Migration
metadata:
  name: cmsmigration
  namespace: cms
spec:
  # This should be the name of the cluster pair created above
  clusterPair: prodcluster
  # If set to false this will migrate only the Portworx volumes. No PVCs, apps, etc will be migrated
  includeResources: true
  # If set to false, the deployments and stateful set replicas will be set to 0 on the destination.
  # There will be an annotation with "stork.openstorage.org/migrationReplicas" on the destination to store the replica count from the source.
  startApplications: true
  # List of namespaces to migrate
  namespaces:
  - cms

This definition contains critical information, like the name of the clusterpair, namespaces to be included in the migration, and the type of resources to be migrated.

Submit the YAML file to the dev cluster to initiate the migration job.

oc apply -f migration.yaml

migration.stork.libopenstorage.org/cmsmigration created

Tracking and Monitoring the Migration Job

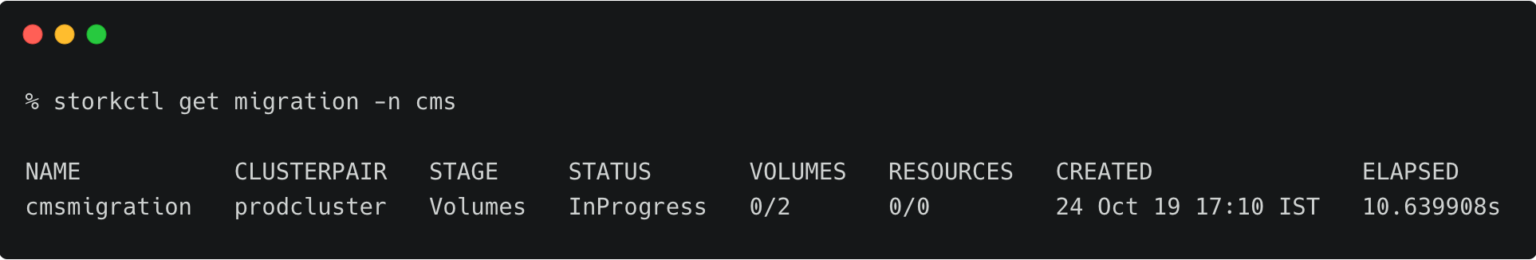

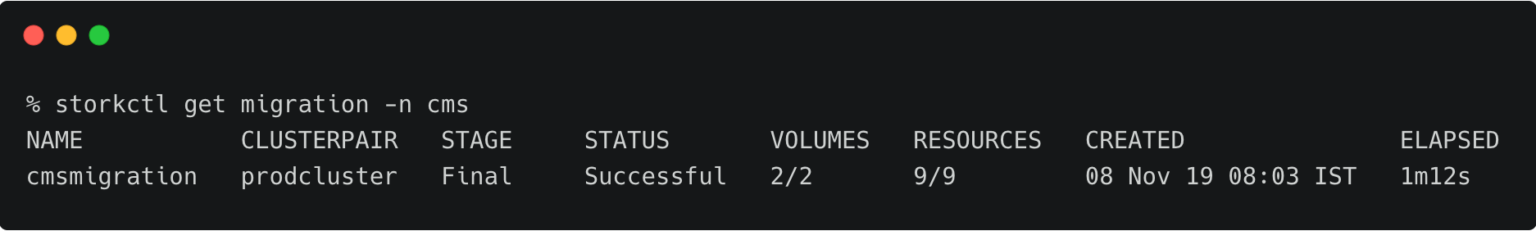

We can monitor the migration through storkctl.

storkctl get migration -n cms

Once the migration is done, storkctl reports the final number of volumes and resources migrated to the destination cluster.
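For unattended runs, the wait can be scripted by polling the Migration object. This is a sketch only: wait_for_migration is a hypothetical helper, the field paths .status.stage and .status.status are inferred from the Stage and Status lines that oc describe prints for a Migration, and the 10-second interval and 60-attempt default are arbitrary.

```shell
# Poll a Migration until its stage is Final, then succeed only if the overall
# status is Successful.
# Arguments: migration name, namespace, optional maximum number of attempts.
# Assumption: .status.stage and .status.status are the JSON field names.
wait_for_migration() {
  name="$1"
  namespace="$2"
  attempts="${3:-60}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    stage=$(oc get migration "$name" -n "$namespace" -o jsonpath='{.status.stage}')
    if [ "$stage" = "Final" ]; then
      status=$(oc get migration "$name" -n "$namespace" -o jsonpath='{.status.status}')
      if [ "$status" = "Successful" ]; then return 0; else return 1; fi
    fi
    i=$((i + 1))
    sleep "${POLL_SECONDS:-10}"
  done
  echo "timed out waiting for migration $name" >&2
  return 1
}

# Usage, with the dev context active:
#   wait_for_migration cmsmigration cms && echo "migration finished"
```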

To get detailed information on the migration, run the command below.

oc describe migration cmsmigration -n=cms

Name:         cmsmigration
Namespace:    cms
Labels:
Annotations:  kubectl.kubernetes.io/last-applied-configuration:
                {"apiVersion":"stork.libopenstorage.org/v1alpha1","kind":"Migration","metadata":{"annotations":{},"name":"cmsmigration","namespace":"cms"}...
API Version:  stork.libopenstorage.org/v1alpha1
Kind:         Migration
Metadata:
  Creation Timestamp:  2019-11-08T02:33:44Z
  Generation:          9
  Resource Version:    346702
  Self Link:           /apis/stork.libopenstorage.org/v1alpha1/namespaces/cms/migrations/cmsmigration
  UID:                 2eeb5d56-01d0-11ea-a393-02fec625b80a
Spec:
  Admin Cluster Pair:
  Cluster Pair:        prodcluster
  Include Resources:   true
  Include Volumes:     true
  Namespaces:
    cms
  Post Exec Rule:
  Pre Exec Rule:
  Selectors:
  Start Applications:  true
Status:
  Finish Timestamp:  2019-11-08T02:34:56Z
  Resources:
    Group:      core
    Kind:       PersistentVolume
    Name:       pvc-ac60362f-0170-11ea-8418-06c5879a6a7a
    Namespace:
    Reason:     Resource migrated successfully
    Status:     Successful
    Version:    v1
    Group:      core
    Kind:       PersistentVolume
    Name:       pvc-c5dd1955-0170-11ea-a393-02fec625b80a
    Namespace:
    Reason:     Resource migrated successfully
    Status:     Successful
    Version:    v1
    Group:      core
    Kind:       Service
    Name:       mysql
    Namespace:  cms
    Reason:     Resource migrated successfully
    Status:     Successful
    Version:    v1
    Group:      core
    Kind:       Service
    Name:       wordpress
    Namespace:  cms
    Reason:     Resource migrated successfully
    Status:     Successful
    Version:    v1
    Group:      core
    Kind:       PersistentVolumeClaim
    Name:       px-mysql-pvc
    Namespace:  cms
    Reason:     Resource migrated successfully
    Status:     Successful
    Version:    v1
    Group:      core
    Kind:       PersistentVolumeClaim
    Name:       px-wp-pvc
    Namespace:  cms
    Reason:     Resource migrated successfully
    Status:     Successful
    Version:    v1
    Group:      apps
    Kind:       Deployment
    Name:       mysql
    Namespace:  cms
    Reason:     Resource migrated successfully
    Status:     Successful
    Version:    v1
    Group:      apps
    Kind:       Deployment
    Name:       wordpress
    Namespace:  cms
    Reason:     Resource migrated successfully
    Status:     Successful
    Version:    v1
    Group:      route.openshift.io
    Kind:       Route
    Name:       wp
    Namespace:  cms
    Reason:     Resource migrated successfully
    Status:     Successful
    Version:    v1
  Stage:   Final
  Status:  Successful
  Volumes:
    Namespace:                cms
    Persistent Volume Claim:  px-mysql-pvc
    Reason:                   Migration successful for volume
    Status:                   Successful
    Volume:                   pvc-ac60362f-0170-11ea-8418-06c5879a6a7a
    Namespace:                cms
    Persistent Volume Claim:  px-wp-pvc
    Reason:                   Migration successful for volume
    Status:                   Successful
    Volume:                   pvc-c5dd1955-0170-11ea-a393-02fec625b80a
Events:
  Type    Reason      Age                From   Message
  ----    ------      ----               ----   -------
  Normal  Successful  82s                stork  Volume pvc-ac60362f-0170-11ea-8418-06c5879a6a7a migrated successfully
  Normal  Successful  82s                stork  Volume pvc-c5dd1955-0170-11ea-a393-02fec625b80a migrated successfully
  Normal  Successful  78s                stork  /v1, Kind=PersistentVolume /pvc-ac60362f-0170-11ea-8418-06c5879a6a7a: Resource migrated successfully
  Normal  Successful  78s                stork  /v1, Kind=PersistentVolume /pvc-c5dd1955-0170-11ea-a393-02fec625b80a: Resource migrated successfully
  Normal  Successful  78s                stork  /v1, Kind=Service cms/mysql: Resource migrated successfully
  Normal  Successful  78s                stork  /v1, Kind=Service cms/wordpress: Resource migrated successfully
  Normal  Successful  78s                stork  /v1, Kind=PersistentVolumeClaim cms/px-mysql-pvc: Resource migrated successfully
  Normal  Successful  78s                stork  /v1, Kind=PersistentVolumeClaim cms/px-wp-pvc: Resource migrated successfully
  Normal  Successful  78s                stork  apps/v1, Kind=Deployment cms/mysql: Resource migrated successfully
  Normal  Successful  77s (x2 over 78s)  stork  (combined from similar events): route.openshift.io/v1, Kind=Route cms/wp: Resource migrated successfully
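With many resources, scanning this output by eye gets tedious. The sketch below lists only the resources whose status is not Successful. It assumes the per-resource JSON fields are named kind, name, and status, which is inferred from the Kind, Name, and Status labels in the describe output; failed_migration_resources is a hypothetical helper.

```shell
# Print one line per migrated resource whose status is not "Successful".
# Arguments: migration name, namespace.
# Assumption: resources live under .status.resources with fields
# kind, name and status.
failed_migration_resources() {
  oc get migration "$1" -n "$2" \
    -o jsonpath='{range .status.resources[*]}{.kind}{"\t"}{.name}{"\t"}{.status}{"\n"}{end}' |
    awk -F'\t' 'NF == 3 && $3 != "Successful" { print $1 "/" $2 ": " $3 }'
}

# Usage, with the dev context active (prints nothing when all succeeded):
#   failed_migration_resources cmsmigration cms
```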

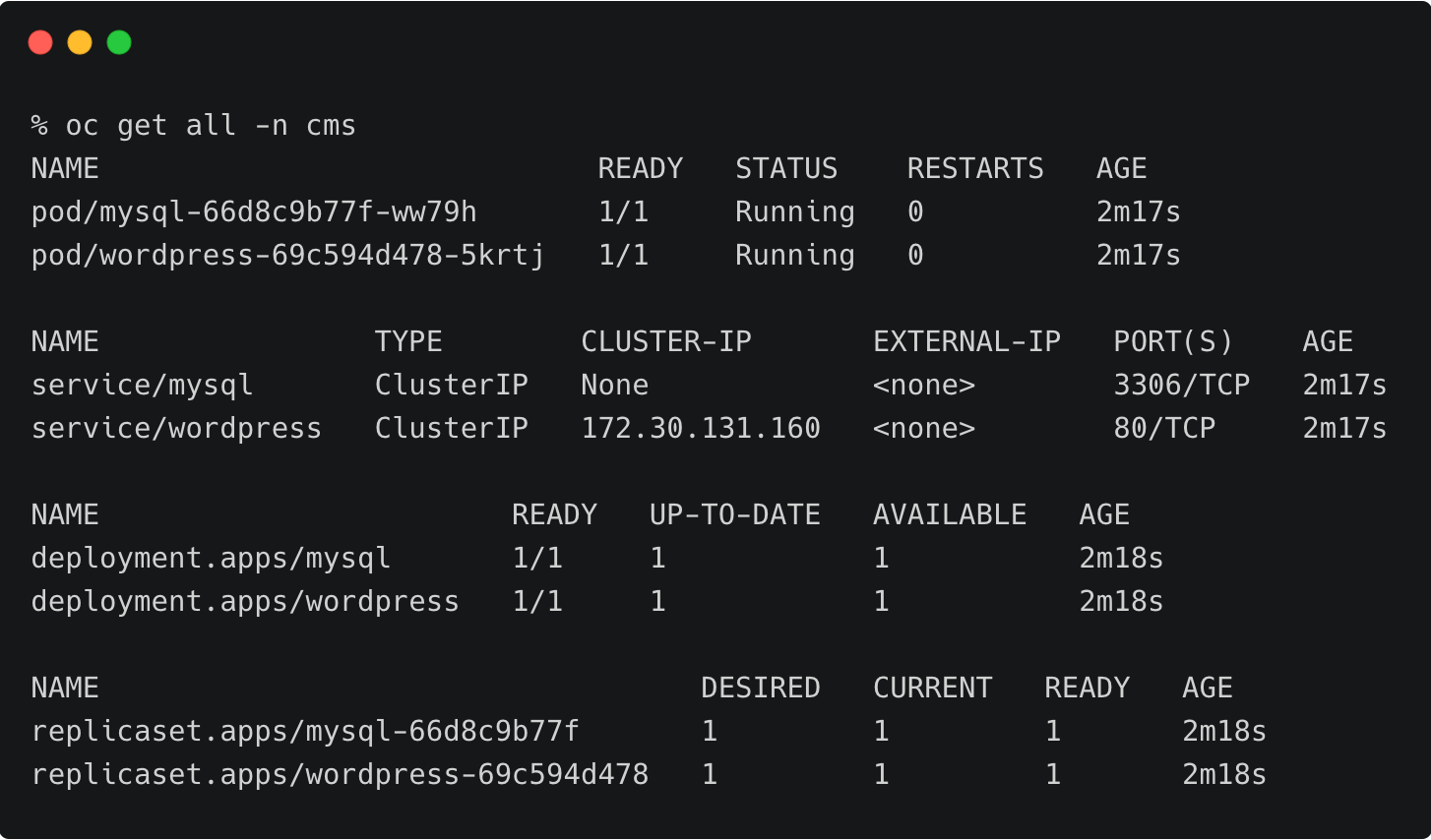

Verifying the Migration in the Production Environment

Now we can switch the context to prod and check all the resources created within the cms namespace.

oc get all -n cms

You can also access the application by enabling port-forwarding for the WordPress pod.

Summary

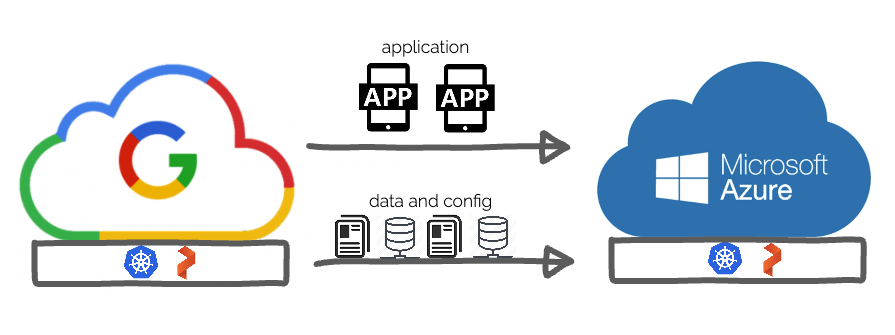

Kubemotion extends the power of portability to stateful workloads. It can be used to seamlessly migrate volumes from on-premises to public cloud (hybrid) environments and cross-cloud platforms.


Janakiram MSV

Contributor | Certified Kubernetes Administrator (CKA) and Developer (CKAD)