A detailed look at adding integrity tests to CI/CD pipelines

One of the things I like best about Kubernetes is declarative GitOps. Being able to use infrastructure-as-code to build to a desired state has simplified much of what I used to get paid for as a consultant. But sometimes it can be advantageous to add some imperative logic into GitOps. We sometimes need to add logic to have one infrastructure element start before another (perhaps a database and front-end web server), or perhaps we need to do some extra work to a database before an image upgrade (such as a schema change). These scenarios can require a little more logic than a simple deployment that has a running set of manifests mirror a git repository.

Imagine that you are running a company that does chargeback and showback calculations for customers. When code updates are deployed, one of the most accurate predictors of success is running financial reports on the database before and after the update. This got me thinking: what if we could run a set of tests against a database before and after an upgrade?

This blog is really the next evolution of a talk that Stephen Atwell and I gave at KubeCon NA. Our demo application tracks users and orders for Portworx BBQ. During database upgrades, we noticed that our collections could be missing documents, so with my Python programmer kid’s number on speed dial, I set about solving the problem.

In this blog, I’m going to show you one such use case.

Portworx BBQ

Here at Portworx we have an application we use for demos called Portworx BBQ. Portworx BBQ may be for a fake BBQ chain, but the application is real. It has feature releases, CI integration, and GitOps deployment examples. This means that we run upgrades on this application. Sometimes those changes can be breaking, but what better way to catch breaking changes than to check the number of orders in the database before and after an upgrade?

Keep reading below the video for an explanation with code samples.

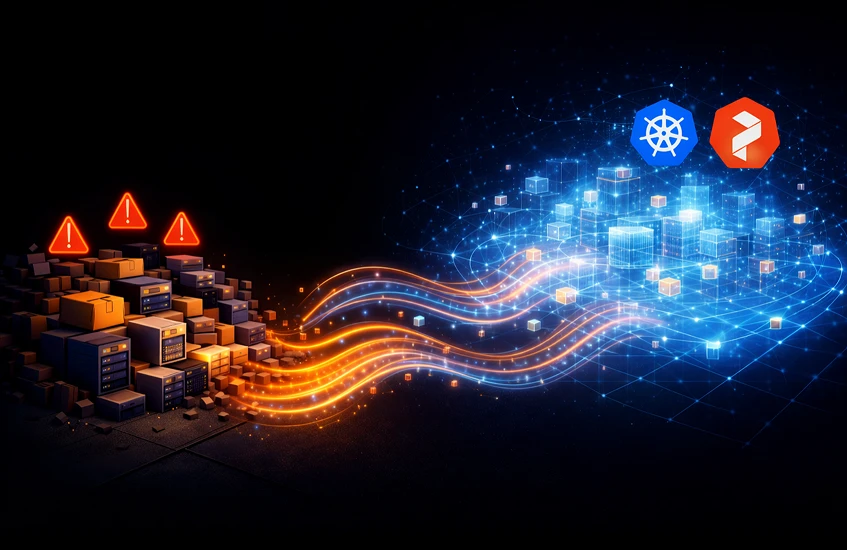

Here is a diagram that shows an overview of the pipeline process we saw above:

There is a bit to unpack here. We have an ArgoCD application on the main branch that deploys Portworx BBQ:

apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: prod-pxbbq
spec:
  destination:
    name: 'demo1'
    namespace: 'pxbbq'
  source:
    path: manifests/pxbbq/overlays/prod
    repoURL: 'https://github.com/ccrow42/homelab'
    targetRevision: HEAD
  project: pxbbq

We have also deployed a Portworx DR policy to copy the MongoDB PVC on a 15-minute interval to a stage cluster where we will run our tests. We are using DR to ensure we have a recent copy of our data ready to go, so we don’t have to wait for the data to copy.

apiVersion: stork.libopenstorage.org/v1alpha1
kind: MigrationSchedule
metadata:
  name: migrationschedule
  namespace: portworx
spec:
  template:
    spec:
      clusterPair: stage
      includeResources: false
      startApplications: false
      includeVolumes: true
      namespaces:
      - pxbbq
  schedulePolicyName: default-interval-policy
  suspend: false
  autoSuspend: true

Note that we are only moving the persistent volume to stage. We want to manage our own manifests. With our production app explained, let’s move to the ArgoCD app that runs staging.

apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: stage-pxbbq
spec:
  destination:
    name: 'demo2'
    namespace: 'pxbbq'
  sources:
  - repoURL: 'https://github.com/ccrow42/homelab'
    path: manifests/pxbbq/overlays/stage
    targetRevision: pxbbq-stage
  - repoURL: 'https://github.com/ccrow42/homelab'
    path: manifests/pxbbq/bbq-taster
    targetRevision: pxbbq-stage
  project: pxbbq

The Portworx BBQ application for our staging environment tracks a pxbbq-stage branch, which we will use to hold all of our code changes to the application. It is important to note that I am using kustomize to patch any differences between the prod and stage versions of this app. This also means that a change to the .spec.image would affect both versions were it not for our stage application tracking the pxbbq-stage branch.

The testing is controlled by 2 jobs that use ArgoCD hooks.

We will first run our ‘pre-taste’ job before the sync:

apiVersion: batch/v1
kind: Job
metadata:
  name: pre-taste
  namespace: pxbbq
  annotations:
    argocd.argoproj.io/hook: PreSync
    argocd.argoproj.io/hook-delete-policy: HookSucceeded
spec: ...

The above is just a snippet to show how to configure a PreSync hook annotation, which ensures that we run this job before the application sync. The pre-taste job does 2 things:

- It scales up MongoDB and Portworx BBQ on the current version of the code

- It runs a report on current orders and registrations and uploads the results to an object store
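The report step above can be sketched in Python. This is only an illustration, not the actual bbq-taster code: the function name `build_report`, the collection names `orders` and `registrations`, and the report layout are all assumptions. The only driver call it relies on is `count_documents`, which PyMongo collections provide; a small stand-in class is used here so the sketch runs without a database.

```python
from datetime import datetime, timezone
import json


def build_report(db, collection_names):
    """Count the documents in each named collection.

    `db` is anything that supports db[name].count_documents(filter),
    for example a PyMongo Database object (assumption).
    """
    counts = {name: db[name].count_documents({}) for name in collection_names}
    return {
        "generated_at": datetime.now(timezone.utc).isoformat(),
        "counts": counts,
    }


class FakeCollection:
    """Stand-in for a MongoDB collection, used only for this demo."""

    def __init__(self, docs):
        self._docs = list(docs)

    def count_documents(self, query):
        # The stand-in ignores the filter and counts every document.
        return len(self._docs)


if __name__ == "__main__":
    # Hypothetical data; real runs would pass a live database handle.
    fake_db = {
        "orders": FakeCollection([{"id": 1}, {"id": 2}]),
        "registrations": FakeCollection([{"user": "a"}]),
    }
    report = build_report(fake_db, ["orders", "registrations"])
    print(json.dumps(report, indent=2))
```

The resulting JSON document is what a job like this could upload to the object store, so that a later run has something to compare against.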

Next, we will apply changes from the pxbbq-stage branch of our repo, which contains database upgrades or other code changes.

We will now run our ‘post-taste’ job:

apiVersion: batch/v1
kind: Job
metadata:
  name: post-taste
  namespace: pxbbq
  annotations:
    argocd.argoproj.io/hook: PostSync
    argocd.argoproj.io/hook-delete-policy: HookSucceeded
spec: ...

This job performs 3 tasks:

- It tests the application again (this time post upgrade)

- It scales down the deployments

- It runs a webhook to compare results between the two runs, which returns a 200 if they are the same and a 404 if they differ. It also uploads the results to an object store for further investigation.
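The comparison in the last step might look something like the following Python sketch. It is a guess at the logic rather than the webhook's real implementation: the function name `compare_reports` and the report shape (a dictionary with a `counts` mapping of collection name to document count) are assumptions. The 200 and 404 status codes follow the behavior described above.

```python
def compare_reports(pre, post):
    """Compare two reports and return (status_code, differences).

    Each report is assumed to look like {"counts": {"orders": 10, ...}}.
    Returns 200 when every count matches and 404 otherwise.
    """
    pre_counts = pre.get("counts", {})
    post_counts = post.get("counts", {})

    differences = {}
    # Check every collection seen in either run, so a collection that
    # disappears (or appears) during the upgrade is also reported.
    for name in sorted(set(pre_counts) | set(post_counts)):
        before = pre_counts.get(name)
        after = post_counts.get(name)
        if before != after:
            differences[name] = {"before": before, "after": after}

    status = 200 if not differences else 404
    return status, differences


if __name__ == "__main__":
    # Hypothetical numbers for illustration only.
    pre = {"counts": {"orders": 10, "registrations": 4}}
    post = {"counts": {"orders": 9, "registrations": 4}}
    print(compare_reports(pre, post))
```

Returning the differences alongside the status code keeps the details available for the upload to the object store.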

At this point, we can evaluate our code changes and merge the pxbbq-stage branch to main. Any application changes we make will be applied to the production instance of the application.

In the above workflow, any changes to our application will be tested against a recent copy of the data, which is copied on a 15-minute timer. Additionally, our cluster does not need to be a like-for-like configuration, as we are managing scaling manually with our pre and post hook jobs. When we are not actively testing the application, the deployments will be scaled down.
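As a rough idea of how a hook job could handle the manual scaling, here is a small Python sketch that wraps `kubectl scale`. The deployment names `mongo` and `pxbbq-web` are hypothetical, as are the helper names; the real jobs may do this differently (for example, with a shell script or a Kubernetes client library).

```python
import subprocess


def scale_command(deployment, replicas, namespace="pxbbq"):
    """Build the kubectl argument list that scales one deployment."""
    return [
        "kubectl", "scale", f"deployment/{deployment}",
        f"--replicas={replicas}", "--namespace", namespace,
    ]


def scale_all(deployments, replicas, namespace="pxbbq", run=subprocess.run):
    """Scale each deployment in turn; `run` is injectable for dry runs."""
    for name in deployments:
        run(scale_command(name, replicas, namespace), check=True)


if __name__ == "__main__":
    # Dry run: print the commands instead of executing them.
    # "mongo" and "pxbbq-web" are placeholder deployment names.
    scale_all(
        ["mongo", "pxbbq-web"],
        replicas=0,
        run=lambda cmd, check: print(" ".join(cmd)),
    )
```

A pre-sync job would call this with `replicas=1` (or more) before running its report, and a post-sync job would call it with `replicas=0` once testing is done.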

Conclusion

In this post, I have shown a way to add imperative logic to a deployment pipeline using ArgoCD PreSync and PostSync hook annotations. Adding logic that tests our application against a recent copy of real data helps us catch a breaking change before it reaches production.

Stay tuned for more GitOps content!


Chris Crow

Chris is a technical marketing engineer and bash enthusiast.