
As the demand for scalable and flexible infrastructure continues to grow, more organizations are turning to Kubernetes for container orchestration. While many companies deploy Kubernetes on cloud platforms such as AWS or Google Cloud, there is a growing trend toward deploying Kubernetes on bare metal infrastructure. With Kubernetes and containers, the layer of virtualization has shifted from the hardware to the application, which gives teams an efficient way to meet the scale and elasticity their platforms demand.

What is bare metal Kubernetes and what makes it different?

Bare metal Kubernetes means deploying Kubernetes clusters and their containers directly on physical servers instead of inside traditional virtual machines managed by a hypervisor layer. Containers running in a bare metal Kubernetes cluster therefore have direct access to the underlying server hardware. That is not the case in clusters composed of virtual machines, where the VM layer abstracts the containers from the underlying servers. Enterprises across the telecom and financial services industries deploy Kubernetes on bare metal for edge computing, to avoid the overhead of running a VM layer on small form-factor hardware, and in the data center, to reduce costs, improve application workload performance, and avoid the cost of VM licensing.

In fact, there are several reasons why organizations are choosing to deploy Kubernetes on bare metal instead of using cloud providers. Let’s explore the 5 key reasons for doing so:

- Enhanced Control: By deploying Kubernetes on bare metal, organizations have complete control over their infrastructure. They can customize their hardware to meet their specific needs, ensuring better performance, increased reliability, and faster response times for their customers.

- Cost Savings: Deploying Kubernetes on bare metal can result in significant cost savings. Bare metal infrastructure typically has lower hardware and operating costs than a hybrid cloud platform, which requires additional resources for virtualization and cloud management services. These costs add up quickly for larger deployments in the cloud. By doing away with the commercial hypervisor layer, you can also avoid licensing costs and potentially other expenses such as support contracts. Additionally, bare metal infrastructure provides greater flexibility to scale as needed without incurring those additional costs.

- Improved Cluster Security and Control: Security has become a top concern among Kubernetes users. With bare metal Kubernetes infrastructure, organizations have more control over their security measures because admins have full control over the servers that host the nodes. They can implement custom security protocols and ensure that their data is protected. In contrast, organizations that run Kubernetes on VMs managed by, say, a cloud provider get none of these controls over the host servers; they can only control the VMs.

- Superior Performance: Bare metal infrastructure offers superior compute performance compared to virtualized environments due to the absence of additional software overhead. In addition, NVMe-based infrastructure is becoming popular for Kubernetes deployments, and running Kubernetes on NVMe drives yields far better I/O performance and latency than cloud environments. Together, these two factors enable organizations to optimize their hardware and achieve faster application response times for their customers.

- Flexibility and Scalability: Deploying Kubernetes on bare metal offers greater flexibility and scalability. Organizations can easily add new nodes to the cluster as needed, adjust their infrastructure quickly in response to changing requirements, and maintain their competitive edge.

Achieve increased developer productivity by using Portworx for bare metal Kubernetes

Kubernetes has become the de facto standard for container orchestration, and it provides a powerful set of tools for managing containerized applications. However, it is most often deployed on virtual machines, and running it on bare metal brings its own set of challenges. That’s where Portworx comes in.

Portworx provides a distributed storage platform that enables persistent storage for containerized applications on bare metal Kubernetes clusters. It is designed to provide enterprise-grade data management capabilities for Kubernetes, including high availability, disaster recovery, data protection, and data security. One of the key benefits of using Portworx for deploying Kubernetes on bare metal is that it simplifies the process of managing storage for containerized applications. With Portworx, you don’t need to worry about provisioning storage volumes or managing storage classes manually. Its container-native storage platform automates these processes, making it easy to deploy and manage stateful applications on Kubernetes.
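To make the idea of automated provisioning concrete, here is a minimal sketch in Python that builds a Kubernetes StorageClass and a PersistentVolumeClaim as plain dictionaries and prints them as JSON. The object names (`px-repl3`, `postgres-data`) and the requested size are hypothetical, and the provisioner string `pxd.portworx.com` and the `repl` parameter are assumptions that should be checked against the Portworx documentation for your version. Once a class like this exists, Kubernetes provisions a volume for each claim that references it, so nobody has to create volumes by hand.

```python
import json


def portworx_storage_class(name: str, replicas: int) -> dict:
    """Build a StorageClass manifest as a plain dict.

    The provisioner name and the "repl" parameter are assumptions;
    verify both against the Portworx docs before use.
    """
    if replicas not in (1, 2, 3):
        raise ValueError("replicas must be 1, 2, or 3")
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "pxd.portworx.com",  # assumed CSI provisioner name
        "parameters": {"repl": str(replicas)},  # assumed replication parameter
        "allowVolumeExpansion": True,
    }


def claim_for(storage_class: str, name: str, size: str) -> dict:
    """Build a PersistentVolumeClaim that requests storage from the class."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "storageClassName": storage_class,
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    # Hypothetical names and size, chosen only for illustration.
    sc = portworx_storage_class("px-repl3", replicas=3)
    pvc = claim_for("px-repl3", "postgres-data", "50Gi")
    print(json.dumps(sc, indent=2))
    print(json.dumps(pvc, indent=2))
```

Each printed manifest can be saved to its own file and applied with `kubectl apply -f`, which accepts JSON as well as YAML.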

Another important capability of Portworx is its multi-cloud and hybrid cloud support. This means you can deploy Kubernetes clusters across different infrastructure environments, including on-premises data centers, public clouds, and edge sites, and still get consistent storage management capabilities across all of them. Portworx is agnostic to how customers deploy and manage Kubernetes clusters, including on bare metal. A number of customers across the financial, telecom, and retail industries use Portworx for bare metal Kubernetes.

Portworx also provides several benefits when deploying air-gapped Kubernetes clusters on bare metal. First and foremost, it enables organizations to run their containerized applications with confidence and ease in a closed, secure environment without access to public clouds or other external resources. One of the key benefits of Portworx in this scenario is its ability to provide high availability and reliability through its distributed storage architecture. This architecture allows for data replication across multiple nodes, ensuring seamless failover in case of node failures and preventing data loss or downtime.

Additionally, Portworx provides scalability through its ability to scale storage and compute resources independently. This means that as your organization grows and expands, you can easily add more storage capacity without having to add more compute resources, or vice versa. This helps to ensure that your Kubernetes cluster remains optimized and performing at peak capacity.

Lastly, Portworx simplifies storage operations and management by providing a single pane of glass view of your entire storage environment, regardless of where it is deployed. This enables you to manage and monitor your storage resources centrally, reducing complexity and accelerating time to deployment.
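The replication behavior described above can be illustrated with a small, purely hypothetical model. It is not Portworx's placement algorithm. The function names and the round-robin placement rule are assumptions made for this sketch, which only shows why a volume with several replicas on distinct nodes stays available until every node holding a replica has failed.

```python
def place_replicas(volume_id: int, nodes: list[str], repl: int) -> list[str]:
    """Pick `repl` distinct nodes for a volume, round-robin from an offset.

    Hypothetical placement rule, used only to illustrate replication.
    """
    if repl < 1 or repl > len(nodes):
        raise ValueError("repl must be between 1 and the number of nodes")
    start = volume_id % len(nodes)
    return [nodes[(start + i) % len(nodes)] for i in range(repl)]


def survives(replica_nodes: list[str], failed: set[str]) -> bool:
    """A volume stays available while at least one replica node is healthy."""
    return any(node not in failed for node in replica_nodes)


if __name__ == "__main__":
    nodes = ["node-a", "node-b", "node-c", "node-d"]
    replicas = place_replicas(volume_id=0, nodes=nodes, repl=3)
    print(replicas)
    # Two of the three replica nodes fail: the volume is still readable.
    print(survives(replicas, {"node-a", "node-b"}))
    # All three replica nodes fail: the volume is unavailable.
    print(survives(replicas, {"node-a", "node-b", "node-c"}))
```

In this model, a replication factor of `repl` tolerates up to `repl - 1` simultaneous failures among the nodes that hold a volume's replicas.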

Customer stories on successful bare metal Kubernetes deployments using Portworx

The platform engineering team at one of the nation’s largest media and entertainment companies is responsible for building and deploying a platform that ingests petabytes of data into low-latency databases such as Elasticsearch and MongoDB. Gathering subscriber analytics is crucial for them to understand users’ usage patterns and resolve problems more quickly. They also carry out data analytics and aggregation to recognize data patterns and enhance subscriber experiences. By running an efficient, low-overhead storage layer with the Portworx Enterprise platform and backing their cloud-native Kubernetes storage with high-bandwidth, high-performance NVMe devices, they ensured that their applications were rarely, if ever, starved at the storage layer in terms of IOPS and throughput. This, combined with the ultra-low latencies provided by NVMe devices, ensured that volume provisioning and I/O for the applications running on Kubernetes could operate at high speeds and at massive scale. With Portworx, this customer achieved a 1 million+ IOPS increase in underlying NVMe performance with a near-zero failure rate.

One of the largest American car finance companies wanted to transition away from OpenShift on VMware to OpenShift on bare metal to massively optimize application development and production costs. This transition was only possible with Portworx as their standard and Portworx Disaster Recovery to migrate vast, data-rich applications to the new OpenShift Container Platform. Portworx, along with Pure FlashArray, enables this customer to scale capacity and performance without downtime by leveraging FlashArray’s best-in-class data reduction and Portworx’s thin provisioning and volume placement. Their rollout has begun with their Quay registry, Redis apps, and, soon, their Postgres-based data warehouse. With Portworx, they achieved $1.5 million in savings over VMware and 60% storage savings with data reduction.

To summarize, larger bare metal servers have enough compute available to run a higher density of workloads per server. At that density, storage I/O can become a bottleneck, so the underlying storage has to scale as well. With Portworx, organizations can manage I/O at the application level: you can create storage pools and expose them to the appropriate applications, and those applications can then be tuned to extract the most out of your underlying bare metal infrastructure.


Surbhi Paul

Group Product Marketing Manager

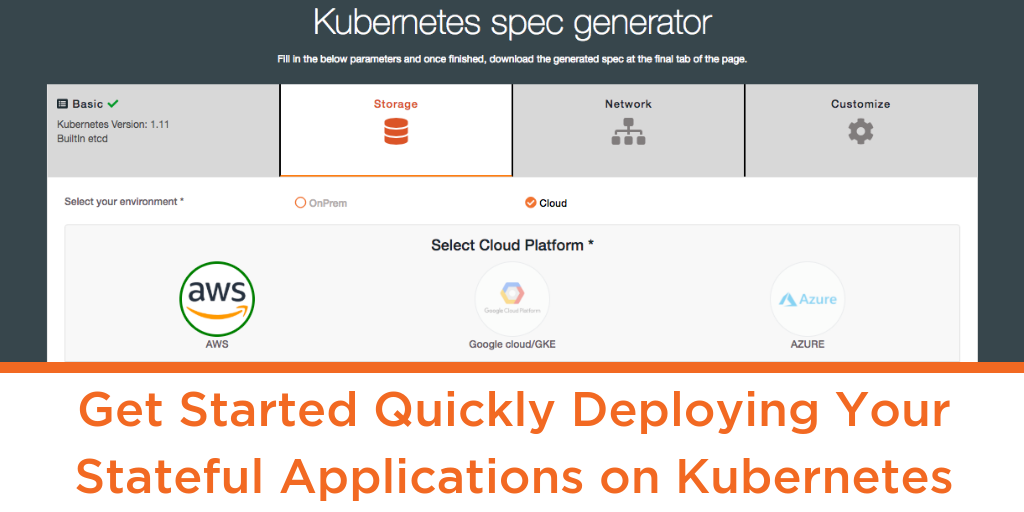

Get Started Quickly Deploying Your Stateful Applications on Kubernetes