This is part one in a series about OpenShift Virtualization with Portworx. The series will cover installation and usage, backup and restore, and data protection for virtual machines on OpenShift.

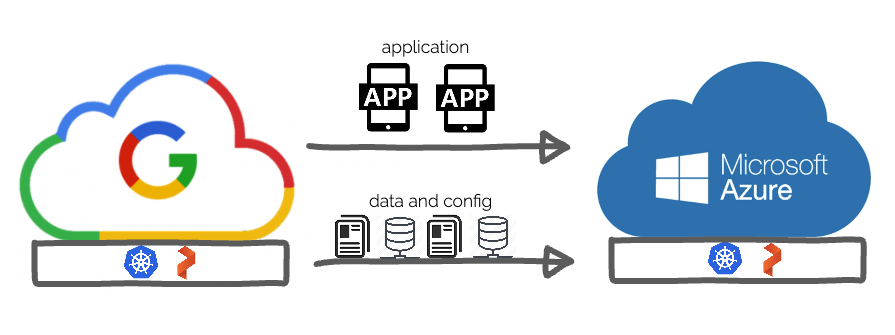

Enterprises are increasingly moving applications to containers, but many teams still have a large investment in applications that run in virtual machines (VMs). These VMs often provide services to new and existing containerized applications as organizations break monoliths into microservices. Container platforms typically integrate compute, storage, networking, and security, and Portworx often provides the data management and protection layer for the containerized applications running on them. OpenShift Virtualization uses KubeVirt to let teams run their VM-based applications side by side with their containerized apps as teams and applications grow and mature, giving developers and operations a single pane of glass. In this blog series, we’ll show you how Portworx can provide the data management layer for both containers and VMs. You can think of Portworx much like the v-suite of products in vSphere: it can provide disks to VMs like vSAN, and data protection like vMotion, but for both VMs and containers on OpenShift Container Platform.

Install and Configure Portworx for OpenShift Virtualization

In part one, we will focus on installing and configuring both Portworx and OpenShift so that Portworx can provide disks that virtual machines use as a boot source and for application persistence.

Prerequisites

- Install OpenShift using a version that supports virtualization, such as 4.5. Make sure all the OpenShift requirements are met. This includes tasks such as using a specific OS and sizing your worker nodes to ensure VMs can run properly.

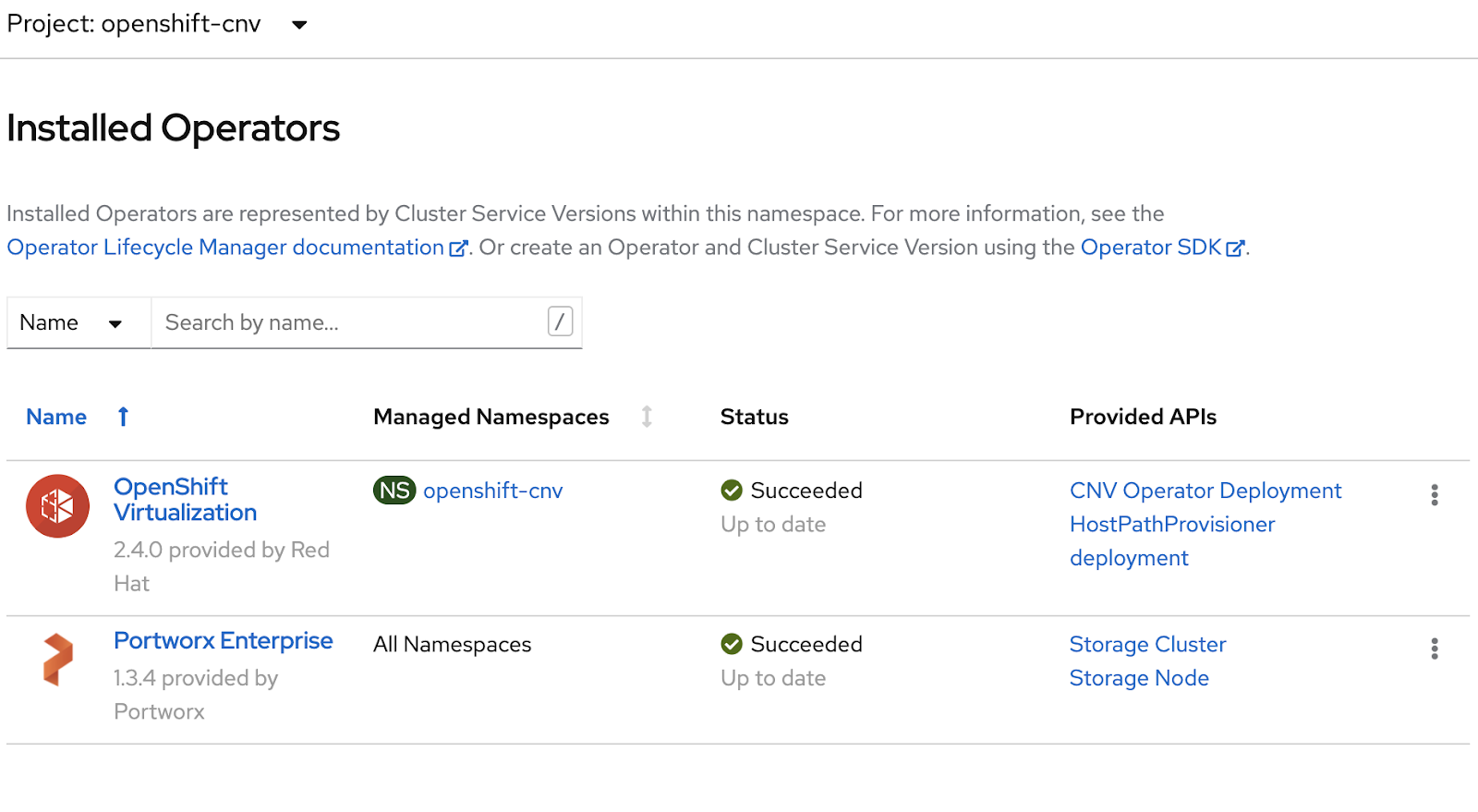

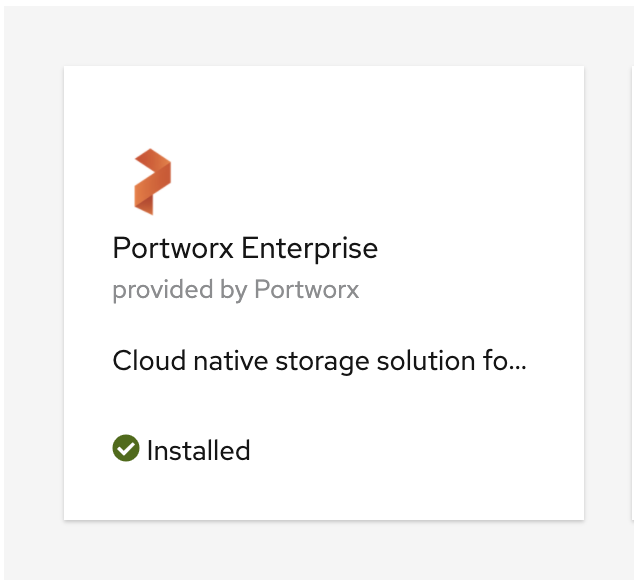

- Install Portworx on OpenShift. This can easily be done using the Portworx Operator in the OpenShift Catalog.

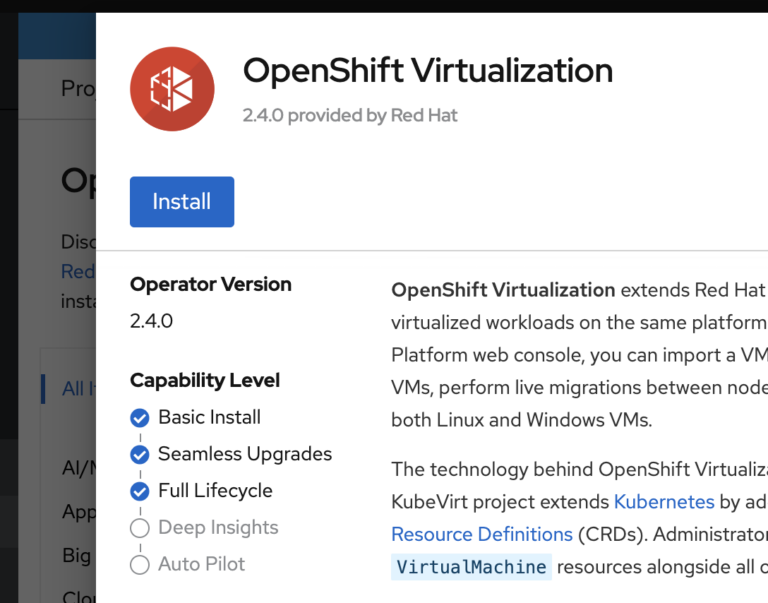

- Install the OpenShift Virtualization (CNV) operator into your OpenShift cluster from the catalog.

You can verify everything is running properly by listing the Portworx pods in the kube-system namespace and the KubeVirt pods in the openshift-cnv namespace. If all pods are in the Running state, you are ready to create VMs that use Portworx for their storage.

    $ oc get po -n kube-system -l name=portworx
    NAME                             READY   STATUS    RESTARTS   AGE
    portworx-demoocp-cluster-fcsk5   1/1     Running   0          4d21h
    portworx-demoocp-cluster-fvcbq   1/1     Running   0          4d21h
    portworx-demoocp-cluster-jn4hm   1/1     Running   0          4d21h
    portworx-demoocp-cluster-mtst7   1/1     Running   0          4d21h
    portworx-demoocp-cluster-qhxjn   1/1     Running   0          4d21h
    portworx-demoocp-cluster-zcbh9   1/1     Running   0          4d21h

    $ oc get po -n openshift-cnv -l app.kubernetes.io/managed-by=kubevirt-operator
    NAME                              READY   STATUS    RESTARTS   AGE
    virt-api-66c966cb5f-c5qtn         1/1     Running   0          4d19h
    virt-api-66c966cb5f-xzjv2         1/1     Running   0          4d19h
    virt-controller-68c54b968-clpp7   1/1     Running   0          4d19h
    virt-controller-68c54b968-n7628   1/1     Running   8          4d19h
    virt-handler-2pmsl                1/1     Running   0          4d19h
    virt-handler-6df69                1/1     Running   0          4d19h
    virt-handler-6smz9                1/1     Running   0          4d19h
    virt-handler-bvjhj                1/1     Running   0          4d19h
    virt-handler-gfknh                1/1     Running   0          4d19h
    virt-handler-p467p                1/1     Running   0          4d19h
    virt-handler-pdgkk                1/1     Running   0          4d19h
    virt-handler-vk9n9                1/1     Running   0          4d19h
    virt-handler-z4pcn                1/1     Running   0          4d19h
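If you would rather script this check than read the output by eye, something like the sketch below could work. It reads `oc get po` output on standard input and fails if any pod is not in the Running state. The function name `all_pods_running` is hypothetical, and the sketch assumes the default column layout, with STATUS in the third column.

```shell
# Hypothetical helper: succeeds only if every pod row on stdin has STATUS "Running".
# Assumes the default `oc get po` columns (NAME READY STATUS RESTARTS AGE).
all_pods_running() {
  awk '
    NR == 1 { next }                 # skip the header row
    NF == 0 { next }                 # ignore blank lines
    { seen = 1 }                     # at least one pod row was found
    $3 != "Running" { bad = 1 }      # remember any pod that is not Running
    END { exit (bad || !seen) ? 1 : 0 }
  '
}

# Example usage (requires a logged-in oc client):
#   oc get po -n kube-system -l name=portworx | all_pods_running && echo "Portworx pods OK"
#   oc get po -n openshift-cnv -l app.kubernetes.io/managed-by=kubevirt-operator | all_pods_running
```

The helper also fails when no pod rows are present, so an empty namespace or a mistyped label does not pass silently.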

Using OpenShift CNV with Portworx

There are various ways that VMs can consume storage. We’ll be covering two common use cases for VMs below:

- Using Portworx to provide the Root Disk for a VM. This will allow you to boot your VM off of a Portworx volume into your Linux operating system.

- Consuming a Portworx volume as additional disk space. This use case will use a Portworx volume for MySQL running inside the VM.

Using Portworx as root disk

In this example, we will load Fedora 31 onto a Portworx volume; then we will use this disk to boot a new VM.

First, set up a storage class that will be used for your root disk.

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: px-replicated
    parameters:
      repl: "2"
    provisioner: kubernetes.io/portworx-volume
    reclaimPolicy: Delete
    volumeBindingMode: Immediate
    allowVolumeExpansion: true

Next, create a file named pwx-cdi.yaml that defines a 20Gi PVC and uses the Containerized Data Importer (CDI) to import the Fedora Cloud Base qcow2 image.

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: "fedora-disk0"
      labels:
        app: containerized-data-importer
      annotations:
        cdi.kubevirt.io/storage.import.endpoint: "https://fedoraproject.org/"
    spec:
      storageClassName: px-replicated
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 20Gi

Next, create the PVC defined above. This will start the import process of loading a Fedora cloud image into the PVC.

    $ oc create -f pwx-cdi.yaml -n openshift-cnv

You can check on the status of the cloud image import by describing the PVC.

> You must wait until it is complete to use this PVC as a boot source.

    $ oc -n openshift-cnv describe pvc fedora-disk0 | grep cdi.kubevirt.io/storage.condition.running.reason
    cdi.kubevirt.io/storage.condition.running.reason: Pod is running

    $ oc -n openshift-cnv describe pvc fedora-disk0 | grep cdi.kubevirt.io/storage.condition.running.reason
    cdi.kubevirt.io/storage.condition.running.reason: ContainerCreating

    $ oc -n openshift-cnv describe pvc fedora-disk0 | grep cdi.kubevirt.io/storage.import.importPodName:
    cdi.kubevirt.io/storage.import.importPodName: importer-fedora-disk0

    $ oc -n openshift-cnv logs importer-fedora-disk0
    <snip>
    I0828 14:53:34.206940 1 qemu.go:212] %34.40
    I0828 14:53:40.765331 1 qemu.go:212] %35.45

    $ oc -n openshift-cnv describe pvc fedora-disk0 | grep cdi.kubevirt.io/storage.condition.running.reason
    cdi.kubevirt.io/storage.condition.running.reason: Completed
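Rather than re-running the describe command by hand, you could poll the same annotation until it reads Completed. The following is a minimal sketch, not part of the original walkthrough: the function names `get_import_reason` and `wait_for_import` are hypothetical, and the retry count and delay are arbitrary defaults.

```shell
# Hypothetical helper: print the CDI "running reason" annotation for a PVC.
# Dots inside the annotation key are escaped so jsonpath treats it as one key.
get_import_reason() {
  oc -n "$1" get pvc "$2" \
    -o jsonpath='{.metadata.annotations.cdi\.kubevirt\.io/storage\.condition\.running\.reason}'
}

# Poll until the reason is "Completed"; give up after a number of attempts.
# Arguments: namespace, PVC name, max attempts (default 60), delay in seconds (default 10).
wait_for_import() {
  ns=$1 pvc=$2 tries=${3:-60} delay=${4:-10}
  i=0
  while [ "$i" -lt "$tries" ]; do
    reason=$(get_import_reason "$ns" "$pvc")
    if [ "$reason" = "Completed" ]; then
      return 0
    fi
    echo "import status: ${reason:-unknown}" >&2
    sleep "$delay"
    i=$((i + 1))
  done
  return 1
}

# Example usage (requires a logged-in oc client):
#   wait_for_import openshift-cnv fedora-disk0 && echo "fedora-disk0 is ready to boot from"
```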

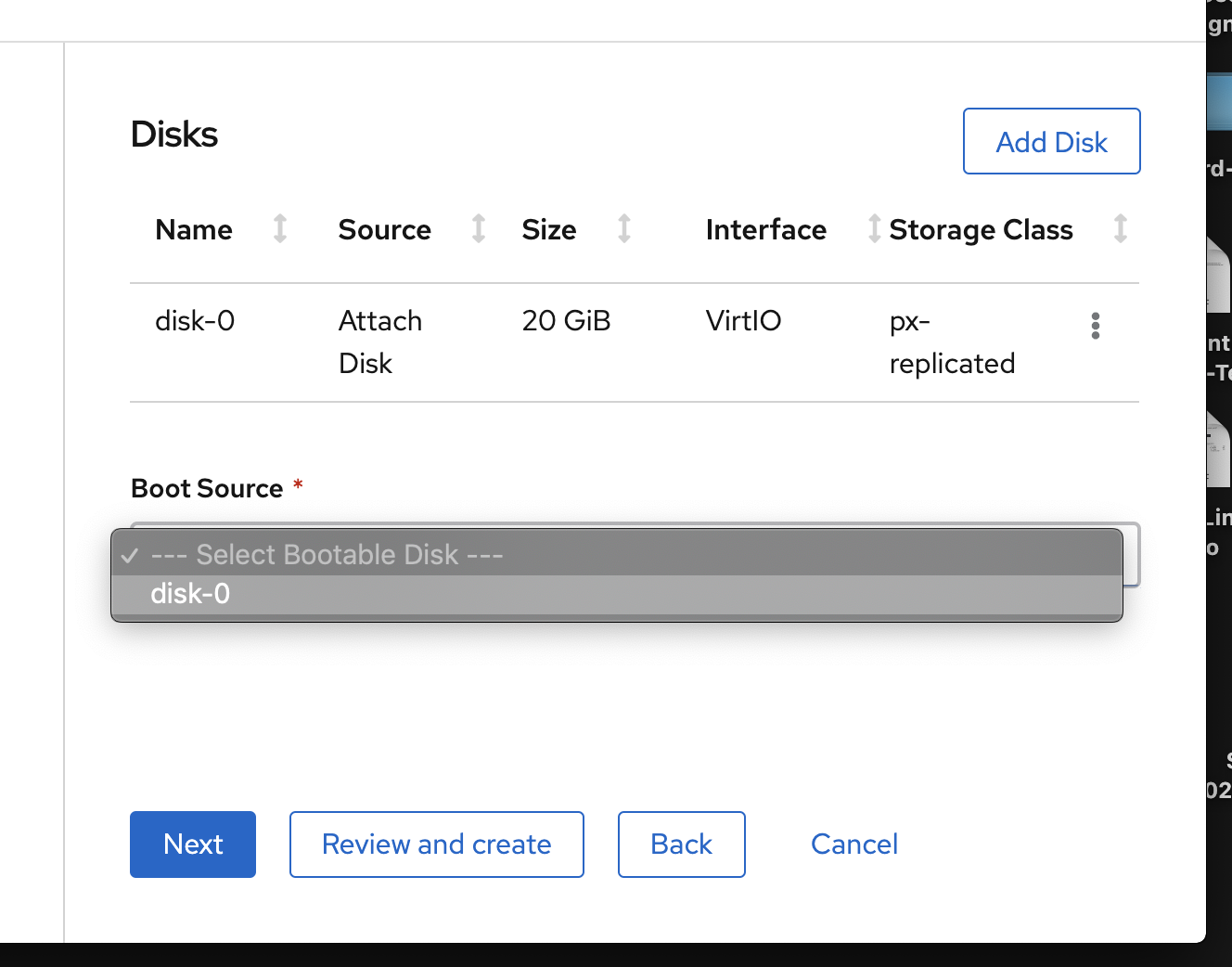

After CDI has finished importing the image, create a VM using the virtual machine wizard. Make sure to select Disk as the Source, and then attach the above disk in the Storage section.

Then choose this Portworx disk as your boot source. This will instruct the VM to boot off of this disk that has Fedora loaded into it.

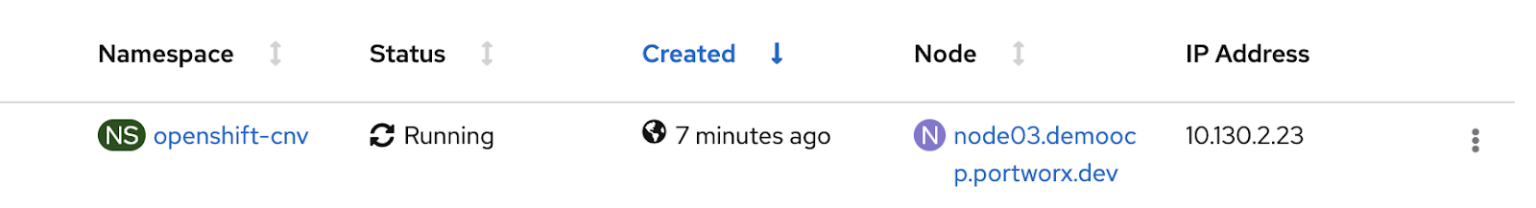

Create the VM and verify it is up and running.

Next, log in to the VM. This can be done through the UI or by using virtctl.

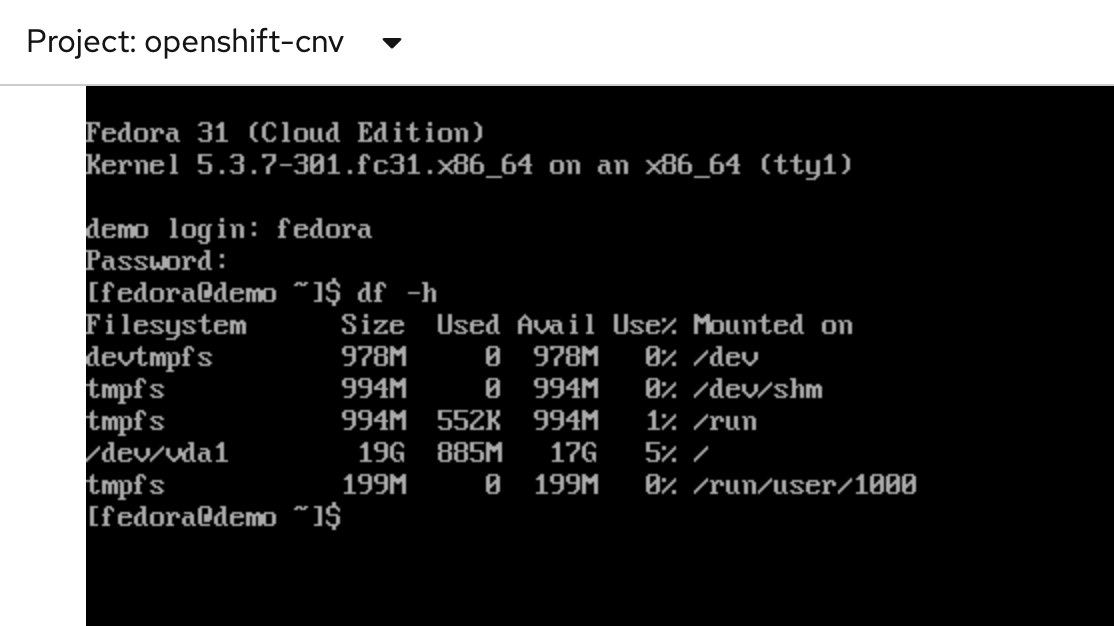

Run df -h inside the VM to see that Portworx is providing the VM’s root disk. Confirm it is the same size (20GB) as the original PVC that used CDI to import Fedora.

Using cloud-init to run MySQL on PWX Volume on Fedora 31

In this example, we will attach a disk to our VM, but we won’t use it as our boot disk. Instead, we will launch the VM, create a filesystem on the volume, and mount it so it can be used by our application running inside the VM.

First, set up a storage class that will be used for the additional data disk.

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: px-vm-replicated
    parameters:
      repl: "2"
    provisioner: kubernetes.io/portworx-volume
    reclaimPolicy: Delete
    volumeBindingMode: Immediate
    allowVolumeExpansion: true

Next, create a 10Gi PVC from this storage class. Unlike the root disk, this volume needs no CDI import; it starts empty and will hold the MySQL data.

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: "vm-pvc-1"
    spec:
      storageClassName: px-vm-replicated
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 10Gi
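One way to create this PVC without saving a file first is to render the manifest and pipe it to oc. The sketch below is illustrative only: `render_pvc` is a hypothetical helper, and the openshift-cnv namespace in the usage comment is assumed to match the root disk example.

```shell
# Hypothetical helper: print a PVC manifest for the given name, storage class, and size.
# Arguments: PVC name, storage class name, requested size (for example 10Gi).
render_pvc() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: "$1"
spec:
  storageClassName: $2
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: $3
EOF
}

# Example usage (requires a logged-in oc client):
#   render_pvc vm-pvc-1 px-vm-replicated 10Gi | oc create -n openshift-cnv -f -
```

Keeping the name, class, and size as arguments makes it easy to create several data disks from the same template.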

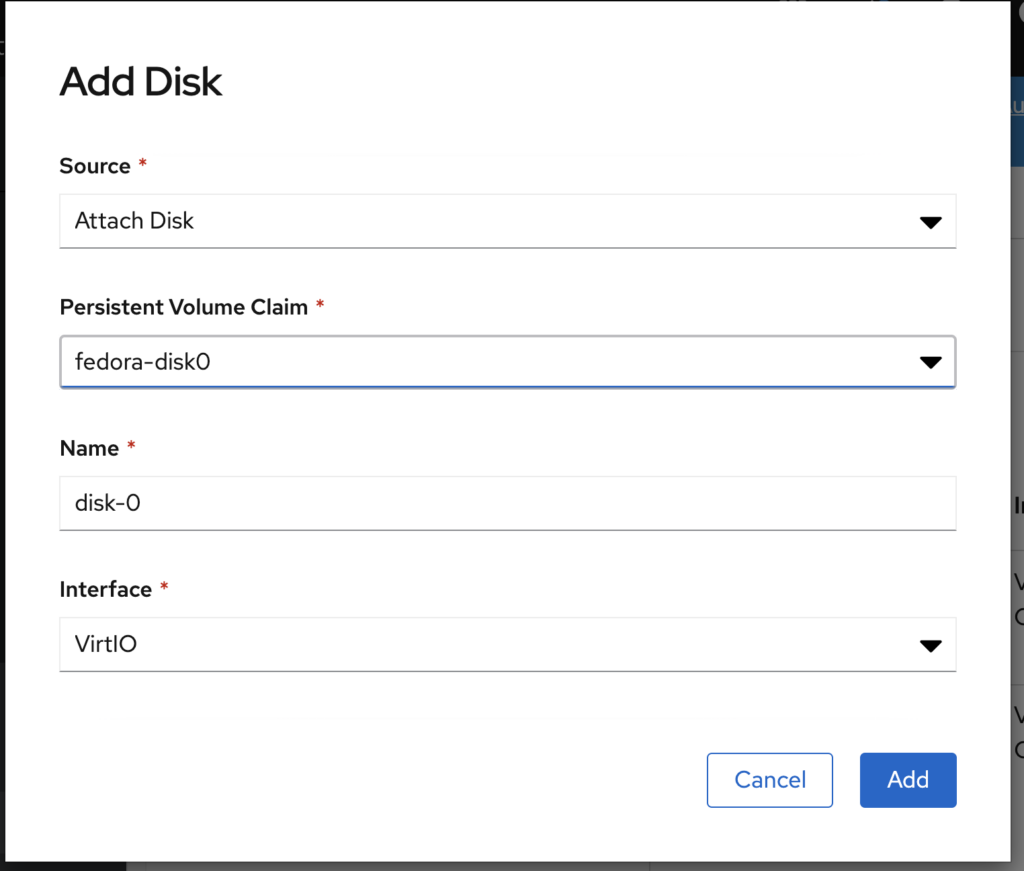

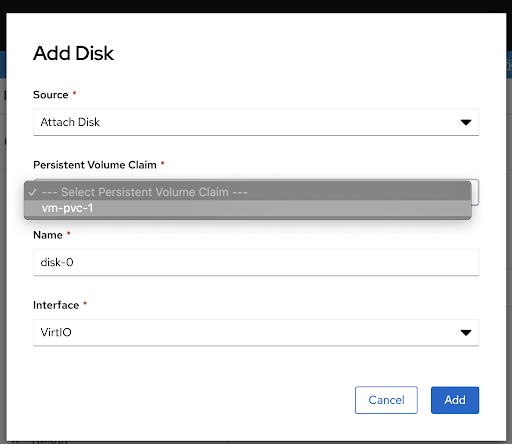

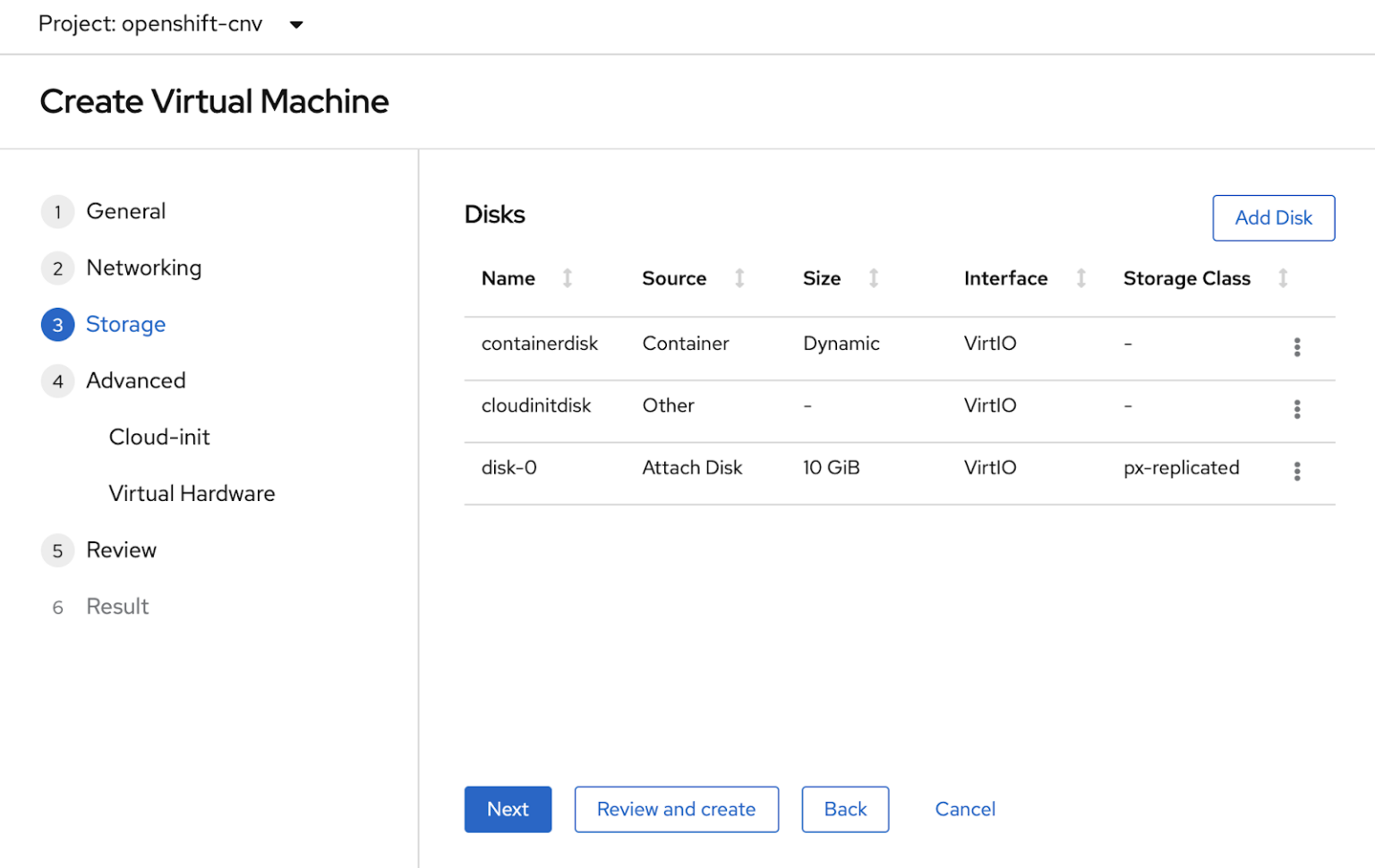

Inside the virtual machine wizard, select the PVC created above in the Storage section so that it is attached to the VM when it is created. Do not make it your boot source; it is simply space for MySQL to use.

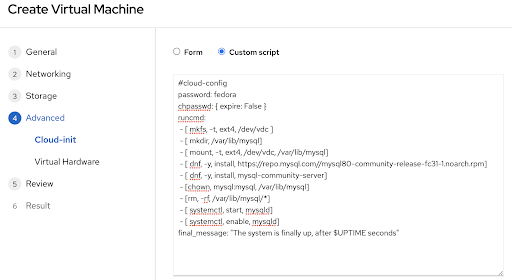

Next, use a cloud-init configuration that will create a filesystem on the Portworx disk and mount it to the /var/lib/mysql directory. Then have cloud-init enable MySQL repositories and install and start MySQL.
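The original cloud-init configuration is not reproduced here, so the following is only a sketch of what such a setup might look like. cloud-init accepts user data that starts with `#!` and runs it as a script on first boot. Everything specific in it is an assumption: the device name `/dev/vdb`, the choice of ext4, the `mysql-community-server` package name, and that the MySQL repository has already been enabled. The `run` and `setup_mysql_disk` helpers are hypothetical names.

```shell
#!/bin/bash
# Sketch of a cloud-init user-data script. Assumptions (not from the original post):
#   - the Portworx disk appears in the guest as /dev/vdb
#   - an ext4 filesystem is acceptable
#   - the MySQL repository is already enabled, so mysql-community-server resolves
DRY_RUN=${DRY_RUN:-0}

# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Format (if needed) and mount the data disk, then install and start MySQL.
setup_mysql_disk() {
  dev=$1
  mnt=/var/lib/mysql
  # Only create a filesystem if blkid does not detect one already.
  if [ "$DRY_RUN" = "1" ] || ! blkid "$dev" >/dev/null 2>&1; then
    run mkfs.ext4 "$dev"
  fi
  run mkdir -p "$mnt"
  run mount "$dev" "$mnt"
  # A fresh ext4 volume contains lost+found; remove it so the data directory is empty.
  run rm -rf "$mnt/lost+found"
  run dnf -y install mysql-community-server
  # The mysql user exists once the package is installed.
  run chown mysql:mysql "$mnt"
  run systemctl enable --now mysqld
}
```

When used as real user data, the script would end with a call such as `setup_mysql_disk /dev/vdb`. Setting `DRY_RUN=1` prints the commands instead of running them, which is a cheap way to review the steps before booting a VM with it.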

Next, follow the prompts in the VM wizard and create the VM. You can use the OpenShift web console or the oc command line to view the VMs that are running.

    $ oc get vmi -n openshift-cnv
    NAME       AGE     PHASE     IP            NODENAME
    pwx-demo   3m11s   Running   10.130.2.20   node03.demoocp.portworx.dev

    $ oc get vm -n openshift-cnv
    NAME       AGE     VOLUME
    pwx-demo   3m36s

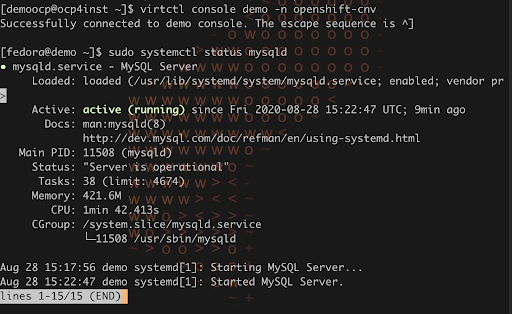

Log in to the VM console using virtctl and check that MySQL is up and running with the command systemctl status mysqld.

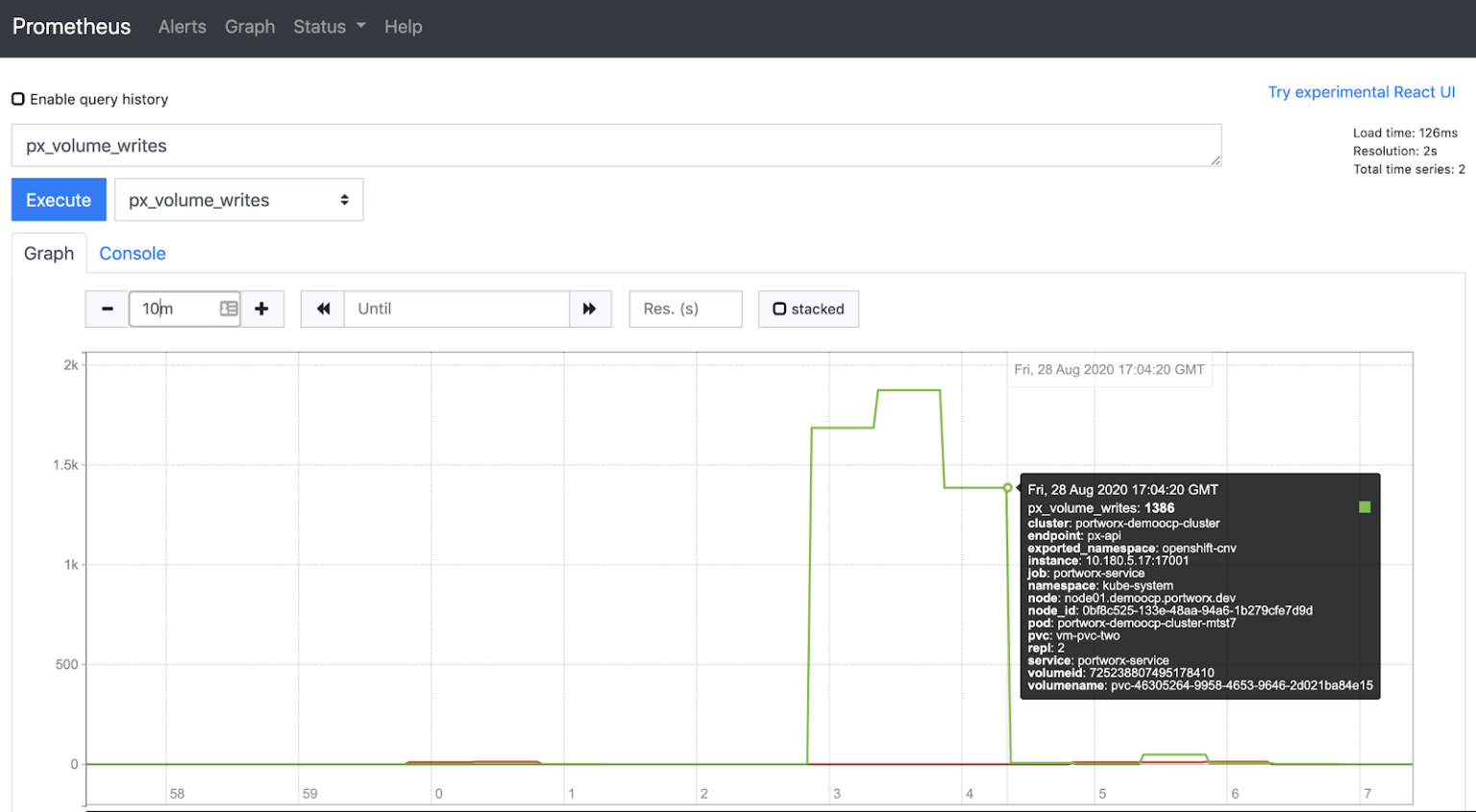

To visualize data flowing to the Portworx volume, we can install sysbench and run a simple performance benchmark. We also have Portworx monitoring with Prometheus installed so we can visualize application IO going to our Portworx volume.

Prep MySQL and install sysbench.

    $ sudo grep 'temporary password' /var/log/mysqld.log
    $ sudo mysql_secure_installation
    $ sudo curl -s https://packagecloud.io/install/repositories/akopytov/sysbench/script.rpm.sh | sudo bash
    $ sudo dnf -y install sysbench

Create a “test” database within MySQL for sysbench to use.

    mysql> create database test;
    Query OK, 1 row affected (0.82 sec)

Instruct sysbench to prepare the test database.

    $ sysbench oltp_read_write --table-size=500 --mysql-db=test --mysql-user=root --mysql-password='Password1!' prepare

Run the sysbench Read/Write test workload.

    $ sysbench oltp_read_write --table-size=500 --mysql-db=test --mysql-user=root --mysql-password='Password1!' --time=60 --max-requests=0 --threads=2 run

We can verify that write operations are reaching the Portworx volume attached to our VM by checking Portworx monitoring with Prometheus and selecting the px_volume_writes stat.
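If you prefer the command line to the Prometheus UI, the same stat can be fetched through the Prometheus HTTP query API. This is a sketch under assumptions: `prom_query` is a hypothetical helper, the Prometheus address in the usage comment is a placeholder for your own, and the `CURL` override exists only so the function can be exercised without a live server.

```shell
# Hypothetical helper: run an instant query against a Prometheus server.
# Arguments: Prometheus base URL (placeholder; use your own), PromQL expression.
# CURL can be overridden to substitute another command, for example in a dry run.
prom_query() {
  "${CURL:-curl}" -sG "$1/api/v1/query" --data-urlencode "query=$2"
}

# Example usage (requires network access to your Prometheus instance):
#   prom_query http://prometheus.example:9090 px_volume_writes
```

The response is JSON, with one result entry per volume that matches the query.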

To clean up, instruct sysbench to run “cleanup”.

    $ sysbench oltp_read_write --mysql-db=test --mysql-user=root --mysql-password='Password1!' cleanup

Demo

Check out this short demo of using Portworx with OpenShift Virtualization.

Ryan Wallner

Portworx | Technical Marketing Manager