Portworx is a cloud native storage platform to run persistent workloads deployed on a variety of orchestration engines including Kubernetes. With Portworx, customers can manage the database of their choice on any infrastructure using any container scheduler. It provides a single data management layer for all stateful services, no matter where they run.

Kubemotion is one of the core building blocks of the Portworx storage infrastructure. Introduced in Portworx Enterprise 2.0, it allows Kubernetes users to migrate application data and Kubernetes pod configurations between clusters, enabling cloud migration, backup and recovery, blue-green deployments and more.

This step-by-step guide demonstrates how to move persistent volumes associated with a stateful application from one GCP region to another.

Background

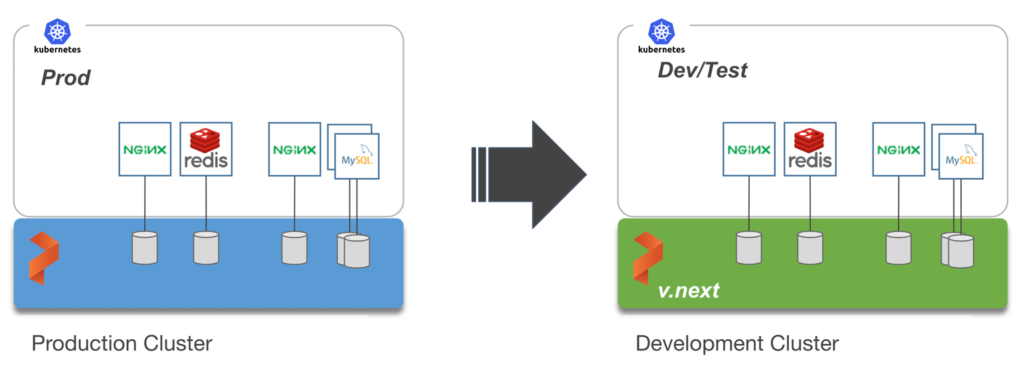

For enterprises, it’s a common scenario to run the development and test environment in one cloud region and the production environment in another. Development teams may choose a region that’s geographically closer to them while deploying production applications in another region that has low latency for the users and customers.

Even though Kubernetes makes it easy to move stateless workloads across environments, achieving parity for stateful workloads remains a challenge.

For this walkthrough, we will move Kubernetes persistent volumes (PV) between Google Kubernetes Engine (GKE) clusters running in Asia South (Mumbai) and Asia Southeast (Singapore). The Mumbai region is used by the development teams for dev/test, and the Singapore region as the production environment.

After thoroughly testing an application in dev/test, the team will use Portworx and Kubemotion to reliably move the storage volumes from development to production environments.

Exploring the Environments

We have two GKE clusters – dev/test and production – running in the Mumbai and Singapore regions of Google Cloud Platform. Both have the latest version of Portworx up and running.

The dev/test environment currently runs a LAMP-based content management system that needs to be migrated to production.

Tip: To navigate between two kubeconfig contexts representing different clusters, use the kubectx tool.

The application runs two deployments: MySQL and WordPress.

For a detailed tutorial on configuring a highly available WordPress stack on GKE, please refer to this guide.

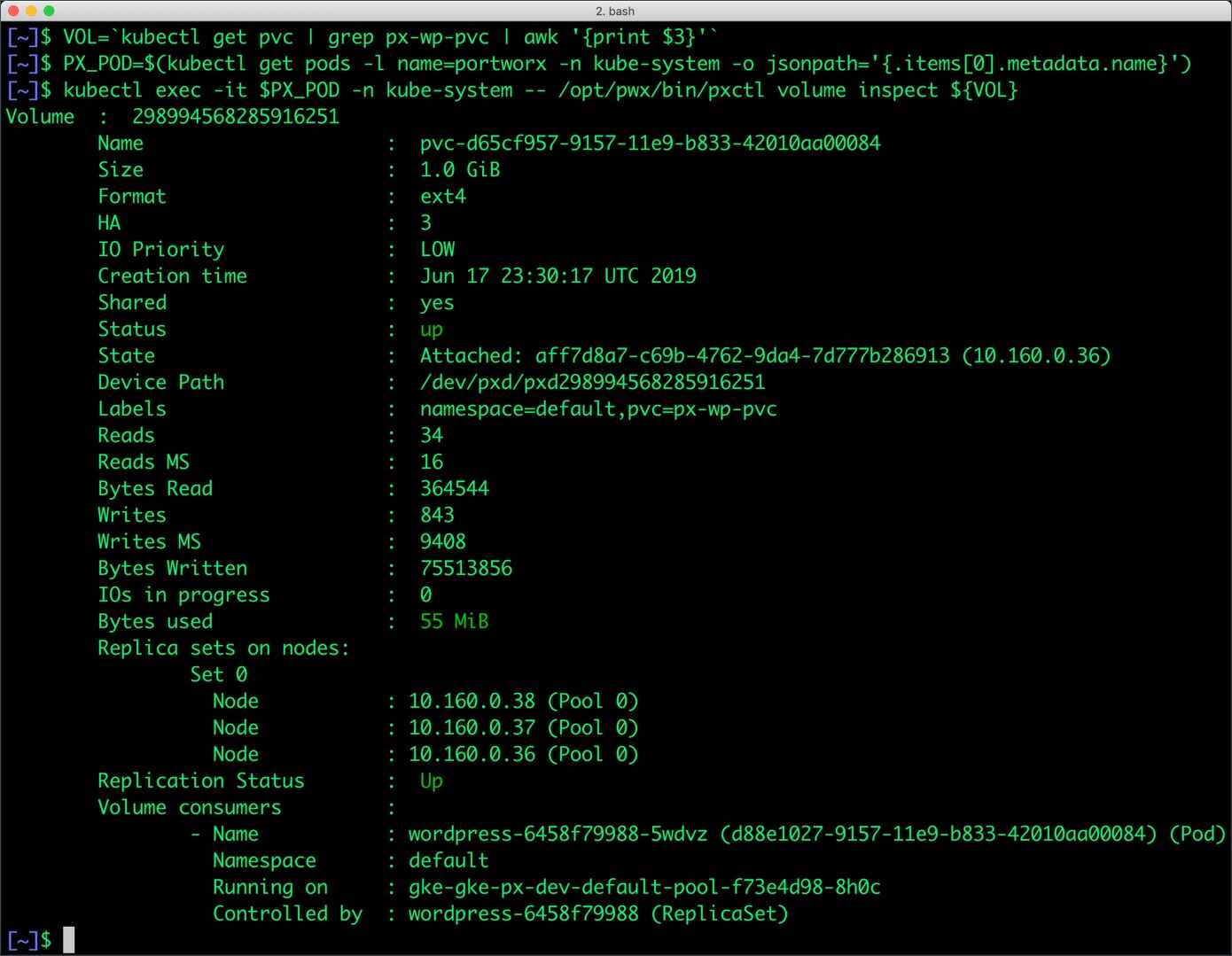

The persistent volumes attached to these pods are backed by a Portworx storage cluster.

The below volume is attached to the MySQL pod.

For the WordPress CMS, there is a shared Portworx volume attached to the pods.

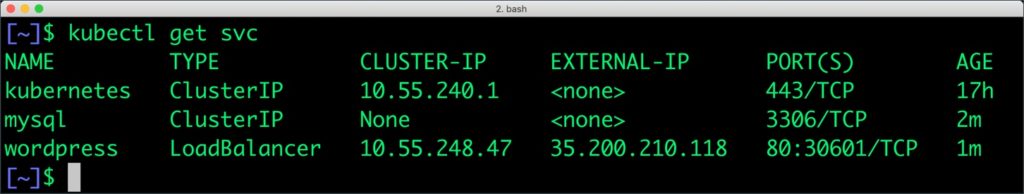

The fully configured application is accessed through the IP address of the load balancer.

We will now migrate this application along with its state and configuration from dev/test to production.

Preparing the Source and Target Environments

Before we can move the volumes, we need to configure the source and destination clusters.

Follow the below steps to prepare the environments.

Note: Kubemotion may also be configured with Kubernetes secrets. Since the scenario is based on Google Cloud Platform, we are demonstrating the integration with Google Cloud KMS and Service Accounts.

Enabling the GCP APIs

In your GCP account, make sure that the Google Cloud Key Management Service (KMS) APIs are enabled. Portworx integrates with Google Cloud KMS to store Portworx secrets for Volume Encryption and Cloud Credentials.

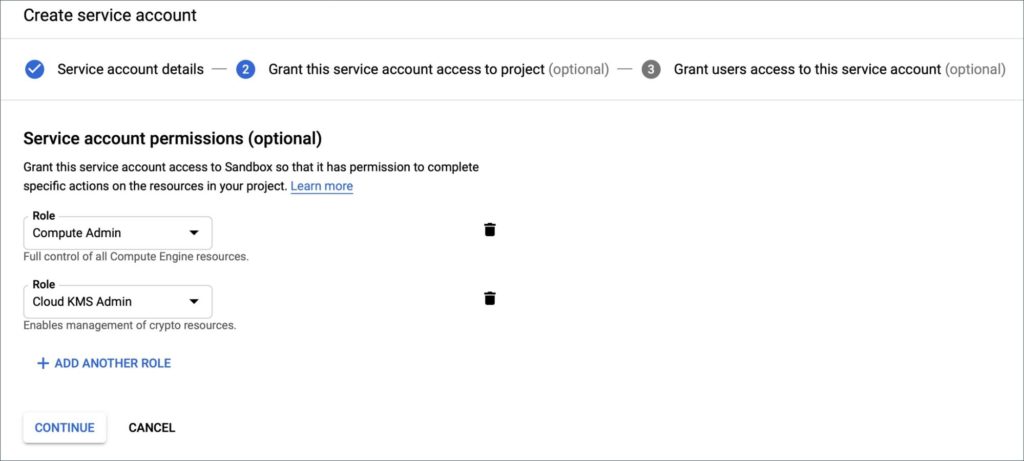

Creating the Service Account

After enabling the APIs, create a service account with the compute admin and KMS admin roles.

The last step involves the creation of a file that contains the private key. Copy the downloaded account file into a directory named gcloud-secrets/ and rename it to gcloud.json so that a Kubernetes secret can be created from it.
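The same steps can also be scripted with the gcloud CLI instead of the console. The following is a minimal sketch, not the procedure used for this walkthrough: the service account name px-kubemotion is hypothetical, and roles/compute.admin and roles/cloudkms.admin are assumed to be the roles meant by "compute admin" and "KMS admin" above.

```shell
# Sketch: create the service account, grant the two roles, and download its key.
# SA_NAME is a placeholder chosen for illustration; override it as needed.
SA_NAME="${SA_NAME:-px-kubemotion}"

# Argument: the GCP project ID.
create_px_service_account() {
  project="$1"
  sa_email="${SA_NAME}@${project}.iam.gserviceaccount.com"

  gcloud iam service-accounts create "$SA_NAME" --project "$project"

  # Grant the roles assumed to match "compute admin" and "KMS admin".
  for role in roles/compute.admin roles/cloudkms.admin; do
    gcloud projects add-iam-policy-binding "$project" \
      --member "serviceAccount:${sa_email}" --role "$role"
  done

  # Store the private key under the file name the secret-creation step expects.
  mkdir -p gcloud-secrets
  gcloud iam service-accounts keys create gcloud-secrets/gcloud.json \
    --iam-account "$sa_email"
}
```

Calling `create_px_service_account <Project ID>` once is enough, since the same gcloud.json file is used for the secret on both clusters.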

Configuring the KMS Keyring

Run the below command to create the key ring that Portworx will use. Then create an asymmetric key in this key ring, making sure to specify the purpose of the key as Asymmetric decrypt.

$ gcloud kms keyrings create portworx --location global
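The command for the asymmetric key itself is not shown in this guide. One way to create it from the CLI is sketched below; the key name px-key and the 2048-bit RSA algorithm are assumptions, so adjust both to your own requirements.

```shell
# Sketch: create an asymmetric-decryption key in the "portworx" key ring.
# The key name is the first argument (hypothetical default: px-key).
create_px_key() {
  gcloud kms keys create "${1:-px-key}" \
    --keyring portworx \
    --location global \
    --purpose asymmetric-encryption \
    --default-algorithm rsa-decrypt-oaep-2048-sha256
}
```

The asymmetric-encryption purpose combined with an rsa-decrypt-* algorithm is what the Cloud Console labels "Asymmetric decrypt".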

Creating the Kubernetes Secret

Run the below command on both the clusters to create a Kubernetes secret from the service account file.

$ kubectl -n kube-system create secret generic px-gcloud --from-file=gcloud-secrets/ --from-literal=gcloud-kms-resource-id=projects/<Project ID>/locations/<Location>/keyRings/<Key Ring Name>/cryptoKeys/<Asymmetric Key Name>/cryptoKeyVersions/1

Make sure to replace the Project ID, Location, Key Ring Name and Asymmetric Key Name placeholders in the above command with the correct values.
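The value passed as gcloud-kms-resource-id follows a fixed path layout, so it can be assembled from its parts. As a small sketch, the helper below does exactly that; the function name kms_resource_id and the sample project and key names are hypothetical.

```shell
# Build the Cloud KMS resource ID for a specific key version.
# Arguments: project ID, location, key ring name, key name, key version (default 1).
kms_resource_id() {
  printf 'projects/%s/locations/%s/keyRings/%s/cryptoKeys/%s/cryptoKeyVersions/%s\n' \
    "$1" "$2" "$3" "$4" "${5:-1}"
}

# Example with a hypothetical project and key name; the key ring and
# location match the gcloud command used earlier in this guide.
kms_resource_id my-project global portworx px-key
# prints: projects/my-project/locations/global/keyRings/portworx/cryptoKeys/px-key/cryptoKeyVersions/1
```

The output can be substituted directly into the --from-literal argument of the secret-creation command.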

Both the clusters now have a secret that provides access to Google Cloud Platform KMS and GCE APIs.

Patching Portworx Deployment

Now, we need to update the Portworx DaemonSet to provide access to KMS.

$ kubectl edit daemonset portworx -n kube-system

Add the “-secret_type”, “gcloud-kms” arguments to the portworx container in the DaemonSet.

containers:
- args:
  - -c
  - testclusterid
  - -s
  - /dev/sdb
  - -x
  - kubernetes
  - -secret_type
  - gcloud-kms
  name: portworx

We are now ready to patch the Portworx DaemonSet to add the secret created in the previous step.

$ cat <<EOF > patch.yaml
spec:
  template:
    spec:
      containers:
      - name: portworx
        env:
          - name: GOOGLE_KMS_RESOURCE_ID
            valueFrom:
              secretKeyRef:
                name: px-gcloud
                key: gcloud-kms-resource-id
          - name: GOOGLE_APPLICATION_CREDENTIALS
            value: /etc/pwx/gce/gcloud.json
        volumeMounts:
          - mountPath: /etc/pwx/gce
            name: gcloud-certs
      volumes:
        - name: gcloud-certs
          secret:
            secretName: px-gcloud
            items:
              - key: gcloud.json
                path: gcloud.json
EOF

Apply the patch and wait until all the Portworx pods are back in the Running state.

$ kubectl -n kube-system patch ds portworx --patch "$(cat patch.yaml)" --type=strategic

Before proceeding, make sure that you applied the above changes to both the source and destination clusters.
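To avoid missing one of the clusters, the patch and the wait can be wrapped in a small helper that takes the kubeconfig context as an argument. This is a sketch under two assumptions: the contexts are named dev and prod, as in the kubectx commands in this guide, and the Portworx DaemonSet uses the RollingUpdate strategy, which kubectl rollout status requires.

```shell
# Apply the patch to the Portworx DaemonSet in one cluster and wait for the
# rollout to finish.
# Arguments: kubeconfig context name, patch file (default: patch.yaml).
patch_portworx() {
  ctx="$1"
  patch_file="${2:-patch.yaml}"
  kubectl --context "$ctx" -n kube-system patch ds portworx \
    --patch "$(cat "$patch_file")" --type=strategic &&
  kubectl --context "$ctx" -n kube-system rollout status ds/portworx --timeout=10m
}

# Patch source and destination in turn:
#   for ctx in dev prod; do patch_portworx "$ctx" || break; done
```

The 10-minute timeout is an arbitrary choice; a larger Portworx cluster may need longer to restart all of its pods.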

Pairing the GKE Clusters

Before starting the migration, we need to pair the destination cluster with the source.

Getting the Cluster Token

Switch to the destination (prod) cluster and run the below commands to get the token.

$ kubectx prod

$ PX_POD=$(kubectl get pods -l name=portworx -n kube-system -o jsonpath='{.items[0].metadata.name}')

$ kubectl exec -it $PX_POD -n kube-system -- /opt/pwx/bin/pxctl cluster token show -j

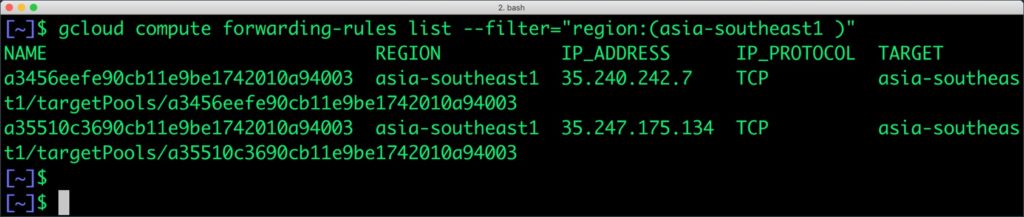
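When scripting the pairing, the token can be captured in a variable instead of being copied by hand. The sketch below assumes that the -j output is a JSON object with a top-level "token" field; check this against the actual output of your Portworx version before relying on it. The helper name extract_token is hypothetical.

```shell
# Read JSON on stdin and print the value of the first "token" field.
# Assumption: the field looks like  "token": "<hex string>".
extract_token() {
  sed -n 's/.*"token"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Usage (run in the prod context; -it is dropped because the output is piped):
#   TOKEN=$(kubectl exec "$PX_POD" -n kube-system -- \
#     /opt/pwx/bin/pxctl cluster token show -j | extract_token)
```

Using sed keeps the sketch free of extra dependencies; if jq is installed, `jq -r .token` would be the more robust choice under the same assumption about the field name.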

Getting the Public IP Address

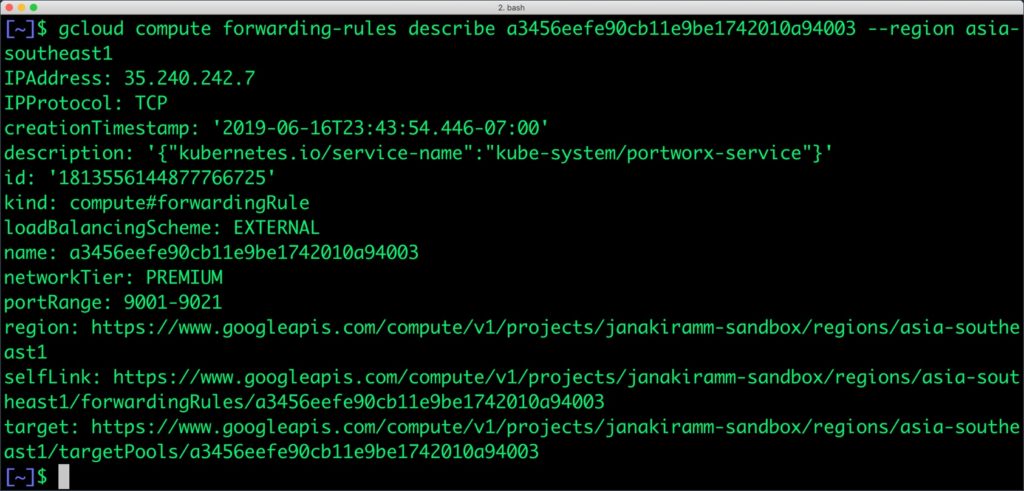

You also need the public IP address of the GCP Load Balancer associated with the destination cluster.

Retrieve the forwarding-rules associated with the destination cluster and then find the IP address of the load balancer routing the traffic to ports 9001-9021.
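The lookup can be done from the command line by listing the forwarding rules and filtering on the port range. In this sketch, the helper name px_lb_ip is hypothetical, and the filter assumes that the rule's portRange field is reported exactly as 9001-9021.

```shell
# Read "IP<TAB>PORT_RANGE" lines on stdin and print the IP address of the
# first rule whose port range is 9001-9021.
px_lb_ip() {
  awk '$2 == "9001-9021" { print $1; exit }'
}

# Usage (requires gcloud and access to the destination cluster's project):
#   gcloud compute forwarding-rules list \
#     --format="value(IPAddress,portRange)" | px_lb_ip
```

If several clusters in the same project expose Portworx through a load balancer, more than one rule will match, so confirm that the address belongs to the destination cluster.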

Creating and Verifying the Cluster Pair

Switch to the source (dev/test) cluster to create a pair. Keep the public IP address of the load balancer and the cluster token retrieved from the above steps handy.

$ kubectx dev

$ PX_POD=$(kubectl get pods -l name=portworx -n kube-system -o jsonpath='{.items[0].metadata.name}')

$ kubectl exec -it $PX_POD -n kube-system -- /opt/pwx/bin/pxctl cluster pair create --ip 35.240.242.7 --token 3b47e4d65e8c448ced846896ad262df6d3c58f78ecc5350975dee2c63b6d9c258d8cbd1fd694f3effa8f8d926ce3e160f12d3db54d5f026ae2692ad1408bb8d7

We can verify the pairing process with the below command.

$ kubectl exec -it $PX_POD -n kube-system -- /opt/pwx/bin/pxctl cluster pair list

Congratulations! You have successfully paired the source and destination clusters. We are now ready to start the migration.

Moving Portworx Volumes from Source to Destination

Make sure that you are using the dev context and run the below command to start the migration of the MySQL and WordPress volumes. Since there are only two volumes, we can use the --all switch.

$ kubectl exec -it $PX_POD -n kube-system -- /opt/pwx/bin/pxctl cloudmigrate start --all

You can track the migration process with the below command:

$ kubectl exec -it $PX_POD -n kube-system -- /opt/pwx/bin/pxctl cloudmigrate status

CLUSTER UUID: 1d0cf415-b4c1-475f-8fff-8a00d4be5d12

TASK-ID VOLUME-ID VOLUME-NAME STAGE STATUS LAST-UPDATE
9-b833-42010aa00084 Done Complete Tue, 18 Jun 2019 01:10:10 UTC
bba45393-79ae-49e9-a278-fbb80fa1d02f 677245826839301303 pvc-c9da779b-9157-11e9-b833-42010aa00084 Done Complete Tue, 18 Jun 2019 01:10:11 UTC
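For larger volumes the migration can take a while, so it may help to poll the status until every task reports Complete. The sketch below assumes the column layout shown in the status output above, with STATUS as the fifth whitespace-separated field; the function names and the 10-second default interval are hypothetical.

```shell
# Read `pxctl cloudmigrate status` output on stdin. Succeeds only if there is
# at least one task row and every row has "Complete" in the STATUS column.
migration_done() {
  awk '
    $1 == "CLUSTER" || $1 == "TASK-ID" || NF < 5 { next }   # skip headers
    { rows++; if ($5 != "Complete") pending++ }
    END { exit (rows > 0 && pending == 0) ? 0 : 1 }
  '
}

# Poll the source cluster until the migration has finished.
# Expects PX_POD to hold the name of a Portworx pod in the dev context.
wait_for_migration() {
  until kubectl exec "$PX_POD" -n kube-system -- \
      /opt/pwx/bin/pxctl cloudmigrate status | migration_done; do
    sleep "${POLL_SECONDS:-10}"
  done
}
```

The loop has no upper bound, so a migration that fails permanently would keep it polling; wrap the call in a timeout if it is used in automation.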

Accessing and Mounting the Volumes in the Destination Cluster

We are now ready to access the volumes in the destination cluster. Running the pxctl volume list command confirms that the volumes are available.

$ kubectx prod

$ PX_POD=$(kubectl get pods -l name=portworx -n kube-system -o jsonpath='{.items[0].metadata.name}')

$ kubectl exec -it $PX_POD -n kube-system -- /opt/pwx/bin/pxctl v l

The IDs and names of the migrated volumes can be used directly in Kubernetes deployments to create persistent volumes.

The MySQL deployment is based on the migrated volume. Notice that it is using the same id and volume name created by Kubemotion.

# apps/v1 is used because extensions/v1beta1 Deployments were removed in
# Kubernetes 1.16; apps/v1 also requires the spec.selector field below.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql
  labels:
    app: mysql
spec:
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
  replicas: 1
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      schedulerName: stork
      containers:
      - name: mysql
        image: mysql:5.6
        imagePullPolicy: "Always"
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: password
        ports:
        - containerPort: 3306
        volumeMounts:
        - mountPath: /var/lib/mysql
          name: pvc-c9da779b-9157-11e9-b833-42010aa00084
      volumes:
      - name: pvc-c9da779b-9157-11e9-b833-42010aa00084
        portworxVolume:
          volumeID: "1106055690476573550"

Now, you can access the WordPress application in the destination cluster based on the migrated volumes.

Summary

Kubemotion extends the power of portability to stateful workloads. It can be used to seamlessly migrate volumes from on-premises to public cloud (hybrid) environments and across cloud platforms.

Janakiram MSV

Contributor | Certified Kubernetes Administrator (CKA) and Developer (CKAD)