I recently had the pleasure of diving into CD pipelines with Stephen Atwell of Armory. (Thank you, Todd and Justin from our Portland user group, for the introduction!)

In one of our conversations, we were musing about what we could do with Portworx migrations as part of a deployment pipeline, and Stephen posed an intriguing question: Could we create a staging environment and run data integrity tests as part of a database upgrade pipeline?

One of the unique things about Armory Continuous Delivery as a Service is the ability to create gates as part of the deployment process. This means that while a deployment is “declarative,” it can still enforce requirements on the deployment process. For example, a prod deployment can depend on a staging deployment, which, in turn, can depend on a database migration.
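To make the gating idea concrete, here is a minimal Python sketch of stages that run in dependency order and stop at the first failure. It is not Armory's configuration format or API. The stage names, the `DEPENDS_ON` mapping, and the `run_stage` callback are all hypothetical and exist only for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical stage names. Each stage maps to the stages it depends on.
DEPENDS_ON = {
    "db-migration": [],
    "staging": ["db-migration"],
    "prod": ["staging"],
}


def run_pipeline(run_stage):
    """Run stages in dependency order and stop at the first failure.

    run_stage is a callable that takes a stage name and returns True on
    success. Returns the list of stages that completed successfully.
    """
    completed = []
    for stage in TopologicalSorter(DEPENDS_ON).static_order():
        if not run_stage(stage):
            # Gate closed: in this linear chain, everything after the
            # failed stage depends on it, so nothing else is deployed.
            break
        completed.append(stage)
    return completed
```

If the hypothetical staging step fails, `run_pipeline` returns only `["db-migration"]` and prod is never touched, which is the behavior the gates are meant to guarantee.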

We spent a few weeks and some late nights coming up with the demo below. I learned a lot about deployment pipelines, GitHub Actions, and even some Python coding in the process.

Demo Overview

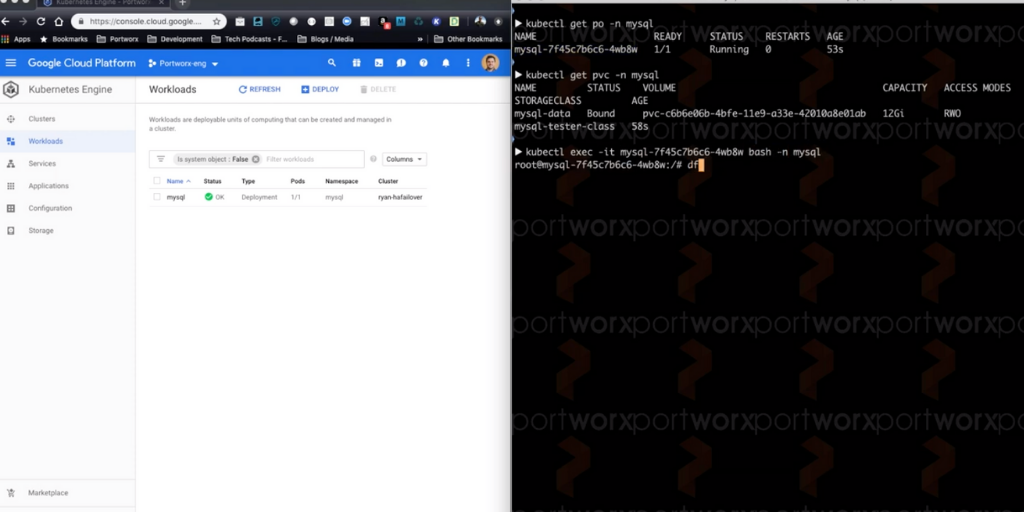

In this demo, we used a combination of tools and techniques to create a deployment process that had logic and verification built in.

Check out the video below of Stephen and me showing our workflow:

The Deployment Process

The deployment process can be broken down into three stages:

- Start a database migration job. This is the part that directly involves Portworx, which takes a PVC from a running MongoDB instance and moves it to a staging cluster. Of course, we have to do some cleanup first, and thanks to an open-source webhook tool, the validation logic is clean (no sleep timers here, which is the first hint that I didn’t code this).

- The staging environment gets a new copy of the MongoDB manifest. In this demo, it’s an upgrade. Once that is complete, you can perform integration tests. This is where you get to use your imagination: If you could run any bit of code against a full application stack to ensure it runs correctly, what would you do?

- Provided the above step works, roll the changes into production. Instead of using a simple declarative process, use a canary deployment that monitors a set of Prometheus metrics to ensure that the application stays stable throughout the deployment, and rolls back if it does not.
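The decision behind the canary step can be sketched in a few lines of Python. This is an illustration only, not how Armory evaluates metrics. It assumes the error-rate samples have already been fetched from Prometheus, and the function name, threshold, and minimum sample count are hypothetical values chosen for the example.

```python
def canary_decision(samples, max_error_rate=0.01, min_samples=3):
    """Decide what to do with a canary given error-rate samples.

    samples is a list of error rates (0.0 to 1.0), oldest first, assumed
    to come from a metrics query made elsewhere. The threshold and the
    minimum sample count are hypothetical defaults.
    """
    # Any sample over the threshold is treated as instability.
    if any(rate > max_error_rate for rate in samples):
        return "rollback"
    # Not enough healthy samples yet to trust the canary.
    if len(samples) < min_samples:
        return "wait"
    return "promote"
```

A pipeline would call something like this on a timer during the rollout, widening the canary on "promote" and restoring the previous version on "rollback".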

For those who want to look through the repo we used, visit https://github.com/ccrow42/argocd.

Conclusion

If you are new to pipelines, you may be wondering what benefit the testing method above delivers over other testing methods. Having our data move as part of CD pipelines opens up a world of testing and monitoring for stateful applications. Here are some examples:

- Automated database integrity testing

- Monitoring database-specific performance metrics and automated rollback

- Integrating backups at particular checkpoints that can be triggered by the pipeline

- Developer control over the data lifecycle
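As a rough illustration of the first item, automated integrity testing, here is a minimal Python sketch that compares a source collection with its migrated copy. It is not the test from our demo. It assumes the documents have already been read from both databases and converted to plain JSON-compatible dictionaries (MongoDB types such as ObjectId would need converting first), and the function names are hypothetical.

```python
import hashlib
import json


def fingerprint(documents):
    """Return (count, digest) for an iterable of JSON-serializable dicts.

    Each document is hashed on its own and the hashes are sorted before
    being combined, so neither document order nor key order matters.
    """
    doc_hashes = sorted(
        hashlib.sha256(
            json.dumps(doc, sort_keys=True).encode("utf-8")
        ).hexdigest()
        for doc in documents
    )
    combined = hashlib.sha256("".join(doc_hashes).encode("ascii")).hexdigest()
    return len(doc_hashes), combined


def data_matches(source_docs, staging_docs):
    """True if both sides hold the same documents, in any order."""
    return fingerprint(source_docs) == fingerprint(staging_docs)
```

A pipeline step could run a check like this against staging after the migration and fail the gate when `data_matches` returns `False`.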

With Portworx, we can migrate data using the same tools we use to deploy our infrastructure as code—and achieve unprecedented agility.

This is one of my first journeys into thinking like a modern app developer. Having a tool like Armory enables testing and control that has been on my wish list for a while, and when we combine it with Portworx, we are able to integrate database changes to our stateful applications into the same pipeline that manages all other application changes.
