FlashBlade Direct Access allows dynamic, on-demand creation of Kubernetes persistent volumes (PVs) through Portworx. Pure storage administrators can now give their DevOps teams self-service FlashBlade NFS-backed PVs: a developer simply creates a persistent volume claim (PVC) in Kubernetes. Save on operational costs and increase DevOps efficiency with this “better together” integration of Pure enterprise storage and Portworx.

You may be familiar with the Portworx proxy volume feature, which allows Portworx to use a user-provided NFS filesystem and present it to pods as storage. Because that filesystem must already exist, implementing FlashBlade NFS-backed PVs this way likely requires two personas in your organization: a storage administrator who creates and exports each filesystem, and a developer who consumes it.

In comparison with Portworx proxy volumes, FlashBlade Direct Access condenses deployment of PVs to a single persona—your developers or DevOps teams. This reduces storage admin burnout and mistakes during high-churn CRUD operations (testing, CI/CD, etc.).

FlashBlade Direct Access delivers a number of advantages:

- Provides your DevOps team direct access to Pure FlashBlade, the industry’s most advanced all-flash storage solution for consolidating fast file and object data, all inside Kubernetes—and without a storage administrator necessary for every task

- Provides enterprise-grade, highly performant backing storage for critical DevOps infrastructure such as internal private registries

- Supports ReadWriteMany (RWX) volumes backed by reliable, resilient storage, so multiple pods can mount the same volume and high availability can be achieved at the container layer

- Is a valid source or target for backup/restore with PX-Backup 2.1 or higher

Your organization can realize the following benefits as a result:

- Increase DevOps efficiency: Developers no longer have to wait on your storage team for reliable and performant storage.

- Increase container infrastructure resiliency: Developers no longer place the backing storage for critical DevOps infrastructure “on a Linux VM somewhere.”

- Protect your data and give it mobility: Using PX-Backup 2.1 or higher, you can protect critical DevOps data and deploy known good data sets to new or existing environments.

A common first step after deploying a fresh OpenShift cluster is to configure the internal private registry. This provides your DevOps teams a local repository for the container images they will use for developing and testing applications. If these registries are backed by unreliable storage, are configured in ReadWriteOnce (RWO) mode in OpenShift, and require multiple personas to provision and configure the backing storage, your developer efficiency can be reduced or halted altogether.

Use FlashBlade Direct Access to increase your DevOps efficiency and stop worrying about rogue NFS servers that can bring your pipelines to a grinding halt in case of a failure. Let’s walk through how you might configure a highly available internal private registry for an OpenShift cluster using FlashBlade Direct Access.

FlashBlade Direct Access through Portworx

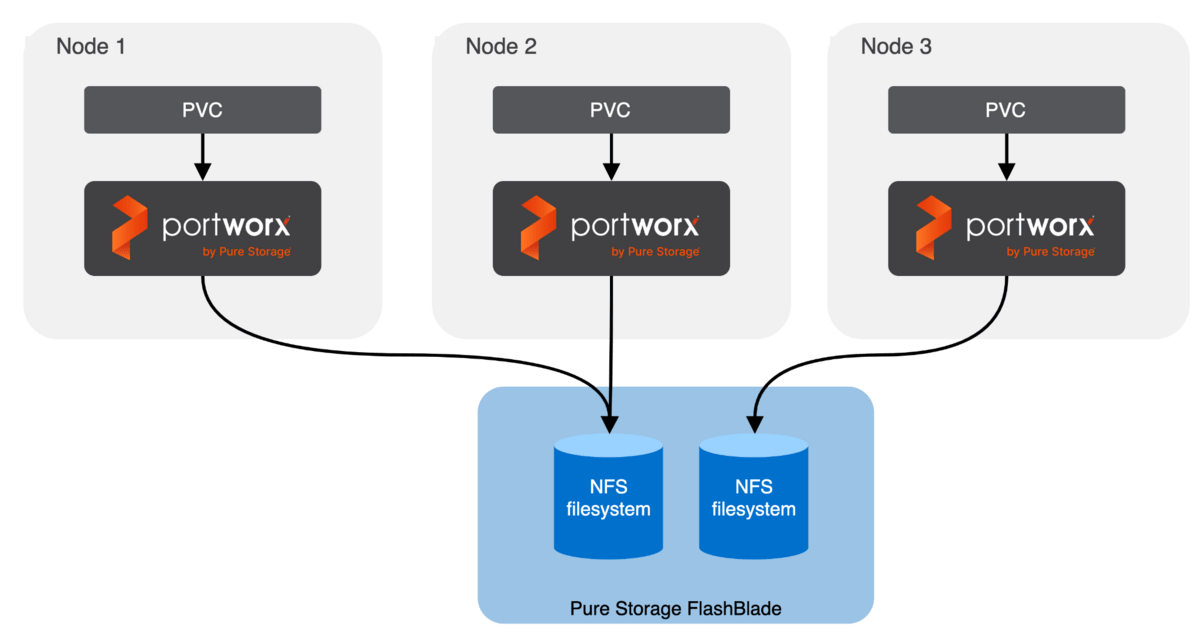

FlashBlade Direct Access allows your developers to create a PVC in OpenShift and receive a dynamically created NFS filesystem on FlashBlade for their pods to use.

There are a few prerequisites we need to be aware of when implementing FlashBlade Direct Access. Since we are installing into OpenShift, we also need to open TCP ports 17001 through 17020 on all master and worker nodes in the OpenShift cluster.
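After you open the ports, you can run a small probe from one node against another to confirm that traffic gets through. This is a minimal, hypothetical sketch, not a Portworx tool. You supply the node address, and you have to interpret the results yourself. Before Portworx is installed, nothing listens on these ports, so "refused" is the expected healthy answer because the host did respond. A "timeout" usually means a firewall is silently dropping the traffic.

```python
import socket

# Portworx port range to open on OpenShift nodes, per the prerequisite above (17001-17020 inclusive).
PX_PORTS = range(17001, 17021)


def probe(host: str, port: int, timeout: float = 1.0) -> str:
    """Classify one TCP connection attempt to host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "open"  # a process accepted the connection
    except ConnectionRefusedError:
        return "refused"  # usually: host answered, but nothing listens on this port
    except socket.timeout:
        return "timeout"  # no answer at all, often a firewall dropping packets
    except OSError:
        return "error"  # e.g. no route to host or name resolution failure


def probe_range(host: str, ports=PX_PORTS, timeout: float = 1.0) -> dict:
    """Probe each port and return a {port: status} mapping."""
    return {port: probe(host, port, timeout) for port in ports}
```

For example, probe_range("10.0.1.21") returns twenty entries for a made-up worker address. Any "timeout" or "error" value is worth investigating before you install Portworx.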

Once we have met these requirements, we need to prepare OpenShift for the Portworx installation. Portworx needs to be aware that we want to use FlashBlade Direct Access during installation, and it needs information about the FlashBlades we want to use.

To provide this information, we’ll create a file named pure.json that contains the information about our FlashBlade and then create a secret in OpenShift prior to installing Portworx. The information in the JSON file includes:

- Management Endpoint IP: This is the IP address on the FlashBlade that Portworx will interact with for API and provisioning operations.

- API Token: This is the API token generated on the FlashBlade we will use.

- NFS Endpoint IP: This is the IP address on the FlashBlade that the NFS filesystem will be exported on.

```json
{
  "FlashBlades": [
    {
      "MgmtEndPoint": "10.0.0.5",
      "APIToken": "T-74419f51-8c0e-1e42-aa34-1460a2cf80e1",
      "NFSEndPoint": "10.0.0.4"
    }
  ]
}
```
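If you manage several FlashBlades, generating pure.json from code can catch typos before you create the secret. The sketch below uses only the three keys shown in the example above. Its validation rules are our own assumptions, not checks that Portworx documents: both endpoints must parse as IP addresses, and the token must be non-empty. The helper names are hypothetical.

```python
import ipaddress
import json
from pathlib import Path


def flashblade_entry(mgmt_ip: str, api_token: str, nfs_ip: str) -> dict:
    """Build one FlashBlade entry using the keys from the pure.json example."""
    # Assumption: both endpoints are IP addresses, as in the example.
    for value in (mgmt_ip, nfs_ip):
        ipaddress.ip_address(value)  # raises ValueError if not a valid IP
    if not api_token.strip():
        raise ValueError("API token must not be empty")
    return {"MgmtEndPoint": mgmt_ip, "APIToken": api_token, "NFSEndPoint": nfs_ip}


def write_pure_json(entries: list, path: str = "pure.json") -> str:
    """Serialize the entries to pure.json and return the text written.

    The file contains API tokens, so treat it as a secret and delete it
    once the Kubernetes secret has been created.
    """
    text = json.dumps({"FlashBlades": entries}, indent=2)
    Path(path).write_text(text + "\n")
    return text
```

Usage: write_pure_json([flashblade_entry("10.0.0.5", "<api-token>", "10.0.0.4")]). Append one entry per FlashBlade to the list.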

Once we have the pure.json file created, we can log in to our OpenShift cluster and create a secret from it in the kube-system namespace named px-pure-secret. This is how Portworx will detect during installation that we want to use FlashBlade Direct Access:

```shell
oc create secret generic px-pure-secret --namespace kube-system --from-file=pure.json
```
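Before you install Portworx, it is worth confirming that the secret actually carries the file. Kubernetes stores secret values base64-encoded under data, and --from-file uses the file name (pure.json) as the key. The sketch below decodes the output of oc get secret px-pure-secret -n kube-system -o json and parses the embedded JSON. The helper names are hypothetical.

```python
import base64
import json
import subprocess


def fetch_secret_json(name: str = "px-pure-secret", namespace: str = "kube-system") -> str:
    """Return the Secret as JSON text via the oc CLI (requires a logged-in session)."""
    return subprocess.run(
        ["oc", "get", "secret", name, "-n", namespace, "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout


def decode_pure_json(secret_json: str) -> dict:
    """Extract and parse the pure.json key from a Secret object's JSON."""
    data = json.loads(secret_json).get("data", {})
    if "pure.json" not in data:
        raise KeyError("secret has no 'pure.json' key; check the --from-file argument")
    config = json.loads(base64.b64decode(data["pure.json"]))
    if not config.get("FlashBlades"):
        raise ValueError("pure.json lists no FlashBlades")
    return config
```

Against a live cluster, call decode_pure_json(fetch_secret_json()). The parsing function itself does not need cluster access.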

Now that the px-pure-secret secret that contains information about our FlashBlade is created, we can install the Portworx Enterprise Operator and create our Portworx cluster.

Install Portworx

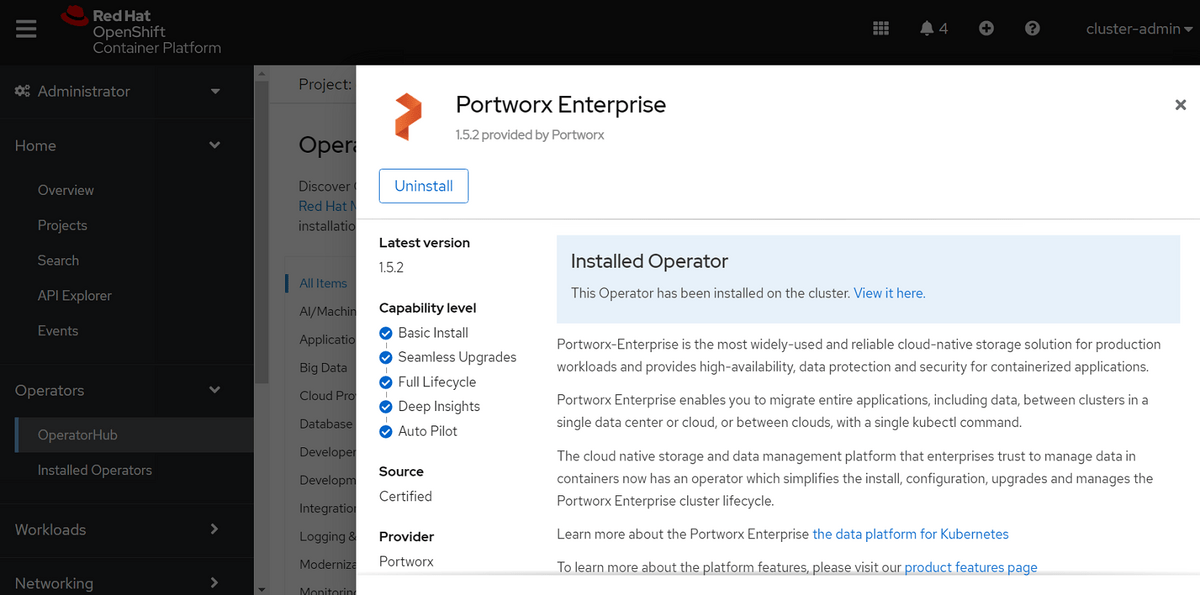

First, we need to install the Portworx Enterprise Operator in our fresh OpenShift 4.8 cluster. We’ll log in to the cluster console, navigate to Operators > OperatorHub, and then search for and install the Portworx Enterprise Operator.

Next, let’s head over to https://central.portworx.com/ and create an installation spec for PX-Enterprise. We need to make sure to do the following during spec creation:

- Select Operator as the installation method.

- Select On Premises as the storage option.

- Ensure CSI is selected in the advanced options.

- Select OpenShift 4+ in the final options.

We’ll then copy the URL of the spec and apply it to our OpenShift cluster, which will install Portworx and create our StorageCluster and StorageNodes in OpenShift:

```shell
# The spec URL is truncated here; use the full URL generated by PX-Central.
oc apply -f 'https://install.portworx.com/2.8?operator=true&mc=false&kbver=&…'
```

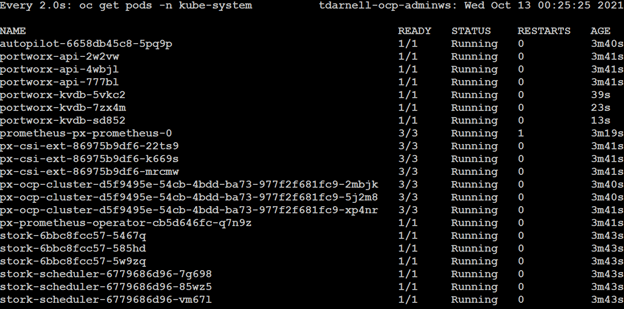

We can monitor the Portworx installation by running watch oc get pods -n kube-system and waiting until all of the pods in the kube-system namespace are ready:

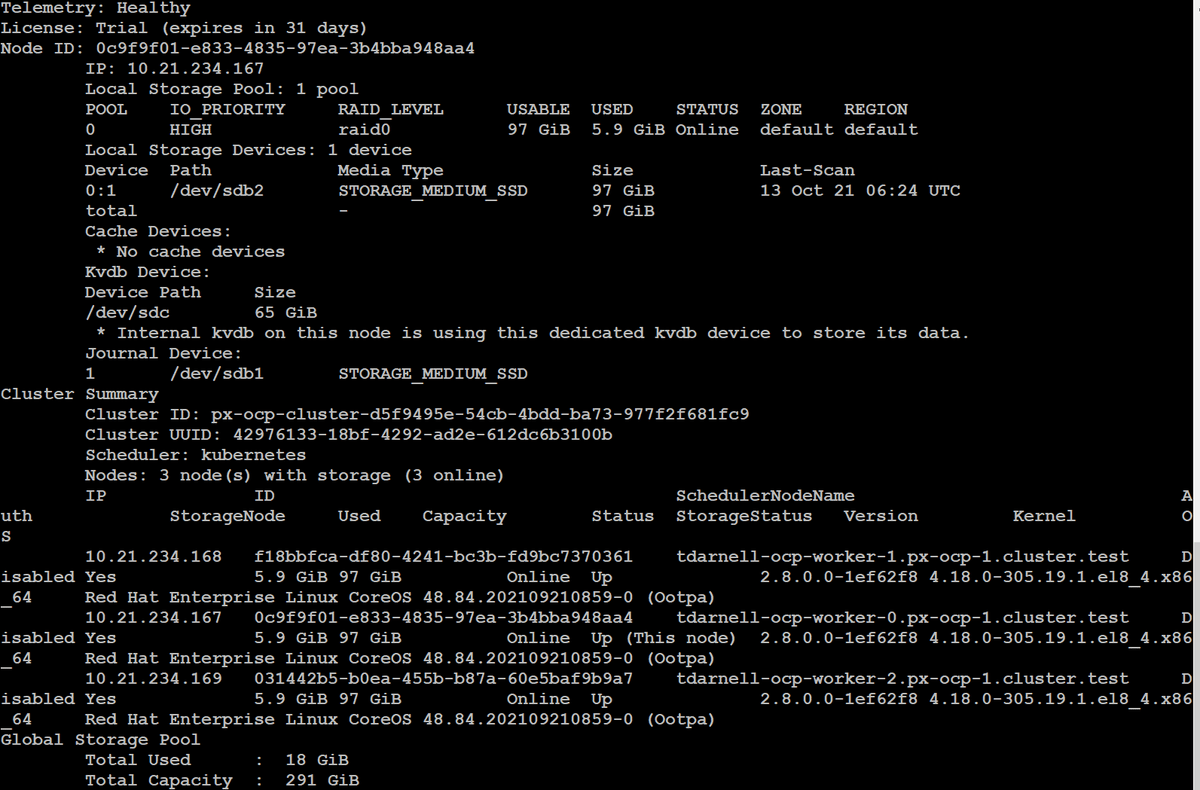
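To check readiness from a script instead of watching the screen, you can parse the output of oc get pods -n kube-system -o json. This sketch counts a pod as ready when its Ready condition is True. It skips pods in the Succeeded phase, such as completed jobs, which never become Ready. That rule is our simplification, not a Portworx-specific health check, and the function name is hypothetical.

```python
import json


def not_ready_pods(pods_json: str) -> list:
    """Return names of pods whose Ready condition is not True (completed pods are skipped)."""
    pending = []
    for pod in json.loads(pods_json).get("items", []):
        status = pod.get("status", {})
        if status.get("phase") == "Succeeded":
            continue  # finished jobs never become Ready, so ignore them
        ready = any(
            cond.get("type") == "Ready" and cond.get("status") == "True"
            for cond in status.get("conditions", [])
        )
        if not ready:
            pending.append(pod["metadata"]["name"])
    return pending
```

Poll it every few seconds until it returns an empty list. The same function works for any namespace's pod list.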

We can also verify the health of our Portworx cluster by running pxctl status on one of the OpenShift worker nodes where Portworx is installed.

Now that we have Portworx installed and it is aware we want to use the FlashBlade for Direct Access provisioning, let’s create our PV that will provide our RWX backing storage for the OpenShift internal private registry.

Provision a FlashBlade Direct Access PV

Once Portworx is installed, it is simple to create a PV through Portworx for use in OpenShift. First, we will create a StorageClass that references the Portworx provisioner and indicates that we want to use FlashBlade Direct Access.

Below is an example of the StorageClass we will create to provision our storage. Note that we are using the pxd.portworx.com provisioner. The parameters section tells Portworx that we want to use FlashBlade Direct Access (backend: "pure_file"). It also supplies any NFS export rules we want Portworx to pass to the FlashBlade for the NFS filesystem (pure_export_rules). The mountOptions section lists the NFS mount options used when the volume is mounted; in this case, we are using NFSv3 over TCP.

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: flashblade-directaccess
provisioner: pxd.portworx.com
parameters:
  backend: "pure_file"
  pure_export_rules: "*(rw)"
mountOptions:
  - nfsvers=3
  - tcp
allowVolumeExpansion: true
```
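If you prefer to generate manifests rather than hand-edit YAML, note that oc apply -f also accepts JSON. The sketch below builds the same StorageClass as a Python dictionary using only the standard library. The function name and its defaults are illustrative. The export rules and mount options should match what your FlashBlade and NFS clients support.

```python
import json


def flashblade_storage_class(name: str = "flashblade-directaccess",
                             export_rules: str = "*(rw)",
                             mount_options=("nfsvers=3", "tcp")) -> dict:
    """Mirror the StorageClass above: Portworx CSI provisioner with the pure_file backend."""
    return {
        "kind": "StorageClass",
        "apiVersion": "storage.k8s.io/v1",
        "metadata": {"name": name},
        "provisioner": "pxd.portworx.com",
        "parameters": {
            "backend": "pure_file",             # selects FlashBlade Direct Access
            "pure_export_rules": export_rules,  # NFS export rules passed to the FlashBlade
        },
        "mountOptions": list(mount_options),    # NFS mount options (NFSv3 over TCP by default)
        "allowVolumeExpansion": True,
    }


def to_manifest(obj: dict) -> str:
    """Render a manifest as JSON text suitable for oc apply -f."""
    return json.dumps(obj, indent=2)
```

Write to_manifest(flashblade_storage_class()) to a file such as storageclass.json, then apply it with oc apply -f storageclass.json.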

Now that we have the StorageClass created, we can create a PVC and get our NFS PV provisioned and ready for use to back the storage for the OpenShift internal private registry. Let’s create a 100Gi RWX PVC in the openshift-image-registry namespace, referencing the StorageClass we just created:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-registry-pvc
  namespace: openshift-image-registry
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 100Gi
  storageClassName: "flashblade-directaccess"
```
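You can generate the claim the same way. Kubernetes sizes use suffixes where Gi means 1024^3 bytes and G means 1000^3 bytes, so 100Gi works out to 107,374,182,400 bytes. The sketch therefore includes a small converter, which helps when you compare the request with what a storage UI reports. The converter is a simplified, hypothetical helper that handles only plain integer quantities. Real Kubernetes quantities also allow decimals and further suffixes.

```python
import re

# Binary (Ki..Ti) and decimal (k..T) suffixes from Kubernetes resource quantities.
_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
             "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12, "": 1}


def quantity_to_bytes(q: str) -> int:
    """Convert a simple integer quantity such as '100Gi' to bytes."""
    m = re.fullmatch(r"(\d+)(Ki|Mi|Gi|Ti|k|M|G|T)?", q)
    if not m:
        raise ValueError(f"unsupported quantity: {q!r}")
    return int(m.group(1)) * _SUFFIXES[m.group(2) or ""]


def registry_pvc(size: str = "100Gi", storage_class: str = "flashblade-directaccess") -> dict:
    """Mirror the RWX claim above for the OpenShift image registry."""
    quantity_to_bytes(size)  # fail early on a malformed size
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": "nfs-registry-pvc", "namespace": "openshift-image-registry"},
        "spec": {
            "accessModes": ["ReadWriteMany"],  # lets every registry replica mount the volume
            "resources": {"requests": {"storage": size}},
            "storageClassName": storage_class,
        },
    }
```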

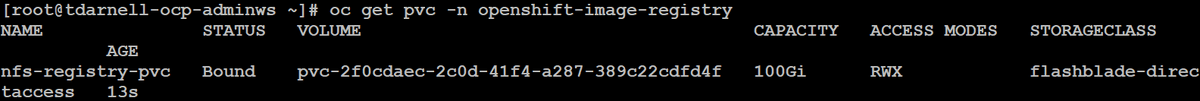

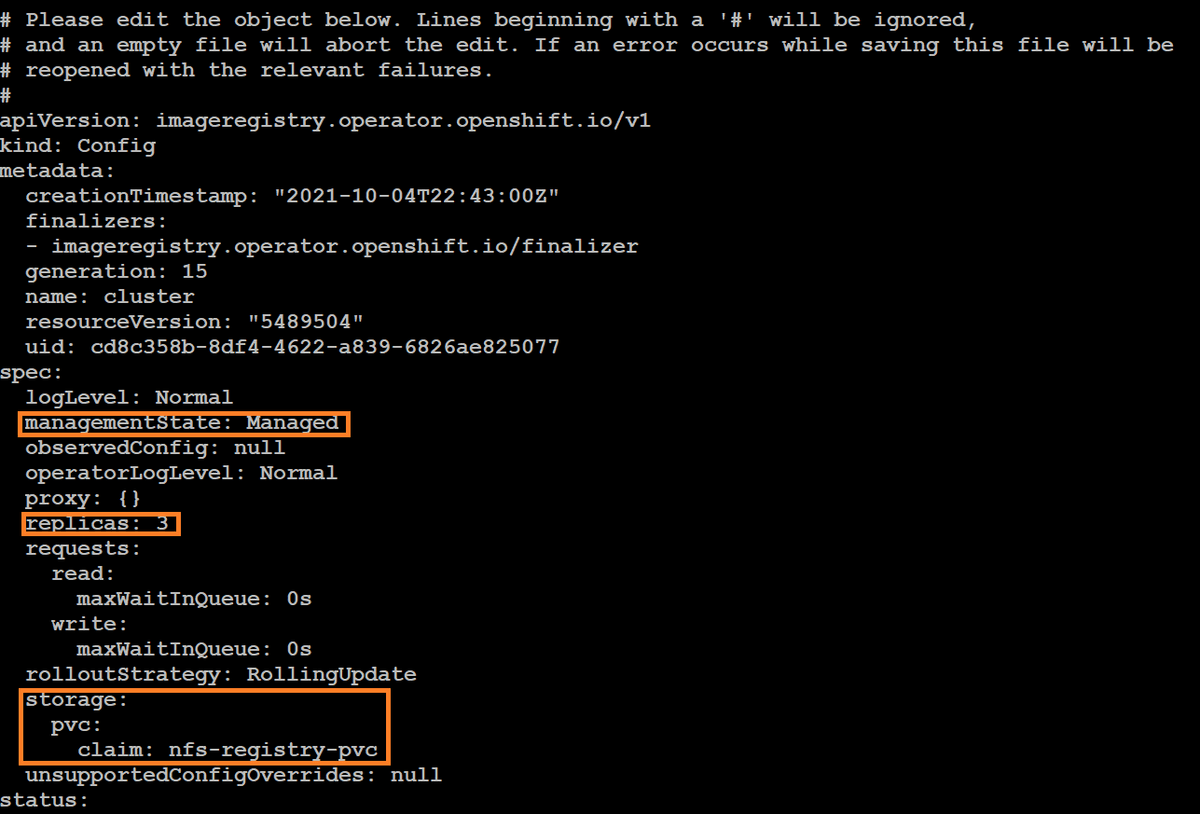

We can now issue the command oc get pvc -n openshift-image-registry to verify that we have a bound PVC that has been provisioned on the FlashBlade through Portworx:

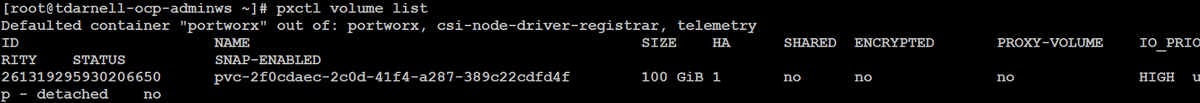
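To check the claim from a script, run oc get pvc nfs-registry-pvc -n openshift-image-registry -o json, which returns the claim's spec and status. This sketch uses a hypothetical helper name. It reports whether the claim is Bound, which StorageClass it used, its capacity, and the name of the bound PV from the spec.volumeName field. The PV name is useful when you look for the matching filesystem on the FlashBlade.

```python
import json


def pvc_summary(pvc_json: str) -> dict:
    """Summarize phase, StorageClass, bound PV, and capacity from a PVC's JSON."""
    pvc = json.loads(pvc_json)
    spec, status = pvc.get("spec", {}), pvc.get("status", {})
    return {
        "bound": status.get("phase") == "Bound",
        "storage_class": spec.get("storageClassName"),
        "volume": spec.get("volumeName"),  # PV name, set once the claim is bound
        "capacity": status.get("capacity", {}).get("storage"),
    }
```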

And we can also check the volume in Portworx by issuing the command pxctl volume list from one of the worker nodes:

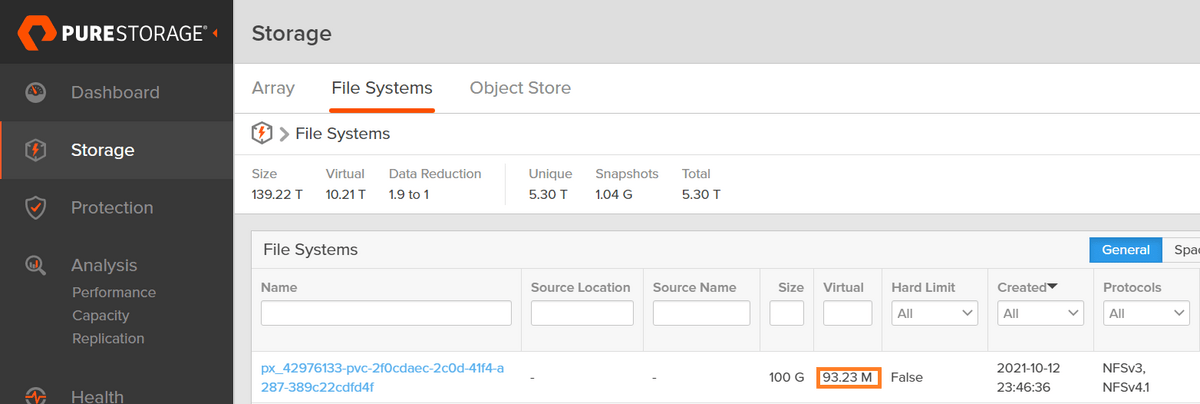

We can look at the resulting filesystem by logging in to our FlashBlade and sorting by creation date to find our NFS filesystem. There is a 100G filesystem with zero bytes consumed. The PV ID is appended to the filesystem name, so we can easily map the filesystem in the FlashBlade UI back to its PV in OpenShift.
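Because the PV ID is appended to the filesystem name, you can automate the lookup with a suffix match. The sketch below assumes you already have a list of filesystem names, for example copied from the FlashBlade UI. How you obtain that list is outside its scope, and the example names in the test are made up. The sketch relies only on the suffix, not on any particular prefix format.

```python
def filesystems_for_pv(pv_name: str, filesystem_names: list) -> list:
    """Return FlashBlade filesystem names that end with the given PV name.

    Assumption: the PV name appears as a suffix of the filesystem name,
    as described above; the prefix format is not relied upon.
    """
    return [fs for fs in filesystem_names if fs.endswith(pv_name)]
```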

Okay, we have verified that OpenShift, Portworx, and our FlashBlade are all healthy, and we have a provisioned PV to use for the OpenShift internal private registry—so let’s configure it!

Configure and Scale the OpenShift Internal Private Registry

Now that we have resilient and reliable backing storage presented to the OpenShift cluster, we can configure the internal private registry to use it and scale the registry to be highly available.

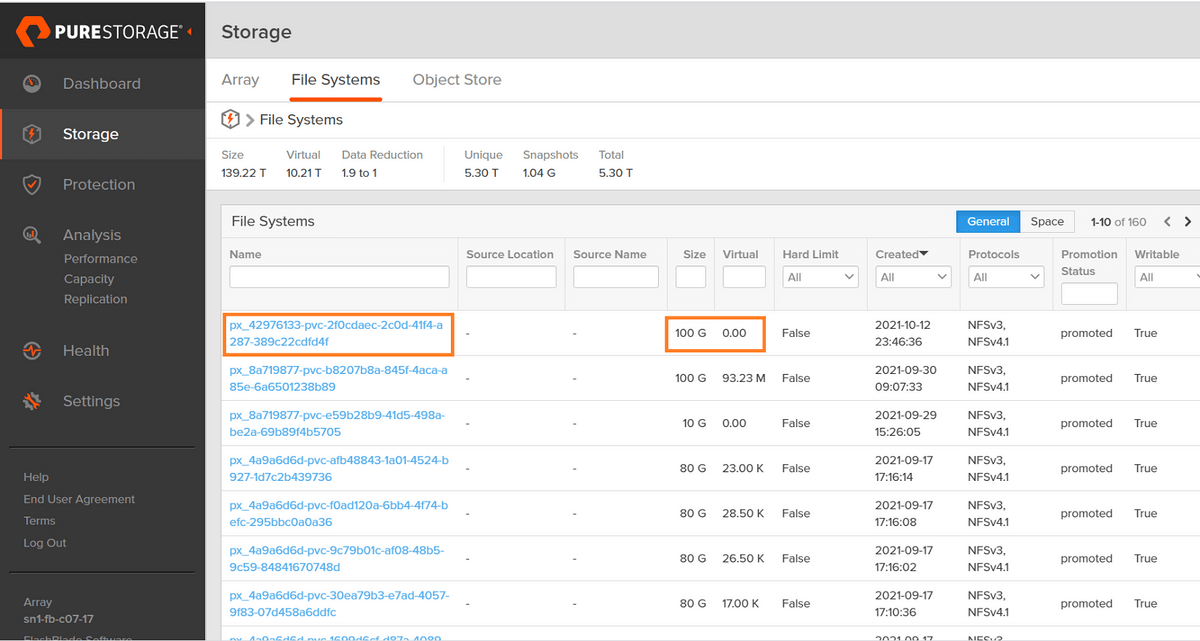

All we need to do to configure the registry is modify the operator config by issuing the command oc edit configs.imageregistry.operator.openshift.io/cluster, add our storage configuration using our PVC name, modify the number of replicas to three to ensure the registry is highly available, and set the state to Managed:

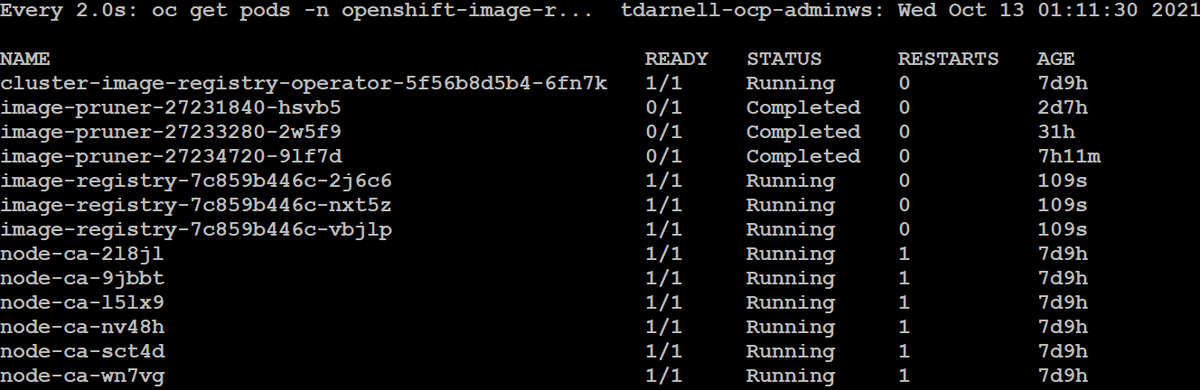
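You can make the same change non-interactively with oc patch and a merge patch, which is easier to keep in source control than an interactive edit. The sketch below builds that patch and the argument list for subprocess. The spec fields it sets (managementState, replicas, storage.pvc.claim) are the image registry operator's configuration fields. The helper names are hypothetical.

```python
import json
import subprocess

REGISTRY_CONFIG = "configs.imageregistry.operator.openshift.io/cluster"


def registry_storage_patch(claim: str = "nfs-registry-pvc", replicas: int = 3) -> dict:
    """Merge patch: back the registry with the PVC, run 3 replicas, let the operator manage it."""
    return {
        "spec": {
            "managementState": "Managed",
            "replicas": replicas,
            "storage": {"pvc": {"claim": claim}},
        }
    }


def oc_patch_args(patch: dict) -> list:
    """Arguments for: oc patch <registry config> --type merge -p <json>."""
    return ["oc", "patch", REGISTRY_CONFIG, "--type", "merge", "-p", json.dumps(patch)]


def apply_patch(patch: dict) -> None:
    """Run the patch (requires a logged-in oc session with cluster-admin rights)."""
    subprocess.run(oc_patch_args(patch), check=True)
```

Another storage type, such as emptyDir, may already be configured. In that case, add "emptyDir": None next to "pvc" in the storage dictionary. JSON merge patch deletes keys whose value is null, so this removes the old storage setting.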

We can monitor the status of the image registry pods by issuing the command watch oc get pods -n openshift-image-registry and wait for all of the pods to become ready:

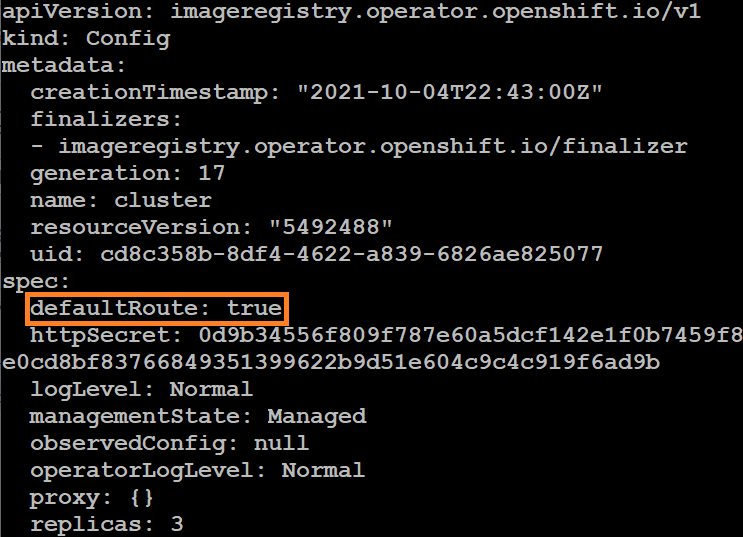

To use the registry from outside the cluster, we need to expose its service with a route. We can do this by editing the image registry operator config again and adding the defaultRoute: true key-value pair to the spec:

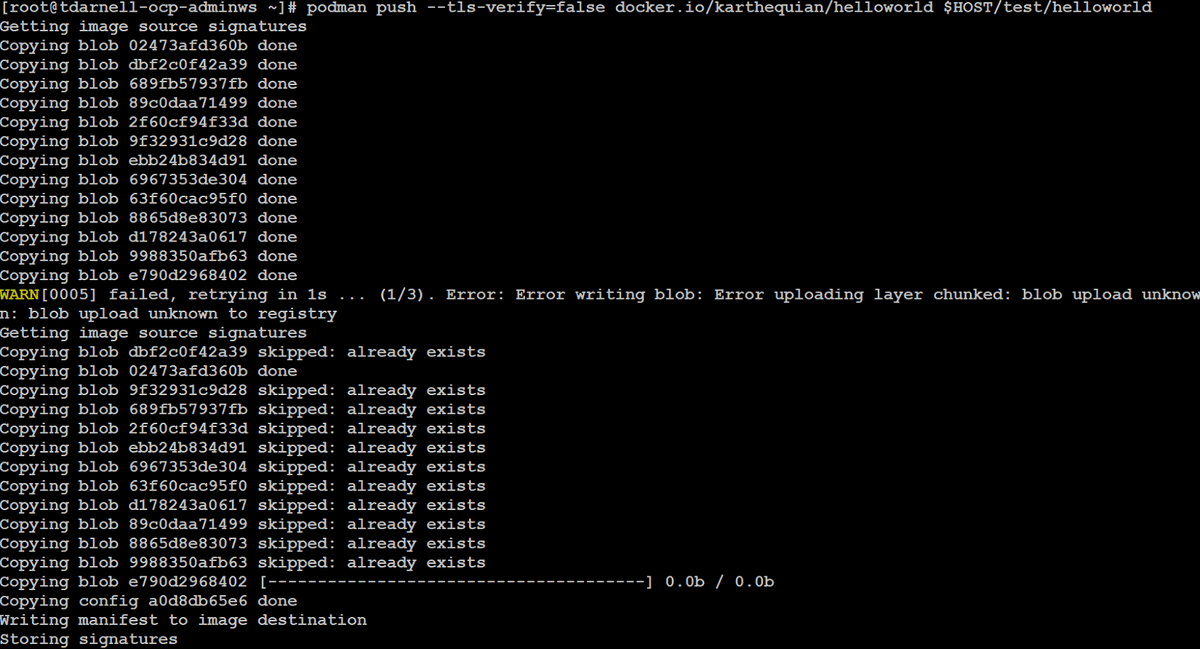
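You can also enable the route non-interactively with oc patch, using a merge patch that sets only spec.defaultRoute. This is a minimal sketch with hypothetical helper names.

```python
import json
import subprocess

REGISTRY_CONFIG = "configs.imageregistry.operator.openshift.io/cluster"


def default_route_args() -> list:
    """oc patch arguments that set spec.defaultRoute to true on the registry operator config."""
    patch = {"spec": {"defaultRoute": True}}
    return ["oc", "patch", REGISTRY_CONFIG, "--type", "merge", "-p", json.dumps(patch)]


def enable_default_route() -> None:
    """Apply the patch (requires a logged-in oc session with cluster-admin rights)."""
    subprocess.run(default_route_args(), check=True)
```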

Now that we have exposed our registry, we can get its hostname with oc get route default-route -n openshift-image-registry, and then use podman to pull, tag, and push a simple helloworld container image to it.
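The route's hostname is in the spec.host field of oc get route default-route -n openshift-image-registry -o json. The sketch below extracts that hostname and produces the podman commands for the login, pull, tag, and push sequence. Internal registry image references take the form host/project/image:tag. Several values are assumptions for illustration only: the source image (docker.io/library/hello-world), the target project (demo), the kubeadmin user, and --tls-verify=false for when the router certificate is not trusted locally.

```python
import json


def route_host(route_json: str) -> str:
    """Return spec.host from the JSON of the default-route Route object."""
    return json.loads(route_json)["spec"]["host"]


def podman_commands(host: str, project: str = "demo", user: str = "kubeadmin",
                    source: str = "docker.io/library/hello-world:latest",
                    insecure: bool = True) -> list:
    """Shell command strings to log in, then pull, tag, and push a test image.

    Hypothetical defaults: the 'demo' project must already exist (oc new-project demo),
    and insecure=True adds --tls-verify=false for an untrusted router certificate.
    """
    tls = " --tls-verify=false" if insecure else ""
    # Internal registry references take the form <host>/<project>/<image>:<tag>.
    target = f"{host}/{project}/hello-world:latest"
    return [
        f"oc whoami -t | podman login -u {user} --password-stdin{tls} {host}",
        f"podman pull {source}",
        f"podman tag {source} {target}",
        f"podman push{tls} {target}",
    ]
```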

If we go back to our FlashBlade UI, we should see data on our filesystem equal to the size of the container image we just pushed.

That’s it! We now have a highly available OpenShift internal private registry backed by Pure FlashBlade and presented through Portworx!

In conclusion, FlashBlade Direct Access increases your DevOps efficiency and container infrastructure resiliency by ensuring your developers are not waiting on your storage team and by providing resilient and reliable backing storage for critical DevOps infrastructure components.

Check out a demo of us configuring FlashBlade Direct Access for a highly available OpenShift internal private registry here:

Tim Darnell

Tim is a Principal Technical Marketing Manager within the Cloud Native Business Unit at Pure Storage. He has held a variety of roles in the two decades spanning his technology career, most recently as a Product Owner and Master Solutions Architect for converged and hyper-converged infrastructure targeted for virtualization and container-based workloads. Tim joined Pure Storage in October of 2021.