We recently announced PX-Fast, a feature in Portworx Enterprise 2.12 that lets you take advantage of the blazing speeds and low latencies of NVMe (Non-Volatile Memory Express) devices inside Kubernetes. In our testing, we’ve hit over a million IOPS on a single worker node, cut latency by more than half, and more than doubled transactions per second (TPS) in pgbench testing!

But what are these NVMe devices, and how do they compare to rotational HDDs (Hard Disk Drives) and SSDs (Solid State Drives)? How do they really improve performance for containerized applications and the Kubernetes storage those applications use? And why is this such a big deal when using an enterprise-grade cloud native storage solution like Portworx Enterprise with PX-Fast enabled?

Let’s dig into these questions together, look at how Kubernetes storage works, and see how the many features within Portworx Enterprise can take advantage of NVMe and create a better experience for you when using storage within Kubernetes.

What Is NVMe and How Does It Compare to SSDs and Rotational HDDs?

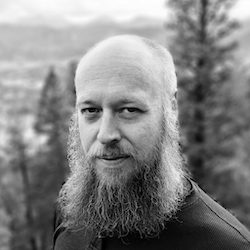

Before we dig too deep, let’s discuss the infrastructure architectures that organizations primarily use today. Many deployments these days use hyper-converged server architectures, which rely on local storage devices in each server. This provides compute and storage in a single box, compared to the older (but still used) converged infrastructure designs where compute and storage were separate entities that worked together. Even cloud instances follow this hyper-converged model these days – you can attach local storage to your instances with the IOPS, throughput, and capacity capabilities that fit your needs.

Rotational Hard Disk Drives – The Long-Time Standard

In the “good old days”, we didn’t have many choices in terms of local storage – you were basically limited to choosing from 5.4k, 7.2k, 10k, or 15k RPM rotational disks, where the faster the platters spun, the more IOPS the drive could deliver. Throughput depended on the drive’s seek time and on how the data was written to sectors and blocks on the rotating platters.
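To put rough numbers on that relationship, here is a minimal Python sketch. On average the head waits half a revolution for the right sector, so rotational latency follows directly from RPM. The seek times are illustrative assumptions rather than measurements, and the one-I/O-at-a-time IOPS estimate ignores caching and command queuing.

```python
def avg_rotational_latency_ms(rpm: int) -> float:
    """Average wait for the target sector: half of one full revolution."""
    ms_per_revolution = 60_000 / rpm
    return ms_per_revolution / 2


def estimated_random_iops(rpm: int, avg_seek_ms: float) -> float:
    """Rough random IOPS: one I/O per (seek time + rotational latency)."""
    service_time_ms = avg_seek_ms + avg_rotational_latency_ms(rpm)
    return 1000 / service_time_ms


# Seek times are assumed values for illustration only.
ASSUMED_SEEK_MS = {5400: 12.0, 7200: 9.0, 10000: 5.0, 15000: 3.5}

for rpm, seek_ms in ASSUMED_SEEK_MS.items():
    latency = avg_rotational_latency_ms(rpm)
    iops = estimated_random_iops(rpm, seek_ms)
    print(f"{rpm:>6} RPM: {latency:.2f} ms rotational latency, ~{iops:.0f} random IOPS")
```

Even under generous assumptions, a single spindle lands in the range of tens to a couple of hundred random IOPS, which is why so many disks had to be pooled together.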

Rotational storage was the standard for decades – but was limited in terms of performance because (most) disk drives only had one actuator to move the heads that could read from or write to the spinning platters inside the disk drive. Large caches within hard drives helped with increasing performance, but spinning disks were ultimately limited by the laws of physics and by how quickly the mechanical components of the drive could operate, which in turn limited our local and Storage Area Network (SAN) performance.

Enter Solid State Drives – From Horse to Automobile

Henry Ford is often quoted as saying that if he had asked people what they wanted, they would have said “faster horses” instead of a new technology like the automobile – we all know how that worked out and how much of a game-changer the Ford Model T was! I like to compare that to how SSDs changed the game for storage compared to spinning disk – there was really no comparison in terms of reliability, simplicity, and performance. SSDs are based on memory technology that we had used for many years in our desktops and servers, but with a slight twist in terms of how the transistors are used.

Instead of the memory being volatile and getting erased when the host machine was powered off, SSDs built on NAND (Not-AND logic gate) flash technology could persistently store the state of the memory even when power was not applied, which gave us super fast flash memory that could be used as local storage in our desktops and servers! This brought huge increases in IOPS and throughput for local storage, and brought latencies down from the milliseconds that spinning disk provided into the microseconds range. Applications and operating systems benefited from this – if you are old enough to remember your first OS boot after upgrading from a hard disk drive to an SSD, you can relate to how huge this improvement was!

Conveniently, SSDs used the same type of SATA interface that modern spinning disks used – making it easy to swap out spinning disks for SSDs – but that interface also kept the flash-based storage from reaching its full capabilities.

NVMe – Getting Power to All Four Tires

To stick with our car/transportation analogy, while SSDs allowed us to go really fast in a straight line, they were like a very powerful rear-wheel drive sports car – you had a ton of potential energy to transfer to the ground, but could only get a limited amount of it to the ground via two tires – and if you wanted to drive fast around curves, forget it.

NVMe drives bring an interface based on PCIe instead of SATA, enabling more bandwidth down to the flash memory and allowing the capabilities of the flash memory to be more fully utilized – similar to a modern all-wheel drive sports car that can transfer power to the ground with all four tires instead of two. This lets latencies drop yet again, from the roughly hundred-microsecond range typical of SATA SSDs down to tens of microseconds with NVMe devices. Not only do latencies improve, but with PCIe offering over 25 times the data transfer capability of SATA (depending on the PCIe generation and lane count), IOPS and throughput to and from the flash memory improve considerably as well.
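For a back-of-the-envelope view of the interface gap, the sketch below computes usable bandwidth from the published line rates and encoding overheads of SATA III and PCIe. The link widths are assumptions chosen for illustration; many NVMe drives use a four-lane (x4) link, so the multiple you actually see depends on the PCIe generation and lane count.

```python
def usable_mb_per_s(line_rate_gbps: float, payload_bits: int,
                    encoded_bits: int, lanes: int = 1) -> float:
    """Usable bandwidth in MB/s after subtracting line-encoding overhead."""
    usable_gbps = line_rate_gbps * payload_bits / encoded_bits * lanes
    return usable_gbps * 1000 / 8  # Gbit/s -> MB/s


# SATA III: 6 Gbit/s line rate with 8b/10b encoding.
sata3 = usable_mb_per_s(6.0, 8, 10)

# PCIe 3.0: 8 GT/s per lane; PCIe 4.0: 16 GT/s per lane; both use 128b/130b.
links = {
    "PCIe 3.0 x4": usable_mb_per_s(8.0, 128, 130, lanes=4),
    "PCIe 4.0 x4": usable_mb_per_s(16.0, 128, 130, lanes=4),
    "PCIe 3.0 x16": usable_mb_per_s(8.0, 128, 130, lanes=16),
}

print(f"SATA III: {sata3:.0f} MB/s")
for name, bandwidth in links.items():
    print(f"{name}: {bandwidth:.0f} MB/s ({bandwidth / sata3:.1f}x SATA III)")
```

Under these assumptions, a figure above 25x corresponds to a wide link such as PCIe 3.0 x16, while a single x4 drive sees a smaller but still substantial multiple.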

What Does This Mean for Containers and Kubernetes?

While raw device performance is important, we also need to ensure that the applications using it can take advantage of the performance benefits of these devices. The hardware<->software interface has always been a challenge to make as efficient as possible – if you had software that was limiting the performance of the hardware, then the cost and performance benefits dwindled to negligible returns. Ensuring low overhead at the software layer via device drivers or software defined storage solutions is critical to ensure that you are able to maximize your investment and reap all of the benefits that hardware like NVMe devices can provide.

Another area of consideration is the growth of hardware sharing across multiple applications. When we moved from bare metal to virtualization, we saw bottlenecks pop up everywhere in our hardware architectures, especially around storage devices. A single disk or a RAID1 mirror could no longer support the IOPS and throughput requirements once multiple virtual machines were accessing our storage layer – we had to group and pool multiple disks (sometimes tens of disks) in our SANs or hyper-converged servers so that those virtual machines, and the applications they hosted, could function properly and perform well.

While virtualization forced us to update our methodologies for storage infrastructure design, it was nothing compared to the churn that we see with containerization, especially when an orchestrator like Kubernetes is introduced into the environment. Whereas we could see tens or hundreds of virtual disks provisioned on a single datastore when virtualizing applications, we can now see thousands of volumes provisioned in a Kubernetes cluster – and once you factor in the Create/Read/Update/Delete (CRUD) churn that Kubernetes can create, the problem compounds quickly.

Having an efficient, low-overhead storage layer with Portworx Enterprise and backing your cloud native storage solution for Kubernetes storage with high-bandwidth and extremely performant NVMe devices can ensure that your applications are rarely if ever starved at the storage layer in terms of IOPS and throughput. This, combined with the ultra low latencies provided from NVMe devices, ensures that the volume provisioning and I/O utilization for the applications running on Kubernetes can operate at high speeds and at massive scale.

Not Everyone Runs All-NVMe – Now What? Storage Pools and Tiering!

While server manufacturers are packing high densities of NVMe devices into servers these days, and you can create cloud instances with all-NVMe backed storage, these can be cost-prohibitive and a waste of resources if you don’t need all of that power from a storage perspective. Or, you might have drives or even external storage arrays whose storage you still want to use in your Kubernetes environment to maximize your investment and extend your equipment lifecycle.

But let’s face it – not all infrastructure designs are going to be all-NVMe. At the peak of converged infrastructure days, storage vendors provided the capability to have different types of disk or flash available that could be pooled and then volumes created from those pools to provide different capabilities – whether it be high IOPS, throughput, capacity, or other capabilities. When exposed at the virtualization layer, we could have different datastores created from these pools to run virtual machines on, and even individual disks created across multiple datastores for a single virtual machine that required multiple specific capabilities.

For example, we could have the virtual machine operating system disk backed by 15k spinning disk storage, a separate virtual disk presented to the virtual machine for application storage backed by SSD, and a separate virtual disk presented to the virtual machine backed by 7.2k spinning disk for data archive purposes. Some virtualization solutions even give us the capability to create policies that map to pools that have specific capabilities based on the backing storage devices, and use those policies when provisioning virtual disks.

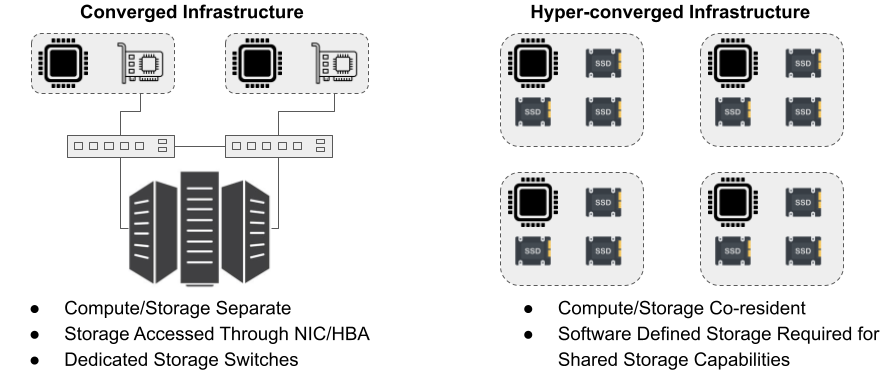

We can do this for Kubernetes storage and the volumes we provision to containers as well – regardless of whether we are running on-premises or in the public cloud! As a cloud native storage solution for Kubernetes storage, Portworx Enterprise allows you to present disparate disk types to your worker nodes, create pools from those disks, and then provision Kubernetes persistent volumes for containers to use.

To do this, you could have a certain number of NVMe disks, SSDs, and spinning disks in each of your worker nodes, create different pools in Portworx using them as backing storage, and then create individual StorageClasses for containers to use when mounting persistent volumes. You could even add some disks from an external storage array, create a pool in Portworx for those disks, and end up with a combined converged and hyper-converged setup for your Kubernetes storage. In the public cloud, you could provision a mix of io1/io2, gp2/gp3, and st1 EBS volumes to your EKS worker node cloud instances, with each volume having different IOPS/throughput capabilities, and create the same type of tiered configuration.
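One way to picture the tiering decision is as a lookup from a workload’s requirement to the cheapest tier that satisfies it. The Python sketch below is only an illustration: the StorageClass names, per-volume IOPS ceilings, and cost ranks are all hypothetical, and nothing here calls a Portworx or Kubernetes API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Tier:
    storage_class: str    # hypothetical StorageClass name
    backing: str          # device type behind the pool
    max_volume_iops: int  # illustrative per-volume ceiling
    relative_cost: int    # 1 = cheapest


# Every value below is an assumption for illustration.
TIERS = [
    Tier("px-hdd-archive", "spinning disk", 200, 1),
    Tier("px-ssd-general", "SSD", 20_000, 2),
    Tier("px-nvme-fast", "NVMe", 200_000, 3),
]


def pick_tier(required_iops: int) -> Optional[Tier]:
    """Return the cheapest tier whose ceiling covers the requirement."""
    for tier in sorted(TIERS, key=lambda t: t.relative_cost):
        if tier.max_volume_iops >= required_iops:
            return tier
    return None  # no tier can satisfy the request


for need in (100, 5_000, 150_000):
    tier = pick_tier(need)
    print(f"{need:>7} IOPS -> {tier.storage_class} ({tier.backing})")
```

In a real cluster the application owner would simply name the StorageClass in the PersistentVolumeClaim; the point of the sketch is that each class stands for a pool with known capabilities.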

What Other Benefits Can Storage Tiering Bring?

Besides configuring for specific IOPS and throughput capabilities, proper design of storage tiering can lower cost and increase efficiencies by right-sizing the storage that is needed – especially when using NVMe drives or high-performance volume types in cloud environments. One of the big challenges we see when using rotational HDDs or even SSDs at times is that several disks must be used to achieve performance requirements, yet when pooled, are oversized for capacity.

For example, let’s say you need a total of 1,000 IOPS for performance, but only 1TB of capacity. If the disks you are using can only support 100 IOPS each but are 1TB in size, to reach your IOPS requirement you would need 10 disks – which means that you are provisioning 10TB of disks when you only need 1TB! This just brings forward the existing problem from older converged infrastructure designs of needing massive numbers of disks to meet performance requirements and having lots of wasted capacity – you really want to shrink that performance:capacity ratio in order to run your infrastructure efficiently and reduce spend on storage.
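The arithmetic in that example can be written out as a short Python sketch. The function name is made up for illustration, and the 500,000 IOPS figure used for a single NVMe device in the second call is an assumption, not a measured value.

```python
import math
from typing import Tuple


def size_pool(required_iops: int, required_tb: int,
              iops_per_disk: int, tb_per_disk: int) -> Tuple[int, int, int]:
    """Return (disks needed, TB provisioned, TB beyond the capacity requirement)."""
    disks_for_iops = math.ceil(required_iops / iops_per_disk)
    disks_for_capacity = math.ceil(required_tb / tb_per_disk)
    disks = max(disks_for_iops, disks_for_capacity)
    provisioned_tb = disks * tb_per_disk
    return disks, provisioned_tb, provisioned_tb - required_tb


# The example from the text: 1,000 IOPS and 1TB on 100-IOPS, 1TB disks.
print(size_pool(1000, 1, 100, 1))      # (10, 10, 9)

# Same requirement on one hypothetical 1TB NVMe device rated at 500,000 IOPS.
print(size_pool(1000, 1, 500_000, 1))  # (1, 1, 0)
```

The disk count is driven by whichever requirement is harder to meet, so any capacity beyond the requirement is paid for purely to reach the IOPS target.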

You can shrink this performance:capacity ratio with features like PX-Fast in Portworx Enterprise, which add very little overhead so that you get as much performance as possible out of storage devices like NVMe drives. You can take advantage of storage pooling in addition to PX-Fast to truly right-size what you need in terms of IOPS, throughput, and latency while avoiding overprovisioning of capacity – and then create storage pools on non-NVMe backing devices for containers that have different or lesser storage requirements. Provisioning the persistent volumes for your containers is also made simple by creating Kubernetes StorageClasses that map back to specific Portworx pools – creating the policy/spec-driven provisioning and lifecycle experience that we’ve become accustomed to in virtualization environments, but all native to Kubernetes!

Portworx Brings Even More Enterprise Features to Kubernetes Storage and NVMe Infrastructure

While providing access to underlying storage with very little overhead is one of the core benefits of Portworx Enterprise, we’ve also worked to enhance the Kubernetes storage experience beyond the near-native NVMe performance you can achieve with PX-Fast and storage pooling. When used together with PX-Fast, features like Portworx I/O Profiles and Portworx Application I/O Control can provide even better application performance and allow you to fine-tune cost and performance in both on-premises and cloud environments.

Portworx I/O Profiles

Portworx I/O Profiles allow you to increase the performance of your application even further by optimizing the way that Portworx interacts with the underlying backing disks within a Portworx storage pool. Our db I/O profile batches flush operations when using databases, our db_remote profile coalesces multiple sync operations into a single sync when the volume is configured for 2 or more replicas, and our sequential profile optimizes the read-ahead algorithm to increase performance for sequential I/O patterns.
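To see why batching flushes helps, consider the toy Python model below. It is a generic illustration of the batching idea and not how Portworx implements the db profile; the class name and `batch_size` parameter are hypothetical, and a real storage layer must still honor the application’s durability guarantees, which this toy ignores.

```python
class BatchedFlusher:
    """Toy write path that flushes once per batch instead of once per write."""

    def __init__(self, batch_size: int) -> None:
        self.batch_size = batch_size
        self.pending = 0   # writes accepted since the last flush
        self.flushes = 0   # expensive flush operations issued so far

    def write(self) -> None:
        self.pending += 1
        if self.pending >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        if self.pending:
            self.flushes += 1
            self.pending = 0


def flushes_needed(writes: int, batch_size: int) -> int:
    """Count the flushes issued for a given number of writes."""
    flusher = BatchedFlusher(batch_size)
    for _ in range(writes):
        flusher.write()
    flusher.flush()  # drain any partial batch
    return flusher.flushes


print(flushes_needed(1000, 1))   # 1000: one flush per write
print(flushes_needed(1000, 32))  # 32: 31 full batches plus one partial batch
```

Fewer flushes means fewer round trips to the backing device, which is where the performance gain in this model comes from.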

Don’t know what your application does? No problem – if you use our autoprofile, Portworx will automatically detect the type of I/O your application is generating and set the appropriate profile! Check out our documentation about I/O Profiles to learn how to set the profile parameter in a StorageClass, or how to use our pxctl utility to set it on an existing volume.

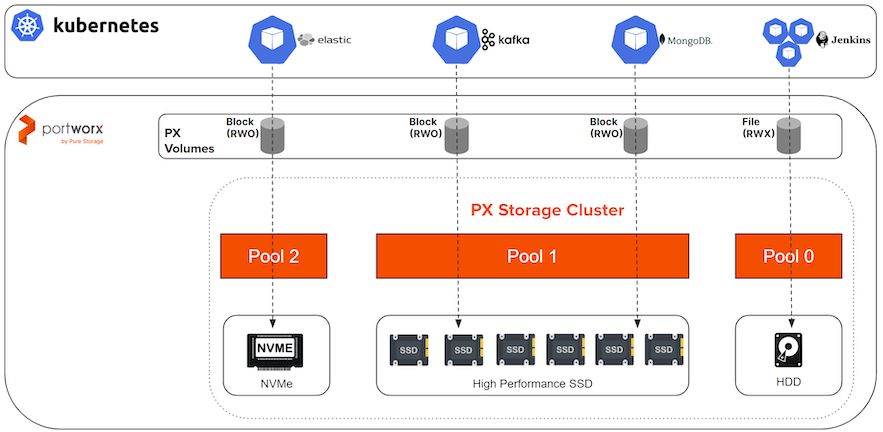

Portworx Application I/O Control

Portworx Application I/O Control allows you to set IOPS or throughput limits on specific volumes. This is valuable when you have a noisy neighbor situation, where a specific application is consuming the majority of the IOPS or bandwidth from a Portworx storage pool.

You can also use this feature to carve up resources from a pool for a certain number of applications – this can be extremely useful in conjunction with PX-Fast and NVMe drives. If you have multiple applications running on a Portworx pool backed by an NVMe device and you want to assign the volume used by each of them 20,000 IOPS or 200 MB/s of throughput, it’s as simple as configuring a StorageClass parameter when provisioning the volumes, or using our pxctl utility to modify an existing volume. In cloud environments, this helps you right-size instance volumes, control storage costs, and fine-tune your requirements and usage of those volumes!
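As a rough way to reason about carving up a pool, the Python sketch below treats each per-volume limit as a draw against what the pool can deliver. The pool capability of 1,000,000 IOPS is a hypothetical figure for illustration, and the function names are not part of any Portworx API.

```python
from typing import Sequence


def volumes_within_budget(pool_iops: int, per_volume_limit: int) -> int:
    """Number of volumes that can all hit their IOPS limit at the same time."""
    return pool_iops // per_volume_limit


def is_oversubscribed(pool_iops: int, volume_limits: Sequence[int]) -> bool:
    """True if the limits add up to more than the pool can deliver."""
    return sum(volume_limits) > pool_iops


POOL_IOPS = 1_000_000  # hypothetical capability of an NVMe-backed pool
LIMIT = 20_000         # per-volume IOPS limit from the example above

print(volumes_within_budget(POOL_IOPS, LIMIT))     # 50
print(is_oversubscribed(POOL_IOPS, [LIMIT] * 60))  # True
```

Whether to allow some oversubscription is a design choice: if volumes rarely peak together, the limits mainly serve to contain a noisy neighbor rather than to reserve capacity.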

To learn more about this feature, check out our documentation to see how easy it is to set IOPS or throughput limits on the volumes you provision to your containers when using Portworx Enterprise.

Put It All Together and Test It Out!

We’ve talked about the differences between spinning disk, SSDs, and NVMe devices – and how NVMe devices can be beneficial to Kubernetes environments. We’ve also covered how storage pooling with Portworx enables you to perform storage tiering and right-size your storage infrastructure for operational and cost efficiencies – regardless of whether you are running on-premises or in the public cloud! Finally, we’ve covered a few Portworx features that, when combined with PX-Fast and NVMe infrastructure, can help drive even more efficiency and performance at the cloud native storage layer, and bring enterprise functionality to your Kubernetes storage architectures.

Make sure to check out our video and blog post on PX-Fast and NVMe, and if you’d like to learn more and try out a free 30-day trial of Portworx Enterprise, visit https://central.portworx.com and get started enjoying enterprise storage functionality within Kubernetes today!