What is application modernization?

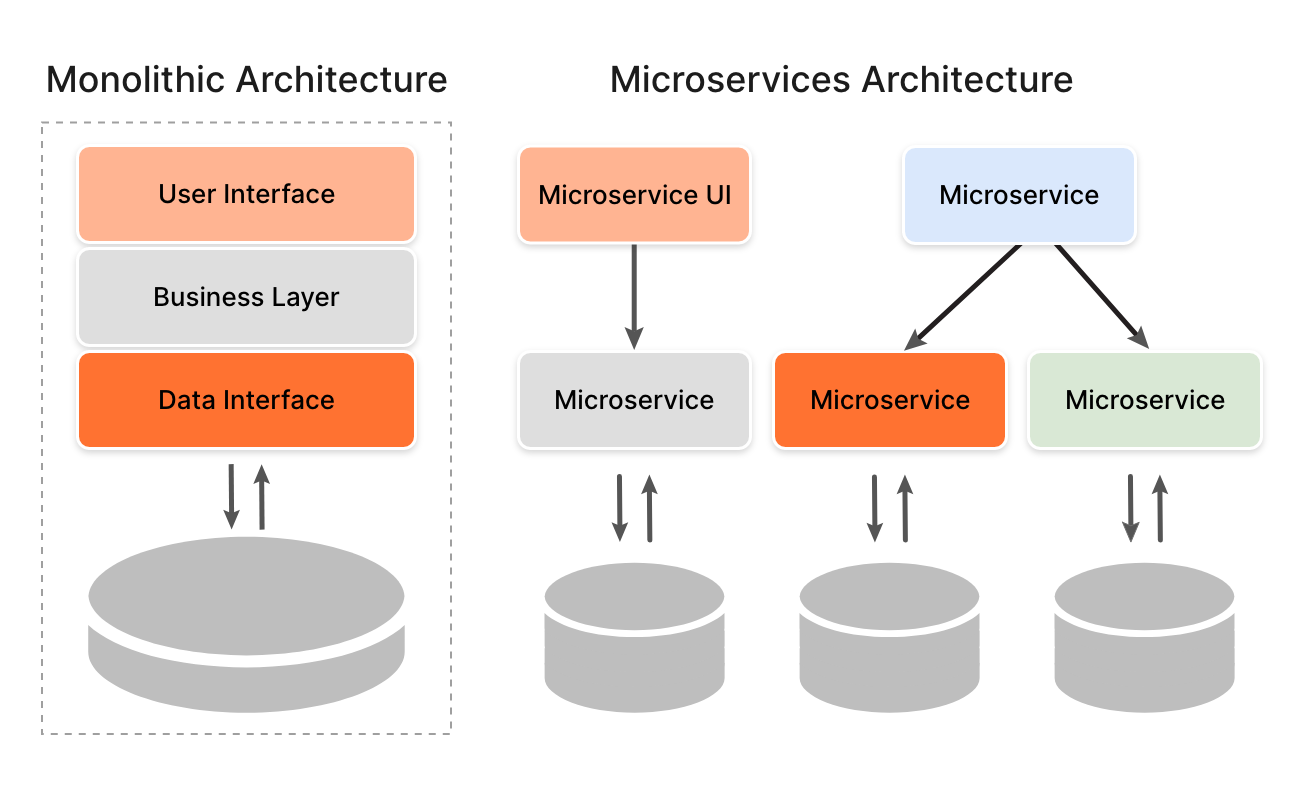

Application modernization is the process of transforming legacy monolithic systems, commonly used before the rise of public cloud and mobile applications in the late 2000s, into modern, modular architectures. Traditional applications were tightly coupled systems that were difficult to scale and relied on resource-intensive processes. Updating one component or fixing a bug often meant rebuilding, retesting, and redeploying the entire application, which increased complexity and slowed development cycles.

Using modernization strategies, organizations can enhance scalability, reduce maintenance costs, and accelerate innovation. Incremental modernization ensures legacy systems integrate smoothly with new components, minimizing disruptions while improving agility, efficiency, and time to market. Microservices, containerization, and orchestration platforms like Kubernetes are common enablers for this transformation.

What is a modern application?

A modern application is one built on patterns such as a distributed architecture that improve maintainability, scalability, and adaptability. In legacy applications, updating an existing feature or adding new functionality could affect the whole application’s performance because the application’s components are tightly coupled.

For modern applications, these components are containerized and communicate with each other via APIs, message queues, and other similar mechanisms, enabling seamless updates or feature additions without disrupting the entire system. Event-driven designs ensure responsiveness, while an API-first approach simplifies integrations and promotes reusability.
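As a rough illustration of the message-queue style of communication described above, the sketch below uses Python's standard `queue` and `threading` modules as a stand-in for a real message broker. The service names and the event shape are hypothetical and chosen only for this example.

```python
import queue
import threading

# In-process queue standing in for a real message broker (assumption for this sketch).
order_events = queue.Queue()
shipped = []

def order_service(order_ids):
    """Producer: publishes one event per order and knows nothing about consumers."""
    for order_id in order_ids:
        order_events.put({"type": "order_placed", "order_id": order_id})
    order_events.put(None)  # sentinel meaning "no more events"

def shipping_service():
    """Consumer: reacts to events until it sees the sentinel."""
    while True:
        event = order_events.get()
        if event is None:
            break
        shipped.append(event["order_id"])

consumer = threading.Thread(target=shipping_service)
consumer.start()
order_service([101, 102, 103])
consumer.join()
print(shipped)  # [101, 102, 103]
```

Because the producer only writes to the queue, the consumer could be updated or replaced without changing the producer, which is the decoupling the paragraph above describes.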

Modern applications are built to adapt to changing demands, handle failures gracefully, and support frequent updates. They leverage practices like load balancing, service discovery, and caching. Modern applications also incorporate observability by design, which provides insight into application performance. They are resilient and aligned with business needs, enabling faster innovation and long-term flexibility.

Why modernize legacy applications?

Legacy monolithic systems slow down innovation because they use a single, tightly connected codebase, making updates difficult and risky. Changes in one part can affect the whole application, leading to slower development and higher maintenance costs. They depend on outdated infrastructure, which limits scalability and flexibility. This makes it even more challenging to adapt to new business needs, causing delays in time-to-market and inefficiencies over time.

Modernization often reduces these challenges by transitioning to modular architectures like microservices. Microservices allow teams to work independently on individual services, each with its own functionality, which can be scaled, updated, or modified without impacting the rest of the application. This approach enables faster feature releases, simplifies updates, and enhances reliability by isolating failures and minimizing disruptions to the overall system.

Containers and Kubernetes play a critical role in modernization by enabling modular, portable, and scalable applications. Containers isolate workloads, making updates and deployments faster and less risky, while Kubernetes automates scaling, failover, and resource optimization. By leveraging cloud native technologies, modernization offers better resource utilization, automation, and resilience.

Who should modernize applications?

Organizations of all sizes should consider application modernization to overcome challenges like high maintenance costs, limited scalability, and reliance on outdated infrastructure. Legacy applications often lack cloud capabilities, leading to poor resource utilization and non-elastic behavior tied to specific hardware.

Businesses that want to enable faster feature delivery, support mobile and modern web interfaces, and leverage cloud-native technologies for better scalability and efficiency should take a transformative approach toward modernization. It empowers businesses to adapt quickly, reduce risks, and align with evolving user expectations in a competitive digital landscape.

The Role of Containers and Kubernetes in Application Modernization

Containers and Kubernetes are essential to application modernization, enabling scalability, portability, and efficiency. Containers provide isolated environments, ensuring consistent performance and simplifying the shift from monoliths to microservices.

Kubernetes automates container deployment, scaling, and management, enhancing fault tolerance, optimizing resources, and ensuring high availability. Together, they streamline updates, improve reliability, and support cloud-native development. This combination empowers organizations to innovate faster, reduce costs, and adapt to evolving business needs with ease.

Monolithic vs. Microservice Architectures

As technology advances, organizations are moving away from the old monolithic design approach, in which all services are tightly coupled within a unified codebase. They prefer a modern approach known as microservice architecture, in which services are broken down into smaller, loosely coupled components that can be delivered independently.

Here’s a comparison of the two approaches across a few parameters:

| Feature | Monolithic Architecture | Microservices Architecture |
| --- | --- | --- |
| Deployment | The entire codebase is deployed at once. | Services are deployed independently. |
| Scalability | Scaling one component may require replicating the entire system. | Specific services can be scaled based on demand. |
| Performance | High performance initially, but tight coupling and complex dependencies can hamper performance as the application grows. | Each service can be optimized individually. However, inter-service communication adds latency, which might slow the system. |
| Flexibility | Limited, since updating one service can affect the entire application. | High, since each service runs in its own isolated environment. |
| Complexity | Initially simpler, but complexity grows as the application expands. | Requires robust strategies for managing communication between services, handling data consistency, and ensuring fault tolerance. |
| Resource Usage | Scaling can use resources inefficiently because it replicates the entire application. | Resources are used efficiently because only the services that need more capacity are scaled. |
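The scalability and resource usage rows above can be made concrete with a little arithmetic. The sketch below compares the memory needed to give one component four times its capacity under each architecture. The component names and memory figures are hypothetical, and the model ignores the per-service overhead (runtime, sidecars, networking) that a real microservices deployment adds.

```python
# Hypothetical per-instance memory footprint of each component, in MiB.
COMPONENT_MEMORY_MIB = {"catalog": 512, "checkout": 256, "search": 1024}

def monolith_memory(replicas):
    """Every monolith replica carries all components, needed or not."""
    return replicas * sum(COMPONENT_MEMORY_MIB.values())

def microservices_memory(replicas_per_service):
    """Each service is replicated independently of the others."""
    return sum(
        COMPONENT_MEMORY_MIB[name] * count
        for name, count in replicas_per_service.items()
    )

# Only "checkout" needs four instances to handle the load.
monolith_total = monolith_memory(4)
micro_total = microservices_memory({"catalog": 1, "checkout": 4, "search": 1})

print(monolith_total)  # 7168
print(micro_total)     # 2560
```

Under these assumed numbers the monolith needs 7168 MiB while the microservices layout needs 2560 MiB, because the catalog and search components are not replicated along with checkout.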

Infrastructure for Modern Applications

When transitioning to modern applications, organizations must decide what role supporting infrastructure such as Kubernetes, containers, and virtual machines (VMs) will play in deploying and managing applications. The following are some common infrastructure comparisons for modern applications.

Virtual Machines vs Containers

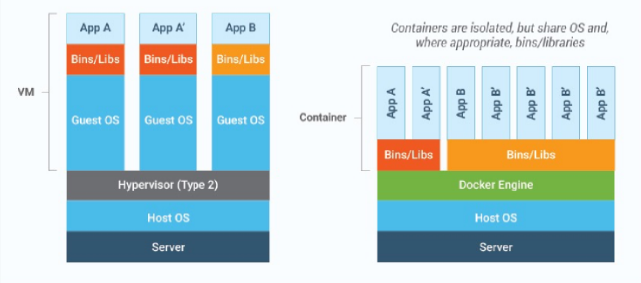

VMs use a hypervisor to run multiple guest operating systems on a host, providing strong isolation by including their own OS, libraries, and applications. Containers, on the other hand, run directly on the host OS kernel, packaging only the application and its dependencies.

Source: https://portworx.com/blog/kubernetes-vs-virtual-machines/

Let us compare some of their features:

| Feature | Virtual Machines (VMs) | Containers |
| --- | --- | --- |
| Isolation Level | Strong isolation, since each VM runs its own operating system, which limits the impact of a compromised workload | Weaker isolation, since containers share the host OS kernel and kernel vulnerabilities can affect every container on the host |
| Resource Usage | High, due to full OS, kernel, and hypervisor overhead | Lightweight, as containers share system resources |
| Startup Time | Slower startup (typically tens of seconds to minutes) | Fast startup (typically seconds or less) |
| Orchestration | Managed using hypervisor platforms like VMware vSphere or Hyper-V | Managed with container orchestration tools like Kubernetes |
| Scalability | Limited by high overhead for each VM | Highly scalable with minimal resource consumption |
| Use Cases | Suitable for running multiple OS environments | Ideal for microservices and cloud native applications |

VMs can also be run alongside or inside containers for legacy applications, edge computing, and development. KubeVirt runs VMs inside Kubernetes pods, and Firecracker provides lightweight microVMs that can back container workloads. Both approaches add complexity and resource usage.

For better security and hybrid cloud compatibility, it is common practice to deploy containers in VMs. This approach combines VM isolation with container efficiency, which makes it well suited to multi-tenant environments and hybrid setups such as Kubernetes on VMs.

Containers and Kubernetes

Although containers can help with application deployment, managing many containers across multiple environments can be difficult. This is where Kubernetes comes into the picture. Kubernetes facilitates containerized application deployment, scalability, and management.

| Feature | Containers | Kubernetes |
| --- | --- | --- |
| Functionality | Package applications and dependencies. | Orchestrates deployment, scaling, and management of containers. |
| Scalability | Limited by host resources and requires manual scaling. | Automatically manages scaling based on demand. |
| Management Complexity | Simpler management for individual containers. | More complex due to its orchestration capabilities, but self-healing: it continuously monitors container health and restarts or replaces containers that crash or fail. |
| Use Cases | Ideal for single applications or small-scale microservices. | Best for managing large-scale container deployments across clusters. |
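To give a sense of how demand-based scaling can be computed, the sketch below implements the core ratio documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). It is a simplified model only. The real controller also applies a tolerance band, stabilization windows, and readiness checks, and the function name and default bounds here are assumptions made for this example.

```python
import math

def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Core autoscaling ratio, clamped to hypothetical min/max bounds."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))

# 3 pods averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(3, 90, 60))   # 5
# 4 pods averaging 30% CPU against a 60% target: scale in.
print(desired_replicas(4, 30, 60))   # 2
# A large spike is capped at max_replicas.
print(desired_replicas(8, 200, 50))  # 10
```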

That said, Kubernetes can be resource-intensive: it needs significant computing power to run its control plane components, such as the API server, controller manager, and etcd database, in addition to the worker nodes. It also has a steep learning curve and can be difficult to set up, configure, and manage, demanding expertise in containerization, networking, security, and orchestration.

On-premises, Hybrid, and Public Cloud for Modern Applications

Modern, containerized applications can thrive in any of these environments, but each has its own set of pros and cons:

On-Premises: With an on-premises deployment, you get complete ownership of the infrastructure, allowing precise configuration for container orchestration. Let us look at some of its benefits:

- Data Security and Compliance: Ideal for industries with stringent data residency and privacy regulations.

- Performance Optimization: Proximity to hardware enables fine-tuned performance for applications sensitive to latency.

Here are some of the challenges associated with it:

- High Initial Investment: Requires significant upfront costs for hardware, software, and setup.

- Limited Scalability: Scaling requires procuring and provisioning additional resources, which is slow compared to the cloud.

- Operational Overhead: Ongoing maintenance, updates, and resource management add complexity.

- Resource Underutilization: Risks over-provisioning hardware that may remain idle during low-demand periods.

Public Cloud: Public clouds are hosted in third-party data centers. They give you access to managed services such as Amazon ECS, Google Kubernetes Engine (GKE), and Azure Kubernetes Service (AKS), which reduce operational overhead significantly. Most providers of managed services offer pay-as-you-go pricing that minimizes upfront investment and provides predictable costs for many workloads. Here are some of its benefits:

- Rapid Scalability: Easily scale containerized workloads up or down based on demand.

- Global Availability: Deploy applications closer to end users with geographically distributed data centers.

- Innovation Enablement: Rapid adoption of emerging technologies like serverless and AI/ML tools.

These benefits come with some associated risks:

- Vendor Lock-In: Heavy reliance on a single cloud provider can limit flexibility and lead to higher costs over time.

- Data Security Concerns: Sharing infrastructure with other tenants may pose security and compliance challenges.

- Unpredictable Costs: Mismanagement of resources, such as leaving containers running unnecessarily, can lead to budget overruns.

Hybrid Cloud: In this type of environment, the workloads are distributed across on-premises and public cloud environments for optimal resource utilization. This is very suitable for organizations that are transitioning from legacy to modern architectures. Let us look at some of its benefits:

- Gradual Modernization: Move specific workloads or applications to the cloud without disrupting on-premises systems.

- Scalability: Leverage cloud resources during demand spikes while retaining critical workloads on-premises.

Hybrid cloud also comes with some challenges to consider:

- Increased Complexity: Managing and integrating multiple environments requires advanced orchestration and monitoring tools.

- Cost Overhead: Combining on-premises and cloud resources can increase costs if not optimized.

- Network Latency: Data transfer between on-premises and cloud can introduce delays for some workflows.

The role of Kubernetes storage and container data management in application modernization

Modern applications rely on containerized or Kubernetes-native storage solutions to support complex stateful, data-driven workloads. This section covers the role of container and Kubernetes storage in managing such workloads, including those that rely on cloud providers for resource management.

Persistent storage for containerized and Kubernetes applications

For application modernization, persistent storage plays an important role in a containerized environment as it ensures data persistence across application restarts or crashes.

Persistent Volumes (PVs) and Persistent Volume Claims (PVCs) in Kubernetes decouple storage from applications. This decoupling makes an application more flexible, easier to scale, and more portable, while keeping its data accessible.
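As a minimal sketch of what a claim looks like, the snippet below builds a PersistentVolumeClaim manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The claim name, size, and storage class name are hypothetical values chosen for illustration, and the helper function is not part of any Kubernetes client library.

```python
import json

def build_pvc(name, size, storage_class):
    """Return a PersistentVolumeClaim manifest as a plain dictionary."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # ReadWriteOnce: the volume is mounted read-write by a single node.
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }

# Hypothetical claim for a database's data directory.
pvc = build_pvc("orders-db-data", "10Gi", "fast-ssd")
print(json.dumps(pvc, indent=2))
```

The application's pod references only the claim name, so the underlying volume can come from any backend that satisfies the request.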

Why modern applications need cloud-native Kubernetes storage

Modern applications require robust storage solutions that can handle dynamic workloads and offer data persistence. One reason is that they ingest large volumes and many varieties of data, which can lead to inconsistencies if not managed correctly. Here are some further reasons:

Data-Intensive Applications: Analytics, databases, and AI/ML workloads require persistent storage solutions that can efficiently manage large volumes of data.

- Analytics: PVs can help store intermediate results and historical data for tools like Apache Spark or Apache Flink. Kubernetes allows for horizontal scaling of analytics jobs. When demand increases, additional pods can be spun up to handle the increased load, while persistent storage ensures that all necessary data is readily available.

- Databases: StatefulSets provide a way to manage stateful applications like databases by ensuring that each pod has a unique identity and stable storage, offering consistent access to its data. For databases like PostgreSQL or MongoDB, you can automate tasks such as scaling, backups, and failover using Kubernetes operators.

- AI/ML: Machine learning frameworks like TensorFlow or PyTorch can access large datasets stored in Persistent Volumes or cloud block storage. This allows models to be trained on extensive datasets without interruptions due to pod failures. Also, with Kubernetes’ capability to allow dynamic resource allocation, you can automatically provision additional CPU or GPU resources during model training, ensuring optimal performance.
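The stable identity that StatefulSets give database pods follows a predictable naming scheme: pods are named `<statefulset>-<ordinal>`, and each PVC created from a volume claim template is named `<template>-<pod>`. The sketch below simply reproduces that naming so the pod-to-volume pairing is visible. The StatefulSet and template names are hypothetical.

```python
def statefulset_identities(statefulset, claim_template, replicas):
    """Return (pod name, PVC name) pairs as a StatefulSet would name them."""
    identities = []
    for ordinal in range(replicas):
        pod = f"{statefulset}-{ordinal}"
        pvc = f"{claim_template}-{pod}"
        identities.append((pod, pvc))
    return identities

# Hypothetical three-replica PostgreSQL StatefulSet with a "data" claim template.
for pod, pvc in statefulset_identities("postgres", "data", 3):
    print(pod, "->", pvc)
```

Because the names are derived from the ordinal, a pod that is rescheduled comes back as the same `postgres-1` and reattaches to the same `data-postgres-1` claim.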

Data resilience: Kubernetes storage solutions often include features like automated backups, snapshots, and disaster recovery options. Organizations can implement backup strategies such as full, incremental, and differential backups that ensure business continuity even during failures. This gives teams the flexibility to optimize storage usage while ensuring they can restore to specific points in time.
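To show how the backup strategies mentioned above differ at restore time, the sketch below works out which backups are needed to restore to a given day. It assumes a schedule that uses either incrementals (each holds changes since the previous backup) or differentials (each holds changes since the last full backup), not a mix of both. The function and the day-based schedule are illustrative only and do not reflect any particular product.

```python
def restore_chain(backups, target_day):
    """backups: list of (day, kind) tuples sorted by day.

    Returns the backups that must be applied, in order, to restore target_day.
    Raises ValueError if no full backup exists on or before target_day.
    """
    eligible = [b for b in backups if b[0] <= target_day]
    last_full = max(i for i, b in enumerate(eligible) if b[1] == "full")
    chain = [eligible[last_full]]
    after_full = eligible[last_full + 1:]
    differentials = [b for b in after_full if b[1] == "differential"]
    if differentials:
        # Only the most recent differential is needed on top of the full backup.
        chain.append(differentials[-1])
    else:
        # Every incremental since the full backup must be replayed in order.
        chain.extend(b for b in after_full if b[1] == "incremental")
    return chain

incremental_schedule = [(1, "full"), (2, "incremental"), (3, "incremental"), (4, "incremental")]
differential_schedule = [(1, "full"), (2, "differential"), (3, "differential"), (4, "differential")]

print(restore_chain(incremental_schedule, 3))
print(restore_chain(differential_schedule, 3))
```

The incremental schedule needs three backups to reach day 3, while the differential schedule needs two. That is the usual trade-off: incrementals store less per backup, differentials restore with a shorter chain.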

With snapshots configured, you can capture a point-in-time representation of the data stored in Persistent Volumes. In the event of data corruption or accidental deletion, snapshots allow for rapid restoration of the application state without restoring from a full backup.
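As a hedged sketch of the snapshot workflow, the snippet below builds two manifests as Python dictionaries: a `VolumeSnapshot` taken from an existing PVC, and a new PVC that restores from that snapshot through its `dataSource` field. It assumes the cluster has the snapshot CRDs installed and a CSI driver that supports snapshots. All resource names and class names are hypothetical.

```python
import json

def build_snapshot(name, pvc_name, snapshot_class):
    """VolumeSnapshot manifest capturing the current state of a PVC."""
    return {
        "apiVersion": "snapshot.storage.k8s.io/v1",
        "kind": "VolumeSnapshot",
        "metadata": {"name": name},
        "spec": {
            "volumeSnapshotClassName": snapshot_class,
            "source": {"persistentVolumeClaimName": pvc_name},
        },
    }

def build_restore_pvc(name, snapshot_name, size, storage_class):
    """PVC manifest whose volume is pre-populated from a snapshot."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "storageClassName": storage_class,
            "dataSource": {
                "name": snapshot_name,
                "kind": "VolumeSnapshot",
                "apiGroup": "snapshot.storage.k8s.io",
            },
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
        },
    }

snapshot = build_snapshot("orders-db-snap", "orders-db-data", "csi-snapclass")
restored = build_restore_pvc("orders-db-restored", "orders-db-snap", "10Gi", "fast-ssd")
print(json.dumps([snapshot, restored], indent=2))
```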

Solutions like Portworx provide the option to set up synchronous or asynchronous disaster recovery, which helps replicate data across different Kubernetes clusters or even across different geographical locations. It ensures that if one cluster fails due to a local disaster, such as a power outage or natural calamity, another cluster can take over with minimal downtime.

Integration with DevOps toolchains: Kubernetes storage solutions that integrate with DevOps tooling such as CI/CD pipelines allow for automated data management during application deployments, which helps maintain data integrity throughout the software development lifecycle. For example, a pipeline can automatically trigger updates to the data in the associated PVs so that the latest data schema is applied without manual intervention.

Another benefit of using Kubernetes in CI/CD pipelines is creating consistent environments across development, testing, and production stages. This helps mitigate issues that may arise from discrepancies in data states during testing versus production.

Kubernetes also integrates easily with monitoring solutions like Prometheus and logging solutions like the ELK (Elasticsearch, Logstash, Kibana) Stack, which help teams gain insight into application behavior, identify bottlenecks, and enhance overall performance.

Overview of container / Kubernetes storage solutions

The Container Storage Interface (CSI) enables Kubernetes to interact with various storage systems, allowing developers to use different types of storage without requiring application-level changes. In this section, we will understand CSI and explore container-native storage solutions.

CSI Background

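CSI was introduced to address the challenges of integrating storage solutions into Kubernetes. Before CSI, volume plugins were "in-tree", meaning they were part of the core Kubernetes code. As a result, storage vendors had to wait for Kubernetes releases to introduce new features or bug fixes, delaying time to market.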

With the introduction of CSI in Kubernetes, storage providers can now expose new storage systems without altering the core Kubernetes code. This enhances flexibility and security while providing users with more storage management options. CSI allows organizations to explore various storage solutions, such as block, file, and object storage, based on their requirements without being locked into a single vendor.

That said, the abstraction provided by CSI may introduce some performance overhead compared to native integrations. If not appropriately managed, this overhead can affect latency-sensitive applications.

Container-native solutions

Container-native solutions integrate very well with Kubernetes APIs, allowing for dynamic provisioning of PVs based on application needs. This capability enables automatic scaling and efficient resource utilization. These solutions are optimized for stateful applications that require consistent performance and low latency. They often include features like intelligent caching and optimized I/O paths that improve overall application responsiveness.
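Dynamic provisioning is typically driven by a `StorageClass` that names a CSI provisioner; when a PVC references the class, the driver creates a matching PV on demand. The sketch below builds such a StorageClass as a Python dictionary. The provisioner name `csi.example.com` and the `parameters` keys are placeholders, since real values depend entirely on the storage driver in use.

```python
import json

def build_storage_class(name, provisioner, parameters):
    """StorageClass manifest for on-demand volume provisioning."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,
        "parameters": parameters,
        # Delete the backing volume when its claim is deleted.
        "reclaimPolicy": "Delete",
        # Delay volume creation until a pod using the claim is scheduled.
        "volumeBindingMode": "WaitForFirstConsumer",
        "allowVolumeExpansion": True,
    }

# Placeholder provisioner and parameters; substitute the values for your driver.
storage_class = build_storage_class(
    "fast-ssd", "csi.example.com", {"exampleReplicationFactor": "3"}
)
print(json.dumps(storage_class, indent=2))
```

Choosing `WaitForFirstConsumer` here is a design preference rather than a requirement: it lets the scheduler pick a node before the volume is created, which helps with topology-constrained storage.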

Solutions like Portworx provide features such as snapshots, replication, and backup capabilities that enhance data management in Kubernetes environments. Replication enables data redundancy across clusters, ensuring high availability and disaster recovery. To get started with a leading container data management solution for modern applications – inclusive of automated storage operations, data backup and protection, and more – try Portworx for free today.