PX-Autopilot for Capacity Management delivers intelligent and fully customizable rules-based optimization and automation of storage management for Kubernetes. Portworx PX-Autopilot for Capacity Management is a valuable component of Portworx Enterprise, the leading storage platform for Kubernetes. Save time and money while enabling your teams to deliver rich business applications, without worrying about disk and storage management. PX-Autopilot for Capacity Management lets you:

- Resize PVCs when they are running out of capacity

- Scale backend Portworx storage pools to accommodate increasing usage

- Rebalance volumes across Portworx storage pools when they become unbalanced

This ultimately helps your organization:

- Provision only the storage you need now with automated scaling on demand

- Circumvent the cloud vendors’ capacity-to-performance ratios to deliver high I/O performance, even for small volumes

- Optimize application performance with a fully customizable rules-based engine

- Integrate with Amazon EBS, Google Persistent Disk, Azure Managed Disks, VMware vSphere, as well as Pure Storage FlashArray for storage automation

Storage capacity management is often an extremely time-intensive and disruptive exercise. Reduce that overhead and ensure your teams are focused on building applications rather than managing infrastructure. Expanding storage for multiple applications in a large OpenShift cluster can easily take over 20 hours. Spend that time more wisely and let Portworx PX-Autopilot for Capacity Management intelligently scale on demand while also delivering improved application uptime and performance—and halving your cloud storage costs. If your organization needs more control, you can opt to integrate action approvals via kubectl or GitOps for added DevOps integrations.

Automated Storage Pool Expansion with Pure FlashArray

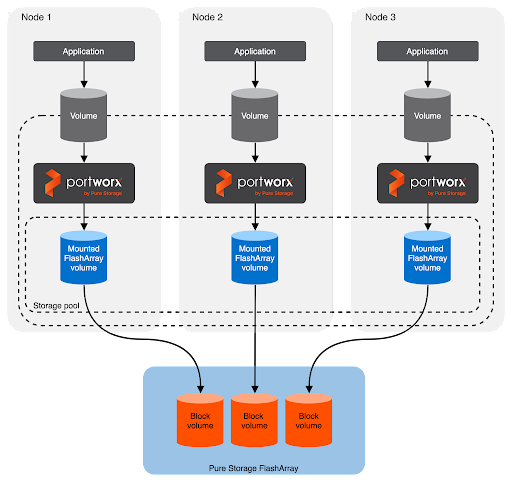

When Portworx is deployed to Red Hat OpenShift, it can be configured with automated disk provisioning for Pure Storage FlashArray.

This allows Kubernetes administrators to configure Portworx with automation of LUN creation and attachment for Portworx storage pools available to OpenShift. This also allows administrators to provision only as much storage as they need, as PX-Autopilot will allow them to automatically scale when cluster usage rises.

To enable Pure Storage FlashArray LUNs to connect over iSCSI, the OpenShift cluster must first be configured with iscsid and multipathd and have the iscsi-initiator-utils installed.

We will install Portworx with FlashArray and use iSCSI to attach our cloud drives to OpenShift. Red Hat CoreOS comes pre-installed with iscsid and a configured initiator. To enable iSCSI and multipath for Portworx, apply the following MachineConfig to the cluster. It enables the iscsid and multipathd services needed by the Pure Storage FlashArray integration on each worker node, targeting the worker nodes through the label `machineconfiguration.openshift.io/role: worker`.

Note: This MachineConfig technique was tested on an OpenShift 4.8 cluster installed with the `openshift-installer` for vSphere.

> Note: The string after `source: data:text/plain;charset=utf-8;base64,` is the base64-encoded multipath.conf. You may need or want to update multipath.conf to suit your environment’s needs. To do this, run `echo '<string>' | base64 -d` to decode the config file, make your changes, and re-encode the result using base64.

    apiVersion: machineconfiguration.openshift.io/v1
    kind: MachineConfig
    metadata:
      labels:
        machineconfiguration.openshift.io/role: worker
      name: 99-worker-enable-iscsid-mpath
    spec:
      config:
        ignition:
          version: 3.2.0
        storage:
          files:
          - path: /etc/multipath.conf
            mode: 0644
            overwrite: false
            contents:
              source: data:text/plain;charset=utf-8;base64,IyBkZXZpY2UtbWFwcGVyLW11bHRpcGF0aCBjb25maWd1cmF0aW9uIGZpbGUKCiMgRm9yIGEgY29tcGxldGUgbGlzdCBvZiB0aGUgZGVmYXVsdCBjb25maWd1cmF0aW9uIHZhbHVlcywgcnVuIGVpdGhlcjoKIyAjIG11bHRpcGF0aCAtdAojIG9yCiMgIyBtdWx0aXBhdGhkIHNob3cgY29uZmlnCgojIEZvciBhIGxpc3Qgb2YgY29uZmlndXJhdGlvbiBvcHRpb25zIHdpdGggZGVzY3JpcHRpb25zLCBzZWUgdGhlCiMgbXVsdGlwYXRoLmNvbmYgbWFuIHBhZ2UuCgpkZWZhdWx0cyB7Cgl1c2VyX2ZyaWVuZGx5X25hbWVzIG5vCglmaW5kX211bHRpcGF0aHMgeWVzCgllbmFibGVfZm9yZWlnbiBeJAp9CgpibGFja2xpc3RfZXhjZXB0aW9ucyB7CiAgICAgICAgcHJvcGVydHkgKFNDU0lfSURFTlRffElEX1dXTikKfQoKYmxhY2tsaXN0IHsKfQo=
        systemd:
          units:
          - enabled: true
            name: iscsid.service
          - name: multipathd.service
            enabled: true
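If you need to change the embedded multipath.conf before applying the MachineConfig, the decode, edit, and re-encode cycle described in the note can be sketched as follows. This is a minimal sketch under stated assumptions: the encoded string is a short stand-in generated inside the script rather than the real value from the MachineConfig, and the `find_multipaths` change is purely illustrative, not a recommended setting.

```shell
#!/bin/sh
set -eu

workdir="$(mktemp -d)"

# Stand-in for the string that follows "base64," in the MachineConfig.
# Assumption: a tiny two-option config, generated here so the sketch is self-contained.
original_b64="$(printf 'defaults {\n\tuser_friendly_names no\n\tfind_multipaths yes\n}\n' | base64 | tr -d '\n')"

# 1. Decode the embedded file.
printf '%s' "$original_b64" | base64 -d > "$workdir/multipath.conf"

# 2. Edit it (illustrative change only).
sed 's/find_multipaths yes/find_multipaths no/' "$workdir/multipath.conf" > "$workdir/multipath.conf.new"

# 3. Re-encode on a single line and print the value for the `source:` field.
updated_b64="$(base64 < "$workdir/multipath.conf.new" | tr -d '\n')"
echo "source: data:text/plain;charset=utf-8;base64,${updated_b64}"
```

Stripping newlines with `tr -d '\n'` keeps the encoded value on one line, which is the form the `source:` field uses above.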

    # oc create -f iscsi-mpath-mc.yaml
    machineconfig.machineconfiguration.openshift.io/99-worker-enable-iscsid-mpath created

The OpenShift worker nodes will be configured one by one and may become NotReady for a short period of time while this occurs. It is best to monitor the OpenShift master and worker nodes until this is done as well as confirm all cluster operators are healthy before continuing to install Portworx.

> You can get the status of cluster operators by running the command `oc get clusteroperators`.
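To spot operators that have not settled yet, the table printed by `oc get clusteroperators` can be filtered. The sketch below assumes the usual column order (NAME, VERSION, AVAILABLE, PROGRESSING, DEGRADED, SINCE) and that every row has a VERSION value. The function name `unsettled_operators` is hypothetical.

```shell
#!/bin/sh
# Reads `oc get clusteroperators` output on stdin and prints the name of every
# operator that is not Available=True, Progressing=False, Degraded=False.
# Assumption: columns are NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE.
unsettled_operators() {
  awk 'NR > 1 && ($3 != "True" || $4 != "False" || $5 != "False") { print $1 }'
}

# Usage against a live cluster (requires the oc CLI and a logged-in session):
#   oc get clusteroperators | unsettled_operators
```

An empty result suggests that every operator has settled. Node status still needs a separate check, since this only looks at cluster operators.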

Install Portworx

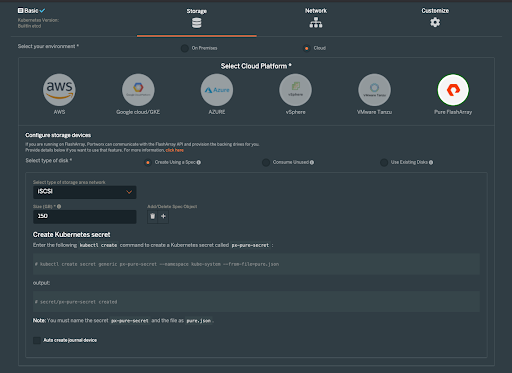

Next, navigate to https://central.portworx.com/ and create an installation spec for PX-Enterprise. Remember to do the following during spec creation:

- Select the Operator as the installation method.

- Choose Pure as the cloud disk provisioner.

- Select OpenShift 4+ in final options.

If you’ve completed the above, you will be provided with a StorageCluster spec similar to the example below. Note the `portworx.io/is-openshift` annotation, the `cloudStorage` section, and the `PURE_FLASHARRAY_SAN_TYPE` environment variable: in this case we are installing for OpenShift using FlashArray, with Portworx storage pool cloud storage devices of 150 GB each to begin with. This environment uses iSCSI as the SAN type to deliver volumes to the Portworx nodes, where the Portworx virtual storage pools will be created.

    kind: StorageCluster
    apiVersion: core.libopenstorage.org/v1
    metadata:
      name: px-cluster-d4e65c6a-09a2-4222-87c1-b2ef36836ab8
      namespace: kube-system
      annotations:
        portworx.io/is-openshift: "true"
    spec:
      image: portworx/oci-monitor:2.8.0
      imagePullPolicy: Always
      kvdb:
        internal: true
      cloudStorage:
        deviceSpecs:
        - size=150
        kvdbDeviceSpec: size=32
      secretsProvider: k8s
      stork:
        enabled: true
        args:
          webhook-controller: "false"
      autopilot:
        enabled: true
        providers:
        - name: default
          type: prometheus
          params:
            url: http://prometheus:9090
      monitoring:
        telemetry:
          enabled: true
        prometheus:
          enabled: true
          exportMetrics: true
      featureGates:
        CSI: "true"
      env:
      - name: PURE_FLASHARRAY_SAN_TYPE
        value: "ISCSI"

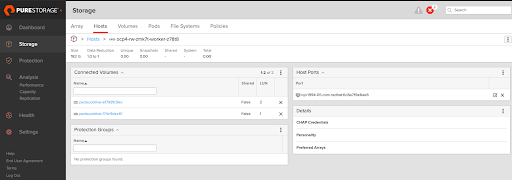

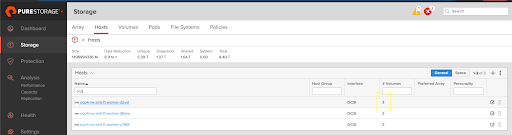

Make sure you install the Portworx Enterprise operator prior to applying the StorageCluster spec above. Once the StorageCluster spec is applied, you will see the automatically provisioned 150 GB cloud drives defined in the StorageCluster spec appear in your FlashArray backend.

Portworx should also become healthy within the OpenShift cluster during this time. You may choose to check the status of Portworx by running `pxctl status` from within one of the OpenShift worker nodes where Portworx is installed.

Autopilot Pool Expansion

In order for PX-Autopilot to be able to expand the backend FlashArray storage pool once its usage crosses a threshold condition, we need to set up an Autopilot Rule first.

> PX-Autopilot pool expansion is supported only in PX-Enterprise and is not available in PX-Essentials.

The below autopilot rule can be applied to the OpenShift cluster using `oc apply -f rule.yaml`. The rule below states the following conditions and actions:

- Condition: The used capacity of a pool on any given Portworx node is above 50% (that is, its available capacity has dropped below 50%).

- Condition: The pool on any given Portworx node is still below 1 TB in total size, so pools do not exceed 1 TB.

- Action: Scale the pool by 50%, as long as the pool will remain at or below 1 TB. Scale by adding a disk.

    apiVersion: autopilot.libopenstorage.org/v1alpha1
    kind: AutopilotRule
    metadata:
      name: pool-expand
    spec:
      enforcement: required
      ##### conditions are the symptoms to evaluate. All conditions are AND'ed
      conditions:
        expressions:
        # pool available capacity less than 50%
        - key: "100 * ( px_pool_stats_available_bytes/ px_pool_stats_total_bytes)"
          operator: Lt
          values:
            - "50"
        # pool total capacity should not exceed 1T
        - key: "px_pool_stats_total_bytes/(1024*1024*1024)"
          operator: Lt
          values:
            - "1000"
      ##### action to perform when condition is true
      actions:
        - name: "openstorage.io.action.storagepool/expand"
          params:
            # resize pool by scalepercentage of current size
            scalepercentage: "50"
            # when scaling, add disks to the pool
            scaletype: "add-disk"
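To make the arithmetic in the rule concrete, the sketch below evaluates the two expressions for a pool and walks through successive 50% expansions from the initial 150 (treated here as GiB) up to the 1000 GiB ceiling. It is a simplified model, not Autopilot's implementation: the function names are hypothetical, sizes are truncated to whole GiB, and the capping at the limit follows this article's description of the behavior. Real pool sizes after an `add-disk` expansion may differ.

```shell
#!/bin/sh
GIB=$((1024 * 1024 * 1024))

# Succeeds (exit 0) when both rule expressions hold for a pool.
# $1 = available bytes, $2 = total bytes
rule_triggers() {
  avail=$1
  total=$2
  # available capacity below 50% AND total size below 1000 GiB
  [ $((100 * avail / total)) -lt 50 ] && [ $((total / GIB)) -lt 1000 ]
}

# Size in GiB after one expansion by scalepercentage=50, capped at 1000 GiB.
# $1 = current size in GiB
next_size_gib() {
  grown=$(( $1 * (100 + 50) / 100 ))
  if [ "$grown" -gt 1000 ]; then
    grown=1000
  fi
  echo "$grown"
}

# Walk through the expansions a pool would go through if it kept filling up.
size=150
while [ "$size" -lt 1000 ]; do
  printf '%s ' "$size"
  size=$(next_size_gib "$size")
done
echo "$size"
# prints: 150 225 337 505 757 1000
```

Under these assumptions, a pool that keeps filling would need five expansions to reach the ceiling, after which the second expression no longer holds and the rule stops triggering.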

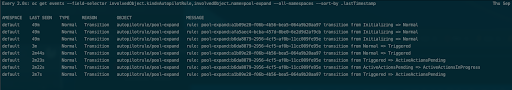

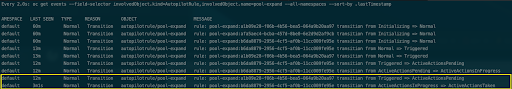

After applying the autopilot rule, you may look at the OpenShift events and search for the AutopilotRule object with the name pool-expand. This will show each of the pools being initialized to Normal, as they are all within the threshold of 50%. Note: We see three events because there are three storage nodes with one storage pool each in this cluster.

    oc get events --field-selector involvedObject.kind=AutopilotRule,involvedObject.name=pool-expand --all-namespaces --sort-by .lastTimestamp

    Every 2.0s: oc get events --field-selector involvedObject.kind=AutopilotRule,involvedObject.name=pool-expand --all-namespaces --sort-by .lastTimestamp    Thu Sep 9 11:07:23 2021

    NAMESPACE   LAST SEEN   TYPE     REASON       OBJECT                      MESSAGE
    default     7m51s       Normal   Transition   autopilotrule/pool-expand   rule: pool-expand:a1b09e28-f06b-4b56-bea5-064a9b20aa97 transition from Initializing => Normal
    default     7m51s       Normal   Transition   autopilotrule/pool-expand   rule: pool-expand:afa5aec4-bcba-457d-8be0-6e2d9d2af9cb transition from Initializing => Normal
    default     7m51s       Normal   Transition   autopilotrule/pool-expand   rule: pool-expand:b6da8879-2956-4cf5-af0b-11cc009fe95e transition from Initializing => Normal

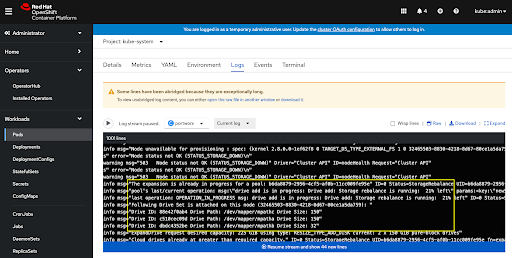

As the pool begins to fill due to usage, the events will show the state change from “Normal” to “Triggered.” This indicates that Autopilot has detected a rule condition within the inference engine. Once a pool is triggered, it will be placed into ActiveActionPending, then ActiveActionInProgress. During this time, Portworx will make sure to only expand a single storage pool and rebalance the storage pools one at a time so the cluster remains healthy and responsive.
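When several pools move through these states at once, it can help to reduce the event stream to the latest state per pool. The sketch below assumes each event message has the form shown in the events listing, `rule: <rule-name>:<pool-id> transition from <old> => <new>`, and that later transitions (for example to Triggered) use the same wording. The function name `latest_states` is hypothetical.

```shell
#!/bin/sh
# Reads `oc get events` lines on stdin (oldest first) and prints
# "<pool-id> <state>" for the most recent transition seen for each pool.
# Assumption: messages look like
#   rule: pool-expand:<pool-id> transition from <old> => <new>
latest_states() {
  awk '/transition from/ {
         id = ""
         for (i = 1; i < NF; i++) {
           if ($i == "rule:") {
             split($(i + 1), parts, ":")   # "<rule-name>:<pool-id>"
             id = parts[2]
           }
         }
         if (id == "") next
         if (!(id in state)) order[++n] = id   # remember first-seen order
         state[id] = $NF                        # last field is the new state
       }
       END { for (i = 1; i <= n; i++) print order[i], state[order[i]] }'
}

# Usage against a live cluster (requires the oc CLI and a logged-in session):
#   oc get events --field-selector involvedObject.kind=AutopilotRule,involvedObject.name=pool-expand \
#     --all-namespaces --sort-by .lastTimestamp | latest_states
```

Because the input is sorted by `.lastTimestamp`, the last transition read for a pool is treated as its current state.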

For a given Portworx node that is connected to the backend FlashArray, you should see an additional disk (three instead of two) added to the host, indicating that Autopilot performed the expand operation by adding a disk.

To check the progress of the pool expansion, you can look at the nodes’ Portworx logs and make note of the “Expansion is already in progress for pool” log entries and the percentage that the expansion has left to rebalance.

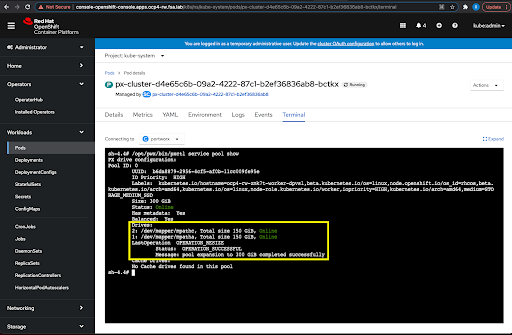

Once this operation is complete, you may use the `pxctl service pool show` command to see that the expand operation has occurred and that there is an additional disk based on the AutopilotRule.

The events will also show the triggered condition as ActiveActionsTaken to indicate the “expand” operation is complete and that the action has been taken.

Once this is done, Autopilot will resume watching for the condition to be true for the pools and their new sizes. Autopilot rules continue to work after they have been triggered, as long as the pool has not reached its maximum size limit. If a further expansion would take the pool past that limit, the action increases the pool only up to the maximum size.

In conclusion, PX-Autopilot can help significantly reduce time and complexity when supporting stateful workloads within your OpenShift clusters. OpenShift projects can host thousands of applications and multiple tenants at the same time. Allowing PX-Autopilot to monitor potential capacity issues, take action to fix them, and rebalance data at the same time is a major operational gain for OpenShift storage administrators. Check out the demo below to see Autopilot in action on Red Hat OpenShift.
