Alright, welcome back to Portworx Lightboard Sessions. Today we’re gonna focus on Portworx on OpenShift. We’ll cover the basic OpenShift deployment, whether it’s on-prem or in the cloud, and how Portworx fits into that architecture. The first thing I wanna start with is that most production clusters, or even decent-scale ones, have a set of master nodes. These are our three master nodes. They provide the APIs, and users interact with the cluster through those APIs. They also typically host the etcd cluster, meaning that each master stores state about our OpenShift cluster.

The next thing I wanna cover is that OpenShift can be deployed on-prem or in the cloud. Think AWS, Azure, GCP, those types of infrastructure providers. On-prem, this is typically bare metal, so you could have storage arrays and racks of servers underneath here, providing the compute and storage for your applications and OpenShift infrastructure. Either way, you have a set of nodes or VMs with an OS deployed on them, and that OS is typically RHEL or CentOS.

Now, once the OS is deployed, OpenShift can be deployed to those RHEL nodes, and this box here represents OpenShift. OpenShift is deployed on a set of infrastructure nodes, including the masters. So three of these nodes can be master nodes, and the rest can host your infrastructure applications, your developers’ workloads, production workloads, and so on. Portworx then gets deployed on top of OpenShift. Portworx is cloud-native storage: it provides persistent volumes and dynamic provisioning for OpenShift workloads that need persistence, such as databases. We’ll go into that a little more, but the key point is that it gets deployed on top of Kubernetes, in this case OpenShift, and Portworx runs on each one of these nodes as well. Not the masters, though, just the worker nodes.

So say that our infrastructure provides a set of LUNs to our nodes. In the cloud, this may be EBS or Google Persistent Disk. On-prem, it might be an attached storage array providing a LUN, or directly attached storage in the form of SSDs, NVMe devices, or SATA drives. When Portworx gets installed, it goes down into the OS and consumes that LUN or drive. This gives Portworx a way to create a single, globally available storage pool across every one of our OpenShift worker nodes. So you can deploy workloads anywhere across your OpenShift cluster, and Portworx takes care of attaching the data to your container wherever it lands. If a container fails and gets rescheduled, the data follows it.
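To make the “single storage pool” idea concrete, here is a toy model in Python. It is a minimal sketch, not how Portworx actually builds or manages its pool: the `Node` class, the `"master"`/`"worker"` role labels, and the `build_pool` and `raw_capacity_used` helpers are all hypothetical names for illustration. It simply sums the raw drives each worker node exposes (masters are skipped, as described above) and shows that a replicated volume consumes one copy of its size per replica.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Node:
    name: str
    role: str  # hypothetical labels for this sketch: "master" or "worker"
    drives_gib: List[int] = field(default_factory=list)  # raw LUNs/drives the OS sees


def build_pool(nodes: List[Node]) -> Tuple[Dict[str, int], int]:
    """Toy model: aggregate the raw drives of every worker node into one pool.

    Masters are skipped because, in this architecture, Portworx runs only on
    the workers; workers with no drives contribute no capacity.
    """
    members = {
        n.name: sum(n.drives_gib)
        for n in nodes
        if n.role == "worker" and n.drives_gib
    }
    return members, sum(members.values())


def raw_capacity_used(volume_gib: int, repl: int) -> int:
    """A volume with `repl` replicas consumes `repl` copies of its size in the pool."""
    return volume_gib * repl


if __name__ == "__main__":
    cluster = [
        Node("master-1", "master"),
        Node("worker-1", "worker", [500, 500]),  # e.g. two cloud block volumes
        Node("worker-2", "worker", [1000]),      # e.g. one local NVMe device
        Node("worker-3", "worker", [1000]),
    ]
    members, total = build_pool(cluster)
    print(members, total)  # {'worker-1': 1000, 'worker-2': 1000, 'worker-3': 1000} 3000
    print(raw_capacity_used(100, 3))  # 300
```

The point of the model is that workloads see one pool rather than individual LUNs; where the bytes physically live is the storage layer’s concern.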

So how does a user actually interact with Portworx? Typically, they’ll have some code, say in GitHub, and that code will reference a storage class in the YAML file where it’s defined. The storage class can be given a number of parameters, such as replication, I/O priority, and I/O profile, which can be tuned specifically for, say, databases. Priority is high, medium, or low, so we can say, “We want high.” For repl, the replication factor, we say, “Let’s do three replicas.” Our storage class holds this information, and a YAML file that defines a database, or any stateful service, references that storage class. When this gets deployed to the cluster and the service comes up, say it’s your database, Portworx dynamically provisions a volume with these parameters for the database container. That volume is what we call the PV in OpenShift and Kubernetes.
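As a rough sketch of what that YAML might contain, the Python below builds a StorageClass with the three parameters discussed (replication, I/O priority, I/O profile) and a PersistentVolumeClaim that references it by name, then prints both as a Kubernetes `List` in JSON, which `oc apply -f` also accepts. Treat the details as assumptions: the parameter keys `repl`, `priority_io`, and `io_profile` and the in-tree provisioner name `kubernetes.io/portworx-volume` follow Portworx conventions but should be checked against the docs for your Portworx version (CSI-based installs use a different provisioner), and the object names `px-db-sc` and `db-data` are hypothetical.

```python
import json


def storage_class(name: str, repl: int = 3, priority_io: str = "high",
                  io_profile: str = "db") -> dict:
    """Build a StorageClass manifest (parameter keys assumed from Portworx docs)."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        # Assumed in-tree Portworx provisioner; verify for your install type.
        "provisioner": "kubernetes.io/portworx-volume",
        # StorageClass parameter values must be strings.
        "parameters": {
            "repl": str(repl),
            "priority_io": priority_io,
            "io_profile": io_profile,
        },
    }


def pvc(name: str, storage_class_name: str, size: str = "10Gi") -> dict:
    """Build a PVC that asks for dynamic provisioning via the named storage class."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "storageClassName": storage_class_name,
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    sc = storage_class("px-db-sc")                 # hypothetical name
    claim = pvc("db-data", sc["metadata"]["name"])  # hypothetical name
    manifest = {"apiVersion": "v1", "kind": "List", "items": [sc, claim]}
    print(json.dumps(manifest, indent=2))
```

Generating manifests in code is only one option; the same objects are usually written directly as YAML and kept in the Git repository alongside the application.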

The reference to what the workload wants is the PVC, which carries the storage class name for dynamic provisioning. Because we asked for three replicas, Portworx stores the volume’s data in three locations across the OpenShift cluster, hence repl 3. This keeps the data highly available: if a container or an OpenShift node fails, the database can come back up as soon as OpenShift reschedules it on another node in the cluster, regardless of the underlying infrastructure or where a LUN is attached, because Portworx manages the replication underneath.
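The failover reasoning can be illustrated with a small simulation. This is a toy sketch under stated assumptions, not Portworx’s placement or scheduling logic: `place_replicas` picks three distinct workers at random, and `reschedule` chooses a new node for the database pod after failures. The preference for a node that already holds a replica is an assumption of this model; otherwise any healthy worker is chosen, reflecting the point that the data is reachable regardless of where a LUN is attached. All function names are hypothetical.

```python
import random
from typing import List, Optional, Set


def place_replicas(workers: List[str], repl: int, seed: int = 0) -> List[str]:
    """Choose `repl` distinct worker nodes to hold copies of one volume (toy placement)."""
    if repl > len(workers):
        raise ValueError("replication factor exceeds the number of worker nodes")
    return random.Random(seed).sample(workers, repl)


def surviving_replicas(replicas: List[str], failed: Set[str]) -> List[str]:
    """Replicas that still sit on healthy nodes."""
    return [n for n in replicas if n not in failed]


def reschedule(workers: List[str], replicas: List[str],
               failed: Set[str]) -> Optional[str]:
    """Return a healthy worker for the database pod, or None if every replica is lost.

    Assumed policy for this sketch: prefer a healthy node that already holds a
    replica, otherwise fall back to any healthy worker.
    """
    if not surviving_replicas(replicas, failed):
        return None  # no copy of the data survived
    healthy = [n for n in workers if n not in failed]
    local = [n for n in healthy if n in replicas]
    return (local or healthy)[0]


if __name__ == "__main__":
    workers = [f"worker-{i}" for i in range(1, 6)]
    replicas = place_replicas(workers, repl=3)
    failed_node = replicas[0]  # suppose the database pod was running here
    print("replicas on:", replicas)
    print("pod restarts on:", reschedule(workers, replicas, {failed_node}))
```

With three replicas, the volume in this model survives the loss of any two of the nodes holding it; only when all three fail does `reschedule` return `None`.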

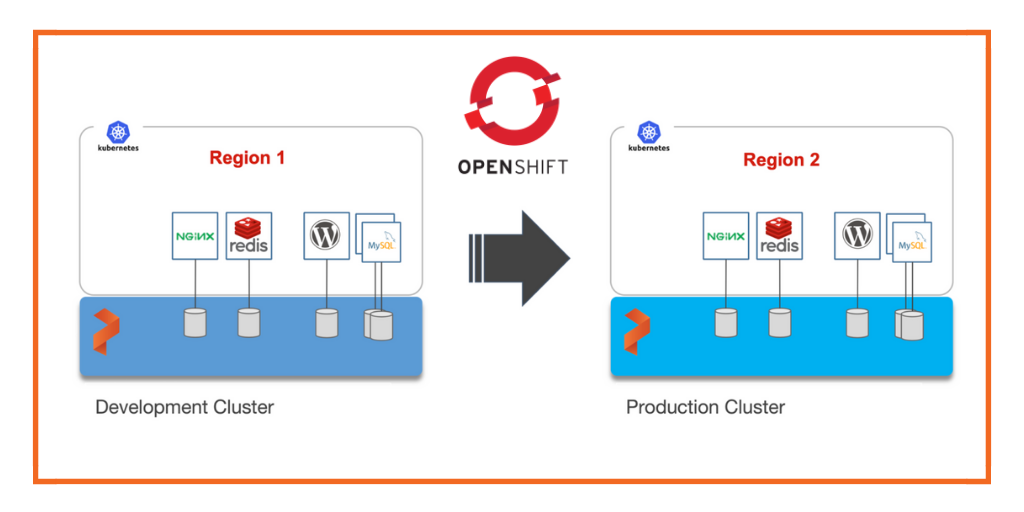

This is the high-level core value of Portworx on OpenShift. In future discussions, we’ll talk about what happens when you have multiple OpenShift clusters, and how Portworx can provide DR between them. One thing worth mentioning here is that OpenShift can dynamically scale out, and Portworx scales out with it, no problem, as long as the configuration is present in the OpenShift cluster. Stay tuned: we’ll cover OpenShift DR, backup and restore, and more in future sessions. Thanks for watching.
