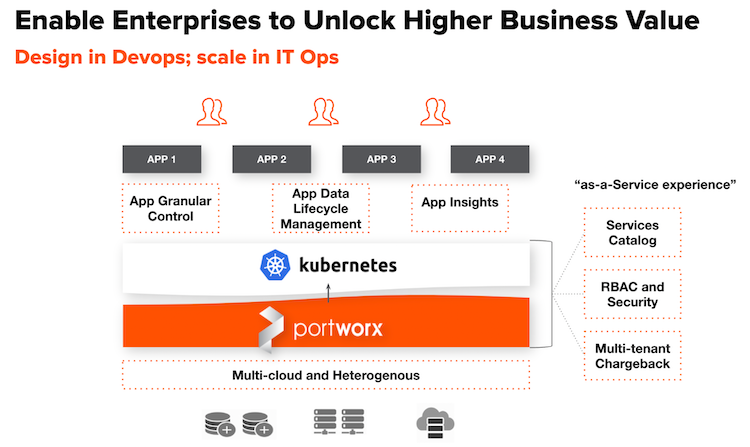

With the latest CNCF survey data showing tremendous adoption of container platforms and Kubernetes, it is clear that Kubernetes has become the de facto way for small and large organizations to deploy modern applications. It is important to understand that application teams are not only looking for agility but are also focused on enterprise scale as they expand their use cases up the stack to production-grade apps. We are at a juncture in this journey where we have to carefully balance the requirements of DevOps and IT Operations teams to help them provide an “as-a-Service” experience for their organizations.

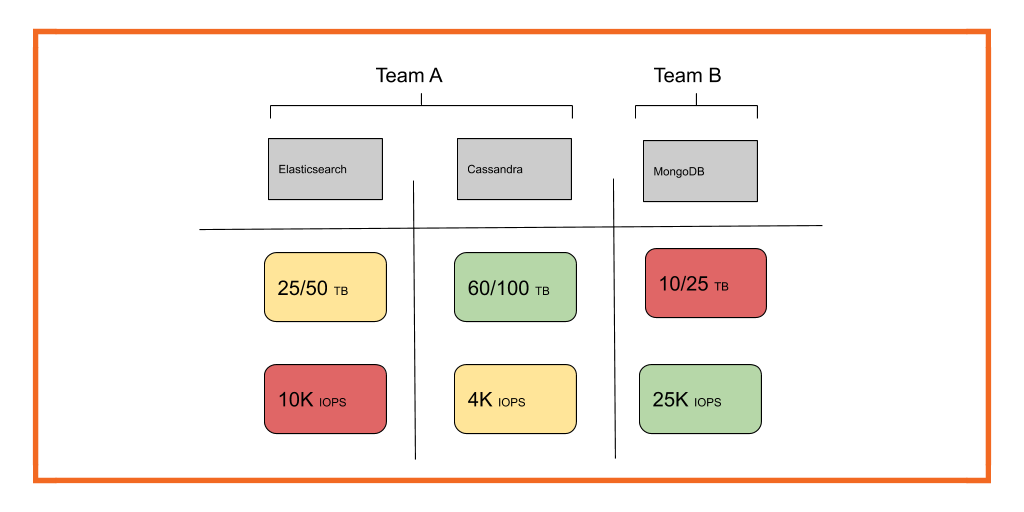

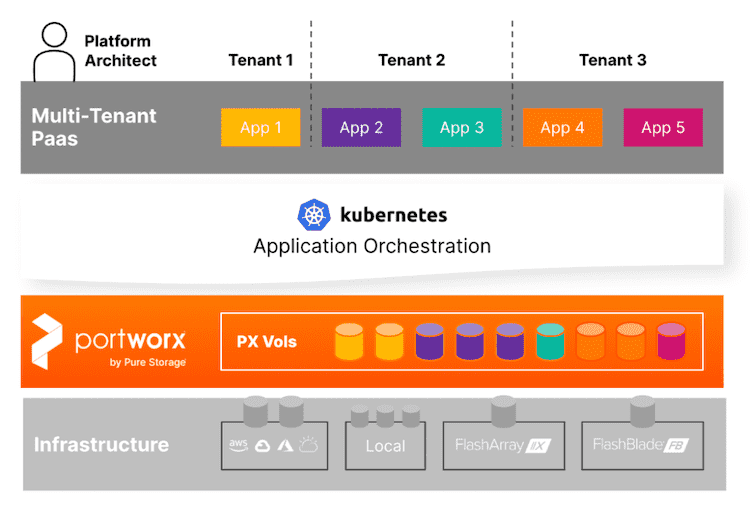

With Portworx Enterprise, we are obsessed with how applications are deployed and managed in heterogeneous environments and with solving the new challenges that platform owners face as they scale. Working with customers of all sizes, we understand the challenges they face as they build organization-wide platforms, where one major goal is to keep infrastructure costs under control. For these Platform-as-a-Service (PaaS) initiatives, it is increasingly important for platform owners to enforce strict SLAs on how applications consume critical infrastructure resources. When it comes to storage, they want to ensure that different applications consume I/O and bandwidth on the clusters in proportion to their business value. With the Application I/O Control capability in Portworx Enterprise 2.10, we wanted to give platform owners additional tools in their armory to exert this control where applicable. As explained in this blog, this new capability gives platform owners flexible options to control the I/O and bandwidth consumed by an application at a per-volume level, and even to define it as a policy in the StorageClass definition itself.
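To make the idea of a per-volume I/O cap concrete, here is a minimal Python sketch of one common way to enforce such limits: a token bucket per volume, one for IOPS and one for bandwidth. This is a generic technique chosen for illustration, not a description of how Portworx implements Application I/O Control. The class names, the `max_iops` and `max_bandwidth_bps` parameters, and the explicit `now` timestamps are all hypothetical.

```python
class TokenBucket:
    """Generic token bucket: refills `rate` tokens per second, holding at most `capacity`."""

    def __init__(self, rate: float, capacity: float, start: float = 0.0) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full so a short burst is allowed
        self.last = start

    def available(self, now: float) -> float:
        # Refill in proportion to elapsed time, never beyond capacity.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        return self.tokens

    def take(self, amount: float) -> None:
        self.tokens -= amount


class VolumeThrottle:
    """Hypothetical per-volume limiter combining an IOPS cap and a bandwidth cap."""

    def __init__(self, max_iops: float, max_bandwidth_bps: float) -> None:
        self.iops = TokenBucket(rate=max_iops, capacity=max_iops)
        self.bw = TokenBucket(rate=max_bandwidth_bps, capacity=max_bandwidth_bps)

    def admit(self, nbytes: int, now: float) -> bool:
        # Check both limits before consuming, so a rejected I/O costs nothing.
        # Note: a single I/O larger than max_bandwidth_bps is never admitted here.
        if self.iops.available(now) >= 1 and self.bw.available(now) >= nbytes:
            self.iops.take(1)
            self.bw.take(nbytes)
            return True
        return False


if __name__ == "__main__":
    vol = VolumeThrottle(max_iops=2, max_bandwidth_bps=8192)
    print([vol.admit(4096, now=0.0) for _ in range(3)])  # two admitted, third throttled
    print(vol.admit(4096, now=1.0))  # tokens refilled after one second
```

With a cap of 2 IOPS and 8 KiB/s, two 4 KiB writes at t=0 are admitted, a third is held back, and capacity is restored one second later. A real storage layer would more likely queue the throttled I/O than reject it outright; returning `False` just keeps the sketch small.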

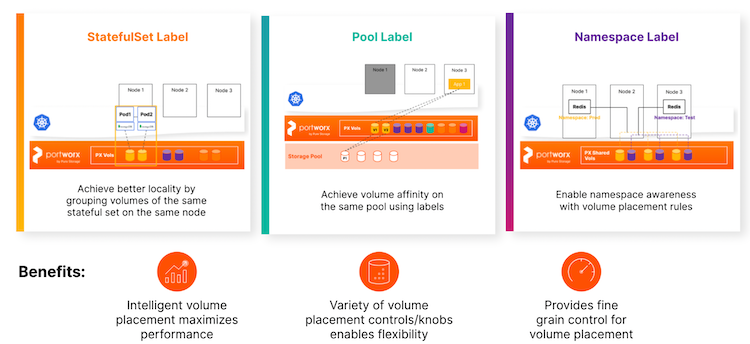

In a distributed environment, there are many ways to deploy applications, but sometimes there are specific requirements for how the underlying volumes are placed for data locality, redundancy, and dependencies relative to other applications. Volume Placement Strategy (VPS) has long been a popular feature among our customers for setting clear volume placement rules that meet their application deployment needs. The 2.10.0 release provides advanced customizations to VPS rules for the above scenarios, as outlined in the blog here.

As customers bring their mission-critical data into their Kubernetes infrastructure, they want to ensure not only that their data is secure but also that it is protected from accidental loss caused by user operations. To prevent such scenarios and help customers recover their data easily, we added a cool new feature aptly named “Volume Trashcan”. As explained in this blog, this feature lets platform owners set a policy under which deleted volumes are archived in a temporary bin for a configurable period of time before being permanently purged.
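The retention behavior described above can be pictured with a small Python model. This is a toy sketch for illustration only, not Portworx's implementation or API: the `Trashcan` class, its methods, the volume names, and the one-hour window are hypothetical, and time is passed in explicitly so the behavior is easy to follow.

```python
class Trashcan:
    """Hypothetical model of a volume trash bin with a fixed retention window."""

    def __init__(self, retention_s: float) -> None:
        self.retention_s = retention_s
        self._deleted_at: dict[str, float] = {}  # volume name -> deletion timestamp

    def delete(self, volume: str, now: float) -> None:
        # Instead of destroying the data, remember when the volume was deleted.
        self._deleted_at[volume] = now

    def restore(self, volume: str, now: float) -> bool:
        # A volume can be recovered only while it is still inside the retention window.
        deleted_at = self._deleted_at.get(volume)
        if deleted_at is None or now - deleted_at >= self.retention_s:
            return False
        del self._deleted_at[volume]
        return True

    def purge_expired(self, now: float) -> list[str]:
        # Permanently drop every volume whose retention window has elapsed.
        expired = [v for v, t in self._deleted_at.items() if now - t >= self.retention_s]
        for v in expired:
            del self._deleted_at[v]
        return expired


if __name__ == "__main__":
    bin_ = Trashcan(retention_s=3600)  # one-hour window, chosen arbitrarily
    bin_.delete("pvc-a", now=0)
    bin_.delete("pvc-b", now=100)
    print(bin_.restore("pvc-a", now=1800))  # True: still within the window
    print(bin_.purge_expired(now=3700))  # ['pvc-b']
```

Deleting a volume here only records a timestamp; the volume can be restored until its window elapses, after which `purge_expired` removes it for good.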

Additionally, we have added several enhancements requested by our customers, some of which are:

- Sharedv4 service GA: This feature, which was in early access through 2.9.x, is now generally available. It allows users to configure a Kubernetes Service endpoint for sharedv4 (RWX) volumes, simplifying failover and overall management.

- CSI 1.5 specification: Support for the CSI 1.5 spec, including volume health, Operator-based installation of the VolumeSnapshot driver, and snapshot control.

- Auto I/O profile for existing volumes: This release extends the auto I/O profile capability to existing volumes, so Portworx can detect and set the right I/O profile for the application.

- Migration enhancements: Several key migration/DR enhancements were added as part of the Stork 2.9.0 release:

  - An autosuspend option in migration schedules, so that the migration controller on the primary cluster can auto-detect applications activated on the paired DR cluster and suspend their respective migration schedules.

  - Volume/resource and data transfer information in the migration summary.

  - Faster resource migration through parallelized processing of resources.

- Daemonset-Operator migration: Customers who initially installed Portworx Enterprise using the DaemonSet method and now want to move to the Operator-based method can do so with the migration feature that will be available as a beta in Operator 1.8.0. This feature creates a ConfigMap based on the existing PX configuration and can set up the PX Operator after user approval.

Portworx Enterprise 2.10.0 supports the latest version of Kubernetes, 1.23, as well as the newest OpenShift release, 4.10.0. Portworx Enterprise 2.10.0 is now available for all customers to take advantage of these unique capabilities.

Visit the Portworx Enterprise features page to learn how you can get started.
