With modern enterprises moving to Kubernetes, more and more applications are being developed for containers. Today, 95% of new applications are developed in containers, according to 451 Research. Gartner predicts that by 2025, 85% of global businesses will be running containers in production, up from 35% in 2019. This also means that DevOps and application teams will need to coordinate an increasing number of software workflows. These workflows often consist of updates, patches, and configuration changes that are tested and carried out through a CI/CD pipeline.

However, DevOps teams must also monitor and support these applications as they run in production, often making manual changes to keep them running smoothly. Manual changes include adding physical storage or expanding an application's existing storage as it accumulates more data and sees increased load. Portworx wants data management to be a first-class citizen that is as agile as the application stack and CI/CD pipeline. This is why AutoPilot allows for automated, GitOps-integrated workflows for data management changes such as rebalancing storage pools or increasing an application's storage space if it starts running low. In this blog, we will explore these new features from AutoPilot and how they fit into the typical DevOps pipeline enterprises use today.

Understanding AutoPilot Actions

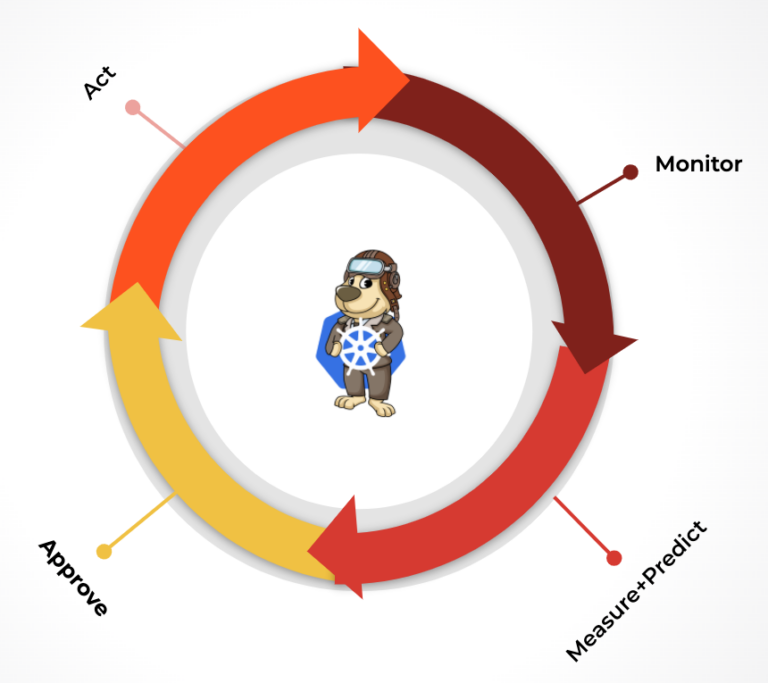

First, you should understand what AutoPilot is and why it is used. AutoPilot is a rule-based engine that responds to changes from a monitoring source. It lets you specify monitoring conditions along with the actions it should take when those conditions occur. These actions are then automated, saving valuable operations time and likely reducing downtime for applications.

The actions AutoPilot supports are:

- Resizing PVCs when they are running out of capacity

- Scaling Portworx storage pools to accommodate increasing usage

- Rebalancing volumes across Portworx storage pools when they become unbalanced

Before this latest release, a rule would watch its monitoring condition until it became true and then take action automatically. The action would also “cool down” for a configurable amount of time so that it would not keep triggering if there was a rogue application or bad actor. Even though this is very useful, not all Kubernetes users wanted actions to run automatically. They wanted actions to be “approved” by an admin or user with one click while still automating the complexity of the task. Sounds great, right? This is exactly what AutoPilot 1.3 does today, with integrations for typical DevOps tools used in GitOps pipelines.
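The trigger-then-cool-down behavior described above can be pictured with a small Python sketch. This is only an illustration and not AutoPilot's implementation: the `CooldownTrigger` class, its fields, and the 300-second window are hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CooldownTrigger:
    """Hypothetical helper: fire when a condition holds, then wait out a cool-down."""

    cooldown_seconds: float
    last_fired: Optional[float] = None

    def should_fire(self, condition_met: bool, now: float) -> bool:
        if not condition_met:
            return False
        if self.last_fired is not None and now - self.last_fired < self.cooldown_seconds:
            return False  # still cooling down, so skip this evaluation
        self.last_fired = now
        return True


trigger = CooldownTrigger(cooldown_seconds=300)
# The condition stays true at every check, as it would with a runaway application.
decisions = [trigger.should_fire(True, now=t) for t in (0, 60, 299, 300, 310)]
print(decisions)
```

Even though the condition is true at every check, the action fires only at the first check and again once the cool-down window has passed.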

Before we move forward, let’s quickly understand pool rebalancing as this is one of the newest actions that AutoPilot can automate.

Pool Rebalancing

As mentioned above, pool rebalancing is itself an AutoPilot action. It’s one of the newest actions supported by AutoPilot, so we’ll cover it on its own. Pool rebalancing allows you to rebalance Portworx storage pools automatically when they become unbalanced. So, why would you need to rebalance Portworx storage pools?

Storage pools combine drives of like size and type on Portworx nodes. Each node can have up to 32 storage pools, and pools are labeled by performance (low, medium, high) based on the IOPS and latency of the drives. Volumes can also be replicated across nodes within the same type of pool. Rebalancing comes into play when use of the storage pools over time leads to an unbalanced cluster. This can also happen when storage nodes are added to an existing cluster, which scales storage capacity horizontally. In either case, pool rebalancing takes volume replicas and balances them across the available space in other pools.

Portworx uses a metric called “Provisioned” and calculates the deviation in provisioned capacity across storage pools. You can see this by running the `pxctl cluster provision-status` command with the wide output type. Note that the examples below have been truncated to more easily show PROVISIONED (MEAN-DIFF %).

> Note: provisioned capacity is not the same as used capacity. Volumes are thinly provisioned, so this value shows only what has been provisioned, not what is in use. The USED metric is also available if you need that information.

Generally, Portworx considers pools within the +/-20% range to be balanced; when nodes deviate by more than 20% in either direction, pools are considered unbalanced. AutoPilot can detect and act on these metrics, or admins can check them and manually trigger a rebalance with the command `pxctl service pool rebalance submit`.

The cluster below represents a “balanced” cluster, with all nodes within +/-20%:

    $ pxctl cluster provision-status --output-type wide
    NODE                                   PROVISIONED (MEAN-DIFF %)
    1c218a7e-2e95-424b-bfa3-20c62ae16e9    12 GiB ( -5 % )
    8622d322-aaa0-4639-a257-fda22f4095bb   52 GiB ( +11 % )
    f39841a4-93a7-415b-9513-d142b4d62f33   10 GiB ( -6 % )

If a cluster becomes out of balance, a node would report >20% or <-20%.

    $ pxctl cluster provision-status --output-type wide
    NODE                                   PROVISIONED (MEAN-DIFF %)
    1c218a7e-2e95-424b-bfa3-20c62ae16e9b   12 GiB ( -13 % )
    8622d322-aaa0-4639-a257-fda22f4095bb   112 GiB ( +26 % )
    f39841a4-93a7-415b-9513-d142b4d62f33   10 GiB ( -14 % )
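To make the +/-20% rule concrete, here is a minimal Python sketch that reads the truncated output from the unbalanced example and flags the nodes outside the range. It assumes the simplified three-column layout shown in this post (real `pxctl` output has more columns), and the function name `unbalanced_nodes` and the regular expression are illustrative rather than part of any Portworx tool.

```python
import re

BALANCE_THRESHOLD_PERCENT = 20  # the +/-20% range described above

# Truncated sample output copied from the unbalanced cluster example.
SAMPLE_OUTPUT = """\
NODE PROVISIONED (MEAN-DIFF %)
1c218a7e-2e95-424b-bfa3-20c62ae16e9b 12 GiB ( -13 % )
8622d322-aaa0-4639-a257-fda22f4095bb 112 GiB ( +26 % )
f39841a4-93a7-415b-9513-d142b4d62f33 10 GiB ( -14 % )
"""

# Node ID first, then anything, then a signed integer percentage in parentheses.
ROW = re.compile(r"^(?P<node>\S+)\s+.*\(\s*(?P<diff>[+-]?\d+)\s*%\s*\)\s*$")


def unbalanced_nodes(output, threshold=BALANCE_THRESHOLD_PERCENT):
    """Return node IDs whose MEAN-DIFF % falls outside +/-threshold."""
    flagged = []
    for line in output.splitlines():
        match = ROW.match(line)
        if match is None:
            continue  # header or blank line
        if abs(int(match.group("diff"))) > threshold:
            flagged.append(match.group("node"))
    return flagged


print(unbalanced_nodes(SAMPLE_OUTPUT))
```

Only the node reporting +26% is flagged; the other two sit inside the balanced range.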

Check out our recent lightboard session video explaining what pool rebalancing is and how it can be used with Kubernetes.

Here is a short demo of pool rebalancing in action.

AutoPilot Approval

At this point, it should be fairly clear what the goals of AutoPilot are and what its actions can do within an AutoPilot-enabled Kubernetes cluster. However, getting back to DevOps culture and the use of CI/CD and GitOps, our users wanted ways to integrate approvals into their AutoPilot actions.

AutoPilot supports two types of approvals: approvals using kubectl and approvals using GitOps.

Approvals using kubectl

When creating AutoPilot rules, you can set the `enforcement` parameter, which tells AutoPilot how to enforce the action. In this case, you can set it to `approvalRequired`, which tells the action to wait until it has been approved before continuing. An example of a volume resize rule is shown below.

> Note that enforcement can be used with any AutoPilot rule. For example, you can create a rule for pool rebalancing, which was covered earlier in this blog, by using the `openstorage.io.action.storagepool/rebalance` action.

    apiVersion: autopilot.libopenstorage.org/v1alpha1
    kind: AutopilotRule
    metadata:
      name: postgres-volume-resize
    spec:
      #### enforcement indicates that actions from this rule need approval
      enforcement: approvalRequired
      ##### selector filters the objects affected by this rule given labels
      selector:
        matchLabels:
          app: postgres
      ##### conditions are the symptoms to evaluate. All conditions are AND'ed
      conditions:
        # trigger when volume usage is greater than 30%
        expressions:
        - key: "100 * (px_volume_usage_bytes / px_volume_capacity_bytes)"
          operator: Gt
          values:
            - "30"
      ##### action to perform when condition is true
      actions:
      - name: openstorage.io.action.volume/resize
        params:
          # resize volume by scalepercentage of current size
          scalepercentage: "100"
          # volume capacity should not exceed 400GiB
          maxsize: "400Gi"
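As a worked example of the numbers in this rule, the Python sketch below evaluates the usage expression and computes the size a resize would request. It rests on two assumptions that the rule itself does not spell out: that a `scalepercentage` of 100 grows the volume by 100% of its current size, and that a result above `maxsize` is clamped to `maxsize`. The function names are hypothetical.

```python
GIB = 1024 ** 3


def usage_percent(usage_bytes, capacity_bytes):
    """Mirror the rule's key: 100 * (px_volume_usage_bytes / px_volume_capacity_bytes)."""
    return 100 * (usage_bytes / capacity_bytes)


def next_size_bytes(capacity_bytes, scale_percentage, max_size_bytes):
    """Grow by scale_percentage of the current size (assumed), clamped to max size (assumed)."""
    grown = capacity_bytes + capacity_bytes * scale_percentage // 100
    return min(grown, max_size_bytes)


# A 100 GiB volume with 35 GiB used is above the 30% threshold (operator Gt).
triggered = usage_percent(35 * GIB, 100 * GIB) > 30
print(triggered)

# scalepercentage "100" doubles the 100 GiB volume to 200 GiB.
print(next_size_bytes(100 * GIB, 100, 400 * GIB) // GIB)

# A 300 GiB volume would double to 600 GiB, so the 400Gi cap applies instead.
print(next_size_bytes(300 * GIB, 100, 400 * GIB) // GIB)
```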

Note, when this rule is triggered, it will not resize the volume until it is approved. Approvals are also represented as Kubernetes objects so you can use `kubectl get actionapproval` to see the current state.

    $ kubectl get actionapproval -n pg
    NAME                                                 APPROVAL-STATE
    postgres-volume-resize-pvc-8eb0c590-de7d-4a06-91f7   pending

Another way to view the approval status is with `kubectl get events`. Simply filter for the AutoPilot rule to get the current state. You should see the rule in the ActionAwaitingApproval state.

    $ kubectl get events --field-selector involvedObject.kind=AutopilotRule,involvedObject.name=postgres-volume-resize -w
    LAST SEEN   TYPE     REASON       OBJECT                                 MESSAGE
    31s         Normal   Transition   autopilotrule/postgres-volume-resize   rule: postgres-volume-resize:pvc-8eb0c590-de7d-4a06-91f7-1fde15d6647c transition from Normal => Triggered
    0s          Normal   Transition   autopilotrule/postgres-volume-resize   rule: postgres-volume-resize:pvc-8eb0c590-de7d-4a06-91f7-1fde15d6647c transition from Triggered => ActionAwaitingApproval

Next, a user or admin who has permissions to access the action approval can patch the approval using kubectl.

kubectl patch actionapproval -n pg <name> --type=merge -p '{"spec":{"approvalState":"approved"}}'
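If several approvals are waiting, the same patch can be prepared for each one. The Python sketch below is a hedged illustration: it parses the two-column `kubectl get actionapproval` output shown earlier, picks out the pending entries, and builds the matching `kubectl patch` command for each without running anything. The helper names `pending_approvals` and `approve_command` are made up for this example.

```python
import json
import shlex

# Sample output copied from the `kubectl get actionapproval -n pg` example.
SAMPLE = """\
NAME APPROVAL-STATE
postgres-volume-resize-pvc-8eb0c590-de7d-4a06-91f7 pending
"""


def pending_approvals(output):
    """Return the names of approvals whose APPROVAL-STATE column is 'pending'."""
    names = []
    for line in output.splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) == 2 and fields[1] == "pending":
            names.append(fields[0])
    return names


def approve_command(name, namespace):
    """Build (but do not run) the kubectl patch command from this post."""
    patch = json.dumps({"spec": {"approvalState": "approved"}}, separators=(",", ":"))
    return ["kubectl", "patch", "actionapproval", "-n", namespace, name,
            "--type=merge", "-p", patch]


for approval in pending_approvals(SAMPLE):
    print(shlex.join(approve_command(approval, "pg")))
```

Printing the commands first, rather than executing them, keeps a human in the loop, which is the point of requiring approval.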

Then the events should continue showing that the volume was resized.

    6s          Normal   Transition   autopilotrule/postgres-volume-resize   rule: postgres-volume-resize:pvc-8eb0c590-de7d-4a06-91f7-1fde15d6647c transition from ActionAwaitingApproval => ActiveActionsPending
    0s          Normal   Transition   autopilotrule/postgres-volume-resize   rule: postgres-volume-resize:pvc-8eb0c590-de7d-4a06-91f7-1fde15d6647c transition from ActiveActionsPending => ActiveActionsInProgress
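The transitions in these events can be read as a small state machine in which approval is the gate between waiting and acting. The Python sketch below models only the transitions that appear in the event output in this post; it is not AutoPilot's internal logic, other states and transitions may exist, and the `advance` function is a hypothetical name.

```python
from enum import Enum


class RuleState(Enum):
    NORMAL = "Normal"
    TRIGGERED = "Triggered"
    ACTION_AWAITING_APPROVAL = "ActionAwaitingApproval"
    ACTIVE_ACTIONS_PENDING = "ActiveActionsPending"
    ACTIVE_ACTIONS_IN_PROGRESS = "ActiveActionsInProgress"


# Only the transitions observed in the events shown in this post.
OBSERVED_TRANSITIONS = {
    RuleState.NORMAL: RuleState.TRIGGERED,
    RuleState.TRIGGERED: RuleState.ACTION_AWAITING_APPROVAL,
    RuleState.ACTION_AWAITING_APPROVAL: RuleState.ACTIVE_ACTIONS_PENDING,
    RuleState.ACTIVE_ACTIONS_PENDING: RuleState.ACTIVE_ACTIONS_IN_PROGRESS,
}


def advance(state, approved):
    """Move to the next observed state; stay put while approval is outstanding."""
    if state is RuleState.ACTION_AWAITING_APPROVAL and not approved:
        return state
    return OBSERVED_TRANSITIONS.get(state, state)


state = RuleState.NORMAL
state = advance(state, approved=False)  # condition met
state = advance(state, approved=False)  # rule asks for approval
state = advance(state, approved=False)  # nothing happens until someone approves
waiting_state = state
state = advance(state, approved=True)   # approval patched in
state = advance(state, approved=True)
print(waiting_state.value, "->", state.value)
```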

Check out this short demo of using AutoPilot Action Approvals with kubectl.

Approvals using GitOps

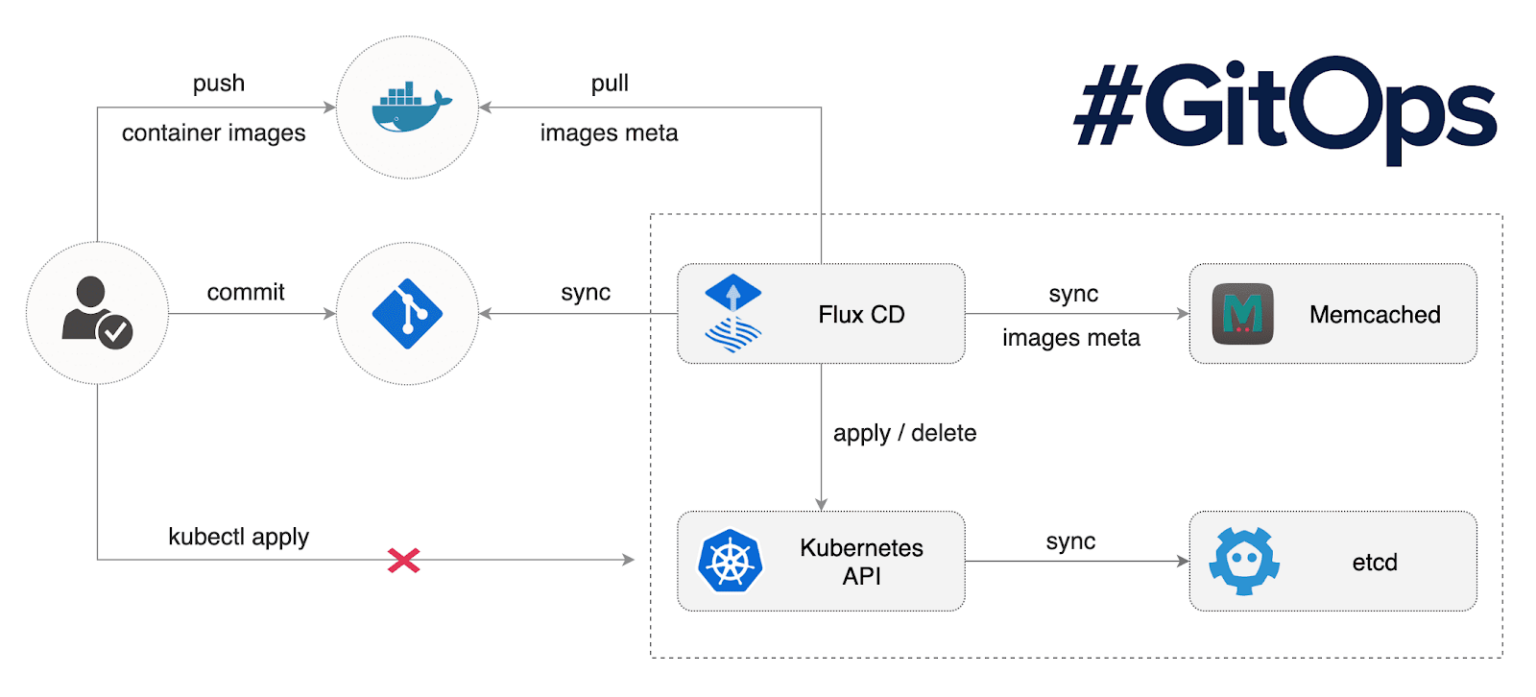

The second option is to use GitOps integrated with AutoPilot action approvals. To do this, Portworx can use Flux as a GitOps tool to enable this type of approval directly with GitHub.

Image Credit – https://fluxcd.io/

The required steps for enabling AutoPilot for GitOps approvals are:

- Set up GitOps in your cluster using Flux

- Configure AutoPilot with access to the GitHub repository used for GitOps

- Create an AutopilotRule with approvals enabled

- Approve or decline the actions by merging or closing GitHub PRs, respectively

Once your Portworx/Kubernetes cluster has been set up with Flux for GitOps, the steps for AutoPilot rules are very similar to the kubectl method. First, create a rule with `enforcement` set to `approvalRequired`.

> Note that, as with the kubectl method, enforcement can be used with any AutoPilot rule, including pool rebalancing rules that use the `openstorage.io.action.storagepool/rebalance` action.

    apiVersion: autopilot.libopenstorage.org/v1alpha1
    kind: AutopilotRule
    metadata:
      name: postgresql-volume-resize
    spec:
      #### enforcement indicates that actions from this rule need approval
      enforcement: approvalRequired
      ##### selector filters the objects affected by this rule given labels
      selector:
        matchLabels:
          app: postgres
      ##### conditions are the symptoms to evaluate. All conditions are AND'ed
      conditions:
        # trigger when volume usage is greater than 30%
        expressions:
        - key: "100 * (px_volume_usage_bytes / px_volume_capacity_bytes)"
          operator: Gt
          values:
            - "30"
      ##### action to perform when condition is true
      actions:
      - name: openstorage.io.action.volume/resize
        params:
          # resize volume by scalepercentage of current size
          scalepercentage: "100"
          # volume capacity should not exceed 400GiB
          maxsize: "400Gi"

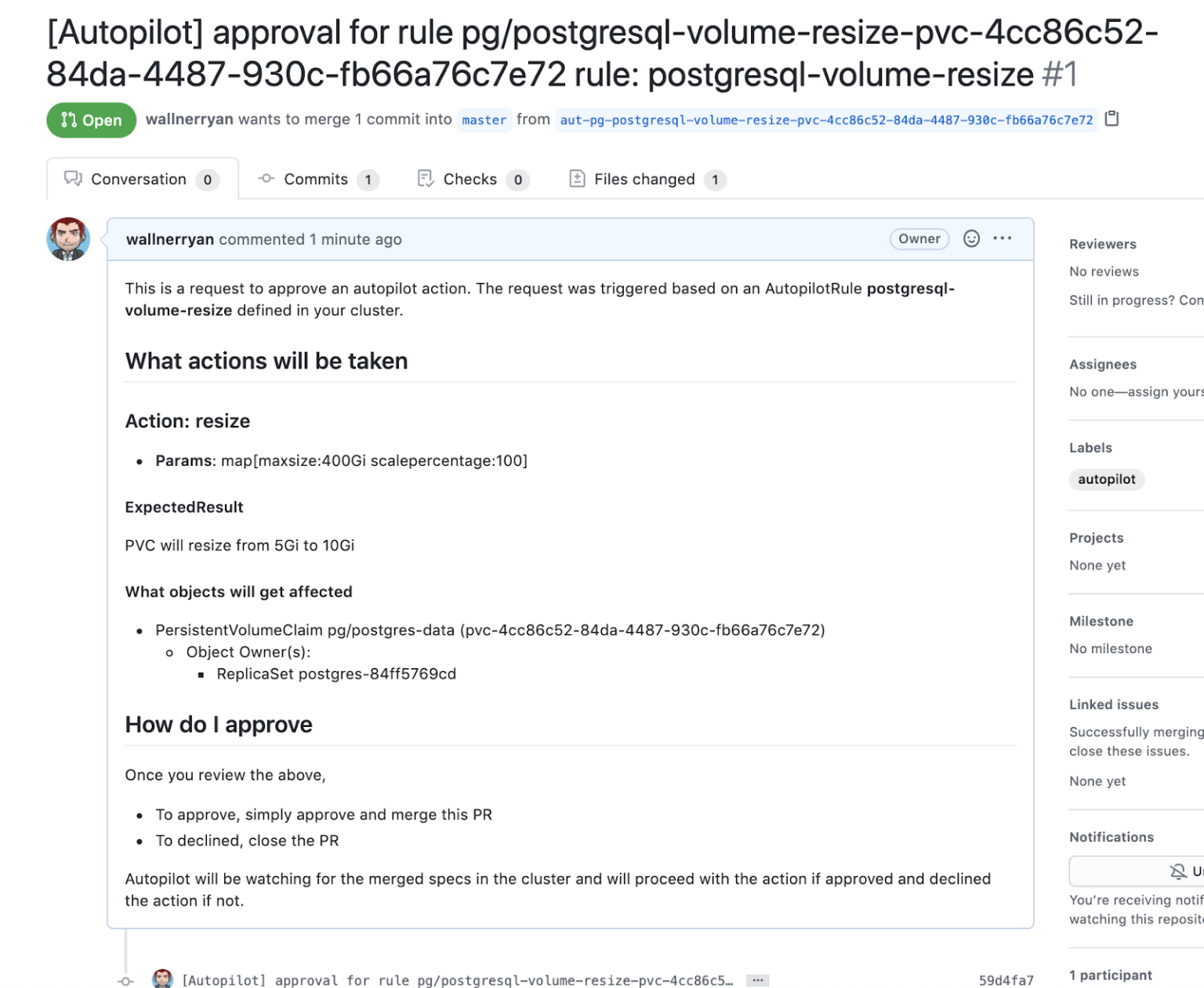

Next, when your rule is triggered, the actionapproval will become pending, just as it does with the kubectl method. The difference is that there should also be a Pull Request (PR) in your GitHub repository, and an authorized GitHub user can approve the action simply by merging that PR.
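The mapping between PR outcomes and approval decisions can be summarized in a few lines of Python. This is a sketch of the idea only: the `PullRequest` type is a stand-in whose `state` and `merged` fields mirror how GitHub reports a pull request, and the returned strings are illustrative labels, not values taken from the AutoPilot API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PullRequest:
    """Hypothetical stand-in for the PR that AutoPilot opens for an action."""

    state: str    # "open" or "closed"
    merged: bool


def approval_decision(pr):
    """Merged means approved, closed without merging means declined, otherwise wait."""
    if pr.merged:
        return "approved"
    if pr.state == "closed":
        return "declined"
    return "pending"


print(approval_decision(PullRequest(state="open", merged=False)))
print(approval_decision(PullRequest(state="closed", merged=True)))
print(approval_decision(PullRequest(state="closed", merged=False)))
```

A merged PR is also reported as closed, which is why the merged check comes first.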

Once the PR is merged, your rule should continue and carry out the action. Check out this short demo of GitHub action approvals below.

Conclusion

DevOps teams running Kubernetes and Portworx are closely tied to CI/CD and GitOps pipelines. Infrastructure is increasingly defined and managed through these workflows with GitOps tools like Flux. We believe that the data services layer for Kubernetes should be no different, and that changes to this layer should also be integrated with GitOps. AutoPilot allows for GitOps-integrated workflows for data-related changes such as increasing the storage space of an application’s PVCs or rebalancing the pools in a Portworx cluster.

Ryan Wallner

Portworx | Technical Marketing Manager