Automate storage capacity management for VMware Tanzu Kubernetes clusters using PX-Autopilot

According to the State of Kubernetes 2021 report from VMware, Kubernetes continues to gain momentum across all industries, with 65% of organizations running Kubernetes in production. If we look at organizations with more than 500 developers, that number jumps to 78%. This is more than 250% growth compared to the same statistic from the 2018 report. Organizations that run Kubernetes in production are seeing a number of benefits, and 58% of them list improved resource utilization as the top benefit. This is great, but there are still a few areas where we can add value and improve resource utilization from a Kubernetes storage perspective.

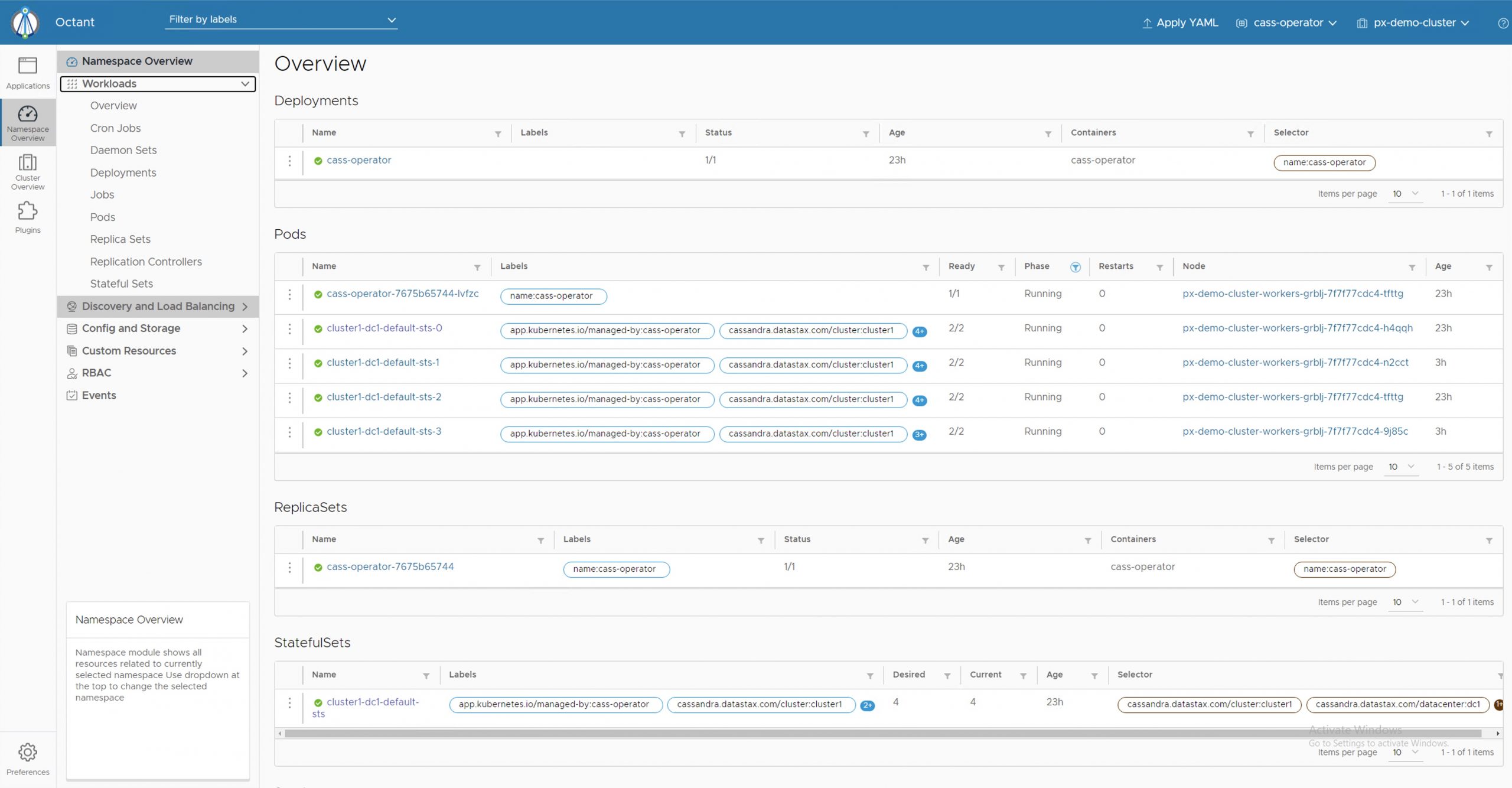

If you are a virtualization or storage administrator responsible for applications running in production, you rely on different tools and alerts to monitor virtual machine storage capacity utilization. Based on these alerts, you follow manual or automated steps to add more storage capacity to your VMs: adding VMDKs to the VM in question, expanding its existing VMDKs, or expanding the LUN on your storage array so that your vSphere datastore has enough available capacity. But with the increased use of Kubernetes in production, you need a solution that automates storage capacity management for your container-based applications as well. This is where PX-Autopilot can make your life easier. Autopilot is a rule-based engine that responds to changes from a monitoring source. It lets you specify monitoring conditions as well as the actions it should take when those conditions are met.

In this blog, we will discuss how you can leverage PX-Autopilot to perform the following actions for your VMware Tanzu Kubernetes clusters:

- Automatically grow Persistent Volume Claims (PVCs) for your stateful applications running on VMware Tanzu Kubernetes clusters

- Automatically expand Portworx storage pools for your VMware Tanzu Kubernetes clusters

Autopilot also supports additional use cases, like expanding every Portworx storage pool in your cluster and automatically rebalancing Portworx storage pools as well.

Automatically grow PVCs for your stateful applications running on VMware Tanzu Kubernetes clusters

One of the key responsibilities when running distributed databases on Kubernetes is ensuring persistence. Kubernetes is adept at dynamically provisioning persistent volumes for applications using storage classes, but to keep your databases online, you need to ensure that their persistent volumes always have enough free capacity. If a persistent volume fills up, your database can no longer write new data, and your application goes offline. To help you avoid such a scenario, Portworx allows you to create Autopilot rules. These rules define conditions and the actions that Autopilot takes when those conditions are met. Let’s look at a simple Autopilot rule definition that automatically resizes your persistent volumes.

    apiVersion: autopilot.libopenstorage.org/v1alpha1
    kind: AutopilotRule
    metadata:
      name: volume-resize
    spec:
      ##### selector filters the objects affected by this rule given labels
      selector:
        matchLabels:
          app: postgres
      ##### namespaceSelector selects the namespaces of the objects affected by this rule
      namespaceSelector:
        matchLabels:
          type: db
      ##### conditions are the symptoms to evaluate. All conditions are AND'ed
      conditions:
        # volume usage should be less than 50%
        expressions:
          - key: "100 * (px_volume_usage_bytes / px_volume_capacity_bytes)"
            operator: Gt
            values:
              - "50"
      ##### action to perform when condition is true
      actions:
        - name: openstorage.io.action.volume/resize
          params:
            # resize volume by scalepercentage of current size
            scalepercentage: "100"
            # volume capacity should not exceed 400GiB
            maxsize: "400Gi"

In the above example, we have specified two selectors: one for PVCs and one for Kubernetes namespaces. This rule applies to all PVCs that have the label “app: postgres” in namespaces that have the label “type: db”. You can use these selectors individually or together. Each Autopilot rule has a “conditions” section and an “actions” section. In the conditions section, you specify the trigger condition, and in the actions section, you specify the steps Portworx needs to take to ensure application uptime. In our example, the trigger condition is the persistent volume going above 50% utilization, and the action is to grow the persistent volume by 100% of its current size, up to a maximum volume size of 400 GiB.
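To make the selectors concrete, here is a minimal sketch of a namespace and a PVC that the rule above would match. The names `postgres-db`, `postgres-data`, and `px-expandable-sc` are hypothetical and chosen only for illustration; the `type: db` and `app: postgres` labels are the ones the rule selects on.

```yaml
# Hypothetical namespace; the "type: db" label is what namespaceSelector matches
apiVersion: v1
kind: Namespace
metadata:
  name: postgres-db
  labels:
    type: db
---
# Hypothetical PVC; the "app: postgres" label is what selector matches
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: postgres-data
  namespace: postgres-db
  labels:
    app: postgres
spec:
  # Assumed name of a Portworx storage class that allows volume expansion
  storageClassName: px-expandable-sc
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 50Gi
```

With these values, each time usage crosses 50% the volume would double: 50 GiB to 100 GiB, then 200 GiB, then 400 GiB, at which point the “maxsize” ceiling prevents further growth.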

As the storage or virtualization admin, you get full control over defining these conditions and actions. You can choose a higher utilization percentage and choose to only scale the persistent volume by 50% instead of doubling it every time it meets the condition. The “maxsize” parameter allows you to specify a ceiling for your persistent volumes to ensure that a single application doesn’t consume all the available storage capacity. You can deploy individual Autopilot rules for each application or use a blanket Autopilot rule for all the applications running on your Tanzu Kubernetes cluster. The only thing to keep in mind when using volume resize for your persistent volumes is to use a Portworx storage class with allowVolumeExpansion set to true.
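For reference, a storage class that satisfies this requirement could look like the sketch below. The class name `px-expandable-sc` and the replication factor are hypothetical, and the provisioner shown assumes Portworx is installed with its CSI driver; substitute the provisioner your cluster actually uses.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: px-expandable-sc        # hypothetical name
# Assumption: Portworx CSI provisioner; check which provisioner your install uses
provisioner: pxd.portworx.com
parameters:
  repl: "2"                     # assumed replication factor, adjust as needed
# Required so that PVCs from this class can be resized by Autopilot
allowVolumeExpansion: true
```

PVCs created from a class without `allowVolumeExpansion: true` cannot be resized through Kubernetes, so it is worth checking this setting before relying on a resize rule.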

Automatically expand Portworx storage pools for your VMware Tanzu Kubernetes clusters

Now that you have seen how you can expand your persistent volumes, let’s look at the next logical step in automated storage capacity management: automatically expanding the Portworx storage pools that provide persistent storage for your VMware Tanzu Kubernetes clusters. This Autopilot feature comes in handy when your Portworx storage pool is nearing capacity and you need to add more capacity automatically, so that your applications stay online and your Kubernetes cluster can deploy more applications.

Portworx works with Tanzu Kubernetes clusters by automatically provisioning VMDK files on your vSphere datastore, mounting those VMDKs to your Tanzu Kubernetes cluster worker nodes, and aggregating those virtual disks into storage pools that provide persistent storage to your applications running on Tanzu Kubernetes clusters. Let’s look at an example Autopilot rule that helps you automatically expand your storage pools.

    apiVersion: autopilot.libopenstorage.org/v1alpha1
    kind: AutopilotRule
    metadata:
      name: pool-expand
    spec:
      enforcement: required
      ##### conditions are the symptoms to evaluate. All conditions are AND'ed
      conditions:
        expressions:
          # pool available capacity less than 50%
          - key: "100 * ( px_pool_stats_available_bytes/ px_pool_stats_total_bytes)"
            operator: Lt
            values:
              - "50"
          # pool total capacity should not exceed 400GiB
          - key: "px_pool_stats_total_bytes/(1024*1024*1024)"
            operator: Lt
            values:
              - "400"
      ##### action to perform when condition is true
      actions:
        - name: "openstorage.io.action.storagepool/expand"
          params:
            # resize pool by scalepercentage of current size
            scalepercentage: "100"
            # when scaling, add disks to the pool
            scaletype: "add-disk"

This rule checks whether the available capacity of your Portworx storage pool is less than 50% and whether the pool’s total capacity is still below 400 GiB. Whenever both conditions are met, it automatically scales your storage pool by 100%. Under the covers, Portworx provisions an additional virtual disk, mounts it to the Tanzu Kubernetes cluster worker node that hosts the storage pool, and expands the Portworx storage pool. The second condition is how you, as an admin, cap the size of your storage pool: in this case, Autopilot no longer expands a pool once its total capacity reaches 400 GiB.

Both storage capacity management operations discussed above are completely automated and need no admin intervention, eliminating the need for a ticketing system. This is crucial when you want to start small and scale your infrastructure resources with your workloads. But if this feels too automated and you are not ready for Autopilot to take over your storage capacity management, you can use the action approvals feature in Autopilot to put an approval workflow in place, either through kubectl or by integrating it with your GitOps workflows. With action approvals, Autopilot still monitors for your conditions, but instead of performing the actions automatically when the conditions are met, it adds a step where the admin needs to approve each Autopilot request before the action is taken.
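As a rough sketch of what opting in to approvals can look like: as we understand the Autopilot documentation, a rule enables approvals through its `enforcement` field, and each pending action is surfaced as an `ActionApproval` object that an admin marks as approved. The `approvalRequired` value, the `ActionApproval` kind, and the `approvalState` field are assumptions that do not appear in the rules above, and the object name and namespace are hypothetical placeholders, so verify all of them against the documentation for your Portworx version.

```yaml
# The volume-resize rule from earlier, with approvals switched on
apiVersion: autopilot.libopenstorage.org/v1alpha1
kind: AutopilotRule
metadata:
  name: volume-resize
spec:
  # Assumed value; the pool-expand rule above uses "required"
  enforcement: approvalRequired
  selector:
    matchLabels:
      app: postgres
  namespaceSelector:
    matchLabels:
      type: db
  conditions:
    expressions:
      - key: "100 * (px_volume_usage_bytes / px_volume_capacity_bytes)"
        operator: Gt
        values:
          - "50"
  actions:
    - name: openstorage.io.action.volume/resize
      params:
        scalepercentage: "100"
        maxsize: "400Gi"
---
# Assumed shape of the approval object an admin (or a GitOps pipeline) applies
apiVersion: autopilot.libopenstorage.org/v1alpha1
kind: ActionApproval
metadata:
  name: example-approval      # placeholder; Autopilot generates the real name
  namespace: example-namespace  # placeholder namespace
spec:
  approvalState: approved
```

Keeping the approval as a declarative object is what makes the GitOps integration possible: the approved state can be committed to a repository and applied by the same pipeline that manages the rest of the cluster.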

You can use both capacity management Autopilot rules discussed in this blog (volume expansion and storage pool expansion) individually, or you can use them together, as demonstrated in the video below. Using them together allows you to streamline your capacity management workflow for your production environments while still enforcing upper limits to your persistent volumes and your storage pool configurations.


Bhavin Shah

Sr. Technical Marketing Manager | Cloud Native BU, Pure Storage
