My colleague Gou blogged about why Docker’s recent support for volume plugins has been fantastic for Portworx. I want to take it one step further and do a deep dive on what happens under the hood.

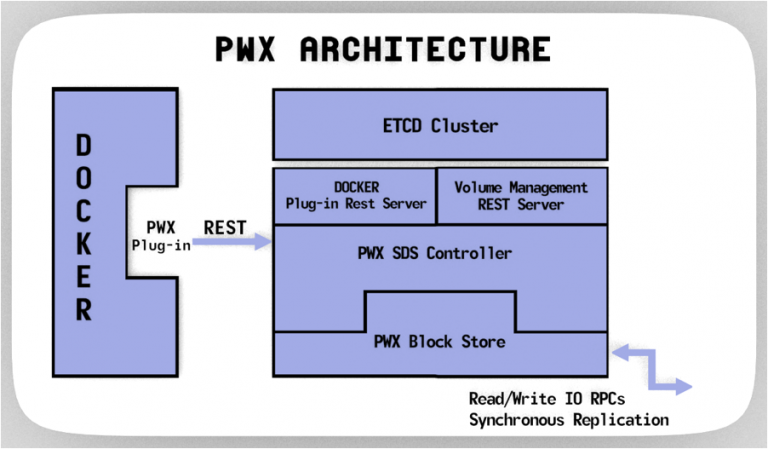

PORTWORX HIGH LEVEL ARCHITECTURE

The Portworx stack consists of three pieces:

- PXC is a container-aware, distributed, scale-out block storage implementation. In addition to providing container-granular storage operations such as clones and snapshots, PXC provides synchronous replication of storage blocks across a configured replication set. Another important feature is that, although data is replicated across only a subset of nodes in the cluster, every node in the cluster can access the data. These two features play a key role in ensuring high availability of Docker volumes.

- The PXC orchestrator is responsible for clustered storage provisioning. The guts of software-defined storage are provided at this layer. The PXC orchestrator understands the physical storage makeup of each node and knows about all the provisioned volumes in the cluster as well as their container mappings. This information is stored and propagated across the cluster via an etcd cluster. Based on a volume specification and the availability of resources, the PXC orchestrator determines a replication set; data will be mirrored across this replication set.

- The PWX management layer provides a REST interface to provision and manage volumes. The PWX CLI interacts with this layer. This layer also implements the Docker plugin interface. The Docker plugin server relies on the same interfaces as the CLI-facing REST implementation.
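To make the plugin side of the management layer more concrete, here is a minimal sketch of a request dispatcher for Docker's volume plugin protocol. The endpoint names and JSON fields (`/Plugin.Activate`, `/VolumeDriver.Create`, `Mountpoint`, `Err`, and so on) come from Docker's plugin API; the `VolumePlugin` class, its in-memory volume table, and the mount root are hypothetical stand-ins and are not taken from the PWX implementation.

```python
class VolumePlugin:
    """Toy dispatcher for Docker volume plugin requests (illustrative only)."""

    def __init__(self, mount_root="/var/lib/example-plugin/mounts"):
        # Hypothetical mount root; a real plugin would mount a block device here.
        self.mount_root = mount_root
        self.volumes = {}  # volume name -> creation options

    def handle(self, path, body):
        """Handle one request; `body` is the already-decoded JSON payload."""
        if path == "/Plugin.Activate":
            # Handshake: tell Docker which plugin interfaces we implement.
            return {"Implements": ["VolumeDriver"]}

        name = body.get("Name", "")
        if path == "/VolumeDriver.Create":
            self.volumes.setdefault(name, body.get("Opts") or {})
            return {"Err": ""}
        if path == "/VolumeDriver.Remove":
            self.volumes.pop(name, None)
            return {"Err": ""}
        if path in ("/VolumeDriver.Mount", "/VolumeDriver.Path"):
            if name not in self.volumes:
                return {"Err": f"volume {name!r} not found"}
            return {"Mountpoint": f"{self.mount_root}/{name}", "Err": ""}
        if path == "/VolumeDriver.Unmount":
            if name not in self.volumes:
                return {"Err": f"volume {name!r} not found"}
            return {"Err": ""}
        return {"Err": f"unsupported endpoint {path}"}
```

In a real plugin, each branch would call into the same provisioning and attach interfaces that serve the CLI-facing REST API, which is why both front ends can share one implementation.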

DOCKER VOLUME PLUGINS IN ACTION

To demonstrate volume plugins, we first provision a volume on PXC. Since PXC exports a block device, it is possible to specify a file system in the volume spec. We choose “ext4” in this example and a replication factor of two. The provisioned volume is assigned a cluster-wide unique ID. We then inspect the volume configuration and note that the replication set indeed has two machines.
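As a rough illustration of how a volume spec with a replication factor could be turned into a replication set, consider the sketch below. The `VolumeSpec` fields, the node names, and the "most free capacity first" policy are all assumptions made for this example; the actual placement logic in the PXC orchestrator is not described here.

```python
from dataclasses import dataclass


@dataclass
class VolumeSpec:
    size_gb: int
    fs: str = "ext4"             # file system to lay down on the block device
    replication_factor: int = 2  # number of nodes that hold a copy


def choose_replication_set(spec, free_gb_by_node):
    """Pick `replication_factor` nodes that can hold the volume.

    Assumed policy: prefer nodes with the most free capacity,
    breaking ties by node name so the result is deterministic.
    """
    candidates = [n for n, free in free_gb_by_node.items() if free >= spec.size_gb]
    if len(candidates) < spec.replication_factor:
        raise ValueError("not enough nodes with free capacity")
    candidates.sort(key=lambda n: (-free_gb_by_node[n], n))
    return candidates[: spec.replication_factor]


spec = VolumeSpec(size_gb=10)
replication_set = choose_replication_set(
    spec, {"node-a": 50, "node-b": 5, "node-c": 80}
)
print(replication_set)
```

With a replication factor of two, the returned set has exactly two machines, matching what the inspected volume configuration reports.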

We then start a MySQL container, designating PXC as the storage provider, and verify that when the container starts up, its storage is indeed provided by PXC.
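A sketch of what such a container launch might look like, expressed as the argument list for `docker run`. The `--volume-driver` and `-v` flags are standard Docker options; the driver name `pxd`, the placeholder volume ID, and the example password are assumptions for illustration only.

```python
def mysql_run_command(volume_id, driver="pxd"):
    """Build a `docker run` argv that backs MySQL's data directory with a plugin volume.

    `driver` is the volume plugin name as registered with Docker (assumed here).
    """
    return [
        "docker", "run", "-d",
        "--volume-driver", driver,           # route volume requests to the plugin
        "-v", f"{volume_id}:/var/lib/mysql",  # mount the volume at MySQL's data dir
        "-e", "MYSQL_ROOT_PASSWORD=example",  # placeholder credential
        "mysql",
    ]


cmd = mysql_run_command("VOLUME_ID")  # substitute the ID returned at provisioning time
print(" ".join(cmd))
```

The list can be passed to `subprocess.run(cmd, check=True)` on a host where Docker and the plugin are installed.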

At this point all writes to the volume are handled by PXC and synchronously replicated to the other node in the replication set. In the event of a failure, when a container scheduler such as Swarm stands up the container on a different node in the cluster, Docker uses the volume plugin API to talk to the PWX stack running on the new node and mounts the same volume. It is not necessary for the container to be scheduled on a node that holds the replicated data; PXC supports remote data access. A complete example will show a real application built with Compose, scheduled with Swarm, and continuing seamlessly in the event of a failure. That will be the subject of a live demo!
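The failure scenario above can be modeled with a small in-memory simulation. This is only a sketch under stated assumptions: the `Cluster` class, its methods, and the choice to keep accepting writes on the surviving replicas are hypothetical, and real block-level replication is far more involved.

```python
class Cluster:
    """Toy model of synchronous replication with remote data access."""

    def __init__(self, nodes):
        self.up = set(nodes)
        self.replicas = {}  # volume -> {replica node -> {block number -> data}}

    def provision(self, volume, replication_set):
        self.replicas[volume] = {node: {} for node in replication_set}

    def fail(self, node):
        self.up.discard(node)

    def write(self, volume, block_no, data):
        # Synchronous: the write returns only after every live replica has the block.
        live = [n for n in self.replicas[volume] if n in self.up]
        if not live:
            raise IOError("no live replica for volume")
        for node in live:
            self.replicas[volume][node][block_no] = data

    def read(self, volume, block_no, from_node):
        # Any live node may read, even one that holds no replica (remote access).
        if from_node not in self.up:
            raise IOError(f"{from_node} is down")
        for node, blocks in self.replicas[volume].items():
            if node in self.up:
                return blocks[block_no]
        raise IOError("no live replica for volume")


cluster = Cluster(["node-a", "node-b", "node-c"])
cluster.provision("mysql-data", ["node-a", "node-b"])
cluster.write("mysql-data", 0, b"row 1")

cluster.fail("node-a")  # the node running the container goes down
recovered = cluster.read("mysql-data", 0, from_node="node-c")
print(recovered)
```

Here the container is rescheduled onto `node-c`, which holds no replica, yet it still reads the data written before the failure because the surviving replica on `node-b` serves it remotely.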
