This post is part of our ongoing series on running Cassandra on Kubernetes. We’ve published a number of articles about running Cassandra on Kubernetes for specific platforms and for specific use cases. If you are looking for a specific Kubernetes platform, check out these related articles.

Running HA Cassandra on Amazon Elastic Container Service for Kubernetes (EKS)

Running HA Cassandra on Google Kubernetes Engine (GKE)

Running HA Cassandra on Red Hat OpenShift

Running HA Cassandra on Azure Kubernetes Service (AKS)

Running HA Cassandra on IBM Cloud Kubernetes Service (IKS)

Running HA Cassandra on IBM Cloud Private

And now, onto the post…

Rancher Kubernetes Engine (RKE) is a lightweight Kubernetes installer that supports installation on bare-metal and virtualized servers. RKE solves a common issue in the Kubernetes community: installation complexity. With RKE, Kubernetes installation is simplified, regardless of what operating systems and platforms you’re running.

Portworx is a cloud native storage platform for running persistent workloads deployed on a variety of orchestration engines, including Kubernetes. With Portworx, customers can manage the database of their choice on any infrastructure using any container scheduler. It provides a single data management layer for all stateful services, no matter where they run.

This tutorial is a walk-through of the steps involved in deploying and managing a highly available Cassandra NoSQL database on a Kubernetes cluster deployed in AWS through RKE.

In summary, to run HA Cassandra on AWS with RKE you need to:

- Install a Kubernetes cluster through Rancher Kubernetes Engine

- Install a cloud native storage solution like Portworx as a DaemonSet on Kubernetes

- Create a storage class defining your storage requirements like replication factor, snapshot policy, and performance profile

- Deploy Cassandra as a StatefulSet on Kubernetes

- Test failover by killing or cordoning nodes in your cluster

- Optional – Take an app consistent snapshot of Cassandra

- Optional – Bootstrap a new Cassandra cluster from a snapshot backup

How to set up a Kubernetes Cluster with RKE

RKE is a tool to install and configure Kubernetes in a choice of environments including bare-metal, virtual machines, and IaaS. For this tutorial, we will be launching a 3-node Kubernetes cluster in Amazon EC2.

For a detailed step-by-step guide, please refer to this tutorial from The New Stack.

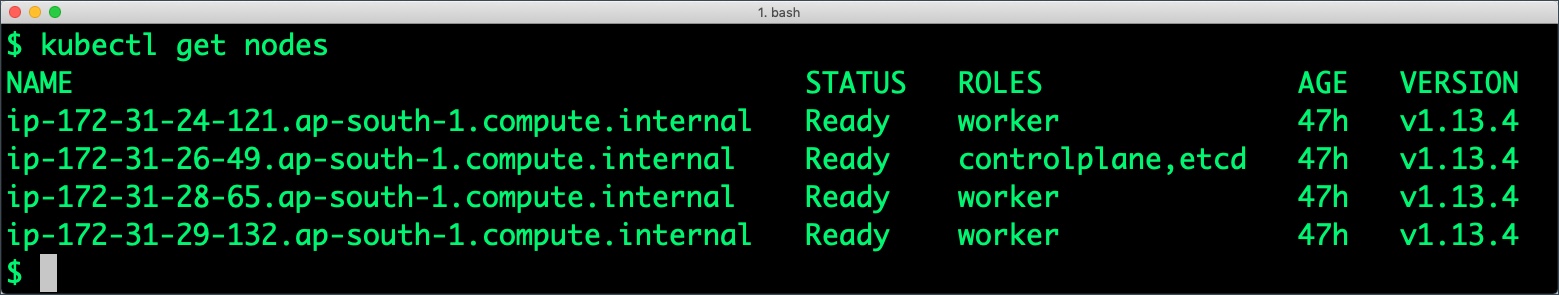

By the end of this step, you should have a cluster with one master and three worker nodes.

Installing Portworx in Kubernetes

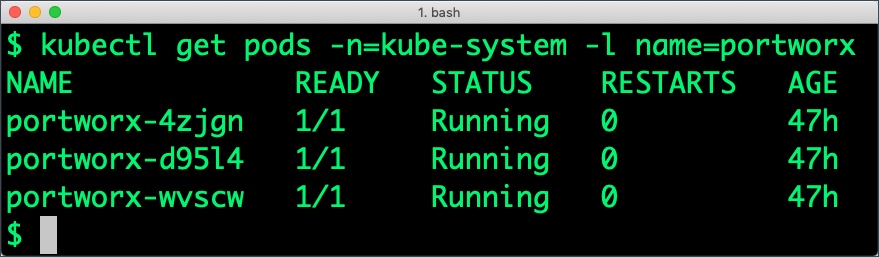

Installing Portworx on RKE-based Kubernetes is no different from installing it on a Kubernetes cluster set up through kops. The Portworx documentation has the steps involved in running the Portworx cluster in a Kubernetes environment deployed in AWS.

The New Stack tutorial mentioned in the previous section also covers all the steps to deploy the Portworx DaemonSet in Kubernetes.

Once the Kubernetes cluster is up and running, and Portworx is installed and configured, we will deploy a highly available Cassandra NoSQL database as a StatefulSet.

Creating a storage class for Cassandra

Through storage class objects, an admin can define different classes of Portworx volumes that are offered in a cluster. These classes will be used during the dynamic provisioning of volumes. The storage class defines the replication factor, I/O profile (e.g., for a database or a CMS), and priority (e.g., SSD or HDD). These parameters impact the availability and throughput of workloads and can be specified for each volume. This is important because a production database will have different requirements than a development Jenkins cluster.

In this example, the storage class that we deploy has a replication factor of 3, with the I/O profile set to “db_remote” and the priority set to “high.” This means that the storage will be optimized for low latency database workloads like Cassandra and automatically placed on the highest performance storage available in the cluster.

$ cat > px-cassandra-sc.yaml << EOF
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: px-storageclass
provisioner: kubernetes.io/portworx-volume
parameters:
  repl: "3"
  io_profile: "db_remote"
  priority_io: "high"
  fg: "true"
EOF

Create the storage class and verify that it’s available in the cluster.

$ kubectl create -f px-cassandra-sc.yaml
storageclass.storage.k8s.io "px-storageclass" created

$ kubectl get sc
NAME                PROVISIONER                     AGE
px-storageclass     kubernetes.io/portworx-volume   24s
stork-snapshot-sc   stork-snapshot                  27m

Deploying Cassandra StatefulSet on Kubernetes

Finally, let’s create a Cassandra cluster as a Kubernetes StatefulSet object. Like a Kubernetes Deployment, a StatefulSet manages pods that are based on an identical container spec. Unlike a Deployment, a StatefulSet maintains a sticky identity for each of its pods. For more details on StatefulSets, refer to the Kubernetes documentation.

A StatefulSet in Kubernetes requires a headless service to provide network identity to the pods it creates. The following command and the spec will help you create a headless service for your Cassandra installation.

$ cat > px-cassandra-svc.yaml << EOF
apiVersion: v1
kind: Service
metadata:
  labels:
    app: cassandra
  name: cassandra
spec:
  clusterIP: None
  ports:
  - port: 9042
  selector:
    app: cassandra
EOF

$ kubectl create -f px-cassandra-svc.yaml
service "cassandra" created
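To make the idea of a network identity concrete, here is a minimal Python sketch of the DNS name that each StatefulSet pod receives behind a headless service. It assumes the default namespace and the default cluster domain cluster.local; the function name stateful_pod_dns is made up for this illustration.

```python
def stateful_pod_dns(statefulset, service, ordinal,
                     namespace="default", cluster_domain="cluster.local"):
    """Build the stable DNS name of one StatefulSet pod.

    Pods are named <statefulset>-<ordinal>, and a headless service
    publishes each one as <pod>.<service>.<namespace>.svc.<cluster-domain>.
    The namespace and cluster domain defaults are assumptions.
    """
    if ordinal < 0:
        raise ValueError("ordinal must be non-negative")
    pod = f"{statefulset}-{ordinal}"
    return f"{pod}.{service}.{namespace}.svc.{cluster_domain}"


# Names for three replicas of a StatefulSet called "cassandra"
# behind the headless service "cassandra".
for i in range(3):
    print(stateful_pod_dns("cassandra", "cassandra", i))
```

The first name printed has the same form as the seed address passed to Cassandra through CASSANDRA_SEEDS in the StatefulSet spec.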

Now, let’s go ahead and create a StatefulSet running a Cassandra cluster based on the spec below.

$ cat > px-cassandra-app.yaml << EOF
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: cassandra
spec:
  selector:
    matchLabels:
      app: cassandra
  serviceName: cassandra
  replicas: 3
  template:
    metadata:
      labels:
        app: cassandra
    spec:
      schedulerName: stork
      containers:
      - name: cassandra
        image: cassandra:3
        ports:
        - containerPort: 7000
          name: intra-node
        - containerPort: 7001
          name: tls-intra-node
        - containerPort: 7199
          name: jmx
        - containerPort: 9042
          name: cql
        env:
        - name: CASSANDRA_SEEDS
          value: cassandra-0.cassandra.default.svc.cluster.local
        - name: MAX_HEAP_SIZE
          value: 512M
        # The young generation must be smaller than MAX_HEAP_SIZE
        - name: HEAP_NEWSIZE
          value: 100M
        - name: CASSANDRA_CLUSTER_NAME
          value: "Cassandra"
        - name: CASSANDRA_DC
          value: "DC1"
        - name: CASSANDRA_RACK
          value: "Rack1"
        - name: CASSANDRA_AUTO_BOOTSTRAP
          value: "false"
        - name: CASSANDRA_ENDPOINT_SNITCH
          value: GossipingPropertyFileSnitch
        volumeMounts:
        - name: cassandra-data
          mountPath: /var/lib/cassandra
  volumeClaimTemplates:
  - metadata:
      name: cassandra-data
      annotations:
        volume.beta.kubernetes.io/storage-class: px-storageclass
      labels:
        app: cassandra
    spec:
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 1Gi
EOF

$ kubectl apply -f px-cassandra-app.yaml
statefulset.apps "cassandra" created

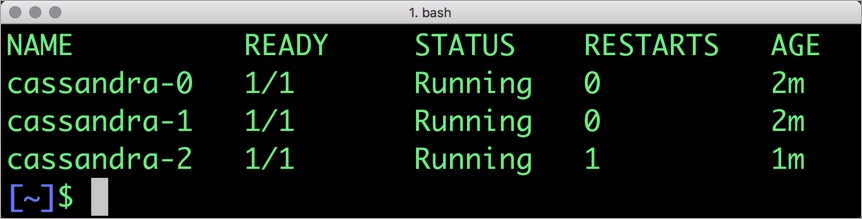

Verify that all the pods are in the Running state before proceeding further.

$ kubectl get statefulset
NAME        DESIRED   CURRENT   AGE
cassandra   3         2         45s

$ kubectl get pods
NAME          READY   STATUS    RESTARTS   AGE
cassandra-0   1/1     Running   0          2m
cassandra-1   1/1     Running   0          1m
cassandra-2   1/1     Running   1          43s
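If you would rather script this check than read the table, the following Python sketch shows one possible way to do it. It assumes the input is the parsed JSON that `kubectl get pods -o json` prints; the function name pods_not_ready and the sample data are invented for illustration.

```python
def pods_not_ready(pod_list):
    """Return the names of pods that are not Running with all containers ready.

    `pod_list` is the parsed JSON printed by `kubectl get pods -o json`.
    """
    waiting = []
    for pod in pod_list.get("items", []):
        status = pod.get("status", {})
        containers = status.get("containerStatuses", [])
        # A pod with no reported container statuses is treated as not ready.
        ready = bool(containers) and all(c.get("ready") for c in containers)
        if status.get("phase") != "Running" or not ready:
            waiting.append(pod["metadata"]["name"])
    return waiting


# Hand-written sample in the shape of the kubectl JSON output (not real cluster data).
sample = {
    "items": [
        {"metadata": {"name": "cassandra-0"},
         "status": {"phase": "Running", "containerStatuses": [{"ready": True}]}},
        {"metadata": {"name": "cassandra-1"},
         "status": {"phase": "Pending"}},
    ]
}
print(pods_not_ready(sample))  # ['cassandra-1']
```

Against a live cluster, the same function could be fed with json.load(sys.stdin) while piping in the output of kubectl get pods -l app=cassandra -o json. An empty list means it is safe to proceed.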

Let’s also check if persistent volume claims are bound to the volumes.

$ kubectl get pvc
NAME                         STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS      AGE
cassandra-data-cassandra-0   Bound    pvc-c79d0e58-b1b6-11e8-8c6b-027b3f29e3d8   1Gi        RWO            px-storageclass   4m
cassandra-data-cassandra-1   Bound    pvc-e173fdf6-b1b6-11e8-8c6b-027b3f29e3d8   1Gi        RWO            px-storageclass   3m
cassandra-data-cassandra-2   Bound    pvc-fa515912-b1b6-11e8-8c6b-027b3f29e3d8   1Gi        RWO            px-storageclass   2m

Notice the naming convention followed by Kubernetes for the pods and volume claims. The ordinal index appended to each name (0, 1, 2) shows which volume claim belongs to which pod.
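The convention can be written down in a few lines of Python. This is only a sketch of the naming scheme visible in the output above; the helper name pod_and_claim_names is hypothetical.

```python
def pod_and_claim_names(statefulset, claim_template, replicas):
    """Pair each StatefulSet pod with the volume claim created for it.

    Pods are named <statefulset>-<n>, and claims are named
    <claim-template>-<statefulset>-<n>, with n counting up from 0.
    """
    pairs = []
    for ordinal in range(replicas):
        pod = f"{statefulset}-{ordinal}"
        claim = f"{claim_template}-{pod}"
        pairs.append((pod, claim))
    return pairs


for pod, claim in pod_and_claim_names("cassandra", "cassandra-data", 3):
    print(f"{pod} -> {claim}")
```

Because the claim name is derived from the pod name, a pod that is rescheduled under the same name reattaches to the same claim and therefore to the same data.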

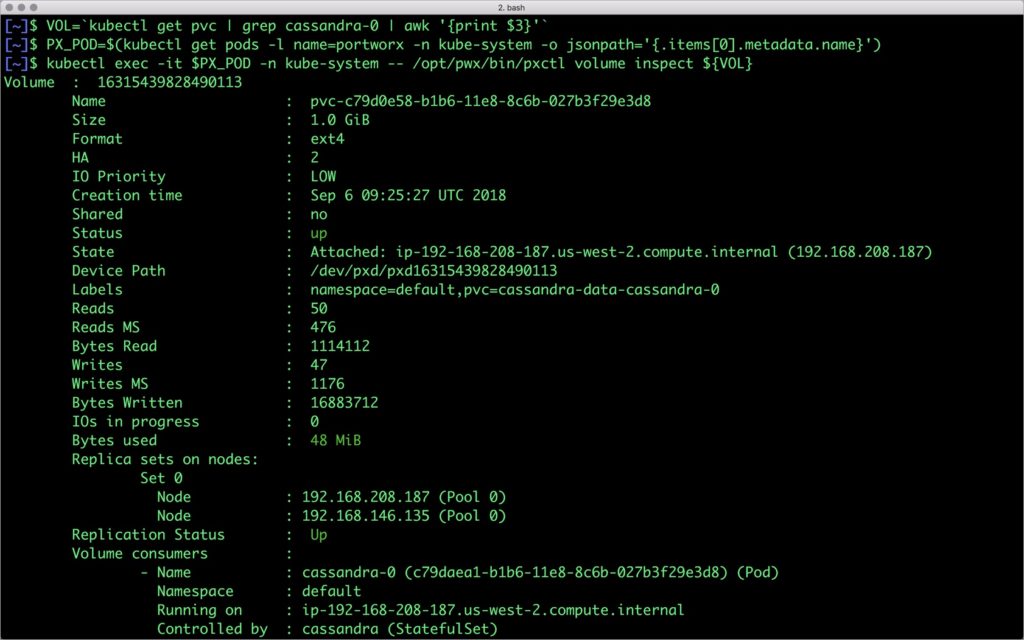

We can now inspect the Portworx volume associated with one of the Cassandra pods by accessing the pxctl tool.

$ VOL=`kubectl get pvc | grep cassandra-0 | awk '{print $3}'`
$ PX_POD=$(kubectl get pods -l name=portworx -n kube-system -o jsonpath='{.items[0].metadata.name}')
$ kubectl exec -it $PX_POD -n kube-system -- /opt/pwx/bin/pxctl volume inspect ${VOL}
Volume : 16315439828490113
    Name               : pvc-c79d0e58-b1b6-11e8-8c6b-027b3f29e3d8
    Size               : 1.0 GiB
    Format             : ext4
    HA                 : 2
    IO Priority        : LOW
    Creation time      : Sep 6 09:25:27 UTC 2018
    Shared             : no
    Status             : up
    State              : Attached: ip-192-168-208-187.us-west-2.compute.internal (192.168.208.187)
    Device Path        : /dev/pxd/pxd16315439828490113
    Labels             : namespace=default,pvc=cassandra-data-cassandra-0
    Reads              : 50
    Reads MS           : 476
    Bytes Read         : 1114112
    Writes             : 47
    Writes MS          : 1176
    Bytes Written      : 16883712
    IOs in progress    : 0
    Bytes used         : 48 MiB
    Replica sets on nodes:
        Set 0
          Node         : 192.168.208.187 (Pool 0)
          Node         : 192.168.146.135 (Pool 0)
    Replication Status : Up
    Volume consumers   :
        - Name          : cassandra-0 (c79daea1-b1b6-11e8-8c6b-027b3f29e3d8) (Pod)
          Namespace     : default
          Running on    : ip-192-168-208-187.us-west-2.compute.internal
          Controlled by : cassandra (StatefulSet)

The output from the above command confirms the creation of volumes that are backing Cassandra nodes.

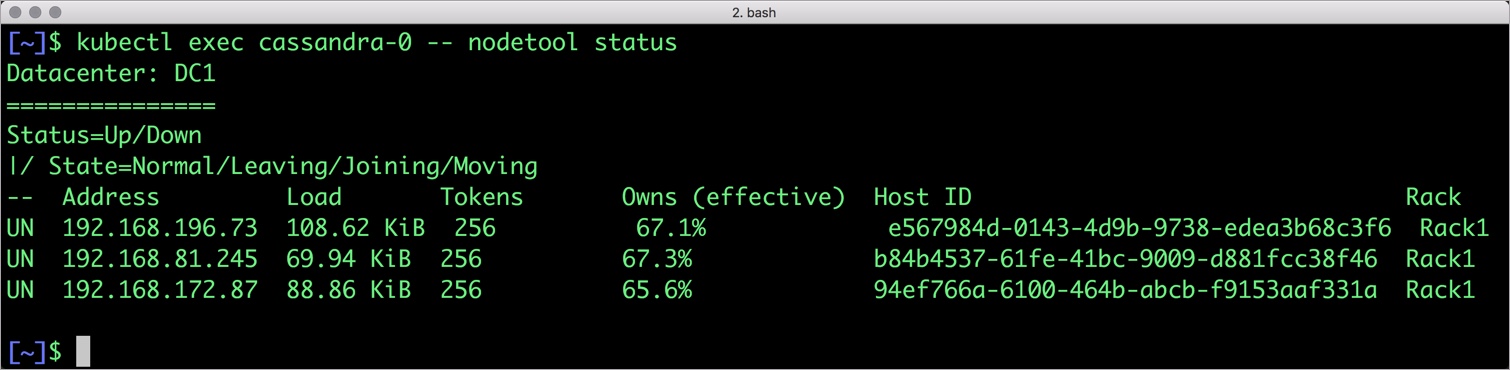

We can also use Cassandra’s nodetool to check the status of the cluster.

$ kubectl exec cassandra-0 -- nodetool status
Datacenter: DC1
===============
Status=Up/Down
|/ State=Normal/Leaving/Joining/Moving
--  Address         Load        Tokens  Owns (effective)  Host ID                               Rack
UN  192.168.196.73  108.62 KiB  256     67.1%             e567984d-0143-4d9b-9738-edea3b68c3f6  Rack1
UN  192.168.81.245  69.94 KiB   256     67.3%             b84b4537-61fe-41bc-9009-d881fcc38f46  Rack1
UN  192.168.172.87  88.86 KiB   256     65.6%             94ef766a-6100-464b-abcb-f9153aaf331a  Rack1
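The two-letter code at the start of each row is the part worth watching: the first letter is the status (U for up, D for down) and the second is the state (N, L, J, or M). As a rough sketch, a script could check that every node reports UN. The parsing below assumes the column layout shown above, and the function names are illustrative only.

```python
def parse_nodetool_status(text):
    """Extract (code, address) pairs from `nodetool status` output.

    Node rows start with a two-letter code: U/D for up/down followed by
    N/L/J/M for normal/leaving/joining/moving. Other lines are ignored.
    """
    nodes = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 2:
            continue
        code = fields[0]
        if len(code) == 2 and code[0] in "UD" and code[1] in "NLJM":
            nodes.append((code, fields[1]))
    return nodes


def cluster_healthy(text, expected_nodes):
    """True when exactly `expected_nodes` rows are present and all are UN."""
    nodes = parse_nodetool_status(text)
    return len(nodes) == expected_nodes and all(code == "UN" for code, _ in nodes)


sample = """\
Datacenter: DC1
===============
Status=Up/Down
|/ State=Normal/Leaving/Joining/Moving
--  Address         Load        Tokens  Owns (effective)  Host ID                               Rack
UN  192.168.196.73  108.62 KiB  256     67.1%             e567984d-0143-4d9b-9738-edea3b68c3f6  Rack1
UN  192.168.81.245  69.94 KiB   256     67.3%             b84b4537-61fe-41bc-9009-d881fcc38f46  Rack1
UN  192.168.172.87  88.86 KiB   256     65.6%             94ef766a-6100-464b-abcb-f9153aaf331a  Rack1
"""
print(cluster_healthy(sample, expected_nodes=3))  # True
```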

To get the pods and hosts associated with the Cassandra cluster, run the below command:

$ kubectl get pods -l app=cassandra -o json | jq '.items[] | {"name": .metadata.name,"hostname": .spec.nodeName, "hostIP": .status.hostIP, "PodIP": .status.podIP}'
{
  "name": "cassandra-0",
  "hostname": "ip-192-168-208-187.us-west-2.compute.internal",
  "hostIP": "192.168.208.187",
  "PodIP": "192.168.196.73"
}
{
  "name": "cassandra-1",
  "hostname": "ip-192-168-146-135.us-west-2.compute.internal",
  "hostIP": "192.168.146.135",
  "PodIP": "192.168.172.87"
}
{
  "name": "cassandra-2",
  "hostname": "ip-192-168-99-43.us-west-2.compute.internal",
  "hostIP": "192.168.99.43",
  "PodIP": "192.168.81.245"
}

Failing over Cassandra pod on Kubernetes

Populating sample data

Let’s populate the database with some sample data by accessing the first node of the Cassandra cluster. We will do this by invoking the Cassandra shell, cqlsh, in that pod.

$ kubectl exec -it cassandra-0 -- cqlsh
Connected to Cassandra at 127.0.0.1:9042.
[cqlsh 5.0.1 | Cassandra 3.11.3 | CQL spec 3.4.4 | Native protocol v4]
Use HELP for help.
cqlsh>

Now that we are inside the shell, we can create a keyspace and populate it.

CREATE KEYSPACE classicmodels WITH REPLICATION = { 'class' : 'SimpleStrategy', 'replication_factor' : 3 };
CONSISTENCY QUORUM;
Consistency level set to QUORUM.
use classicmodels;
CREATE TABLE offices (officeCode text PRIMARY KEY, city text, phone text, addressLine1 text, addressLine2 text, state text, country text, postalCode text, territory text);
INSERT into offices(officeCode, city, phone, addressLine1, addressLine2, state, country ,postalCode, territory) values
  ('1','San Francisco','+1 650 219 4782','100 Market Street','Suite 300','CA','USA','94080','NA');
INSERT into offices(officeCode, city, phone, addressLine1, addressLine2, state, country ,postalCode, territory) values
  ('2','Boston','+1 215 837 0825','1550 Court Place','Suite 102','MA','USA','02107','NA');
INSERT into offices(officeCode, city, phone, addressLine1, addressLine2, state, country ,postalCode, territory) values
  ('3','NYC','+1 212 555 3000','523 East 53rd Street','apt. 5A','NY','USA','10022','NA');
INSERT into offices(officeCode, city, phone, addressLine1, addressLine2, state, country ,postalCode, territory) values
  ('4','Paris','+33 14 723 4404','43 Rue Jouffroy abbans', NULL ,NULL,'France','75017','EMEA');
INSERT into offices(officeCode, city, phone, addressLine1, addressLine2, state, country ,postalCode, territory) values
  ('5','Tokyo','+81 33 224 5000','4-1 Kioicho',NULL,'Chiyoda-Ku','Japan','102-8578','Japan');
INSERT into offices(officeCode, city, phone, addressLine1, addressLine2, state, country ,postalCode, territory) values
  ('6','Sydney','+61 2 9264 2451','5-11 Wentworth Avenue','Floor #2',NULL,'Australia','NSW 2010','APAC');
INSERT into offices(officeCode, city, phone, addressLine1, addressLine2, state, country ,postalCode, territory) values
  ('7','London','+44 20 7877 2041','25 Old Broad Street','Level 7',NULL,'UK','EC2N 1HN','EMEA');
INSERT into offices(officeCode, city, phone, addressLine1, addressLine2, state, country ,postalCode, territory) values
  ('8','Mumbai','+91 22 8765434','BKC','Building 2',NULL,'MH','400051','APAC');

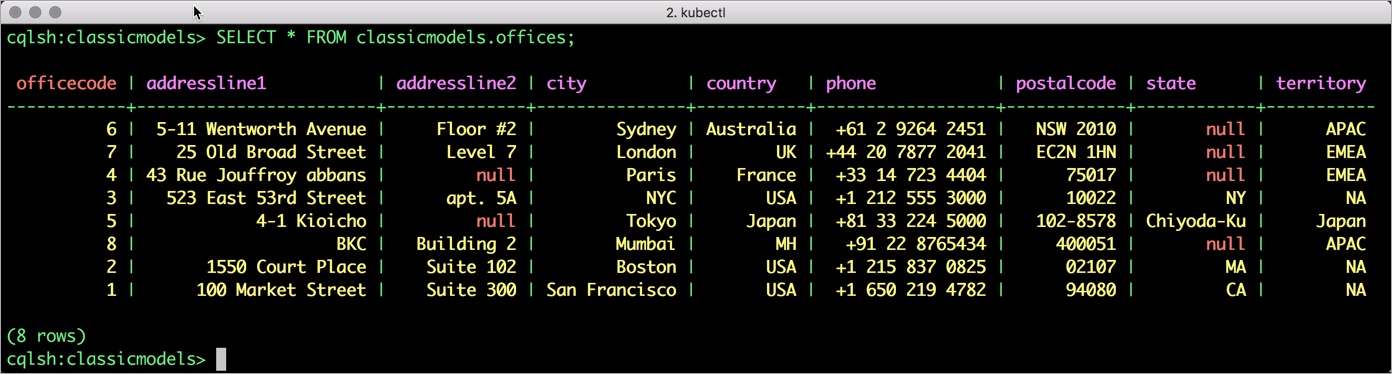

Let’s verify that the data is populated.

SELECT * FROM classicmodels.offices;

 officecode | addressline1           | addressline2 | city          | country   | phone            | postalcode | state      | territory
------------+------------------------+--------------+---------------+-----------+------------------+------------+------------+-----------
          6 |  5-11 Wentworth Avenue |     Floor #2 |        Sydney | Australia |  +61 2 9264 2451 |   NSW 2010 |       null |      APAC
          7 |    25 Old Broad Street |      Level 7 |        London |        UK | +44 20 7877 2041 |   EC2N 1HN |       null |      EMEA
          4 | 43 Rue Jouffroy abbans |         null |         Paris |    France |  +33 14 723 4404 |      75017 |       null |      EMEA
          3 |   523 East 53rd Street |      apt. 5A |           NYC |       USA |  +1 212 555 3000 |      10022 |         NY |        NA
          5 |            4-1 Kioicho |         null |         Tokyo |     Japan |  +81 33 224 5000 |   102-8578 | Chiyoda-Ku |     Japan
          8 |                    BKC |   Building 2 |        Mumbai |        MH |   +91 22 8765434 |     400051 |       null |      APAC
          2 |       1550 Court Place |    Suite 102 |        Boston |       USA |  +1 215 837 0825 |      02107 |         MA |        NA
          1 |      100 Market Street |    Suite 300 | San Francisco |       USA |  +1 650 219 4782 |      94080 |         CA |        NA

(8 rows)
cqlsh:classicmodels>

Exit from the client shell to return to the host.

You can run the select query by accessing cqlsh from any of the pods of the StatefulSet.

Run nodetool again to check the replication of the data. The below command lists the hosts that hold a replica of the row with officecode=6.

$ kubectl exec -it cassandra-0 -- nodetool getendpoints classicmodels offices 6
192.168.172.87
192.168.81.245
192.168.196.73
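With three replicas per row and the session consistency level set to QUORUM, it helps to know how many replicas must answer each request. The short Python sketch below works through the arithmetic for a single datacenter; the function names are made up for illustration.

```python
def quorum(replication_factor):
    """Replicas that must acknowledge a QUORUM read or write: floor(RF / 2) + 1."""
    if replication_factor < 1:
        raise ValueError("replication factor must be at least 1")
    return replication_factor // 2 + 1


def tolerated_replica_failures(replication_factor):
    """Replicas that can be unavailable while QUORUM requests still succeed."""
    return replication_factor - quorum(replication_factor)


rf = 3  # replication_factor of the classicmodels keyspace
print(quorum(rf), tolerated_replica_failures(rf))  # 2 1
```

In other words, with a replication factor of 3 a QUORUM request needs two replicas, so the keyspace stays readable and writable while one Cassandra pod is being rescheduled.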

Simulating node failure

Let’s get the node name where the first pod of Cassandra StatefulSet is running.

$ NODE=`kubectl get pods cassandra-0 -o json | jq -r .spec.nodeName`

Now, let’s simulate the node failure by cordoning off the Kubernetes node.

$ kubectl cordon ${NODE}
node/ip-172-31-29-132.ap-south-1.compute.internal cordoned

The above command disabled scheduling on one of the nodes.

$ kubectl get nodes
NAME                                           STATUS                     ROLES               AGE   VERSION
ip-172-31-24-121.ap-south-1.compute.internal   Ready                      worker              47h   v1.13.4
ip-172-31-26-49.ap-south-1.compute.internal    Ready                      controlplane,etcd   47h   v1.13.4
ip-172-31-28-65.ap-south-1.compute.internal    Ready                      worker              47h   v1.13.4
ip-172-31-29-132.ap-south-1.compute.internal   Ready,SchedulingDisabled   worker              47h   v1.13.4
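Under the hood, cordoning sets spec.unschedulable on the node object, which is what the SchedulingDisabled status reflects. As a small sketch, the Python below lists the nodes that still accept new pods, given the parsed JSON from `kubectl get nodes -o json`. The function name and the sample node names are invented for illustration, and taints are not taken into account.

```python
def schedulable_nodes(node_list):
    """Names of nodes that are not cordoned.

    `node_list` is the parsed JSON from `kubectl get nodes -o json`.
    `kubectl cordon` sets `spec.unschedulable` to true on the node.
    """
    return [
        node["metadata"]["name"]
        for node in node_list.get("items", [])
        if not node.get("spec", {}).get("unschedulable", False)
    ]


# Hand-written sample in the shape of the kubectl JSON output (not real cluster data).
sample = {
    "items": [
        {"metadata": {"name": "worker-a"}, "spec": {}},
        {"metadata": {"name": "worker-b"}, "spec": {"unschedulable": True}},
    ]
}
print(schedulable_nodes(sample))  # ['worker-a']
```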

Let’s go ahead and delete the pod cassandra-0 running on the node that is cordoned off.

$ kubectl delete pod cassandra-0
pod "cassandra-0" deleted

The StatefulSet controller now recreates the pod, and because the original node is cordoned, the scheduler places it on a different node.

$ kubectl get pods
NAME          READY   STATUS              RESTARTS   AGE
cassandra-0   0/1     ContainerCreating   0          2s
cassandra-1   1/1     Running             0          54m
cassandra-2   1/1     Running             1          53m

Wait for the pod to be in Running state on the node.

$ kubectl get pods
NAME          READY   STATUS    RESTARTS   AGE
cassandra-0   1/1     Running   0          1m
cassandra-1   1/1     Running   0          54m
cassandra-2   1/1     Running   1          53m

Finally, let’s verify that the data is still available.

Verifying that the data is intact

Let’s access the data in the first pod of the StatefulSet – cassandra-0.

$ kubectl exec cassandra-0 -- cqlsh -e 'select * from classicmodels.offices'

 officecode | addressline1           | addressline2 | city          | country   | phone            | postalcode | state      | territory
------------+------------------------+--------------+---------------+-----------+------------------+------------+------------+-----------
          6 |  5-11 Wentworth Avenue |     Floor #2 |        Sydney | Australia |  +61 2 9264 2451 |   NSW 2010 |       null |      APAC
          7 |    25 Old Broad Street |      Level 7 |        London |        UK | +44 20 7877 2041 |   EC2N 1HN |       null |      EMEA
          4 | 43 Rue Jouffroy abbans |         null |         Paris |    France |  +33 14 723 4404 |      75017 |       null |      EMEA
          3 |   523 East 53rd Street |      apt. 5A |           NYC |       USA |  +1 212 555 3000 |      10022 |         NY |        NA
          5 |            4-1 Kioicho |         null |         Tokyo |     Japan |  +81 33 224 5000 |   102-8578 | Chiyoda-Ku |     Japan
          8 |                    BKC |   Building 2 |        Mumbai |        MH |   +91 22 8765434 |     400051 |       null |      APAC
          2 |       1550 Court Place |    Suite 102 |        Boston |       USA |  +1 215 837 0825 |      02107 |         MA |        NA
          1 |      100 Market Street |    Suite 300 | San Francisco |       USA |  +1 650 219 4782 |      94080 |         CA |        NA

(8 rows)

Observe that the data is still there and all the content is intact! We can also run nodetool again to see that the rescheduled pod has rejoined the Cassandra cluster.

$ kubectl exec cassandra-1 -- nodetool status
Datacenter: DC1
===============
Status=Up/Down
|/ State=Normal/Leaving/Joining/Moving
--  Address          Load        Tokens  Owns (effective)  Host ID                               Rack
UN  192.168.148.159  100.44 KiB  256     100.0%            fd1610c8-7745-49eb-b801-983cde4e1b85  Rack1
UN  192.168.81.245   186.62 KiB  256     100.0%            b84b4537-61fe-41bc-9009-d881fcc38f46  Rack1
UN  192.168.172.87   196.54 KiB  256     100.0%            94ef766a-6100-464b-abcb-f9153aaf331a  Rack1

Capturing Application Consistent Snapshots to Restore Data

Portworx enables storage admins to perform backup and restore operations through snapshots. 3DSnap is a feature to capture consistent snapshots from multiple nodes of a database cluster. This is highly recommended when running a multi-node Cassandra cluster as a Kubernetes StatefulSet. 3DSnap will create a snapshot from each of the nodes in the cluster, which ensures that the state is accurately captured from the distributed cluster.

3DSnap allows administrators to execute commands just before taking the snapshot and right after completing the task of taking a snapshot. These triggers will ensure that the data is fully committed to the disk before the snapshot. Similarly, it is possible to run a workload-specific command to refresh or force sync immediately after restoring the snapshot.

This section will walk you through the steps involved in creating and restoring a 3DSnap for the Cassandra StatefulSet.

Creating a 3DSnap

It’s a good idea to flush the data to disk before initiating the snapshot creation. This is defined through a Rule, a custom resource whose definition (CRD) is installed by Stork.

$ cat > px-cassandra-rule.yaml << EOF
apiVersion: stork.libopenstorage.org/v1alpha1
kind: Rule
metadata:
  name: px-cassandra-rule
spec:
  - podSelector:
      app: cassandra
    actions:
    - type: command
      value: nodetool flush
EOF

Create the rule from the above YAML file.

$ kubectl create -f px-cassandra-rule.yaml
rule.stork.libopenstorage.org "px-cassandra-rule" created

We will now initiate a 3DSnap task to back up all the PVCs associated with the Cassandra pods belonging to the StatefulSet.

$ cat > px-cassandra-snap.yaml << EOF
apiVersion: volumesnapshot.external-storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  name: cassandra-3d-snapshot
  annotations:
    portworx.selector/app: cassandra
    stork.rule/pre-snapshot: px-cassandra-rule
spec:
  persistentVolumeClaimName: cassandra-data-cassandra-0
EOF

$ kubectl create -f px-cassandra-snap.yaml
volumesnapshot.volumesnapshot.external-storage.k8s.io "cassandra-3d-snapshot" created

Let’s now verify that the snapshot creation is successful.

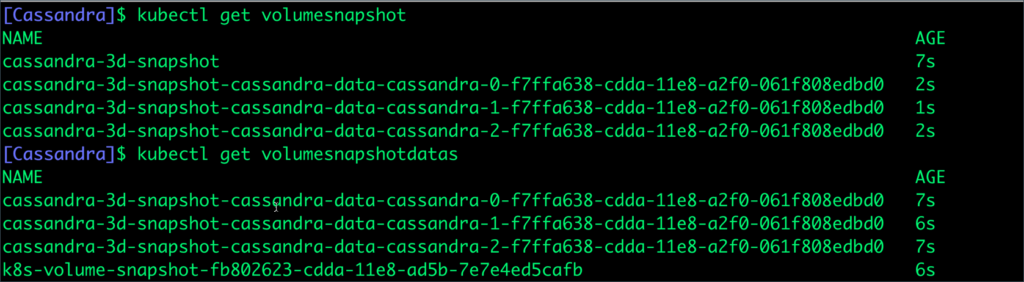

$ kubectl get volumesnapshot
NAME                                                                                    AGE
cassandra-3d-snapshot                                                                   7s
cassandra-3d-snapshot-cassandra-data-cassandra-0-f7ffa638-cdda-11e8-a2f0-061f808edbd0   2s
cassandra-3d-snapshot-cassandra-data-cassandra-1-f7ffa638-cdda-11e8-a2f0-061f808edbd0   1s
cassandra-3d-snapshot-cassandra-data-cassandra-2-f7ffa638-cdda-11e8-a2f0-061f808edbd0   2s

$ kubectl get volumesnapshotdatas
NAME                                                                                    AGE
cassandra-3d-snapshot-cassandra-data-cassandra-0-f7ffa638-cdda-11e8-a2f0-061f808edbd0   7s
cassandra-3d-snapshot-cassandra-data-cassandra-1-f7ffa638-cdda-11e8-a2f0-061f808edbd0   6s
cassandra-3d-snapshot-cassandra-data-cassandra-2-f7ffa638-cdda-11e8-a2f0-061f808edbd0   7s
k8s-volume-snapshot-fb802623-cdda-11e8-ad5b-7e7e4ed5cafb                                6s

Restoring from a 3DSnap

Let’s now restore from the 3DSnap. Before that, we will simulate a database crash by deleting the StatefulSet and the associated PVCs.

$ kubectl delete sts cassandra
statefulset.apps "cassandra" deleted

$ kubectl delete pvc -l app=cassandra
persistentvolumeclaim "cassandra-data-cassandra-0" deleted
persistentvolumeclaim "cassandra-data-cassandra-1" deleted
persistentvolumeclaim "cassandra-data-cassandra-2" deleted

Now our Kubernetes cluster has no database running. Let’s go ahead and restore the data from the snapshot before relaunching Cassandra StatefulSet.

We will now create three Persistent Volume Claims (PVCs) from the existing 3DSnap with exactly the same claim names that the StatefulSet expects. When the pods are created as part of the StatefulSet, they bind to these existing PVCs, which are already populated with the data restored from the snapshots.

Let’s create three PVCs from the 3DSnap snapshots. Notice how the annotation points to the snapshot in each PVC manifest.

$ cat > px-cassandra-pvc-0.yaml << EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cassandra-data-cassandra-0
  annotations:
    snapshot.alpha.kubernetes.io/snapshot: "cassandra-3d-snapshot-cassandra-data-cassandra-0-f7ffa638-cdda-11e8-a2f0-061f808edbd0"
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: stork-snapshot-sc
  resources:
    requests:
      storage: 5Gi
EOF

$ cat > px-cassandra-pvc-1.yaml << EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cassandra-data-cassandra-1
  annotations:
    snapshot.alpha.kubernetes.io/snapshot: "cassandra-3d-snapshot-cassandra-data-cassandra-1-f7ffa638-cdda-11e8-a2f0-061f808edbd0"
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: stork-snapshot-sc
  resources:
    requests:
      storage: 5Gi
EOF

$ cat > px-cassandra-pvc-2.yaml << EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cassandra-data-cassandra-2
  annotations:
    snapshot.alpha.kubernetes.io/snapshot: "cassandra-3d-snapshot-cassandra-data-cassandra-2-f7ffa638-cdda-11e8-a2f0-061f808edbd0"
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: stork-snapshot-sc
  resources:
    requests:
      storage: 5Gi
EOF
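The three manifests differ only in the pod ordinal, so they could also be generated instead of written by hand. The Python sketch below is one way to do that. It assumes the snapshot names follow the pattern seen in the kubectl output earlier (snapshot name, claim name, then a generated suffix); the suffix changes with every snapshot run and must be copied from your own `kubectl get volumesnapshot` output. The function name restore_pvc is hypothetical.

```python
import json

SNAPSHOT_ANNOTATION = "snapshot.alpha.kubernetes.io/snapshot"


def restore_pvc(ordinal, snapshot_suffix, size="5Gi",
                statefulset="cassandra", claim_template="cassandra-data",
                snapshot_group="cassandra-3d-snapshot"):
    """Build the PVC manifest that restores one pod's volume from its snapshot."""
    claim = f"{claim_template}-{statefulset}-{ordinal}"
    # Assumed naming pattern: <snapshot-group>-<claim>-<suffix>.
    snapshot = f"{snapshot_group}-{claim}-{snapshot_suffix}"
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {
            "name": claim,
            "annotations": {SNAPSHOT_ANNOTATION: snapshot},
        },
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": "stork-snapshot-sc",
            "resources": {"requests": {"storage": size}},
        },
    }


# Suffix taken from the sample output in this post; yours will differ.
suffix = "f7ffa638-cdda-11e8-a2f0-061f808edbd0"
manifests = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [restore_pvc(i, suffix) for i in range(3)],
}
print(json.dumps(manifests, indent=2))
```

kubectl accepts JSON as well as YAML, so the printed list could be saved to a file and passed to kubectl create -f in place of the three separate files. Generating the claims this way keeps each claim name and its snapshot annotation on the same ordinal.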

Create the PVCs from the above definitions.

$ kubectl create -f px-cassandra-pvc-0.yaml
persistentvolumeclaim "cassandra-data-cassandra-0" created
$ kubectl create -f px-cassandra-pvc-1.yaml
persistentvolumeclaim "cassandra-data-cassandra-1" created
$ kubectl create -f px-cassandra-pvc-2.yaml
persistentvolumeclaim "cassandra-data-cassandra-2" created

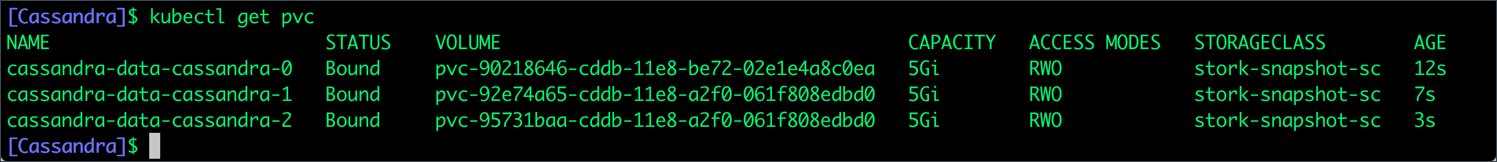

Verify that the new PVCs are ready and bound.

$ kubectl get pvc
NAME                         STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS        AGE
cassandra-data-cassandra-0   Bound    pvc-90218646-cddb-11e8-be72-02e1e4a8c0ea   5Gi        RWO            stork-snapshot-sc   12s
cassandra-data-cassandra-1   Bound    pvc-92e74a65-cddb-11e8-a2f0-061f808edbd0   5Gi        RWO            stork-snapshot-sc   7s
cassandra-data-cassandra-2   Bound    pvc-95731baa-cddb-11e8-a2f0-061f808edbd0   5Gi        RWO            stork-snapshot-sc   3s

With the PVCs in place, we are ready to launch the statefulset with no changes to the YAML file. Everything remains exactly the same while the data is already restored from the snapshots.

$ kubectl create -f px-cassandra-app.yaml
statefulset.apps "cassandra" created

Check the data through cqlsh from one of the Cassandra pods.

$ kubectl exec cassandra-0 -- cqlsh -e 'select * from classicmodels.offices'

 officecode | addressline1           | addressline2 | city          | country   | phone            | postalcode | state      | territory
------------+------------------------+--------------+---------------+-----------+------------------+------------+------------+-----------
          6 |  5-11 Wentworth Avenue |     Floor #2 |        Sydney | Australia |  +61 2 9264 2451 |   NSW 2010 |       null |      APAC
          7 |    25 Old Broad Street |      Level 7 |        London |        UK | +44 20 7877 2041 |   EC2N 1HN |       null |      EMEA
          4 | 43 Rue Jouffroy abbans |         null |         Paris |    France |  +33 14 723 4404 |      75017 |       null |      EMEA
          3 |   523 East 53rd Street |      apt. 5A |           NYC |       USA |  +1 212 555 3000 |      10022 |         NY |        NA
          5 |            4-1 Kioicho |         null |         Tokyo |     Japan |  +81 33 224 5000 |   102-8578 | Chiyoda-Ku |     Japan
          8 |                    BKC |   Building 2 |        Mumbai |        MH |   +91 22 8765434 |     400051 |       null |      APAC
          2 |       1550 Court Place |    Suite 102 |        Boston |       USA |  +1 215 837 0825 |      02107 |         MA |        NA
          1 |      100 Market Street |    Suite 300 | San Francisco |       USA |  +1 650 219 4782 |      94080 |         CA |        NA

(8 rows)

Congratulations! You have successfully restored an application consistent snapshot for Cassandra.

Summary

Portworx can be easily deployed with RKE to run stateful workloads in production on Kubernetes. Through the integration of STORK, DevOps and StorageOps teams can seamlessly run highly available database clusters in Kubernetes. They can perform traditional operations such as volume expansion, snapshots, backup and recovery for the cloud native applications.

Janakiram MSV

Contributor | Certified Kubernetes Administrator (CKA) and Developer (CKAD)