Generative AI needs substantial compute power and efficient data management, so it depends on robust infrastructure to operate effectively. Kubernetes has emerged as the preferred orchestrator for these complex AI workloads because of its scalability, fault tolerance, and multi-tenant capabilities.

Portworx, with its advanced storage solutions, plays a crucial role in enhancing the performance and reliability of generative AI applications on Kubernetes. This article examines how Portworx can support various components of the generative AI stack.

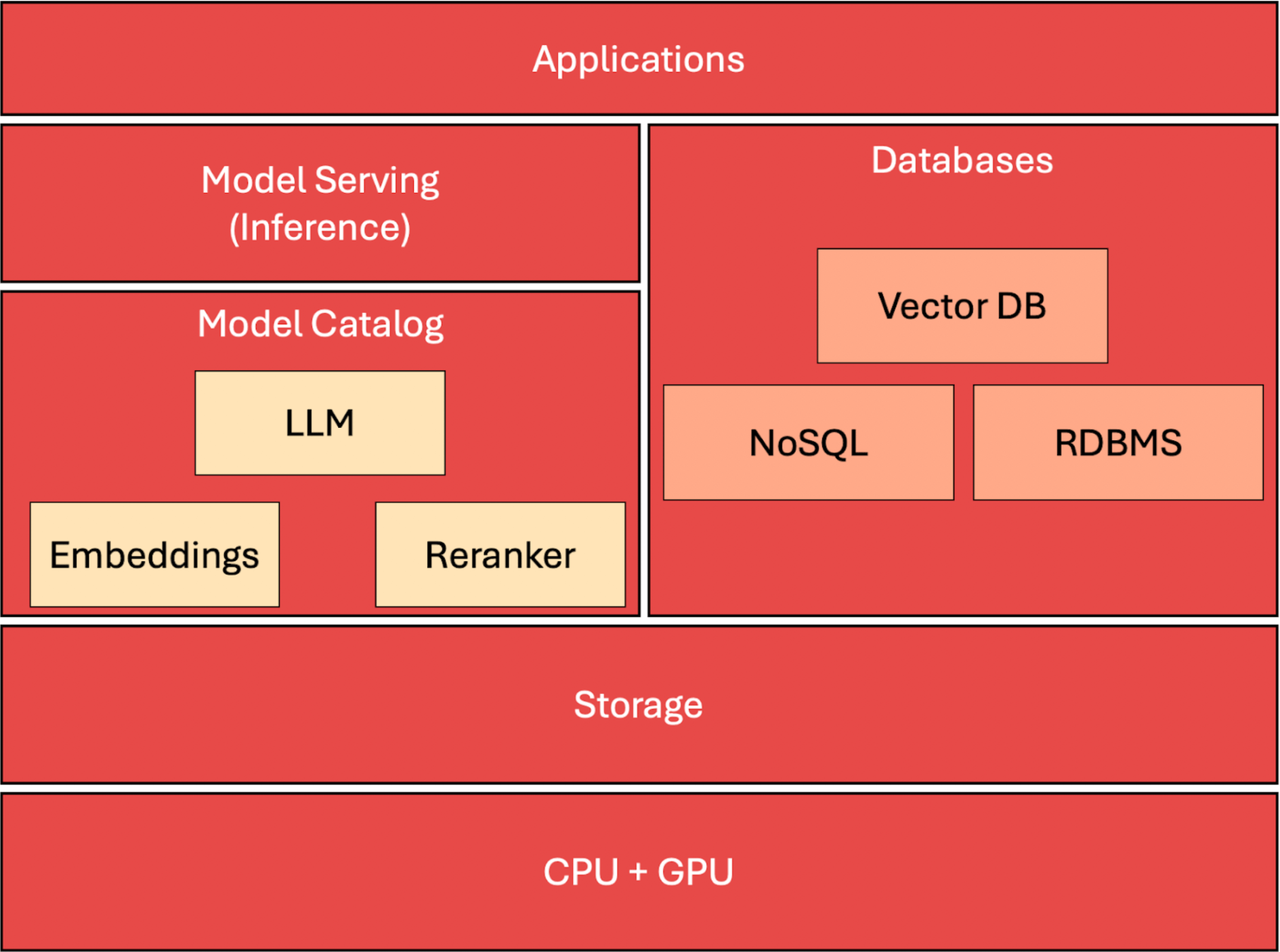

The Generative AI Stack

In the previous article, we introduced the inference stack for generative AI applications. For a quick recap, the stack consists of various building blocks deployed as microservices in Kubernetes.

Accelerated Compute

Generative AI applications demand powerful compute resources, often high-end CPUs and GPUs. Portworx keeps the data these compute-intensive tasks need readily available, which minimizes latency and maximizes throughput. Its high-performance storage is optimized for NVMe and SSD media, giving the accelerated compute layer fast access to large datasets and models. Data locality features reduce access time by keeping data close to the compute resources that use it, and dynamic provisioning lets storage scale quickly as compute needs change.
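To make dynamic provisioning concrete, here is a minimal sketch that builds a Kubernetes StorageClass manifest in Python and prints it as JSON, which `kubectl apply -f` accepts. The class name `px-genai-fast` is hypothetical. The provisioner name `pxd.portworx.com` and the `repl` and `priority_io` parameters are assumptions based on the Portworx CSI driver, so check them against the Portworx version you run.

```python
import json


def portworx_storage_class(name: str, replicas: int = 2, io_priority: str = "high") -> dict:
    """Build a StorageClass manifest for dynamically provisioned volumes."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        # Assumption: Portworx CSI provisioner name; verify for your install.
        "provisioner": "pxd.portworx.com",
        # StorageClass parameters must be strings.
        "parameters": {
            "repl": str(replicas),        # assumed: number of volume replicas
            "priority_io": io_priority,   # assumed: prefer the fastest storage pool
        },
        # Lets a bound PVC be resized later without recreating it.
        "allowVolumeExpansion": True,
    }


if __name__ == "__main__":
    # "px-genai-fast" is a hypothetical name used only for illustration.
    print(json.dumps(portworx_storage_class("px-genai-fast"), indent=2))
```

Any PersistentVolumeClaim that names this class in `storageClassName` would then get a volume created on demand, with no administrator pre-creating volumes.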

Cloud-Native Storage Platform

The storage layer manages the large volumes of data required to train and operate AI models. Portworx offers a cloud-native storage solution that provides high availability, scalability, and disaster recovery, all of which are essential for maintaining data integrity and performance. With multi-cloud and hybrid cloud support, Portworx enables data mobility across environments. The platform also provides snapshots and backups for data protection and quick recovery, along with persistent volume management that supports dynamic provisioning and scaling.
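As an illustration of snapshot-based protection, the sketch below builds a manifest for the standard Kubernetes CSI `VolumeSnapshot` resource. The snapshot, claim, and class names are hypothetical, and it assumes the cluster has the CSI snapshot controller and a matching `VolumeSnapshotClass` installed. Portworx also offers its own snapshot and backup tooling, which is not shown here.

```python
import json


def volume_snapshot(name: str, pvc_name: str, snapshot_class: str,
                    namespace: str = "default") -> dict:
    """Build a CSI VolumeSnapshot manifest for an existing PVC."""
    return {
        "apiVersion": "snapshot.storage.k8s.io/v1",
        "kind": "VolumeSnapshot",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Must match a VolumeSnapshotClass that exists in the cluster.
            "volumeSnapshotClassName": snapshot_class,
            # The claim whose current contents are captured.
            "source": {"persistentVolumeClaimName": pvc_name},
        },
    }


if __name__ == "__main__":
    # All three names are hypothetical placeholders.
    manifest = volume_snapshot("training-data-snap", "training-data", "px-snapclass")
    print(json.dumps(manifest, indent=2))
```

A new PVC can later be restored from the snapshot by referencing it in the claim's `dataSource` field.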

Model Catalog

A model catalog centralizes the storage and management of AI models, which makes deployment and updates more efficient. Portworx’s SharedV4 volumes allow multiple pods to access the same volume concurrently, streamlining the model deployment process. Persistent Volume Claims (PVCs) with the ReadWriteMany (RWX) access mode let every part of an application that needs a model mount the same volume. The high availability and disaster recovery features provided by Portworx keep models accessible and resilient against failures.
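A shared model catalog could be requested along the lines of the sketch below, which builds a StorageClass and an RWX claim as Python dicts. The resource names and the 200Gi size are hypothetical. The `sharedv4` parameter and the `pxd.portworx.com` provisioner are assumptions about the Portworx CSI driver and should be verified against its documentation.

```python
import json


def shared_storage_class(name: str) -> dict:
    """StorageClass for volumes that many pods can mount at once."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "pxd.portworx.com",               # assumed provisioner name
        "parameters": {"repl": "2", "sharedv4": "true"},  # assumed parameter names
    }


def model_catalog_claim(name: str, storage_class: str, size: str = "200Gi") -> dict:
    """PVC with ReadWriteMany so every serving pod can mount the catalog."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteMany"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    # Hypothetical names; a kind "List" bundles both objects into one document.
    bundle = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [
            shared_storage_class("px-shared-models"),
            model_catalog_claim("model-catalog", "px-shared-models"),
        ],
    }
    print(json.dumps(bundle, indent=2))
```

With the claim bound, a job that downloads or fine-tunes a model can write into the volume while serving pods on other nodes read from it.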

Model Serving

Serving large language models (LLMs) and other AI models requires a scalable and reliable infrastructure. Portworx provides the storage performance and reliability these models need, enabling efficient inference and low-latency responses. Integration with GPU nodes optimizes performance, while auto-scaling storage based on usage patterns and demand keeps resource utilization efficient. Model-serving pods backed by Portworx volumes can also use Kubernetes-native features like ConfigMaps and Secrets to manage configuration and sensitive data, which improves the overall efficiency and security of model serving.
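The pieces described above might come together in a Deployment like the one sketched here. It mounts a model volume read-only, loads settings from a ConfigMap and a Secret, and requests one GPU. The image, claim, ConfigMap, and Secret names are hypothetical, and the `nvidia.com/gpu` resource assumes the NVIDIA device plugin is installed on the GPU nodes.

```python
import json


def model_server_deployment(name: str, image: str, model_claim: str,
                            config_map: str, secret: str, replicas: int = 2) -> dict:
    """Build a Deployment for an inference server that reads shared models."""
    labels = {"app": name}
    container = {
        "name": "server",
        "image": image,
        # Every key in the ConfigMap and Secret becomes an environment variable.
        "envFrom": [
            {"configMapRef": {"name": config_map}},
            {"secretRef": {"name": secret}},
        ],
        # Assumes the NVIDIA device plugin exposes this resource name.
        "resources": {"limits": {"nvidia.com/gpu": 1}},
        "volumeMounts": [{"name": "models", "mountPath": "/models", "readOnly": True}],
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [container],
                    "volumes": [{
                        "name": "models",
                        "persistentVolumeClaim": {"claimName": model_claim, "readOnly": True},
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    # All names below are hypothetical placeholders.
    manifest = model_server_deployment(
        "llm-server", "registry.example.com/llm-server:1.0",
        "model-catalog", "llm-config", "llm-credentials",
    )
    print(json.dumps(manifest, indent=2))
```

Running more than one replica across nodes requires the model claim to support shared access such as RWX.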

Databases

Databases are integral to AI workloads, storing both structured and unstructured data. Portworx enhances database performance and reliability through its advanced storage capabilities. Optimized storage classes cater to various types of databases, including relational, NoSQL, and vector databases. Running databases as Kubernetes StatefulSets on Portworx volumes gives each replica stable persistent storage and a consistent network identity. Additionally, Portworx’s built-in features for replication, backups, and automatic failover keep databases reliable and protect data against loss or corruption.
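The StatefulSet pattern can be sketched as follows. Each replica gets its own PersistentVolumeClaim from `volumeClaimTemplates`, provisioned through whatever StorageClass is named. The image, mount path, and class name are hypothetical, and a real database would also need a headless Service matching `serviceName` plus its own configuration.

```python
import json


def database_stateful_set(name: str, image: str, storage_class: str,
                          size: str = "50Gi", replicas: int = 3) -> dict:
    """Build a StatefulSet whose pods each own a dedicated volume."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": name},
        "spec": {
            # Headless Service that gives pods stable DNS names (not created here).
            "serviceName": name,
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": "db",
                        "image": image,
                        # Hypothetical data directory for the database.
                        "volumeMounts": [{"name": "data", "mountPath": "/var/lib/data"}],
                    }],
                },
            },
            # One PVC per pod, created from this template.
            "volumeClaimTemplates": [{
                "metadata": {"name": "data"},
                "spec": {
                    "accessModes": ["ReadWriteOnce"],
                    "storageClassName": storage_class,
                    "resources": {"requests": {"storage": size}},
                },
            }],
        },
    }


if __name__ == "__main__":
    # Hypothetical image and StorageClass names.
    manifest = database_stateful_set(
        "vector-db", "registry.example.com/vector-db:1.0", "px-db",
    )
    print(json.dumps(manifest, indent=2))
```

Kubernetes names the pods `vector-db-0`, `vector-db-1`, and so on, and binds each to a claim such as `data-vector-db-0`, so a rescheduled pod reattaches to the same data.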

App Orchestration Layer

The app orchestration layer, based on frameworks like LangChain or LlamaIndex, requires reliable and scalable storage to handle interactions between users, AI models, and data stores. The services in this layer are largely stateless, and Portworx helps them scale efficiently and access data quickly. By providing persistent storage for caching frequently used responses, Portworx lets new replicas reuse cached results, which keeps scaling and workload management smooth. Kubernetes security controls, including Network Policies and Role-Based Access Control (RBAC), further protect these services and keep their operation secure.
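One way to picture the response cache is a small file-backed store that lives on a mounted volume, sketched below with only the Python standard library. The `ResponseCache` class and the `/cache` mount path are hypothetical and not part of LangChain, LlamaIndex, or Portworx. A production cache would also need expiry and safer handling of concurrent writers.

```python
import hashlib
import json
from pathlib import Path
from typing import Optional


class ResponseCache:
    """Store LLM responses as JSON files keyed by a hash of the prompt."""

    def __init__(self, root: str) -> None:
        # root would be the mount path of a persistent volume, e.g. /cache.
        self.root = Path(root)
        self.root.mkdir(parents=True, exist_ok=True)

    def _path(self, prompt: str) -> Path:
        digest = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
        return self.root / f"{digest}.json"

    def get(self, prompt: str) -> Optional[str]:
        """Return the cached response, or None on a cache miss."""
        path = self._path(prompt)
        if not path.exists():
            return None
        return json.loads(path.read_text(encoding="utf-8"))["response"]

    def put(self, prompt: str, response: str) -> None:
        """Write to a temp file, then rename, so readers never see partial data."""
        path = self._path(prompt)
        tmp = path.with_suffix(".tmp")
        tmp.write_text(json.dumps({"prompt": prompt, "response": response}),
                       encoding="utf-8")
        tmp.replace(path)


def answer(prompt: str, cache: ResponseCache, call_model) -> str:
    """Serve from the cache when possible; otherwise call the model and store."""
    cached = cache.get(prompt)
    if cached is not None:
        return cached
    response = call_model(prompt)
    cache.put(prompt, response)
    return response
```

Because the files sit on a persistent volume rather than in pod memory, a restarted or newly added replica that mounts the same volume sees entries written earlier.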

Summary

Kubernetes provides a powerful platform for deploying and managing generative AI applications, and Portworx enhances this ecosystem with its advanced storage solutions. By offering high-performance, scalable, and reliable storage, Portworx ensures that generative AI workloads run efficiently and effectively.

The next article will dive deeper into the specific use cases and benefits of using Portworx to run generative AI workloads, further optimizing AI applications on Kubernetes.

Janakiram

Janakiram is an industry analyst, strategic advisor, and a practicing architect.