Today’s Architect’s Corner is with Federico Nusymowicz, Director of Software at Software Motor Company (SMC). SMC is one of those companies that you probably haven’t heard of yet but that will revolutionize the way you live. They have invented a radically more efficient electric motor that will save enormous amounts of energy globally. Federico likens an SMC motor to an LED. In this interview, we learn about what makes an SMC motor different, and how they are using Kubernetes to build the software powering the software motor.

Can you tell me a little about your company?

Software Motor Company (SMC) was founded in 2013 to bring the benefits of the Internet of Things (IoT) to electric motors. Our mission is to develop and commercialize ultra-high performance and low-cost electric motors to optimize energy savings and the overall performance of the systems they drive.

In short, we patented, developed and are delivering the next generation of electric motors. We started marketing this device as the “LED of motors” because that framing helps explain what makes our motor unique.

Take the LED as an example. LEDs are basically the peak of energy efficiency when it comes to lighting. Now take electric motors, an industry that has seen little innovation over the past 40 years. In today’s market, you have what are called induction motors. These motors drive the air conditioning in buildings, golf carts, condensers in refrigerators, you name it. Pretty much anything that consumes electricity and turns it into mechanical energy today uses an induction motor.

These motors are very inefficient in terms of energy consumption. And most of them run at a fixed speed, which means they’re consuming 100% electricity even when they only need 20% to generate the right amount of torque. Some variable frequency drive (VFD) motors manage to consume electricity more intelligently, but the components involved in VFDs are expensive, inefficient, and often break. This forces buyers to choose amongst energy efficiency, upfront cost, reliability, and ongoing maintenance cost.

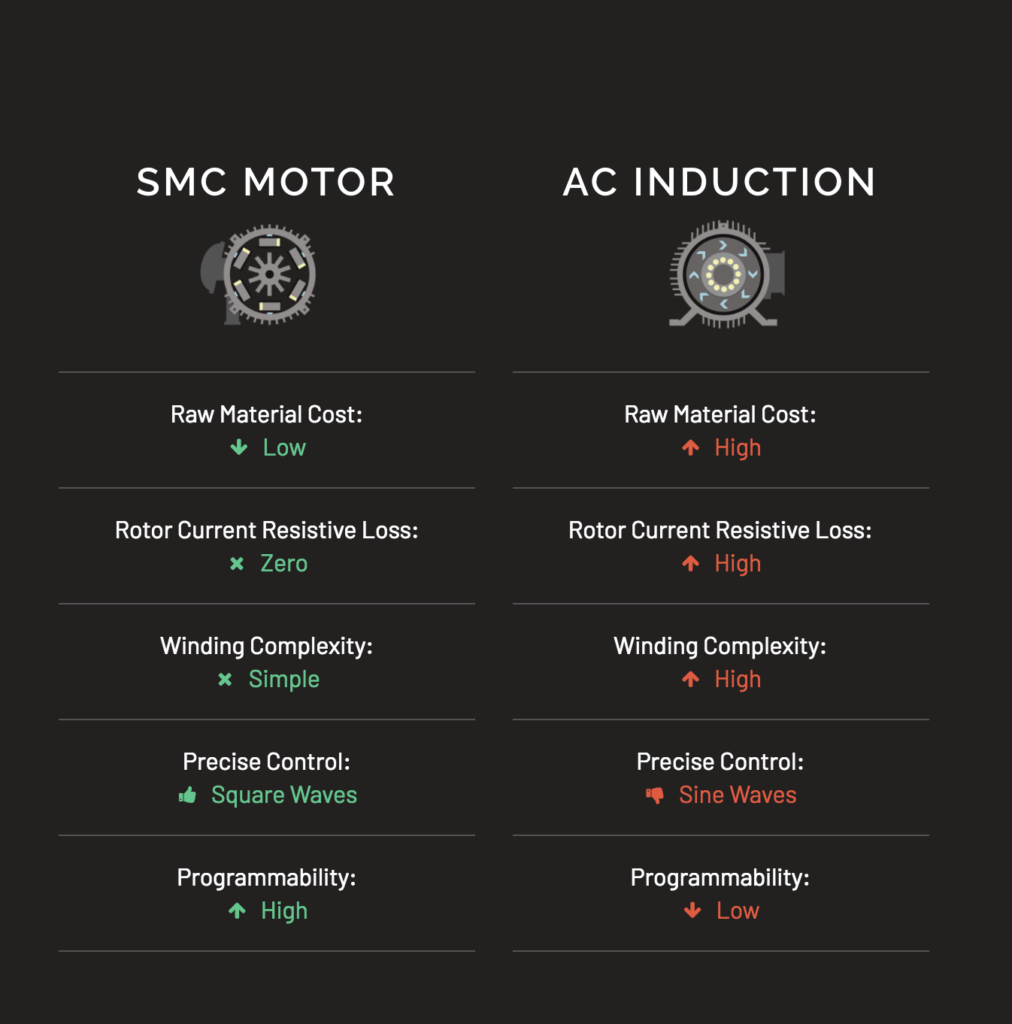

The SMC motor solves each of these problems and delivers multiple related benefits. First, our motor turns more electricity into mechanical energy, the way an LED converts more electrical energy into light. Just as important, our motor intelligently consumes the exact amount of energy required to drive connected equipment. Our motor is also more reliable than induction motors – the basic design that has been used in mines and nuclear plants for decades.

Image from https://softwaremotor.com/technology/

Our motor is more efficient at a fundamental level due to its core architecture but also because of the intelligent controls attached to it. And because our motor is connected to the cloud, we have full data on its operation. We can, therefore, predict motor or equipment failure before it ever happens. For a number of industry sectors, this is critical. Consider, for example, a pharmaceutical facility that needs to keep room temperature and air pressure within a very specific range. Our motors are capable of doing this much more effectively than any other alternative currently on the market.

Another key feature of our system is digital twins that we developed for our motors and connected equipment. We use these models to run simulations that allow us to streamline design, manufacturing, and operation. This allows us to optimize performance, improve reliability, and reduce cost, all at the same time. SMC is creating the first motor ever to ride the tailwinds of Moore’s law.

Can you tell us a little bit about your role at the company?

I’m the Director of Software at SMC. I joined SMC late last year, which is right when the company began focusing on commercialization.

The motor itself has been an academic research project for well over 10 years. It only became commercially viable about four years ago when power electronics, the components that modulate electricity flow into the motor itself, came into their own. Because of wind turbines and self-driving cars, power electronics have become a lot cheaper and more efficient. So while the hardware was ready for commercialization years before, SMC had to tune the power electronics, reduce noise and size, and make it possible to replace existing induction motors by doing simple retrofits.

Now that our motors are being used across numerous applications, we’ve started building out the software team responsible for cloud infrastructure, analytics, simulations, and a number of other initiatives. That’s what I’m responsible for – making sure we deliver on each of these projects.

Can you talk a little bit about how you’re using containers generally?

Right now we’re using containers as the packaging mechanism for our microservices. These containers are deployed and managed by the Kubernetes scheduler.

Currently, we run Kubernetes on AWS. But we chose Kubernetes very specifically because of portability.

How does Kubernetes help with application portability?

We expect some of our future customers will want to keep operating data in their own hands, depending on their industry and specific needs.

I think the majority of our first wave of customers will want to run on our public-hosted cloud, which is the most cost-effective option, and also highly secure.

As we start serving Fortune 500 customers, a number of them will have varying reasons why they want to take our entire cloud back-end and run it in their own data center or in a virtual private cloud.

What were some of the challenges that you needed to overcome in order to run databases in containers?

The first challenge was service availability. It’s pretty common that a node will go down for any number of reasons, and we don’t want to lose service when this happens. We can’t afford to be losing data from motors because that is critical information.

Back to the pharmaceutical example, if there’s some indicator that pressure is destabilizing at a customer site, we want to be able to quickly react to that. If our data stores are down, we cannot take the right actions, and if we don’t react, the pharmaceutical company might have to throw out a multimillion-dollar batch of drugs that they’re creating. High availability, therefore, is the first and foremost requirement.

After high availability, the next requirement was the durability of system data. There are any number of reasons data can get corrupted. You can address durability fairly well with backups and replication – which some databases like Cassandra provide out of the box – but replication becomes more difficult when you’re writing your own stateful applications.

That was another pretty cool thing about Portworx; replication works out of the box regardless of which data service sits on top.

You run on Amazon. Did you look at using EBS for Kubernetes storage to solve the availability and persistence issues?

Yes, we’re running on EBS for now, but not directly. We run Portworx on top of EBS. Disaster recovery is always a goal of critical systems, and if we wanted to do disaster recovery in an automated way with EBS alone, we would have to figure out a way to reliably detach the volume from the failing node and then reattach it to a different node. That gets messy since EBS volumes end up getting stuck. Also, the pure EBS strategy doesn’t work across availability zones.

If we have a simple application pumping data into one EBS volume, great. EBS failures are rare and likely this would work fine. But when you operate at scale, you have to plan for failure. And that’s what I like about Portworx. You can replicate all your data to other volumes automatically, so even if one volume fails, it’s no big deal because you have that data in two other places. And the replication can actually happen across availability zones as well.
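To make the replication idea concrete, here is a minimal Python sketch of a toy store that keeps three copies of every write, one per availability zone, and keeps serving reads after one copy is lost. It is an illustration only, not Portworx's implementation or API: the `Volume` and `ReplicatedStore` classes, the zone names, and the `motor-42/rpm` key are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Volume:
    """One storage volume living in a single availability zone (toy model)."""
    zone: str
    healthy: bool = True
    blocks: dict = field(default_factory=dict)


class ReplicatedStore:
    """Writes each block to every healthy replica; reads from any healthy one."""

    def __init__(self, zones):
        # One replica per zone, so a zone outage removes at most one copy.
        self.replicas = [Volume(zone) for zone in zones]

    def write(self, key, value):
        # Copy the block to every replica that is currently healthy.
        for volume in self.replicas:
            if volume.healthy:
                volume.blocks[key] = value

    def read(self, key):
        # Skip failed replicas and return the first healthy copy found.
        for volume in self.replicas:
            if volume.healthy and key in volume.blocks:
                return volume.blocks[key]
        raise KeyError(key)


# Hypothetical zone names and key, chosen only for the example.
store = ReplicatedStore(["us-east-1a", "us-east-1b", "us-east-1c"])
store.write("motor-42/rpm", 1750)
store.replicas[0].healthy = False  # simulate losing one volume
print(store.read("motor-42/rpm"))  # still readable from another zone
```

With three copies, losing any single volume or zone still leaves two readable replicas, which is the "two other places" described above. A real system also has to re-sync a replica that comes back after a failure, which this sketch leaves out.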

Which stateful services are you running on Kubernetes?

Right now we use Kafka and that’s pretty much it. We also store data in Parquet format and are evaluating the major SQL-on-Hadoop engines for OLAP. We evaluated Cassandra for time series, but are seeing more success with our Druid POC. We might go back to Cassandra in the future.

Above all, we need to retain the flexibility to use whatever tool is right for the job. And that’s one of the nicest things about Portworx. It fits with anything, regardless of what data services we might choose in the future.

What storage options for Kubernetes did you evaluate?

We compared various things before settling on Portworx. We looked at NFS, GlusterFS, and Infinit.sh. We did a paper comparison of a larger list and then did testing with a couple that looked particularly promising. One of the things that is really important to us is time, and setting up those other systems was very time-consuming.

What advice would you give someone thinking about running stateful services on Kubernetes?

I would start by asking why they’re thinking about using microservices and containers. If you don’t have complex requirements and don’t need to keep control over your data, there are some great hosted data services that you can use.

Assuming the person has good reason to run containers, such as the reasons I gave about privacy, control, and portability, I would urge them to think very carefully about what data storage to use, where they are going to run these services, how the data gets backed up, and how to keep services highly available and protected.

This is what I liked about Portworx. Kubernetes with Portworx is probably the most flexible solution to run just about any data service imaginable with fairly minimal effort. If you’re willing to invest a small amount of time figuring out how to run data services correctly on Kubernetes, Portworx is the best choice that I’ve found in the market today.
